Generate test cases with AI

Test Companion’s AI can write test cases for you, which speeds up test creation. It provides two primary agents for creating new test cases: one reads a document you provide (such as a PRD), and the other explores a URL you give it.

Generate from requirements

This method is ideal when you have a specification, PRD (Product Requirements Document), or user story. The AI will read the document and generate a complete list of relevant test cases.

It is recommended to use the Test Case Generator agent for this task.

-

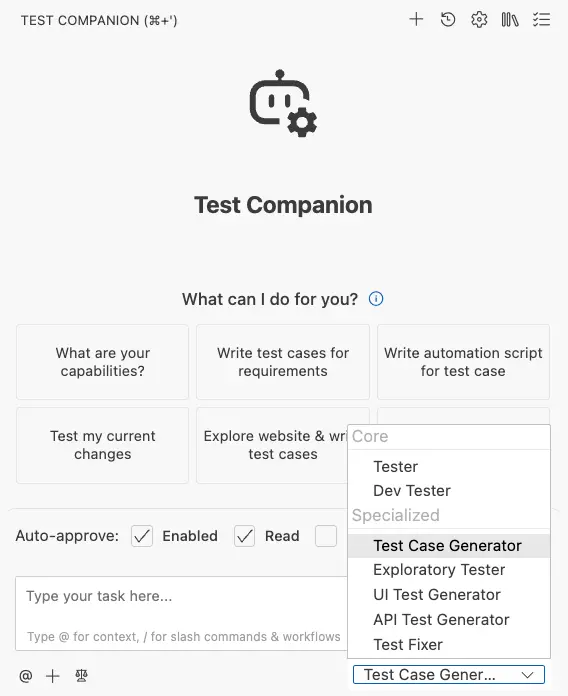

In the Test Companion panel, click the agent dropdown at the bottom.

- Select

Test Case Generator. - In the chat box, provide the AI with context. You can combine multiple methods:

-

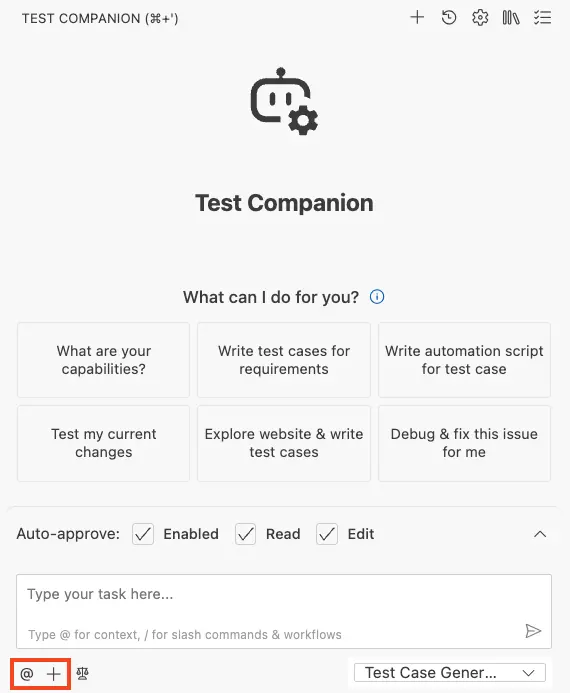

Attach a file: Click the

+(Add Files & Images) icon to attach your main PRD, spec, or image file. -

Add Workspace context: Type

@to add specific files, folders, git commits, or terminal output from your local project. - Paste text: Provide a clear prompt or directly paste your requirements or user story into the chat.

-

Attach a file: Click the

- Send the message.

The agent reads and analyzes the document, then adds the newly generated scenarios and tests to the test case list in the panel, each complete with a description, steps, and a priority.
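
When reviewing generated output, it can help to have a mental model of what one test case contains. The sketch below is only an illustration built from the three attributes named above (description, steps, priority); the class name, field names, priority values, and the `review_issues` helper are all assumptions, not Test Companion's actual schema or API.

```python
from dataclasses import dataclass, field
from enum import Enum


class Priority(Enum):
    # Hypothetical priority levels; the tool's actual values may differ.
    HIGH = "high"
    MEDIUM = "medium"
    LOW = "low"


@dataclass
class GeneratedTestCase:
    """Illustrative stand-in for one generated test case (not the tool's schema)."""
    title: str
    description: str
    steps: list[str] = field(default_factory=list)
    priority: Priority = Priority.MEDIUM


def review_issues(case: GeneratedTestCase) -> list[str]:
    """Return human-readable problems to fix before accepting a test case."""
    issues = []
    if not case.description.strip():
        issues.append("missing description")
    if not case.steps:
        issues.append("no steps")
    elif any(not step.strip() for step in case.steps):
        issues.append("empty step")
    return issues


login_case = GeneratedTestCase(
    title="Login with valid credentials",
    description="A registered user can sign in from the login page.",
    steps=["Open the login page", "Enter valid credentials", "Click Sign in"],
    priority=Priority.HIGH,
)
print(review_issues(login_case))  # prints []
```

A checklist like this mirrors what you would look for by eye in the panel: every case should have a meaningful description and at least one non-empty step before you keep it.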

Generate from a website

This method is perfect when you do not have formal documentation or want to find test-coverage gaps on a live page. The AI will open a browser, explore the URL you provide, and write test cases based on its findings.

It is recommended to use the Exploratory Tester agent for this task.

-

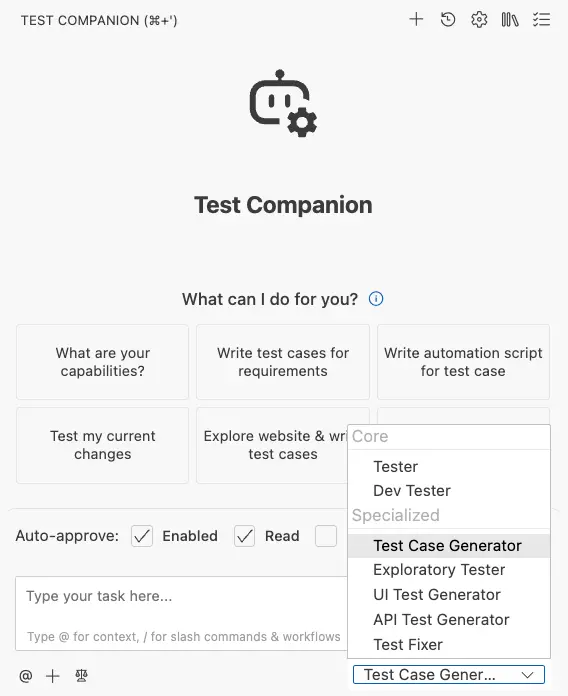

In the Test Companion panel, click the agent dropdown at the bottom.

- Select the

Exploratory Testeragent from the dropdown. - In the chat box, write a prompt telling the AI what to test and provide the URL.

(For example: Generate test cases for the authentication flow onexample.com) - Send the message.

A browser window will open as the AI explores the feature. It will also check your existing tests to identify and fill coverage gaps.

Review your new test cases

After either agent finishes, your new test cases will appear in the Test Case Management panel, ready to be reviewed, edited, and synced.

Prompting examples

The AI is flexible. You can provide high-level instructions or specific URLs.

- Example 1: From a requirement. You can write a simple prompt describing the feature you want to test.

  Write test cases for requirements

- Example 2: By exploring a URL. You can provide a URL and ask the AI to analyze it for testable features.

  Explore ostc.demo.com and write test cases for the veneer demo [bidi]
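
If you reuse the same prompt wording across many pages, a small helper can keep it consistent. This is a minimal sketch only: `build_exploration_prompt` is a hypothetical function, not part of Test Companion, and the string it returns is simply text you would paste into the chat box. The wording follows the example prompt from the website workflow.

```python
def build_exploration_prompt(url: str, feature: str) -> str:
    """Compose a one-line prompt naming the feature to test and the URL to explore."""
    url = url.strip()
    feature = feature.strip()
    if not url or not feature:
        # A prompt without both parts gives the agent too little to work with.
        raise ValueError("both a URL and a feature description are required")
    return f"Generate test cases for the {feature} on {url}"


print(build_exploration_prompt("example.com", "authentication flow"))
# prints: Generate test cases for the authentication flow on example.com
```

Naming both the feature and the URL keeps the exploration focused on one area of the site.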

Next steps

- Manage test cases: Now that you have generated tests, learn how to organize, edit, and sync them.
