The AI Evaluation Platform

AI apps break unpredictably, run up costs silently, and are painful to debug, evaluate, secure, and scale.

BrowserStack AI Evals is the all-in-one platform to build agents, trace behavior, and evaluate performance with confidence.

Trusted by more than 50,000 customers globally

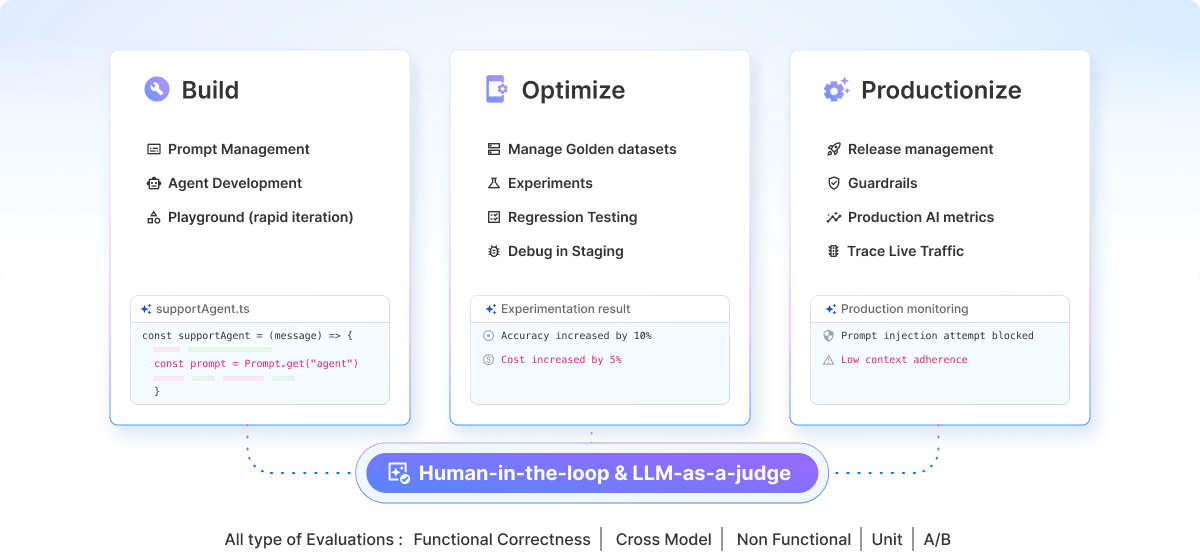

Evals across the entire AI development lifecycle

Observe, iterate, and ensure accuracy for your AI apps across every release.

Built for every role in the AI lifecycle

Testers

Comprehensively evaluate AI apps for correctness, safety, and regressions at scale.

Developers

Iterate quickly and get faster feedback to build reliable AI apps.

Product Managers

Define functional requirements (Evals) and link user feedback back to AI development.

Move from experimental to enterprise-grade AI

One platform to build agents, get visibility into their behavior, measure accuracy objectively, and enforce safety, so you can scale them with confidence, not guesswork.

Evaluations for the entire development lifecycle

- Quick iterations – the playground helps you evaluate prompts quickly.

- Comprehensive pre-release evaluation – run LLM-as-judge and human-in-the-loop evaluations.

- In-production monitoring – evaluations keep an eye on your agents.

End-to-end agent observability within minutes

- Effortless setup – our SDK integrates into your codebase with virtually no code changes.

- Comprehensive tracing – track LLM calls, knowledge lookups, tool calls and much more.

- Instant feedback – on behavior, cost, and performance.

A platform built for enterprise scale

- Scale seamlessly – run evaluations on large datasets for complete confidence.

- Deployment flexibility – from cloud to fully on-premises deployment.

- Enterprise-grade compliance – built to keep your data safe.

Seamless integrations that make life easier

We work with the tools and frameworks you use. Seamlessly connect BrowserStack with your tools for faster, simpler evaluation.