Results from closed test runs widget

The Results from closed test runs widget shows the outcomes of test cases in completed (closed) test runs. It helps you quickly spot test quality issues and gaps in test coverage.

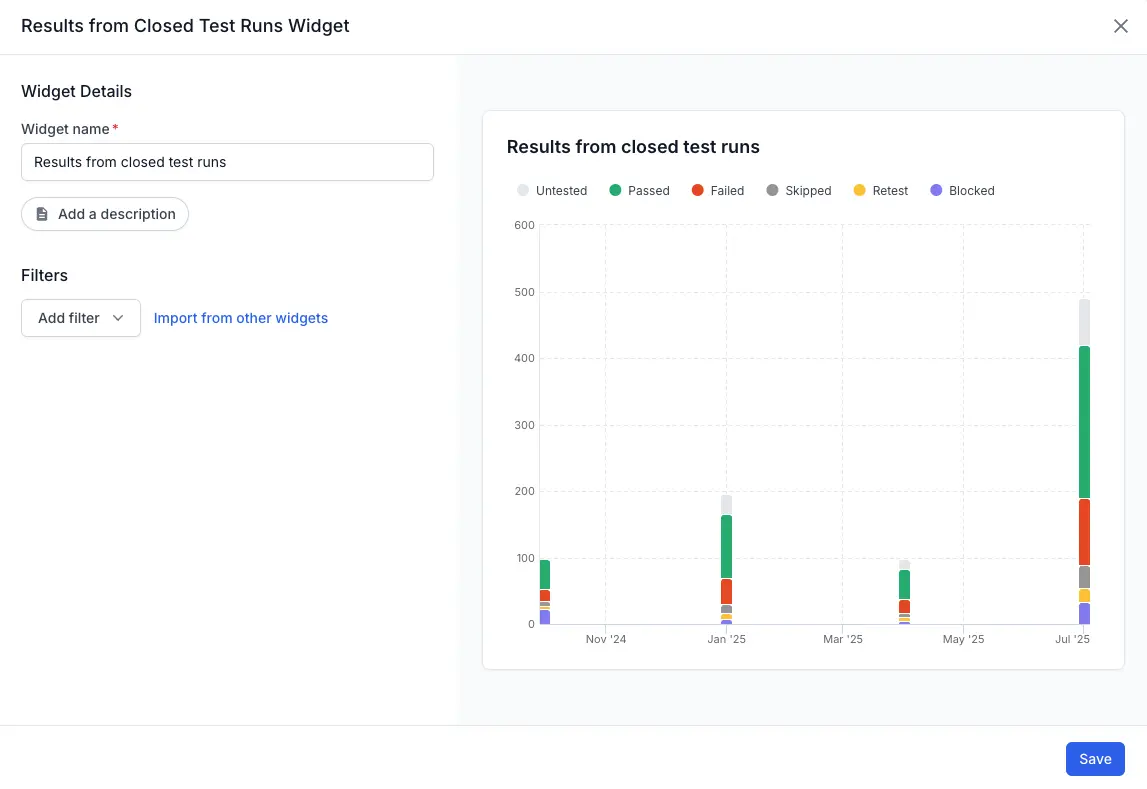

Key metric information and visualization

This widget shows how test cases ended in closed test runs, so you can monitor test quality efficiently. The stacked bar chart displays the number of test results per date, segmented by status. Comparing several months side-by-side makes it easy to see whether failures are trending up, coverage is improving, or skipped tests are creeping in.

- Horizontal Axis (X-axis): Represents the timeline.

- Vertical Axis (Y-axis): Indicates the number of test results.

- Colors: Each color represents a test result status.
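To illustrate how a stacked bar chart like this one is built, the sketch below groups test results by date and counts each status, giving one bar per date with one segment per status. The `(date, status)` record shape and the `stack_by_date` function are hypothetical; the widget's actual data model is not documented here.

```python
from collections import Counter, defaultdict
from datetime import date

# Statuses shown in the widget's legend.
STATUSES = ["Passed", "Failed", "Untested", "Skipped", "Retest", "Blocked"]


def stack_by_date(results):
    """Count results per status for each date (one stacked bar per date).

    `results` is a hypothetical list of (date, status) pairs taken from
    closed test runs.
    """
    counts = defaultdict(Counter)
    for day, status in results:
        counts[day][status] += 1
    # Dates in chronological order (X-axis); every status gets a count,
    # including zero, so each bar has the same set of segments (Y-axis).
    return {day: {s: counts[day][s] for s in STATUSES} for day in sorted(counts)}


results = [
    (date(2024, 5, 1), "Passed"),
    (date(2024, 5, 1), "Failed"),
    (date(2024, 5, 1), "Passed"),
    (date(2024, 5, 2), "Skipped"),
]
bars = stack_by_date(results)
for day, segments in bars.items():
    # The bar height is the sum of its segments.
    print(day, segments, "bar height:", sum(segments.values()))
```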

Metrics

Each color in the chart represents a test result status. Hover over a colored segment to see its exact count.

| Metric | Description |
|---|---|
| Total Test Cases | The total number of test cases in the closed test runs. |
| Passed | Tests that ran without issues. |
| Failed | Tests that failed, revealing issues that need fixing. |
| Untested | Tests that were never executed. |
| Skipped | Tests that were intentionally not run. |
| Retest | Tests marked to be run again. |
| Blocked | Tests that could not run because of other problems. |

Available filters

Apply the following filters to narrow down the results to specific test runs.

| Filter | Description |
|---|---|
| Test Run Owners | Show test runs owned by specific team members. |
| Test Run Tags | Show test runs with specific tags (labels). Choose match all to include only runs that have every selected tag, or match any to include runs that have at least one of them. |
| Test Run Types | Show only manual test runs, only automated test runs, or all types. |
| Unique Test Run Name | Show a specific test run by its name. |
| Branch | Show test runs associated with a particular code branch. |
| CI runs only | Show only test runs triggered by Continuous Integration (CI). |

To apply filters, click the Add filter dropdown and select the filters you need.
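The difference between match all and match any for the Test Run Tags filter can be expressed as set logic. The sketch below is illustrative only: `matches_tags`, the sample run names, and the tags are hypothetical, and treating an empty selection as "no filter" is an assumption rather than documented widget behavior.

```python
def matches_tags(run_tags, selected, mode="any"):
    """Return True if a run's tags satisfy the selected tag filter.

    mode="all": the run must carry every selected tag.
    mode="any": the run must carry at least one selected tag.
    """
    run_tags, selected = set(run_tags), set(selected)
    if not selected:
        return True  # assumption: no tags selected means no tag filtering
    if mode == "all":
        return selected <= run_tags  # subset check
    if mode == "any":
        return bool(selected & run_tags)  # non-empty intersection
    raise ValueError(f"unknown mode: {mode!r}")


# Hypothetical test runs and their tags.
runs = {
    "Sprint 12 regression": {"regression", "web"},
    "Smoke on release": {"smoke", "web", "mobile"},
    "Mobile nightly": {"mobile"},
}
selected = {"web", "mobile"}
print([name for name, tags in runs.items() if matches_tags(tags, selected, "all")])
print([name for name, tags in runs.items() if matches_tags(tags, selected, "any")])
```

With the same two tags selected, match all keeps only the run that carries both, while match any keeps every run carrying either one.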

Use cases and actionable insights

Reviewing this widget regularly helps you maintain high test quality by catching failures and blocked tests early so you can address them promptly.

| Insight | Action |
|---|---|
| Quickly identify periods with high test failures. | Investigate recent code or process changes causing increased failures. |
| See if many tests remain untested or skipped. | Review test planning and resource allocation to improve test execution coverage. |
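Both insights come down to comparing the share of certain statuses in each period. The sketch below flags periods where the chosen statuses exceed a threshold, for example Failed for high failures, or Untested and Skipped for coverage gaps. The `flag_periods` function, the sample counts, and the 20% and 25% thresholds are hypothetical examples, not values used by the widget. Dividing by all results in the period is one design choice; you could instead exclude Untested tests from the denominator when measuring failures.

```python
def flag_periods(bars, statuses, threshold):
    """Return (period, share) pairs where `statuses` make up more than
    `threshold` of all results in that period.

    `bars` maps a period label to per-status counts (hypothetical shape).
    """
    flagged = []
    for period, counts in bars.items():
        total = sum(counts.values())
        if total == 0:
            continue  # no results in this period; nothing to judge
        share = sum(counts.get(s, 0) for s in statuses) / total
        if share > threshold:
            flagged.append((period, round(share, 2)))
    return flagged


# Hypothetical monthly counts read off the stacked bars.
bars = {
    "2024-03": {"Passed": 90, "Failed": 5, "Skipped": 5},
    "2024-04": {"Passed": 60, "Failed": 30, "Blocked": 10},
    "2024-05": {"Passed": 50, "Untested": 30, "Skipped": 20},
}
print(flag_periods(bars, {"Failed"}, 0.20))               # high failures
print(flag_periods(bars, {"Untested", "Skipped"}, 0.25))  # coverage gaps
```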

Interpreting test data

- Frequent failures often point to recurring bugs or unstable features.

- A high number of skipped tests may indicate an unclear test strategy.

- Blocked tests highlight dependency or environment issues that need resolving.
