Test case activity report

This document explains what the test case activity report is, how to create one, and how to interpret its widgets.

A test case activity report provides a summarized view of test case activity in a project. Within a project, you can generate summary and detailed reports immediately, or schedule them to be generated at a specified date and time.

Follow these steps to create a Test case activity report:

- Go to the project where you want to create the report.

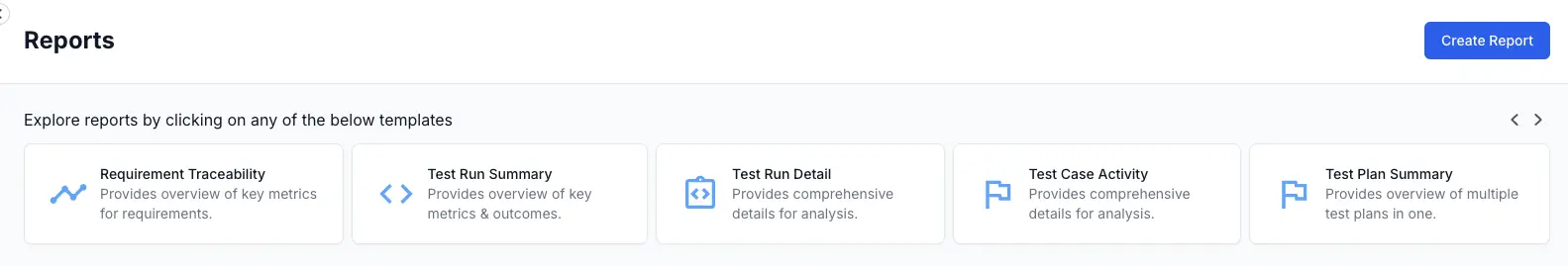

- Click Reports tab on the navigation menu.

-

Click Create Report from the reports dashboard.

A Create Report window appears.

-

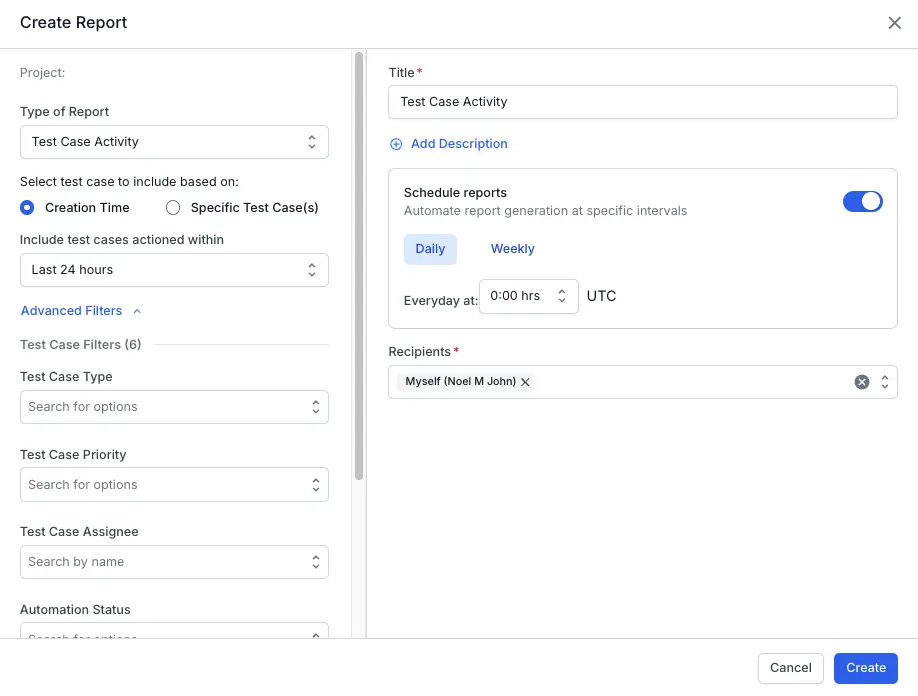

In the Create Report window, enter the following details:

- Select report type:

- In the Type of Report dropdown, select Test case activity report.

- Select test cases to include based on:

- You will see two options:

-

Creation Time:

- Choose a time range (e.g.,

Last 1 month,Last 1 week,Last 24 hours, or acustomrange). -

Advanced Filters (optional):

Expand Advanced Filters to refine which test cases are included. You can filter by:- Test Case Type: Focus on specific types (e.g., functional, UI, regression).

- Test Case Priority: Include only high, medium, or low priority cases.

- Test Case Assignee: Filter by who is assigned to the test cases.

- Automation Status:Filter test cases based on whether they are automated or manual, facilitating easy tracking and management of automated workflows.

- Created Date:Filter by the creation date of test cases. Helpful for reviewing new or recently added test cases.

- Last Updated:Filter reports based on the date the test cases were last updated. Useful for tracking recent changes and activity in your test management processes.

- Choose a time range (e.g.,

-

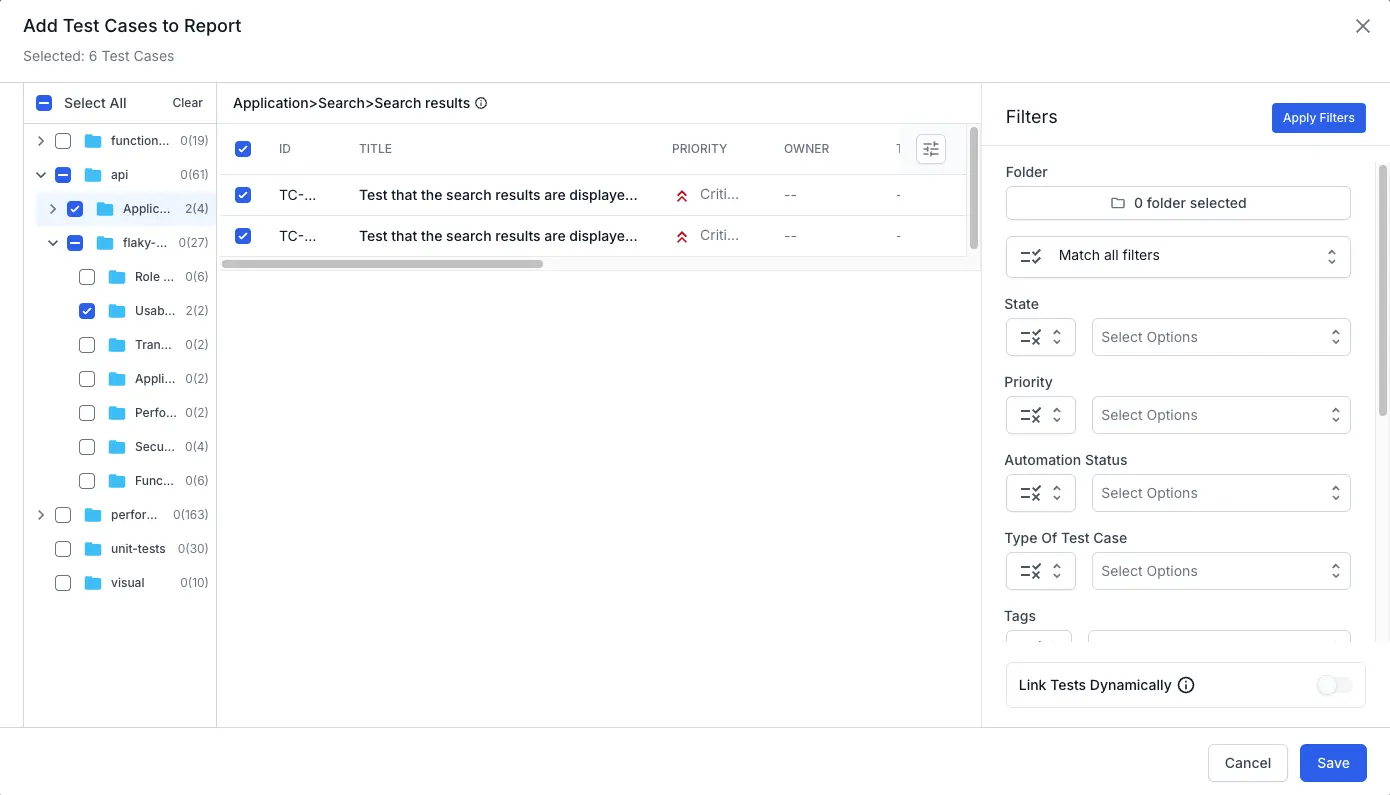

Specific Test Cases:

- Click Update and choose one or more specific test cases that you want include in the report.

-

Enable Link Tests Dynamically toggle to automatically include relevant future test cases based on filter criteria.

- Configure scheduling:

  Toggle Schedule reports on to set up automated delivery.

  - Daily: Select the time (in UTC) for the report to be generated each day.

  - Weekly: Choose the day of the week and the time (in UTC) for the report to be sent.

- Add recipients:

  In the Recipients field, enter the names or emails of the individuals who should receive the scheduled report. You can add multiple recipients.

- Title and description:

  Provide a descriptive Title for your report. Optionally, add a brief Description to provide context.

- Save your settings:

  After all fields are set, click Update or Create to schedule the report.

A “Report generated successfully” notification appears on the dashboard, and reports are sent to the specified recipients. Reports that you set up with a recurring schedule appear in the Reports dashboard.

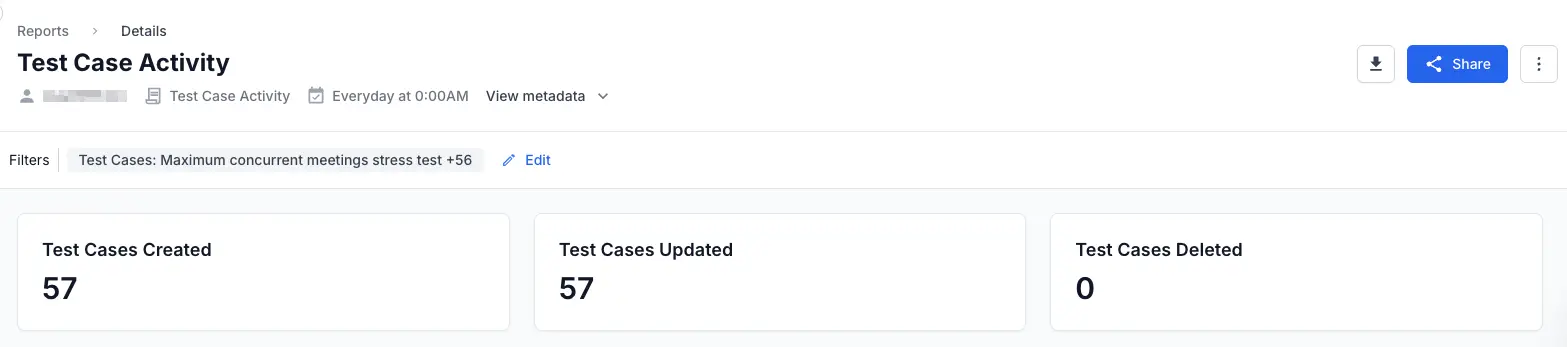
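Because the Daily and Weekly schedule times described in the steps above are entered in UTC, it can help to work out when a scheduled report would next be generated. The following is a minimal sketch of that calculation, not the product's implementation: the `next_run` function and its parameters are hypothetical names introduced only for illustration.

```python
from datetime import datetime, time, timedelta, timezone
from typing import Optional


def next_run(now: datetime, at: time, weekday: Optional[int] = None) -> datetime:
    """Return the next scheduled run as a UTC datetime (illustrative only).

    weekday=None models a Daily schedule.
    weekday=0 (Monday) through 6 (Sunday) models a Weekly schedule.
    `now` must be a timezone-aware datetime.
    """
    now = now.astimezone(timezone.utc)
    # Start from today's date at the chosen UTC time.
    candidate = datetime.combine(now.date(), at, tzinfo=timezone.utc)

    if weekday is None:
        # Daily: run today if the time is still ahead, otherwise tomorrow.
        if candidate <= now:
            candidate += timedelta(days=1)
        return candidate

    # Weekly: move forward to the chosen day of the week.
    candidate += timedelta(days=(weekday - now.weekday()) % 7)
    if candidate <= now:
        # The slot for this week has already passed, so use next week.
        candidate += timedelta(days=7)
    return candidate


# Example: it is Wednesday, 3 January 2024, 10:00 UTC.
now = datetime(2024, 1, 3, 10, 0, tzinfo=timezone.utc)
daily = next_run(now, time(9, 0))              # tomorrow at 09:00 UTC
weekly = next_run(now, time(9, 0), weekday=0)  # the following Monday at 09:00 UTC
```

To see the result in your own time zone, convert the returned value with `astimezone()`.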

Understand the test case activity report

The test case activity report displays key information using widgets. The following section details what each widget means and how to interpret the data:

Summary Metrics

At the top of the report, you see three important metrics:

- Test Cases Created: Total number of new test cases created during the selected period.

- Test Cases Updated: Total number of test cases modified during the selected period.

- Test Cases Deleted: Total number of test cases removed during the selected period.

Use these metrics to quickly measure and analyze team activity and test case maintenance.

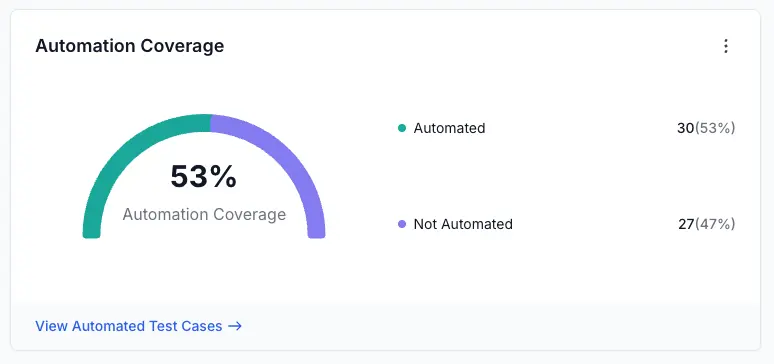

Automation coverage

This widget shows the percentage of test cases that are automated:

- Automated: Number of test cases configured for automated execution.

- Not Automated: Number of test cases still requiring manual execution.

- Automation Not Required: Test cases that do not need automation.

- Cannot Be Automated: Test cases that cannot be automated due to technical or practical reasons.

- Obsolete: Test cases that are no longer relevant or in use.

Check this regularly to track your automation progress.

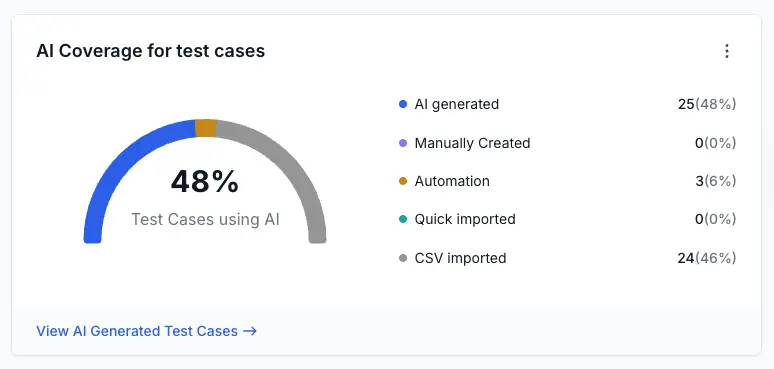
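As a rough illustration of how the percentages in this widget relate to the counts, the sketch below computes each status's share of the total. It assumes the denominator is every test case in the report across the five statuses listed above; the product may calculate coverage differently, and `automation_coverage` is a hypothetical helper, not part of any API.

```python
from collections import Counter

# The five statuses shown in the Automation coverage widget.
STATUSES = (
    "Automated",
    "Not Automated",
    "Automation Not Required",
    "Cannot Be Automated",
    "Obsolete",
)


def automation_coverage(statuses):
    """Return each status's share of all test cases, as a percentage.

    Assumption: the total is the count of test cases across all five statuses.
    """
    counts = Counter(statuses)
    total = sum(counts[s] for s in STATUSES)
    if total == 0:
        # Avoid dividing by zero when the report contains no test cases.
        return {s: 0.0 for s in STATUSES}
    return {s: round(100 * counts[s] / total, 1) for s in STATUSES}


# Example: three automated test cases and one that is not automated.
coverage = automation_coverage(["Automated"] * 3 + ["Not Automated"])
```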

AI coverage for test cases

This visual shows how test cases were created, highlighting whether AI was used:

- AI generated: Test cases created automatically using AI.

- Manually created: Test cases written manually by users.

- Automation: Test cases configured for automated execution.

- Quick imported: Imported using quick-import methods.

- CSV imported: Imported via CSV files.

Monitor this to see how your team leverages AI and automation in test creation.

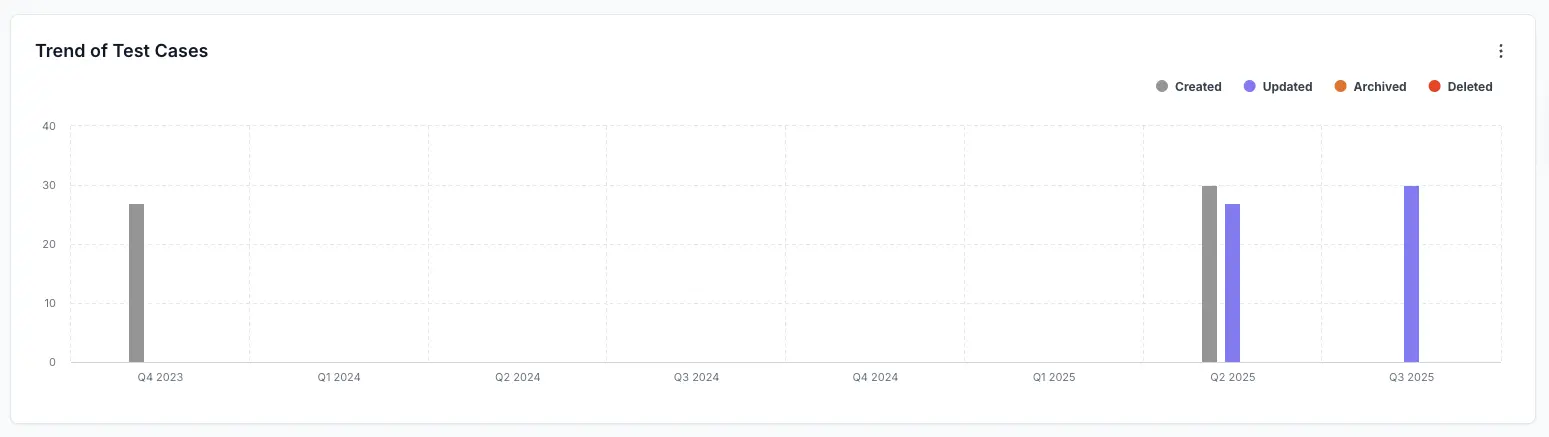

Trend of test cases

This graph shows test case activities over time:

- Created: New test cases added.

- Updated: Existing test cases modified.

- Archived: Test cases marked as archived.

- Deleted: Test cases permanently removed.

Use this widget to identify active or inactive periods in your test management process.

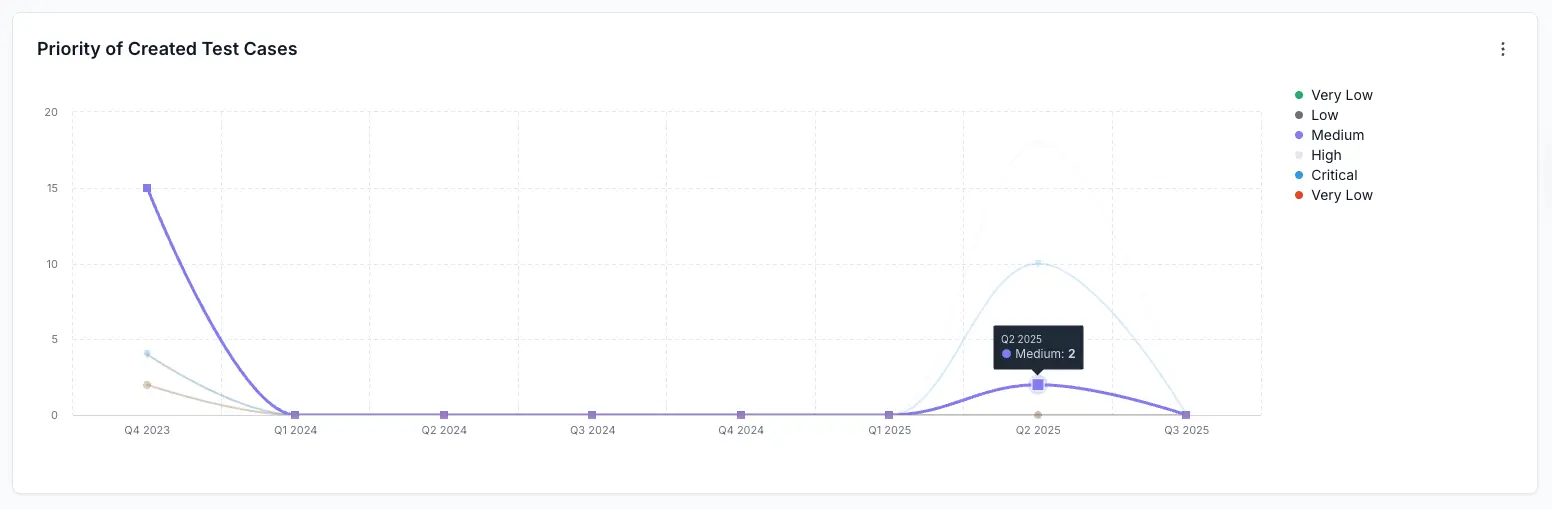
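Conceptually, a trend graph like this one groups activity events into time buckets and counts each activity type per bucket. The sketch below shows that idea with daily buckets. The event format (a timestamp paired with an action name) and the `daily_trend` function are assumptions made for illustration, and the actual bucket size used by the widget is not stated here.

```python
from collections import defaultdict
from datetime import datetime

# The four activity types plotted in the trend graph.
ACTIONS = ("created", "updated", "archived", "deleted")


def daily_trend(events):
    """Group (timestamp, action) events by calendar day.

    Returns {date: {action: count}} with dates in ascending order.
    Events with an unknown action are ignored.
    """
    buckets = defaultdict(lambda: dict.fromkeys(ACTIONS, 0))
    for timestamp, action in events:
        if action in ACTIONS:
            buckets[timestamp.date()][action] += 1
    return dict(sorted(buckets.items()))


# Example: hypothetical activity spread over two days.
events = [
    (datetime(2024, 1, 2, 9, 30), "created"),
    (datetime(2024, 1, 1, 14, 0), "created"),
    (datetime(2024, 1, 1, 16, 45), "updated"),
    (datetime(2024, 1, 2, 11, 15), "deleted"),
]
trend = daily_trend(events)
```

Days with no events do not appear in the result, which corresponds to the inactive periods the graph helps you spot.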

Priority of created test cases

This graph illustrates the priority level distribution of newly created test cases.

Priorities include Critical, High, Medium, and Low.

This helps you verify whether your team is focusing on high-priority testing needs.

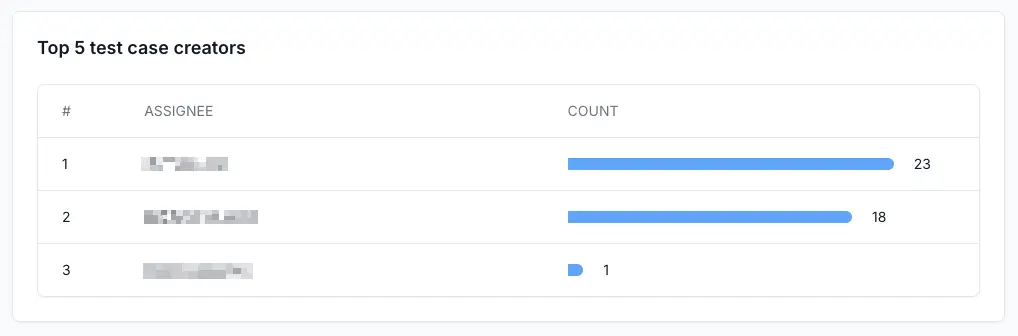
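One way to read this graph is to ask what share of newly created test cases are Critical or High. The sketch below counts test cases per priority and derives that share. Treating Critical and High together as "high priority" is an assumption for illustration, and both function names are hypothetical.

```python
from collections import Counter

# Priority levels in the order the report lists them.
PRIORITIES = ("Critical", "High", "Medium", "Low")


def priority_distribution(priorities):
    """Return (priority, count) pairs in the fixed order above."""
    counts = Counter(priorities)
    return [(p, counts[p]) for p in PRIORITIES]


def high_priority_share(priorities):
    """Return the percentage of test cases that are Critical or High."""
    counts = Counter(priorities)
    total = sum(counts[p] for p in PRIORITIES)
    if total == 0:
        return 0.0
    return round(100 * (counts["Critical"] + counts["High"]) / total, 1)


# Example: priorities of five hypothetical newly created test cases.
created = ["High", "Low", "Critical", "Medium", "Low"]
distribution = priority_distribution(created)
share = high_priority_share(created)
```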

Top five test case creators

This list shows team members who created the most test cases, ranked from highest to lowest:

- Assignee: Name of the creator.

- Count: Number of test cases created by each user.

Use this list to recognize highly active team members or to identify gaps in contributions.

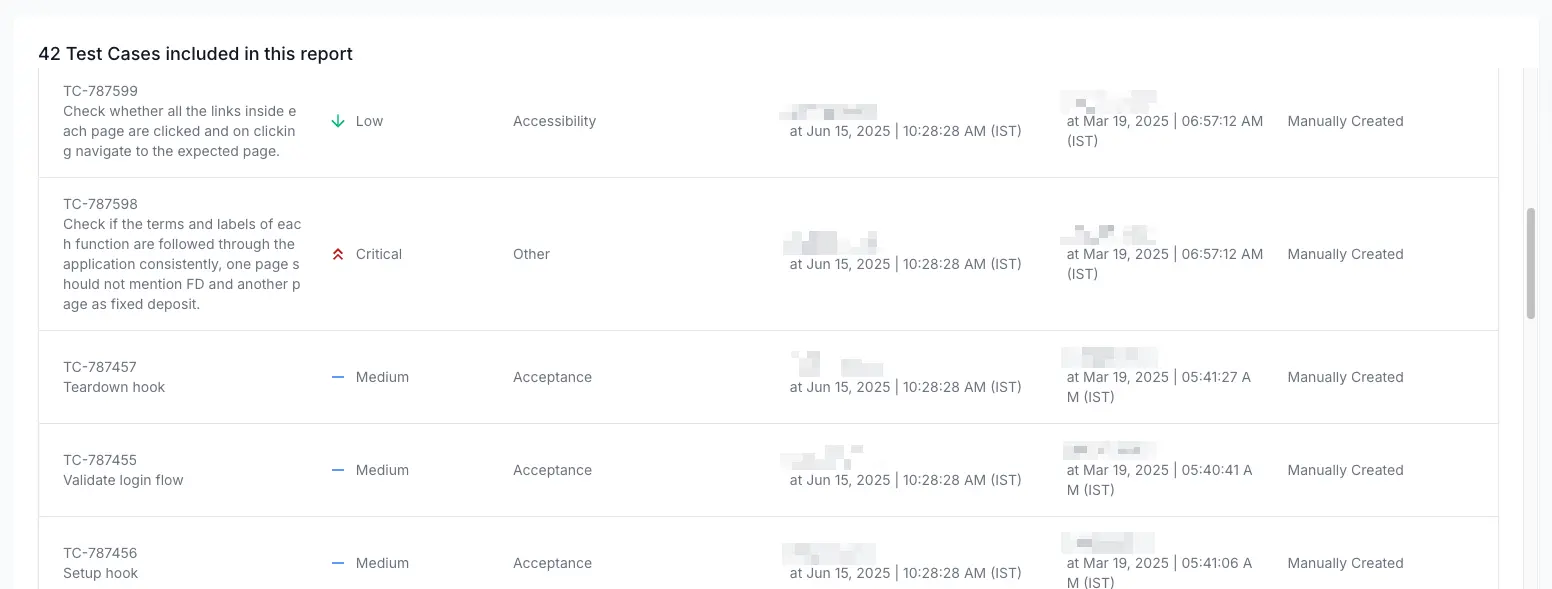
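The ranking in this widget amounts to counting test cases per creator and keeping the five largest counts. A minimal sketch of that idea follows, assuming you have the creator name for each test case (for example, from the Created By column of an exported report). The `top_creators` function and the sample names are hypothetical.

```python
from collections import Counter


def top_creators(created_by, n=5):
    """Return up to n (name, count) pairs, ranked from highest to lowest count."""
    return Counter(created_by).most_common(n)


# Example: creator names for six hypothetical test cases.
names = ["Asha", "Ben", "Asha", "Chen", "Asha", "Ben"]
ranking = top_creators(names)
```

When two creators have the same count, `most_common` keeps them in the order they first appeared, which may differ from how the widget breaks ties.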

Detailed list of test cases

This table provides details of every test case included in the report:

- Test Case ID: Unique identifier for the test case.

- Priority: Importance level (Critical, High, Medium, Low).

- Test Case Type: Category or purpose (e.g., Security, Functional).

- Last Updated: Date and time when the test case was last modified.

- Created By: Name of the person who created the test case.

- Source: How the test case was created (e.g., Manually Created, Imported).

This detailed view helps you inspect specific test cases for further action or analysis.

Next steps

- Learn how to use advanced filters in your reports.

- Learn how to share and download your test case activity report.
