How to perform Web Scraping using Selenium C#

By Arjun M. Shrivastva, Community Contributor - December 19, 2024

Web scraping is essential for automating the extraction of information from websites, saving time and effort compared to manual collection. It enables businesses to gather insights for market research, price monitoring, and trend analysis. It is especially helpful when dealing with dynamic or interactive websites.

By combining the performance and versatility of C# with the browser automation power of Selenium, you can efficiently scrape data and handle complex web interactions to drive informed decisions.

Disclaimer: This content is for informational purposes only and does not constitute legal advice. The legality of web scraping depends on various factors, including website terms of service, copyright laws, and regional regulations. It is your responsibility to ensure compliance with applicable laws and the website’s policies before engaging in any web scraping activities. For specific legal advice, please consult a qualified attorney.

- What is Web Scraping and its uses?

- Role of C# in Web Scraping

- How Selenium Enhances Web Scraping in C#

- How to perform Web Scraping using Selenium C#

- Prerequisites

What is Web Scraping and its uses?

Web scraping, also known as web harvesting or web data extraction, is the technique of automatically gathering data from websites. It works by first fetching the HTML of web pages and then extracting specific data points from it. The resulting structured data, gathered from one website or many, can then be applied to a variety of tasks.

Web scraping is advantageous for a number of reasons:

- Data Gathering: Web scraping makes it possible to swiftly collect a lot of data from numerous websites. Market research, competitor evaluation, sentiment analysis, price comparison, and trend monitoring are just a few uses for this data.

- Automation: Web scraping automates the process, saving time and effort compared to manually copying and pasting data from websites. You can use it to automatically retrieve data whenever you need it or on a regular basis.

- Data Integration: Web scraping makes it easier to integrate data from various websites into a single database or application. You can integrate information from numerous sources and analyse it to get insights and make wise judgements.

- Real-time Data: Web scraping enables you to obtain current information from websites. This is especially helpful for keeping track of stock prices, news updates, weather predictions, social media trends, and other information that must be current.

- Research and Analysis: Web scraping is a common method used by researchers and analysts to collect data for scholarly, scientific, or market research projects. They can use it to analyse large datasets, spot trends, and draw conclusions from the data gathered.

- Data Aggregation and Comparison: Web scraping gives you the ability to combine and contrast data from many websites or online platforms. To discover the best pricing, for instance, you can scrape product information and prices from various e-commerce websites.

- Monitoring and Tracking: Using web scraping, you can keep tabs on how websites change over time. To stay informed and respond appropriately, you may keep an eye on price adjustments, product availability, content updates, and other changes.

Web scraping can be a powerful tool, but it should only be used in an ethical and responsible manner. When scraping data, make sure you always abide by the terms of service of the website, respect privacy policies, and adhere to all legal and moral standards.

Role of C# in Web Scraping

C# is a powerful and versatile language that offers several advantages for web scraping. Its strong typing, high performance, and rich library support make it a great choice for building reliable and scalable scraping solutions.

One of C#’s biggest strengths is its smooth integration with Selenium WebDriver, a tool used to automate browser interactions. This makes it ideal for scraping dynamic or JavaScript-heavy websites.

Developers can take advantage of C# features like LINQ for easy data manipulation, multithreading to speed up scraping processes, and built-in tools for handling HTTP requests and responses.
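As a rough illustration of the LINQ point, the sketch below turns raw name and price strings into typed records and sorts them by price. The `Item` record, the `PriceSketch` helper, and the `$`-prefixed price format are assumptions made for this example, not something the scraped site or Selenium guarantees.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Linq;

// Hypothetical shape for one scraped row.
record Item(string Name, decimal Price);

static class PriceSketch
{
    // Turn a string such as "$799.00" into a decimal.
    // Assumes an optional leading '$' and '.' as the decimal separator.
    public static decimal ParsePrice(string text) =>
        decimal.Parse(text.Trim().TrimStart('$'), CultureInfo.InvariantCulture);

    // Convert raw { name, price } pairs into typed items, cheapest first.
    public static List<Item> CheapestFirst(IEnumerable<string[]> rows) =>
        rows.Select(r => new Item(r[0], ParsePrice(r[1])))
            .OrderBy(i => i.Price)
            .ToList();
}
```

Keeping prices as `decimal` rather than `string` is what makes sorting, filtering, and averaging with LINQ straightforward later on.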

C# also excels in error handling and debugging, which are critical for overcoming common scraping challenges such as dealing with CAPTCHAs, navigating AJAX-loaded content, and working with complex web page structures.

Furthermore, its compatibility with .NET libraries and frameworks simplifies data storage, processing, and integration with larger systems, making it a practical and efficient choice for web scraping projects.

How Selenium Enhances Web Scraping in C#

Selenium is a powerful browser automation tool that significantly enhances the capabilities of web scraping in C#. It allows developers to interact with web pages just like a real user, making it ideal for extracting data from websites with dynamic content, JavaScript-heavy pages, or complex user interfaces.

Here is how to use Selenium for web scraping:

- Automating Browser Actions: Using Selenium WebDriver, you can automate browser tasks including scrolling, browsing between sites, clicking buttons, filling out forms, and interacting with website objects. WebDriver methods can be used programmatically to carry out these operations.

- Data Extraction: Using Selenium, you can find and extract data from particular web page elements. To locate the desired elements, you can use any of the locator strategies Selenium provides, including XPath, CSS selectors, and element IDs. Once you have found the elements, you can extract their text, attribute values, or other pertinent information.

- Managing Dynamic Content: Selenium is very helpful for scraping websites that rely heavily on JavaScript or have dynamic content that loads or changes after the initial page load. Because Selenium drives the browser directly, it can wait for AJAX requests to finish, act on dynamically loaded elements, and fetch the updated content.

- Taking Screenshots: Selenium enables you to take screenshots of websites, which is helpful for preserving or visually checking the data that has been scraped.

- Handling Authentication: Selenium can automate the login process by filling out login forms, sending credentials, and managing cookies and sessions whenever the website asks for authentication or login.

- Scraping JavaScript-rendered Pages: Selenium is capable of scraping websites that are built with JavaScript frameworks like Angular, React, or Vue.js. Since Selenium manages a real browser, it has the ability to run JavaScript and then receive the complete rendered HTML.

Unlike other scraping techniques, Selenium web scraping requires launching and controlling a full web browser, which adds some performance and resource overhead.
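To make the dynamic-content point concrete, here is a minimal sketch of an explicit wait. It assumes the `shelf-item` class name used for product cards on bstackdemo.com and an arbitrary 10-second timeout; depending on your Selenium version, `WebDriverWait` may also require the Selenium.Support package.

```csharp
using System;
using OpenQA.Selenium;
using OpenQA.Selenium.Chrome;
using OpenQA.Selenium.Support.UI;

class DynamicContentSketch
{
    static void Main()
    {
        IWebDriver driver = new ChromeDriver();
        try
        {
            driver.Navigate().GoToUrl("https://bstackdemo.com/");

            // Poll for up to 10 seconds (an assumed timeout) until at least
            // one product card exists; throws WebDriverTimeoutException otherwise.
            var wait = new WebDriverWait(driver, TimeSpan.FromSeconds(10));
            wait.Until(d => d.FindElements(By.ClassName("shelf-item")).Count > 0);

            var cards = driver.FindElements(By.ClassName("shelf-item"));
            Console.WriteLine($"Found {cards.Count} product cards.");
        }
        finally
        {
            // Close the browser even if the wait times out.
            driver.Quit();
        }
    }
}
```

Waiting on a condition rather than sleeping for a fixed time keeps the script fast when the page loads quickly and tolerant when it loads slowly.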

How to perform Web Scraping using Selenium C#

Prerequisites

Before implementing web scraping with Selenium C#, you will need the following prerequisites:

1. Visual Studio IDE: You can download it from the official Visual Studio website.

2. Selenium WebDriver: WebDriver is Selenium’s application programming interface. It provides the means to instruct the browser to carry out specific tasks.

3. C# Packages: Using the Selenium WebDriver and NUnit framework, we demonstrate Selenium web scraping. The following libraries (or packages) are necessary for the NUnit project:

- Selenium WebDriver

- NUnit

- NUnit3TestAdapter

- Microsoft.NET.Test.Sdk

These are the common packages used with NUnit and Selenium for automated browser testing.

Setting up the Selenium C# Project

Follow the steps given below to set up Selenium C# before you begin Web Scraping using Selenium C#.

Step 1: To create a project in Visual Studio, follow the process below:

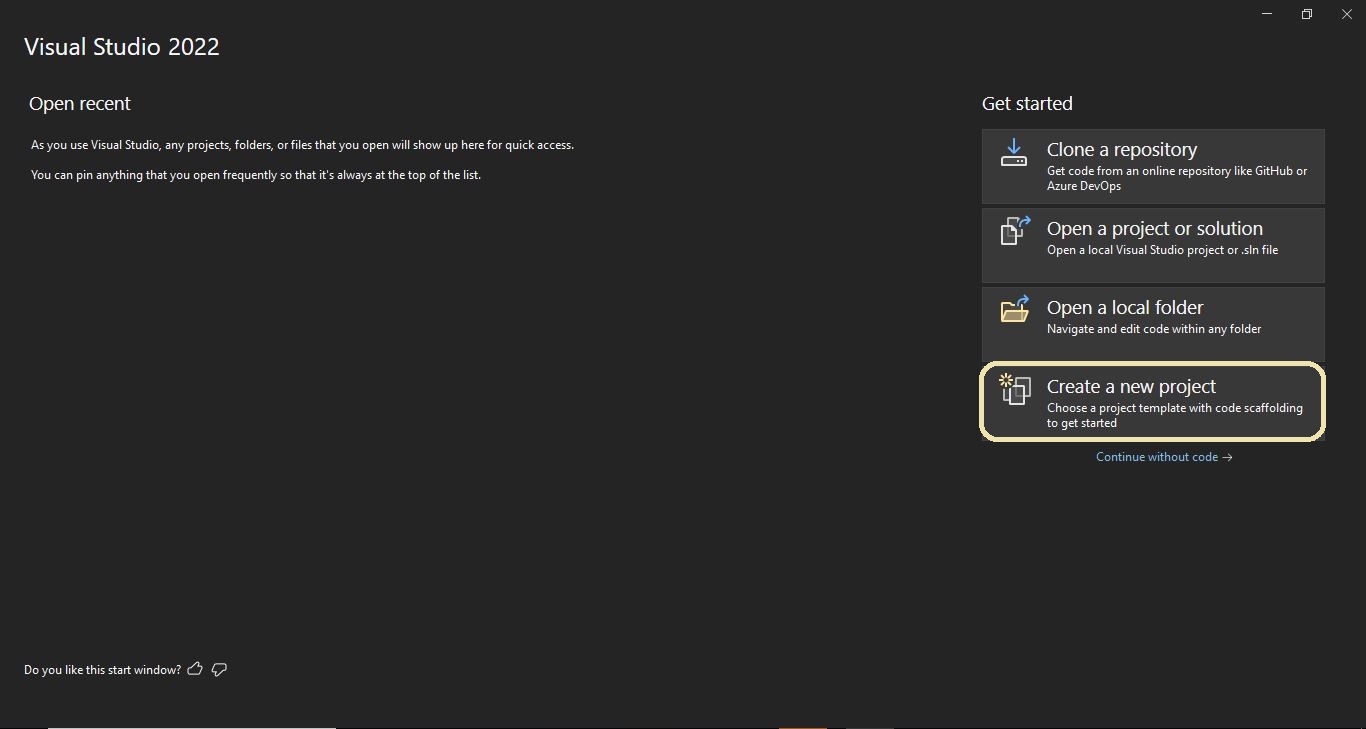

- Open Visual Studio and click on Create a new project option.

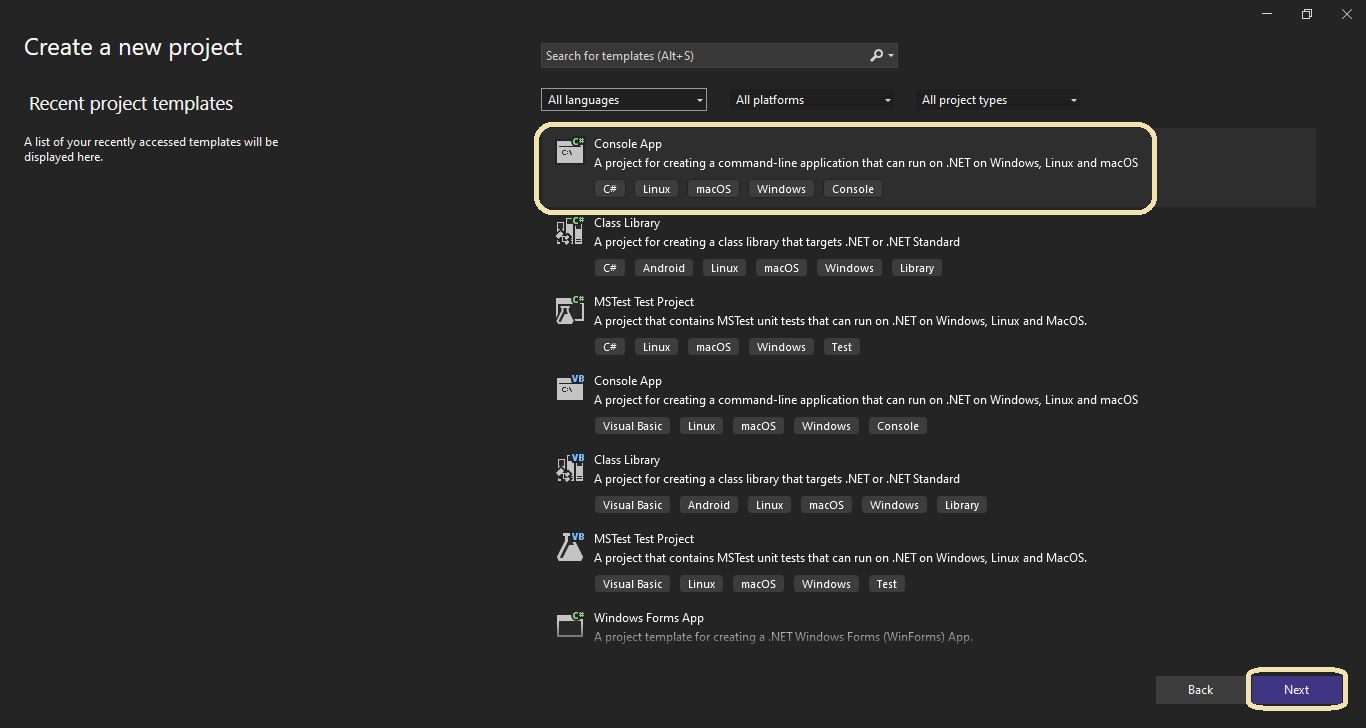

- On clicking a window will appear on the screen, where we will select Console App (.NET Framework) as a project template. After that, click on the Next button as we can see in below screenshot-

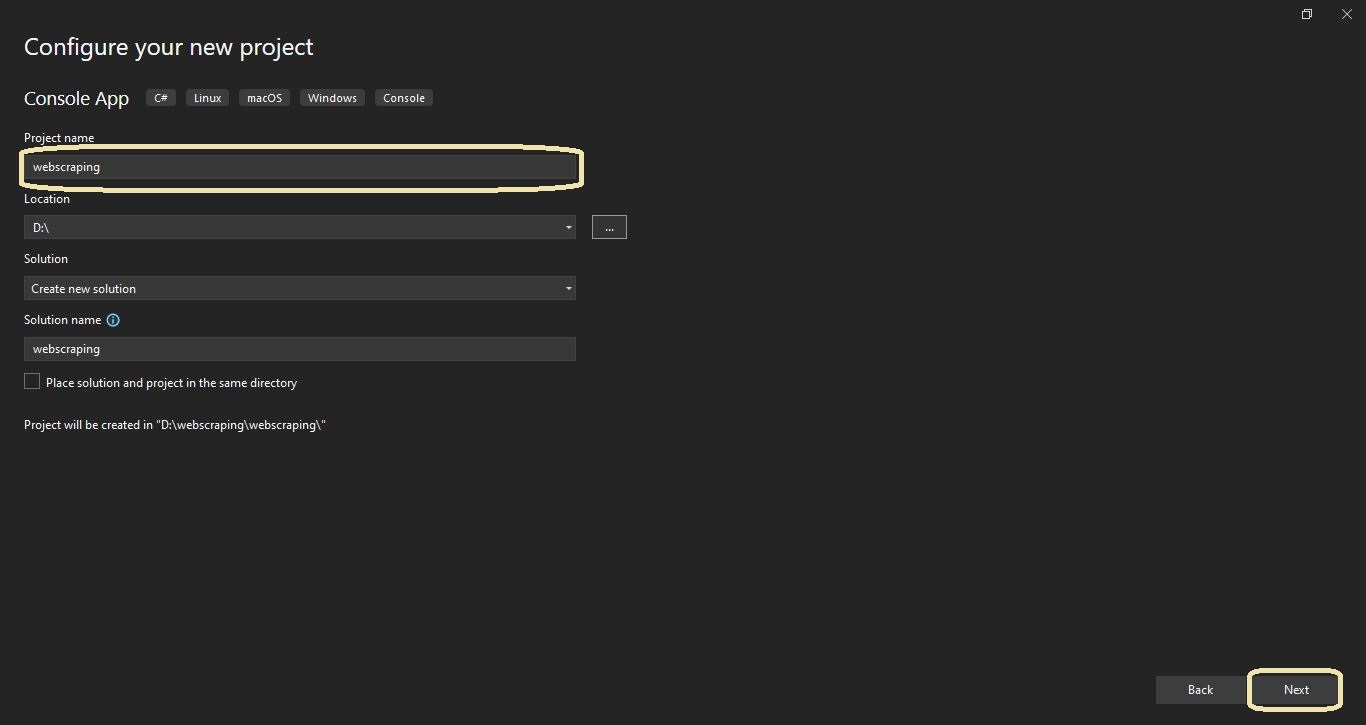

- Once we clicked on the next button, Configure your new project window will appear on the screen, where we will provide our Project name [webscraping], and click on next button.

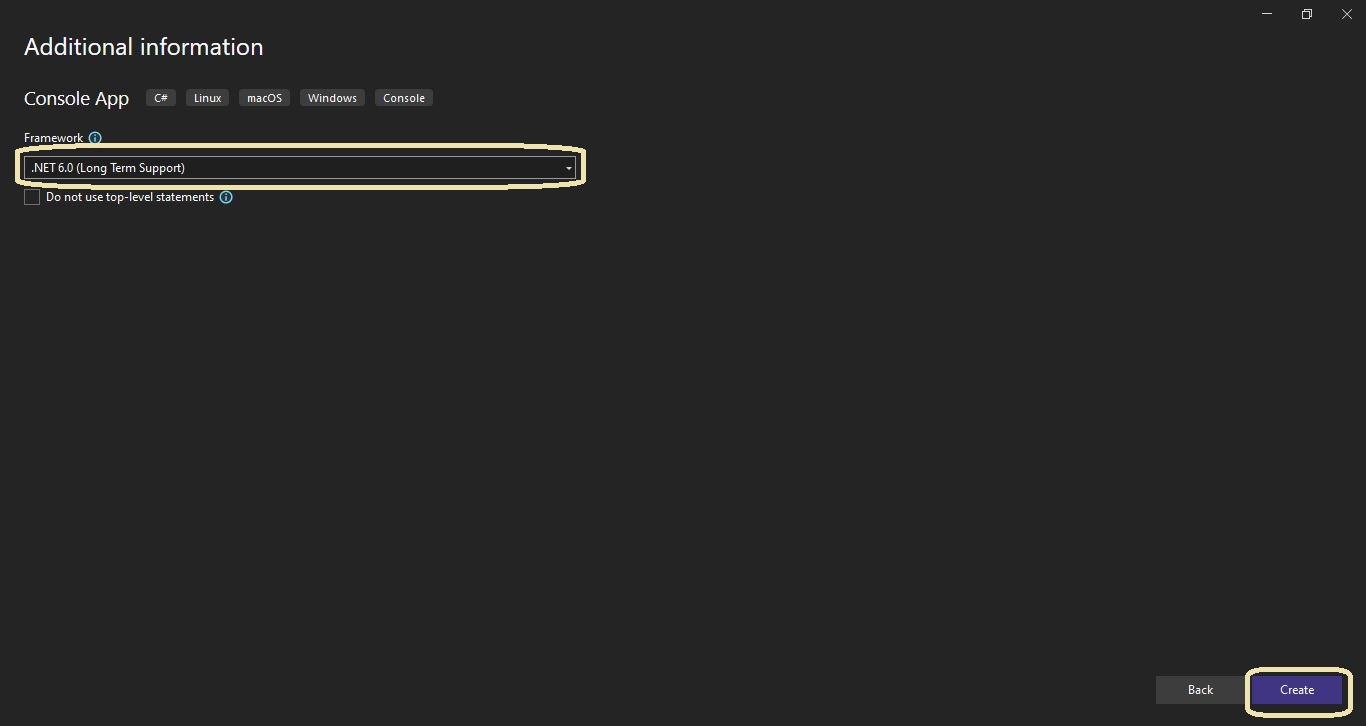

- Now we get the new window Additional Information on which we can select the target framework [.NET 6.0]. As shown in the screenshot below, clicking the Create button:

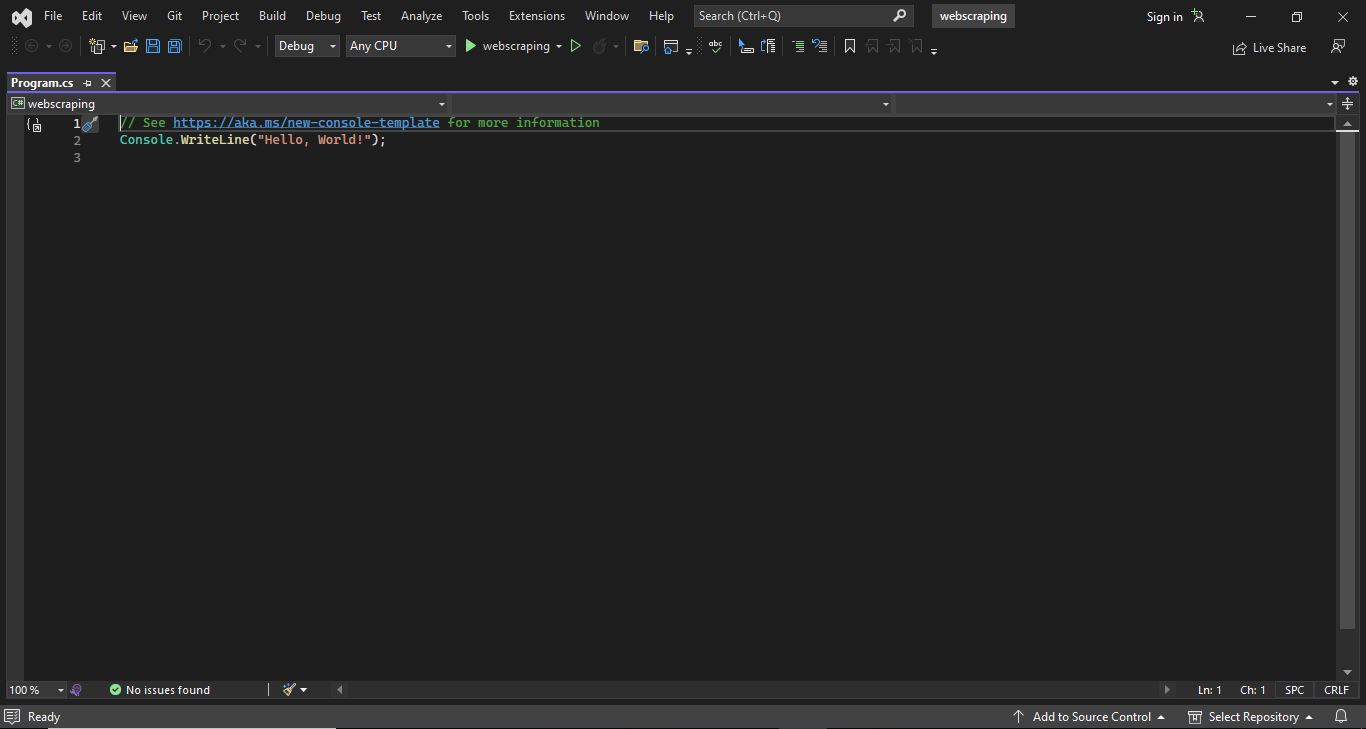

- Once the project is successfully created, and you will get a Program.cs file automatically.

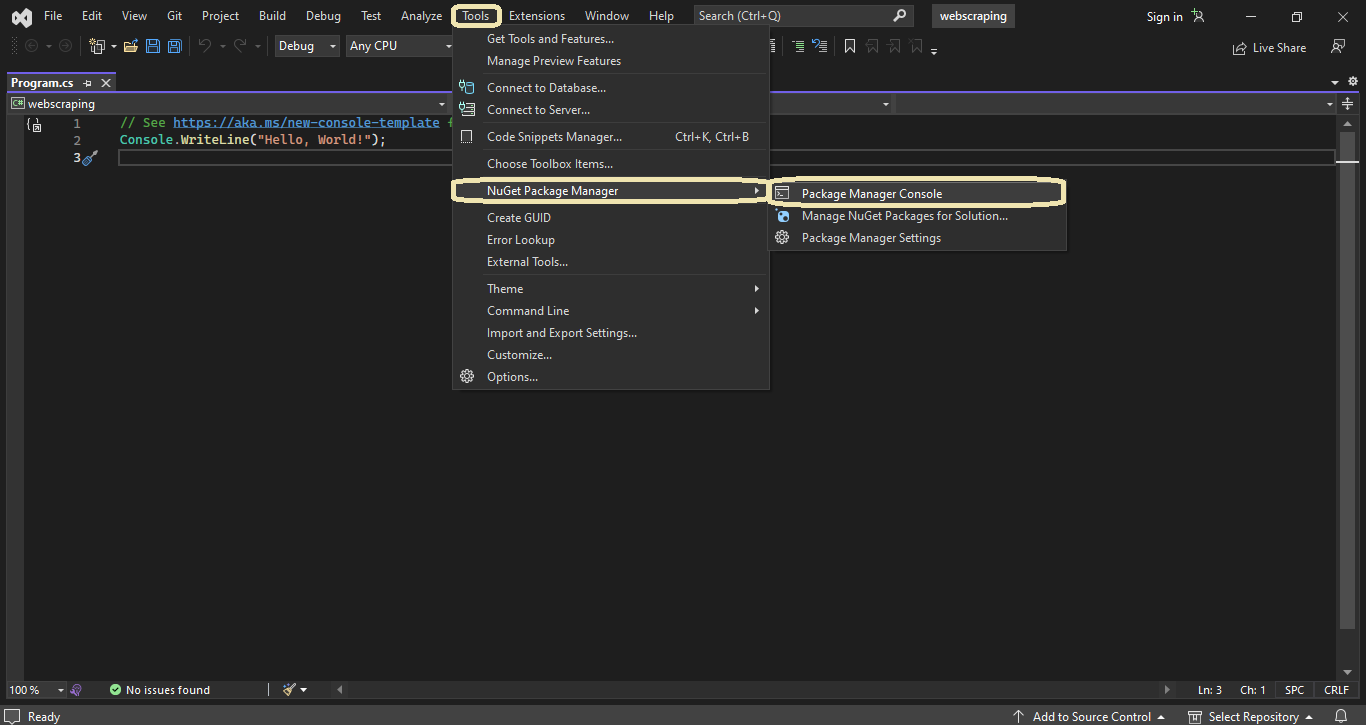

Step 2: Once you have created the project, install the packages mentioned above using the Package Manager (PM) console, which can be accessed through Tools >> NuGet Package Manager >> Package Manager Console.


Step 3: Run the following commands in the PM console, for installing the below packages

- Selenium WebDriver

Install-Package Selenium.WebDrive

- NUnit

Install-Package NUnit

- NUnit3TestAdapter

Install-Package NUnit3TestAdapter- Microsoft.NET.Test.Sdk

Install-Package Microsoft.NET.Test.Sdk- ChromeDriver to run webscraping test on Google Chrome browser

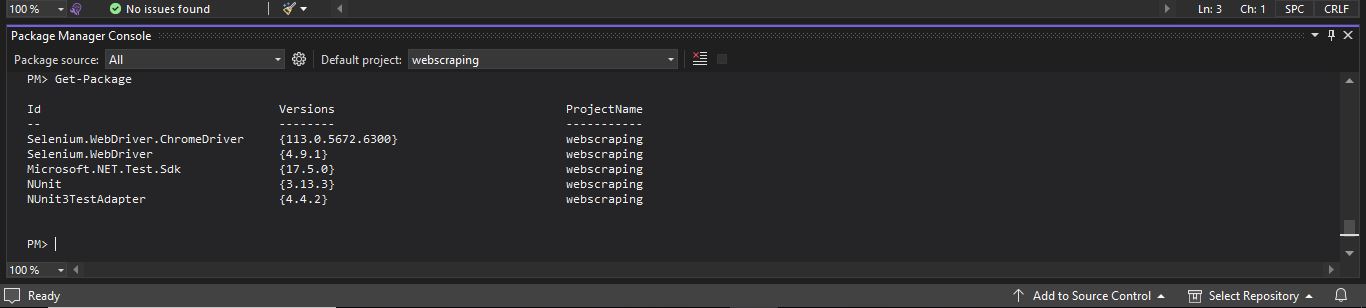

Install-Package Selenium.WebDriver.ChromeDriverStep 4: Run the Get-Package command on the PM console to confirm whether the above packages are installed successfully:

Now that the Selenium C# NUnit project’s required components have been installed, we can add a NUnit test scenario to do web scraping.

How to perform Web Scraping: Example

In this demonstration, we will scrape the name and price of every item on the bstackdemo.com website and save them in a CSV file. Chrome will be used to run the web scraping test scenario.

To scrape data from an eCommerce site using Selenium in C#, you can follow these steps:

Step 1: Set up the Selenium WebDriver and navigate to the https://bstackdemo.com/ website

    using System.Collections.Generic;
    using OpenQA.Selenium;
    using OpenQA.Selenium.Chrome;

    class Program
    {
        static void Main()
        {
            // Set up ChromeDriver
            IWebDriver driver = new ChromeDriver();

            // Navigate to the demo website
            driver.Navigate().GoToUrl("https://bstackdemo.com/");

            // Create a list to store the item details
            List<string[]> items = new List<string[]>();

            // Add your scraping logic here

            // Close the browser
            driver.Quit();
        }
    }

Step 2: Identify the elements you want to scrape using their HTML structure, attributes, or XPath. For example, to scrape the name and price of each product, you can use code like the following, which adds these details to the list created above.

    // Find elements that contain the product details
    IReadOnlyCollection<IWebElement> productElements = driver.FindElements(By.ClassName("shelf-item"));

    // Loop through the product elements and extract the desired information
    foreach (IWebElement productElement in productElements)
    {
        // Extract the name and price of the product
        string name = productElement.FindElement(By.ClassName("shelf-item__title")).Text;
        string price = productElement.FindElement(By.ClassName("val")).Text;

        // Add the item details to the list
        items.Add(new string[] { name, price });
    }

Step 3: Finally, save all the extracted data in a CSV file.

    // Save the extracted data in a CSV file (StreamWriter lives in System.IO)
    string csvFilePath = "\\webscraping\\items.csv";

    using (StreamWriter writer = new StreamWriter(csvFilePath))
    {
        // Write the CSV header
        writer.WriteLine("Name,Price");

        // Write the item details
        foreach (string[] item in items)
        {
            writer.WriteLine(string.Join(",", item));
        }
    }
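One caveat with joining fields on a comma: a product name that itself contains a comma or a double quote would break the row. A minimal sketch of RFC 4180-style quoting follows; `CsvSketch`, `Escape`, and `ToCsvLine` are hypothetical helper names introduced here, not part of Selenium or .NET.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

static class CsvSketch
{
    // Wrap a field in double quotes when it contains a comma, a quote,
    // or a line break; embedded quotes are doubled.
    public static string Escape(string field)
    {
        bool needsQuotes = field.IndexOfAny(new[] { ',', '"', '\n', '\r' }) >= 0;
        return needsQuotes ? "\"" + field.Replace("\"", "\"\"") + "\"" : field;
    }

    // Build one CSV row from already-extracted field values.
    public static string ToCsvLine(IEnumerable<string> fields) =>
        string.Join(",", fields.Select(Escape));
}
```

With a helper like this, the write loop could call `writer.WriteLine(CsvSketch.ToCsvLine(item))` in place of `string.Join(",", item)`.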

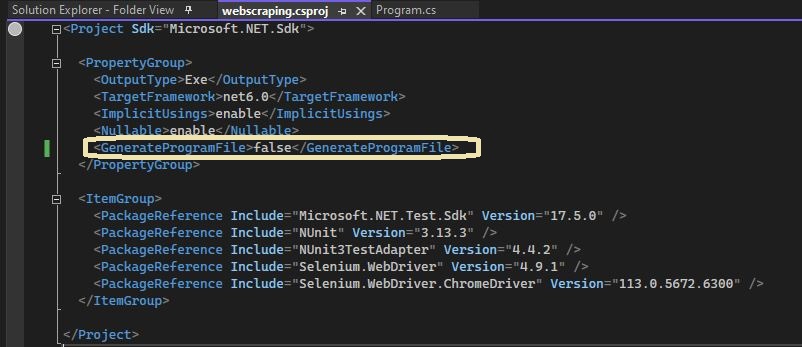

}Note: As our program includes a static void main method, so we must disable the auto-generation of the program file. Add the following element to your test project’s .csproj, inside a <PropertyGroup> element:

<GenerateProgramFile>false</GenerateProgramFile>

Adding screenshot for better understanding:


Code for Web Scraping using Selenium C#: Example

The code below implements web scraping using C#. It opens bstackdemo.com, extracts the name and price of each product, and saves them in a CSV file.

    using System.Collections.Generic;
    using System.IO;
    using OpenQA.Selenium;
    using OpenQA.Selenium.Chrome;

    class Program
    {
        static void Main()
        {
            IWebDriver driver = new ChromeDriver();
            driver.Navigate().GoToUrl("https://bstackdemo.com/");

            List<string[]> items = new List<string[]>();
            IReadOnlyCollection<IWebElement> productElements = driver.FindElements(By.ClassName("shelf-item"));

            // Collect the name and price of every product on the page
            foreach (IWebElement productElement in productElements)
            {
                string name = productElement.FindElement(By.ClassName("shelf-item__title")).Text;
                string price = productElement.FindElement(By.ClassName("val")).Text;
                items.Add(new string[] { name, price });
            }

            // Write the CSV once, after the loop has collected all items
            string csvFilePath = "\\webscraping\\items.csv";
            using (StreamWriter writer = new StreamWriter(csvFilePath))
            {
                writer.WriteLine("Name,Price");
                foreach (string[] item in items)
                {
                    writer.WriteLine(string.Join(",", item));
                }
            }

            driver.Quit();
        }
    }

To run the program, press Ctrl+F5 or select the green Run button from the top menu.

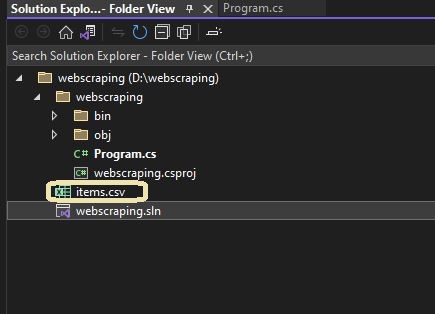

On the execution, you will get the items.csv file in project folder. (Shown as below)

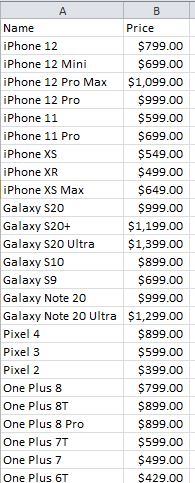

On opening that file, you will see all the item listed along with their price.

Common Challenges in Web Scraping

Here are some of the most common challenges faced during web scraping:

- Dynamic Content Loading: Many websites load content dynamically using JavaScript, making it difficult to access data directly from the initial HTML source.

- CAPTCHAs and Anti-Bot Measures: Websites use CAPTCHAs, honeypots, and behavior analysis to block bots, creating hurdles for automated scraping tools.

- AJAX and Asynchronous Updates: AJAX-powered pages update content asynchronously, making it challenging to determine when the data is ready for extraction.

- Rate Limiting and IP Blocking: Websites often monitor and limit requests from the same IP address or block suspicious activity to prevent overloading their servers.

- Complex Web Structures: Websites with deeply nested, inconsistent, or dynamically changing HTML structures can make it difficult to locate and extract desired elements.

- Legal and Ethical Concerns: Some websites restrict scraping through their robots.txt file or terms of service, and scraping them could lead to legal consequences.

- Data Volume and Scalability: Scraping large amounts of data can strain resources and require optimized solutions for efficient handling, storage, and processing.

- Error Handling: Issues like missing data, unexpected site changes, or server errors can disrupt scraping scripts and require robust error-handling mechanisms.

- Performance Constraints: Web scraping, especially at scale, can be slow and resource-intensive, particularly when interacting with JavaScript-heavy or media-rich websites.

- Maintaining Scripts: Frequent changes to website layouts or structures can render scraping scripts outdated, requiring continuous updates to keep them functional.
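Several of these challenges (transient server errors, rate limiting, elements that are not ready yet) are often softened by retrying with a growing pause. The sketch below is one possible shape for that; `RetrySketch.WithRetry` is a hypothetical helper, and catching every exception type is a simplification that real code would likely narrow.

```csharp
using System;
using System.Threading;

static class RetrySketch
{
    // Run `action`, retrying after a failure until `maxAttempts` is reached.
    // The pause doubles after each failed attempt (exponential backoff).
    public static T WithRetry<T>(Func<T> action, int maxAttempts, TimeSpan initialDelay)
    {
        TimeSpan delay = initialDelay;
        for (int attempt = 1; ; attempt++)
        {
            try
            {
                return action();
            }
            catch (Exception) when (attempt < maxAttempts)
            {
                // Not the last attempt: wait, then try again.
                Thread.Sleep(delay);
                delay = delay + delay;
            }
            // On the last attempt the filter is false, so the exception propagates.
        }
    }
}
```

A scraping call could then be wrapped as, for example, `RetrySketch.WithRetry(() => driver.FindElements(By.ClassName("shelf-item")), 3, TimeSpan.FromSeconds(1))`, where the attempt count and delay are arbitrary illustrative values.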

Tips for Optimizing Web Scraping with Selenium and C#

By applying the following tips, you can optimize the performance, reliability, and maintainability of your web scraping projects using Selenium and C#:

- Use Headless Browsers: Run Selenium in headless mode to improve performance by skipping the graphical user interface, reducing resource consumption, and speeding up scraping tasks.

- Implement Explicit Waits: Use explicit waits to ensure elements are fully loaded before interacting with them, reducing errors caused by dynamic content.

- Leverage Multithreading: Utilize C#’s multithreading capabilities to run multiple scraping tasks simultaneously, improving efficiency and reducing overall execution time.

- Minimize Browser Interactions: Limit the number of interactions with the browser by batching operations, such as extracting multiple data points at once instead of one by one.

- Use Efficient Locators: Opt for efficient and robust element locators like CSS Selectors or XPath, tailored to the page structure, to avoid brittle scripts.

- Optimize Data Extraction Logic: Avoid unnecessary operations and loops when extracting data. Filter and target specific data points to streamline the process.

- Handle Errors Gracefully: Implement robust error-handling mechanisms to manage common issues like stale elements, timeouts, and unexpected site changes.

- Rotate Proxies and User Agents: Use rotating proxies and random user-agent strings to avoid IP blocking and reduce the risk of detection by anti-scraping measures.

- Incorporate Logging and Monitoring: Add logging to track the scraping process and quickly identify issues when errors occur, improving maintainability.

- Respect Website Policies: Follow ethical scraping practices by checking robots.txt files, setting appropriate delays between requests, and not overloading servers with excessive traffic.

- Use BrowserStack for Testing: Test and debug your Selenium scripts across multiple browsers and environments using tools like BrowserStack to ensure compatibility and reliability.

- Utilize Parallel Testing Frameworks: Integrate parallel test execution frameworks with Selenium and C# to distribute tasks across multiple instances, enhancing performance.
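As an example of the first tip, the sketch below starts Chrome in headless mode through `ChromeOptions`. It assumes a recent Chrome build that accepts the `--headless=new` argument (older builds may need plain `--headless`), and the window size is an arbitrary choice.

```csharp
using System;
using OpenQA.Selenium;
using OpenQA.Selenium.Chrome;

class HeadlessSketch
{
    static void Main()
    {
        var options = new ChromeOptions();
        // Assumption: this Chrome version understands the "new" headless mode.
        options.AddArgument("--headless=new");
        // A fixed window size keeps the layout predictable without a visible window.
        options.AddArgument("--window-size=1920,1080");

        IWebDriver driver = new ChromeDriver(options);
        try
        {
            driver.Navigate().GoToUrl("https://bstackdemo.com/");
            Console.WriteLine(driver.Title);
        }
        finally
        {
            // Always release the browser process, even on failure.
            driver.Quit();
        }
    }
}
```

The rest of a scraping script stays the same; only the driver construction changes, which makes it easy to switch headless mode on or off while debugging.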

Why choose BrowserStack to execute Selenium C# Tests?

BrowserStack is an industry-leading platform that enhances the testing and debugging process for Selenium C# scripts, offering a range of features that make it an ideal choice for executing web scraping and automation tasks:

- Cross-Browser Testing: BrowserStack provides access to a wide range of real browsers and operating systems, ensuring your Selenium C# tests run seamlessly across different environments.

- Real Device Testing: It allows you to test scripts on real devices, making it easier to handle quirks or inconsistencies that might arise on specific platforms or browser versions.

- Cloud-Based Infrastructure: With BrowserStack, there’s no need to set up or maintain complex local environments. The cloud infrastructure ensures quick and hassle-free test execution.

- Debugging Tools: BrowserStack offers detailed logs, screenshots, and video recordings of test runs, helping you identify and resolve issues faster.

- Scalability and Parallel Execution: Run multiple Selenium C# tests in parallel on different browsers and devices, significantly speeding up execution time and improving efficiency.

- Support for Headless Browsers: Use headless browser testing to execute web scraping tasks more efficiently, without the overhead of rendering the user interface.

- Advanced Security: BrowserStack ensures your data remains secure with enterprise-grade compliance, making it suitable for sensitive and large-scale projects.

- Simplified Collaboration: Share test results and logs with team members easily, streamlining workflows and improving collaboration.

Conclusion

In this article, you have learned the fundamentals of web scraping using Selenium C#, including how to scrape specific elements using locators. As you can see, this requires only a few lines of code. Just remember to comply with the website’s terms of service, be mindful of any rate limits or scraping restrictions, and follow ethical scraping practices.

Using BrowserStack is recommended, as it helps ensure that your scraping code works correctly across different real browsers and devices. It allows you to test your scraping scripts on various browser configurations without setting up multiple local environments, which improves the compatibility and reliability of your scraping code across browser platforms.