What is Test Data: Techniques, Challenges & Solutions

By Sandra Felice, Community Contributor - December 3, 2024

Test data is essential for validating software by simulating real-world data, ensuring accurate test execution and comprehensive coverage to improve software quality.

Well-prepared test data supports comprehensive test coverage because it simulates different scenarios, from general usage patterns to edge cases, and helps uncover potential bugs.

As applications grow in complexity, testers need efficient strategies for gathering, generating, and managing test data that reflects real user conditions.

This article explores the concept of test data, important techniques, and some best practices.

- What is Test Data?

- Importance of Test Data in Software Testing

- What is Test Data Generation?

- What is Corrupted Test Data?

What is Test Data?

Test data refers to various input values and conditions given to the software program during tests to check how well the software works and how it behaves under different conditions.

Testers use it to validate and verify the functionality, performance, and behavior of the software. In short, it represents the inputs that affect, or are affected by, the software during test execution.

Test data is a crucial aspect of the testing process and can include both positive and negative data. Positive test data is used to verify that the software produces the expected results, while negative test data is used to validate exceptions and error-handling cases.
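To make the distinction concrete, here is a minimal sketch in Python. The function `parse_quantity` and its 1 to 100 range are hypothetical and exist only for illustration; the point is how positive and negative data sets are kept separate and checked differently.

```python
def parse_quantity(raw: str) -> int:
    """Hypothetical function under test: parse an order quantity from 1 to 100."""
    value = int(raw)  # raises ValueError for non-numeric or empty text
    if not 1 <= value <= 100:
        raise ValueError(f"quantity out of range: {value}")
    return value


# Positive test data: inputs paired with the result we expect.
POSITIVE_CASES = [("1", 1), ("42", 42), ("100", 100)]

# Negative test data: inputs that should be rejected with an error.
NEGATIVE_CASES = ["0", "101", "-5", "abc", ""]


def run_cases() -> dict:
    """Run both data sets and count how many cases behaved as expected."""
    passed = {"positive": 0, "negative": 0}
    for raw, expected in POSITIVE_CASES:
        if parse_quantity(raw) == expected:
            passed["positive"] += 1
    for raw in NEGATIVE_CASES:
        try:
            parse_quantity(raw)
        except ValueError:
            passed["negative"] += 1  # rejection is the expected outcome
    return passed
```

A positive case passes when the output matches; a negative case passes when the input is rejected. Keeping the two lists apart makes it easy to see whether error handling is covered at all.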

Importance of Test Data in Software Testing

Test data is essential because it lets testers evaluate the software’s behavior under diverse conditions and confirm that the product meets specified requirements and functions correctly. It helps testers determine whether the software is ready for release.

Following are a few reasons why test data is important in software testing:

- Detecting & Addressing Bugs Early: Better test data coverage helps you identify bugs and errors early in the software testing life cycle (STLC). Catching these issues early saves time and effort.

- Improved Test Data Coverage: Proper test data provides clear traceability and a comprehensive overview of test cases and defect patterns.

- Efficient Testing Processes: Maintaining and managing test data allows you to prioritize test cases, optimize your test suites, and streamline testing cycles, leading to more efficient testing.

- Increased Return on Investment (ROI): Efficient reuse and maintenance of test data, through test data management, lead to fewer defects in production and allow the same data set to be reused for regression testing in future projects.

What is Test Data Generation?

Test data generation is a process that involves creating and managing values specifically for testing purposes. It aims to generate synthetic or representative data that validates the software’s functionality, performance, security, and other aspects.

Bonus: Streamline your testing with BrowserStack’s free tools! Instantly generate test data like credit card numbers and random addresses for your critical use cases.

Test data generation typically occurs through the following methods:

- Manual creation

- Utilizing test data creation automation tools

- Transferring existing data from production to the testing environment
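As an illustration of the automated route, the sketch below generates synthetic user records with Python's standard library. The field names and value ranges are assumptions made for this example, not a required schema. A fixed seed is used so that the same data can be regenerated for every test run.

```python
import random
import string


def make_users(count: int, seed: int = 0) -> list[dict]:
    """Generate `count` synthetic user records; the same seed gives the same data."""
    rng = random.Random(seed)  # local generator, so global random state is untouched
    users = []
    for user_id in range(1, count + 1):
        name = "".join(rng.choices(string.ascii_lowercase, k=8))
        users.append(
            {
                "id": user_id,
                "username": name,
                "email": f"{name}@example.com",  # example.com is reserved for documentation
                "age": rng.randint(18, 90),
            }
        )
    return users
```

Calling `make_users(1000, seed=7)` twice returns identical lists, which keeps failures reproducible; changing the seed gives a different but equally valid data set.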

What is Corrupted Test Data?

Corrupted test data occurs when test data is altered unintentionally, leading to unreliable test results and potential false failures. This often happens when multiple testers work in a shared environment, modifying data to fit individual test needs without resetting it, causing issues for others relying on the original data state.

How to Avoid Corrupted Test Data:

- Maintain data backups for recovery if needed.

- Reset data to its original state after each test.

- Assign unique data sets to each tester to prevent overlap.

- Notify data administrators of any data changes made during testing.
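One simple way to apply the "reset to original state" advice is to never hand out the shared data itself, only a copy of it. The sketch below assumes a small in-memory data set; `BASELINE` and the two scenario functions are hypothetical names used for illustration.

```python
import copy

# Hypothetical baseline data set that every test expects to start from.
BASELINE = {"accounts": [{"id": 1, "balance": 100}, {"id": 2, "balance": 50}]}


def fresh_data() -> dict:
    """Return an independent copy so changes in one test cannot leak into another."""
    return copy.deepcopy(BASELINE)


def withdrawal_scenario() -> int:
    data = fresh_data()
    data["accounts"][0]["balance"] -= 30  # this scenario mutates its own copy only
    return data["accounts"][0]["balance"]


def balance_report_scenario() -> int:
    data = fresh_data()  # still sees the original state, whatever ran before
    return sum(account["balance"] for account in data["accounts"])
```

With a real database the same idea usually takes the form of restoring a snapshot or rolling back a transaction after each test; the principle of starting every test from a known state is unchanged.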

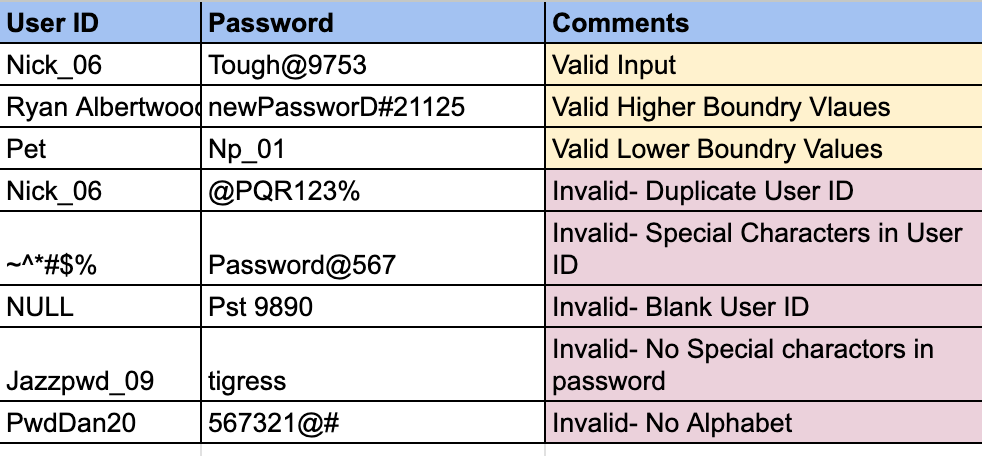

What are the Different Types of Test Data? (With Examples)

Effective software testing relies on a diverse array of test data to thoroughly evaluate an application’s functionality. This includes different scenarios to identify issues and ensure reliability.

Different types of test data serve distinct purposes in the testing process:

Valid Test Data

This type of test data consists of correct and acceptable inputs, ensuring that the software behaves as expected under normal conditions.

Example: Entering a valid email address in a registration form to confirm it accepts proper formats.

Invalid Test Data

Invalid test data includes incorrect or unexpected values, used to uncover weaknesses in data validation and error-handling, often revealing security vulnerabilities.

Example: Typing letters into a numeric-only field to check if the system blocks invalid entries.

Boundary Test Data

Boundary data involves testing values at the edges of acceptable ranges, which helps identify issues with handling extreme cases.

Example: Testing a form field with inputs at the maximum and minimum allowed lengths to confirm boundary handling.

Blank Test Data

Blank test data checks how the system responds to missing inputs, ensuring that it manages empty fields appropriately and provides user-friendly messages.

Example: Leaving a required form field empty to verify that the system prompts the user to fill in the field.
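The four types can sit side by side in a single table of cases. The sketch below assumes a hypothetical username rule (3 to 20 characters, letters and digits only) purely to have something to test against.

```python
def validate_username(value: str) -> bool:
    """Hypothetical rule: 3 to 20 characters, letters and digits only."""
    return 3 <= len(value) <= 20 and value.isalnum()


# Each entry: (category, input, expected result)
TEST_DATA = [
    ("valid", "alice42", True),
    ("invalid", "alice 42!", False),  # space and punctuation are not allowed
    ("boundary", "abc", True),        # minimum length
    ("boundary", "a" * 20, True),     # maximum length
    ("boundary", "ab", False),        # one below the minimum
    ("boundary", "a" * 21, False),    # one above the maximum
    ("blank", "", False),             # missing input
]


def failures() -> list[tuple]:
    """Return the cases where the validator disagrees with the expected result."""
    return [case for case in TEST_DATA if validate_username(case[1]) != case[2]]
```

Tagging each case with its category makes gaps visible: if a table has no "boundary" or "blank" rows, that type of data is simply not being tested.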

Properties of Test Data

Here are the key properties of test data:

- Relevance: Directly supports the test scenario by simulating actual usage patterns, enhancing the detection of critical issues.

- Diversity: Encompasses a wide range of inputs, covering typical and edge cases to capture potential defects.

- Manageability: Easy to organize and maintain, ensuring efficient access and updates throughout the testing process.

- Consistency: Maintains uniformity across test runs to ensure stable and repeatable results, minimizing unexpected test outcomes.

How to Create & Manage Test Data

Creating and managing test data is vital for effective software testing. Proper test data management ensures tests are reliable, repeatable, and comprehensive.

Here are strategies and best practices for creating and managing test data:

1. Identify Test Data Requirements

- Understand the Application: Gain a thorough understanding of the application’s data requirements, data flow, and dependencies.

- Define Test Scenarios: Identify all test scenarios, including edge cases, boundary conditions, and negative scenarios.

2. Select Test Data Sources

Choose test data based on the following test data types:

- Static Data: Predefined data that rarely changes.

- Dynamic Data: Data that varies with each test execution, such as user inputs or transaction data.

- Synthetic Data: Artificially created data that mimics real data while ensuring privacy and security.

- Production Data: Anonymized or masked data sourced from production systems.

3. Data Generation Techniques

Create test data based on the following techniques:

- Manual Data Creation: Manually create small sets of test data for straightforward test cases.

- Automated Data Generation: Use tools and scripts to generate large volumes of test data.

- Data Cloning: Copy subsets of production data while ensuring sensitive information is anonymized.

4. Data Management Tools

Manage test data using the following:

- Test Data Management Tools: Utilize tools like Informatica or CA Test Data Manager for creating, managing, and masking test data.

- Database Management Systems (DBMS): Use DBMS features to export, import, and manage data sets.

- Scripting Languages: Leverage languages like Python, SQL, or shell scripts to automate data creation and management tasks.

5. Data Security

- Protect Sensitive Data: Use data masking tools to anonymize or mask any data derived from production systems so that sensitive information is not exposed in test environments.

6. Data Versioning and Backup

- Version Control: Use version control systems to track different versions of test data sets.

- Backup and Restore: Regularly back up test data sets to prevent loss and facilitate easy restoration when needed.

7. Data Maintenance

- Regular Updates: Keep test data updated to reflect the current production environment.

- Data Cleanup: Periodically remove outdated or irrelevant test data to maintain integrity and performance.

8. Collaboration and Documentation

- Collaborate with Stakeholders: Work closely with developers, DBAs, and business analysts to meet test data requirements.
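The data security step above can be sketched with a keyed hash, which replaces personal values with stable tokens. This is a minimal illustration and not a substitute for a dedicated masking tool: the record layout, the choice of fields to mask, and the placeholder key are all assumptions.

```python
import hashlib
import hmac

# Assumption: the real key is stored outside the test environment; this is a placeholder.
MASKING_KEY = b"replace-with-a-secret-key"


def pseudonymize(value: str) -> str:
    """Replace a sensitive value with a stable token derived from a keyed hash."""
    digest = hmac.new(MASKING_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:12]


def mask_record(record: dict) -> dict:
    """Return a copy of the record with personal fields masked."""
    masked = dict(record)  # leave the input record untouched
    token = pseudonymize(record["email"])
    masked["name"] = f"user_{token}"
    masked["email"] = f"{token}@example.com"
    return masked
```

Because the same input always yields the same token, a customer who appears in several tables is masked consistently, so joins between masked tables still work.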

Different Test Data Preparation Techniques

Test data preparation is a crucial element of software testing, and several techniques can be employed to prepare test data effectively.

Here are some of the different test data preparation techniques:

- Manual Data Entry: Testers manually input data into the system under test to ensure data accuracy for specific test scenarios.

- New Data Insertion: Fresh test data is fed into a newly built database according to testing requirements, and it is used to execute test cases by comparing actual results with expected results.

- Synthetic Data Generation: Synthetic data is created using data generation tools, scripts, or programs. This technique is particularly useful for generating large datasets with diverse values.

- Data Conversion: Existing data is transformed into different formats or structures to assess the application’s ability to handle diverse data inputs.

- Production Data Subsetting: A subset of production data is selected and used for testing, focusing on specific test cases and scenarios to save resources and maintain data relevance.
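Subsetting is only useful if the subset stays internally consistent. The sketch below assumes two hypothetical tables, customers and orders, that have already been masked, and keeps only the orders whose customer is also in the subset.

```python
# Hypothetical, already-masked sample tables.
CUSTOMERS = [
    {"id": 1, "region": "EU"},
    {"id": 2, "region": "US"},
    {"id": 3, "region": "EU"},
]
ORDERS = [
    {"id": 10, "customer_id": 1},
    {"id": 11, "customer_id": 2},
    {"id": 12, "customer_id": 3},
    {"id": 13, "customer_id": 1},
]


def subset_by_region(customers: list[dict], orders: list[dict], region: str):
    """Keep customers from one region and only the orders that belong to them."""
    kept_customers = [c for c in customers if c["region"] == region]
    kept_ids = {c["id"] for c in kept_customers}
    # Filtering orders by the kept ids avoids orphaned rows in the subset.
    kept_orders = [o for o in orders if o["customer_id"] in kept_ids]
    return kept_customers, kept_orders
```

Filtering child rows by the parent ids that survived is the key step; a subset with orders pointing at missing customers tends to cause failures that have nothing to do with the feature under test.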

Testing Types that Require Test Data

Effective software validation relies on test data across different types of testing to thoroughly assess software functionality. Each testing type has its own test data requirements, tailored to the aspect of the system it evaluates.

Here are the testing types along with the corresponding test data requirements:

Test Data for White Box Testing

White box testing focuses on testing an application’s internal structures, algorithms, and code logic. Test data for white box testing is designed to evaluate specific code paths, logic branches, and conditions.

Types of Testing Under White Box Testing:

- Unit Testing: Testing individual functions or methods.

- Integration Testing: Testing interactions between modules.

- Regression Testing: Ensuring new changes don’t break existing functionality.

- Code Coverage Testing: Ensuring all code paths are tested.

Test Data Requirements:

- Data that exercises all code paths, including loops and condition branches.

- Boundary values for input fields to verify the handling of edge cases.

- Special cases that trigger errors or exceptions in the logic.
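For white box testing, the data is chosen by reading the code. In the sketch below, `shipping_fee` is a hypothetical function with a weight branch, an express branch, and an error path; the data rows are picked so that each path is executed at least once.

```python
def shipping_fee(weight_kg: float, express: bool) -> float:
    """Hypothetical function under test with several branches."""
    if weight_kg <= 0:
        raise ValueError("weight must be positive")
    fee = 5.0 if weight_kg <= 2 else 5.0 + (weight_kg - 2) * 1.5
    if express:
        fee *= 2
    return fee


# One row per path through the two conditions: (weight_kg, express, expected fee)
BRANCH_DATA = [
    (2, False, 5.0),   # light, standard (2 kg is the boundary value)
    (4, False, 8.0),   # heavy, standard
    (2, True, 10.0),   # light, express
    (4, True, 16.0),   # heavy, express
]

# Inputs that should trigger the error branch.
ERROR_DATA = [0, -1]


def mismatches() -> list[tuple]:
    """Return the branch rows whose actual fee differs from the expected fee."""
    return [row for row in BRANCH_DATA if shipping_fee(row[0], row[1]) != row[2]]
```

A coverage tool can then confirm that every line and branch was actually reached by this data.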

Test Data for Performance Testing

Performance testing assesses how well a system performs under stress, load, or varying levels of usage. Test data for performance testing is designed to simulate realistic load scenarios and ensure the system meets performance criteria.

Types of Testing Under Performance Testing:

- Load Testing: Testing under normal expected load.

- Stress Testing: Testing under extreme load.

- Spike Testing: Testing with sudden large increases in load.

- Endurance Testing: Testing stability over extended periods.

Test Data Requirements:

- Large volumes of data to simulate user load or transactions.

- Varying data inputs to simulate different user interactions with the system.

- Data that mimics real-world usage patterns and peak load scenarios.
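Large volumes do not have to be stored up front. The sketch below yields synthetic transactions one at a time; the field names and the 80/18/2 split between actions are assumptions standing in for a usage profile that would normally come from production analytics.

```python
import random


def transactions(count: int, seed: int = 0):
    """Lazily yield `count` synthetic transactions without holding them all in memory."""
    rng = random.Random(seed)
    # Assumed usage mix: mostly views, some purchases, a few refunds.
    actions = ["view", "purchase", "refund"]
    weights = [80, 18, 2]
    for i in range(count):
        yield {
            "id": i,
            "user_id": rng.randint(1, 10_000),
            "action": rng.choices(actions, weights=weights, k=1)[0],
            "amount_cents": rng.randint(100, 50_000),
        }
```

Because the function is a generator, `transactions(10_000_000)` can be streamed straight into a load tool or a bulk insert without building a ten-million-item list first.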

Test Data for Security Testing

Security testing focuses on identifying software vulnerabilities, including threats from malicious attacks. Test data helps verify the software’s resilience against various security risks and threats.

Types of Testing Under Security Testing:

- Penetration Testing: Simulating attacks to identify weaknesses.

- Authentication Testing: Verifying that only users with valid credentials can sign in.

- Access Control Testing: Ensuring users can reach only the data and actions they are authorized for.

- Vulnerability Scanning: Identifying potential security flaws.

Test Data Requirements:

- Invalid or malicious inputs such as SQL injection, cross-site scripting (XSS), or buffer overflow data.

- Data that attempts to bypass authentication or access controls.

- Simulated attack data to assess the system’s response to breaches.
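Security test data is often a list of known attack strings run against an input. The sketch below uses an in-memory SQLite database and a hypothetical `login` function that relies on a parameterized query; the payloads are common SQL injection strings, and the test checks that none of them gets past the login.

```python
import sqlite3

# Common SQL injection strings used as negative test data.
PAYLOADS = ["' OR '1'='1", "admin'--", "'; DROP TABLE users; --"]


def make_db() -> sqlite3.Connection:
    """Create a throwaway in-memory database with one known user."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, password TEXT)")
    conn.execute("INSERT INTO users VALUES ('admin', 's3cret')")
    return conn


def login(conn: sqlite3.Connection, name: str, password: str) -> bool:
    """Hypothetical login check; the ? placeholders keep input out of the SQL text."""
    row = conn.execute(
        "SELECT 1 FROM users WHERE name = ? AND password = ?", (name, password)
    ).fetchone()
    return row is not None


def bypasses(conn: sqlite3.Connection) -> list[str]:
    """Return the payloads that manage to log in without the real password."""
    return [p for p in PAYLOADS if login(conn, p, p) or login(conn, "admin", p)]
```

An empty result from `bypasses` means the payloads were treated as plain text. The same list can be reused against any other input field that ends up in a query.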

Test Data for Black Box Testing

Black box testing focuses on validating an application’s functionality without knowledge of its internal workings.

Types of Testing Under Black Box Testing:

- Functional Testing: Verifying expected feature behavior.

- System Testing: Validating the entire system.

- Acceptance Testing: Ensuring business requirements are met.

- Regression Testing: Checking for unintended changes in behavior.

Test Data Requirements:

- Data representing typical user inputs to verify expected behavior.

- Boundary and edge case data to check the handling of limits.

- Invalid or erroneous data to check error handling and validation mechanisms.

Automated Test Data Generation Tools

Below are some popular automated test data generation tools:

- BrowserStack Low Code Automation: Use low-code automation to build, execute, and maintain tests easily with minimal coding, while leveraging AI-powered maintenance for efficiency and seamless cross-browser execution on BrowserStack’s real device cloud.

- TDM Data Generator: Good for generating large datasets for functional and performance testing but can be complex to configure for advanced scenarios.

- Mockaroo: Excellent for creating realistic and structured data but may lack advanced customization for very specific testing needs.

- GenRocket: Great for on-demand test data generation with complex relationships but requires significant setup time for intricate data models.

Once your test data is ready, start testing immediately with BrowserStack Automate. For an approach that needs minimal coding, leverage BrowserStack’s Low Code Automation to streamline and speed up your testing.

Common Challenges in Creating Test Data & their Solutions

Creating test data can be a complex process, and several recurring problems can reduce the effectiveness and efficiency of software testing.

Here are some of the most common challenges in creating test data and their solutions:

1. Data Volume and Variety

- Challenge: Ensuring comprehensive coverage of all scenarios without overwhelming the system.

- Solution: Utilize data generation tools to create manageable yet thorough data sets, focusing on critical paths and edge cases.

2. Data Privacy and Security

- Challenge: Safeguarding sensitive information during testing.

- Solution: Implement data masking and anonymization techniques to protect personal and sensitive data.

3. Maintaining Data Quality

- Challenge: Ensuring accuracy and relevance of test data.

- Solution: Regularly review and update test data, validate data integrity, and use realistic data generation methods.

4. Data Consistency Across Environments

- Challenge: Ensuring consistency across different testing environments (development, QA, staging, etc.).

- Solution: Use version control systems and data synchronization tools to manage data changes and maintain consistency.

5. Data Dependency Management

- Challenge: Managing dependencies between different data sets.

- Solution: Define clear data dependencies, automate data setup processes, and use relational databases to manage relationships.

6. Scalability and Performance

- Challenge: Generating test data for large-scale usage without compromising performance.

- Solution: Use data generation scripts or load testing tools to produce large volumes of test data, and monitor system performance while the data is generated and loaded.

7. Test Data Refresh

- Challenge: Keeping test data aligned with application changes.

- Solution: Implement automated data refresh processes and regularly update test data.

8. Managing Complex Data Structures

- Challenge: Handling complex data structures and relationships.

- Solution: Use advanced data generation tools and relational databases to maintain data integrity.

9. Test Data Maintenance

- Challenge: Keeping test data relevant and preventing data corruption.

- Solution: Implement regular maintenance routines and use tools that support data integrity checks.

10. Creating Realistic Data

- Challenge: Generating data that mirrors real-world scenarios.

- Solution: Analyze production data and use data generation tools to create diverse and realistic test data sets.
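For the data dependency challenge above, automating the setup order is often the first step. The sketch below uses Python's standard `graphlib` module to work out a load order from a map of table dependencies; the table names are hypothetical.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical tables mapped to the tables they reference through foreign keys.
DEPENDENCIES = {
    "customers": set(),
    "products": set(),
    "orders": {"customers"},
    "order_items": {"orders", "products"},
}


def load_order(dependencies: dict[str, set[str]]) -> list[str]:
    """Return an order in which every table is loaded after the tables it references."""
    return list(TopologicalSorter(dependencies).static_order())
```

Loading tables in the returned order means parent rows always exist before child rows are inserted. Reversing the list gives a safe order for cleanup. If the dependencies contain a cycle, `static_order` raises `graphlib.CycleError`, which is a useful early warning in itself.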

Best Practices for Creating Effective Test Data

Here are some best practices that will help you create effective test data:

- Automate test data generation to speed up the process and ensure quick access to data sources.

- Identify key test scenarios and conditions to generate relevant and diverse data.

- Involve appropriate teams to handle complex and large data volumes efficiently across systems.

- Use fresh, up-to-date data for testing to ensure accuracy and relevance.

- Regularly clean test data to remove duplicates and missing values for consistent results.
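The cleanup practice can be as simple as a filter that runs before each test cycle. The sketch below assumes records are dictionaries, that `id` and `email` are the required fields, and that two records with the same `id` count as duplicates; all three are assumptions for this example.

```python
REQUIRED_FIELDS = ("id", "email")  # assumed required fields for this example


def clean(records: list[dict]) -> list[dict]:
    """Drop records with missing required values and later records that repeat an id."""
    seen_ids = set()
    cleaned = []
    for record in records:
        if any(record.get(field) in (None, "") for field in REQUIRED_FIELDS):
            continue  # incomplete record
        if record["id"] in seen_ids:
            continue  # duplicate of a record already kept
        seen_ids.add(record["id"])
        cleaned.append(record)
    return cleaned
```

Whether incomplete records should be dropped or kept deliberately as blank test data depends on the test; this filter is meant for the baseline data set, not for negative cases.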

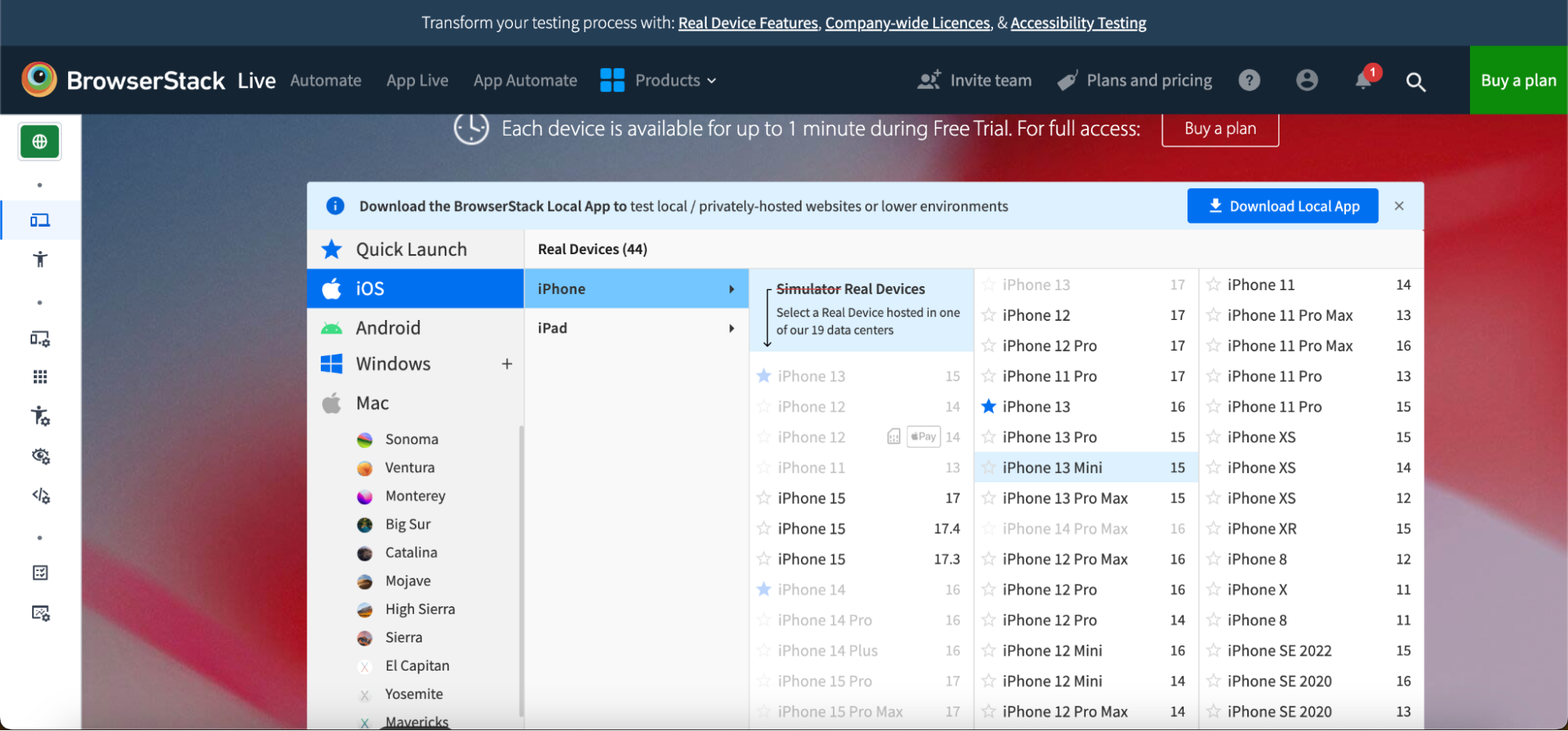

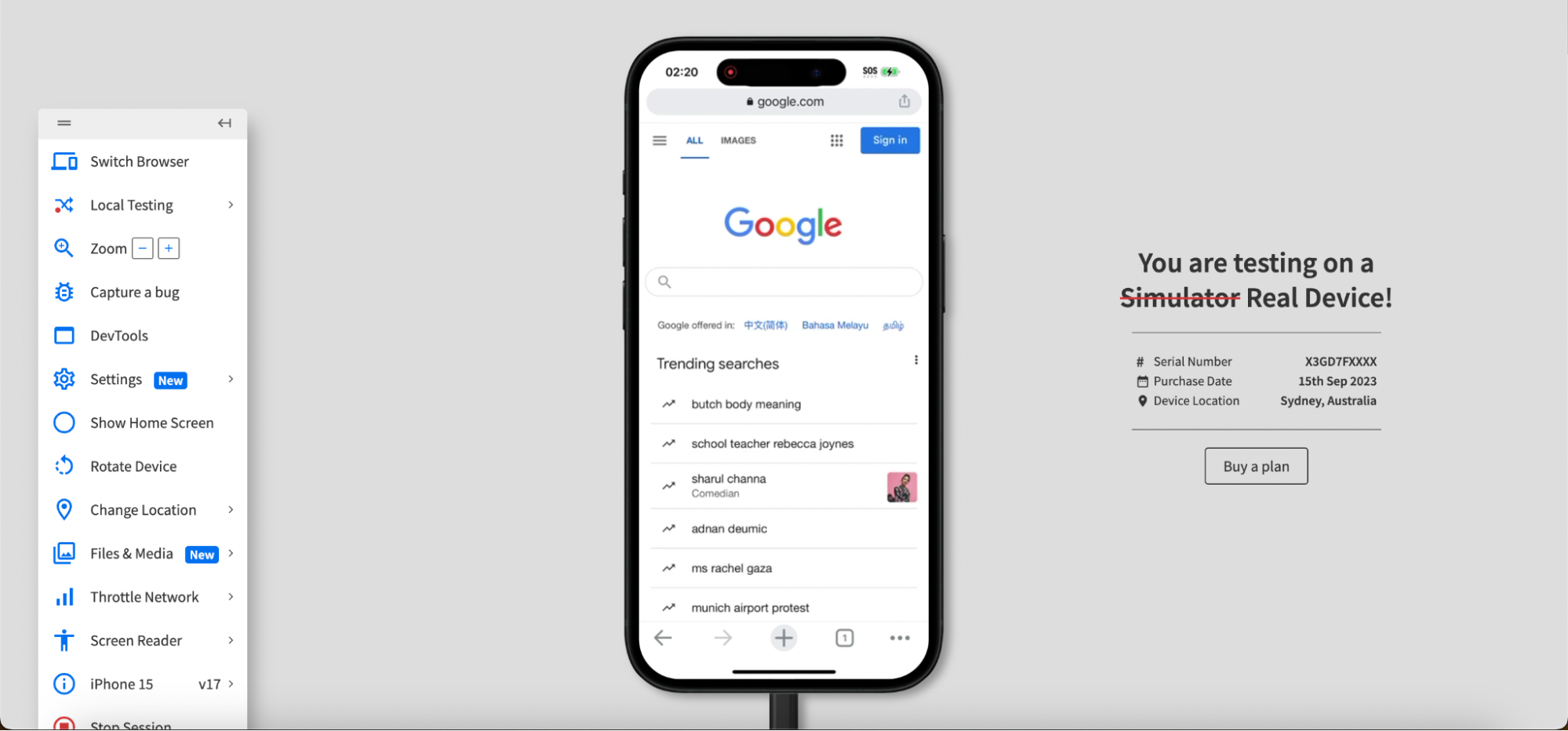

Why is Testing on Real Devices and Browsers important?

Real-device and real-browser testing play a vital role in ensuring the dependability, efficiency, and user experience of web and mobile applications.

Below are several reasons highlighting the significance of testing on actual devices and browsers:

1. Real User Experience

- Real devices and browsers accurately replicate user interactions, including touch gestures and native behaviors.

- Testing on real devices ensures compatibility with various screen sizes and resolutions for proper layout and usability.

2. Hardware and Software Variability

- Real devices have unique hardware features like cameras, GPS, and sensors, ensuring accurate testing of device-specific functionalities.

- Testing on real devices accounts for diverse operating system versions, ensuring compatibility and functionality across different OS environments.

3. Performance and Load Testing

- Real-device testing assesses application performance under different network conditions (3G, 4G, Wi-Fi), aiding in identifying performance issues and optimizing load handling.

- Monitoring battery, CPU, and memory usage during real-device testing ensures application efficiency and prevents excessive drain on device resources.

4. Browser-Specific Issues

- Real-browser testing helps detect rendering discrepancies across browsers like Chrome, Safari, Firefox, and Edge so they can be resolved for a consistent user experience.

- Testing on real browsers ensures proper functionality across different browser environments, accounting for unique features and plugins that may impact application behavior.

5. Security and Compliance

- Real-device and real-browser testing uncovers security vulnerabilities like data encryption issues and secure storage concerns that may go unnoticed in emulated environments.

- Testing on real devices helps validate adherence to regulatory standards such as GDPR and HIPAA in real-world scenarios.

6. User Environment Testing

- Real-device testing considers environmental factors like lighting, screen glare, and user handling that impact application usability.

- Testing on real devices and browsers validates smooth interactions with third-party services and APIs, ensuring seamless integrations.

7. Bug Detection and Fixes

- Real-device and browser testing aids in diagnosing and fixing bugs more accurately due to the realistic testing environment mirroring production conditions.

- End-to-end testing on real devices ensures a seamless user experience by covering the complete user journey comprehensively.

Conclusion

Test data is a critical component of software testing, enabling comprehensive validation and verification of applications. Effective test data management encompasses strategic planning, diverse data creation, data security measures, and seamless integration into testing processes. Prioritizing test data management improves testing accuracy and efficiency and facilitates the delivery of high-quality software that aligns with user expectations and market requirements.

BrowserStack’s Test Management Tool helps in the planning, execution, and assessment of tests, as well as the management of resources and data associated with testing. It provides teams with a centralized platform to manage test cases and test data, coordinate testing activities, and track progress in real-time.