Load testing with Selenium

Run a load test using Selenium scripts on BrowserStack Load Testing

Prerequisites

- Your BrowserStack username and access key. You can find these in your account profile.

- An existing automated test suite written in Java with Selenium, either as a vanilla Java project or using JUnit, TestNG, or TestNG Cucumber.

Use our load testing sample project to quickly get started.

Run a test

Depending on how you prefer to run load tests, use one of the following methods: the Load Testing dashboard or the BrowserStack Load Testing CLI.

You can start a new test from either the Quick Start page or the Load Tests page on the Load Testing dashboard.

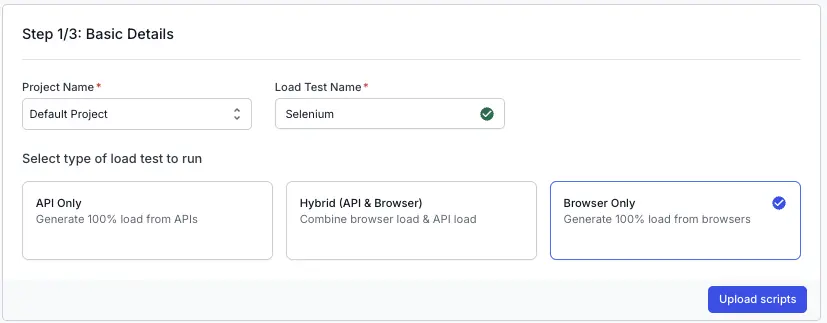

On the dashboard, click Create Load Test.

Enter a Test Name for your load test, select Browser Only, and click Upload scripts.

Upload scripts

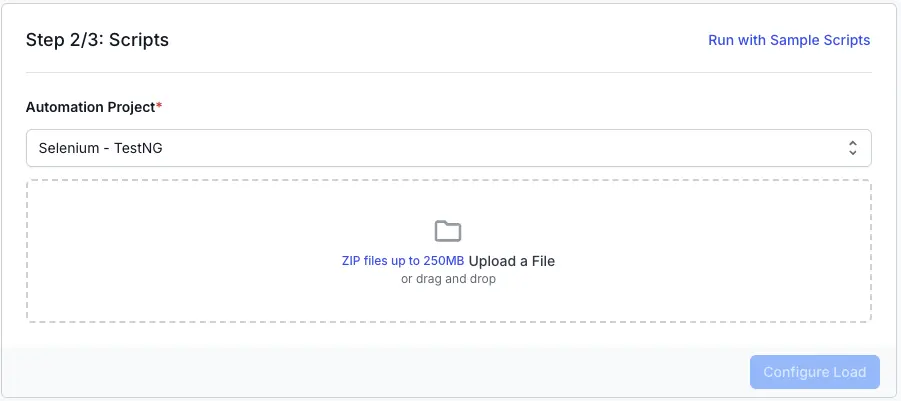

In this step, upload your Selenium automation project as a ZIP file. Select your framework (for example, Selenium - TestNG) from the dropdown, then drag and drop your ZIP file (up to 250MB) or click to select it.

Before you zip and upload your Selenium automation project, replace local WebDriver instances (such as new ChromeDriver()) with RemoteWebDriver so that your tests run on the remote setup. Set the remote URL to http://localhost:4444/wd/hub.

Example

```java
// caps: your existing browser options, for example new ChromeOptions()
RemoteWebDriver driver = new RemoteWebDriver(new URL("http://localhost:4444/wd/hub"), caps);
```

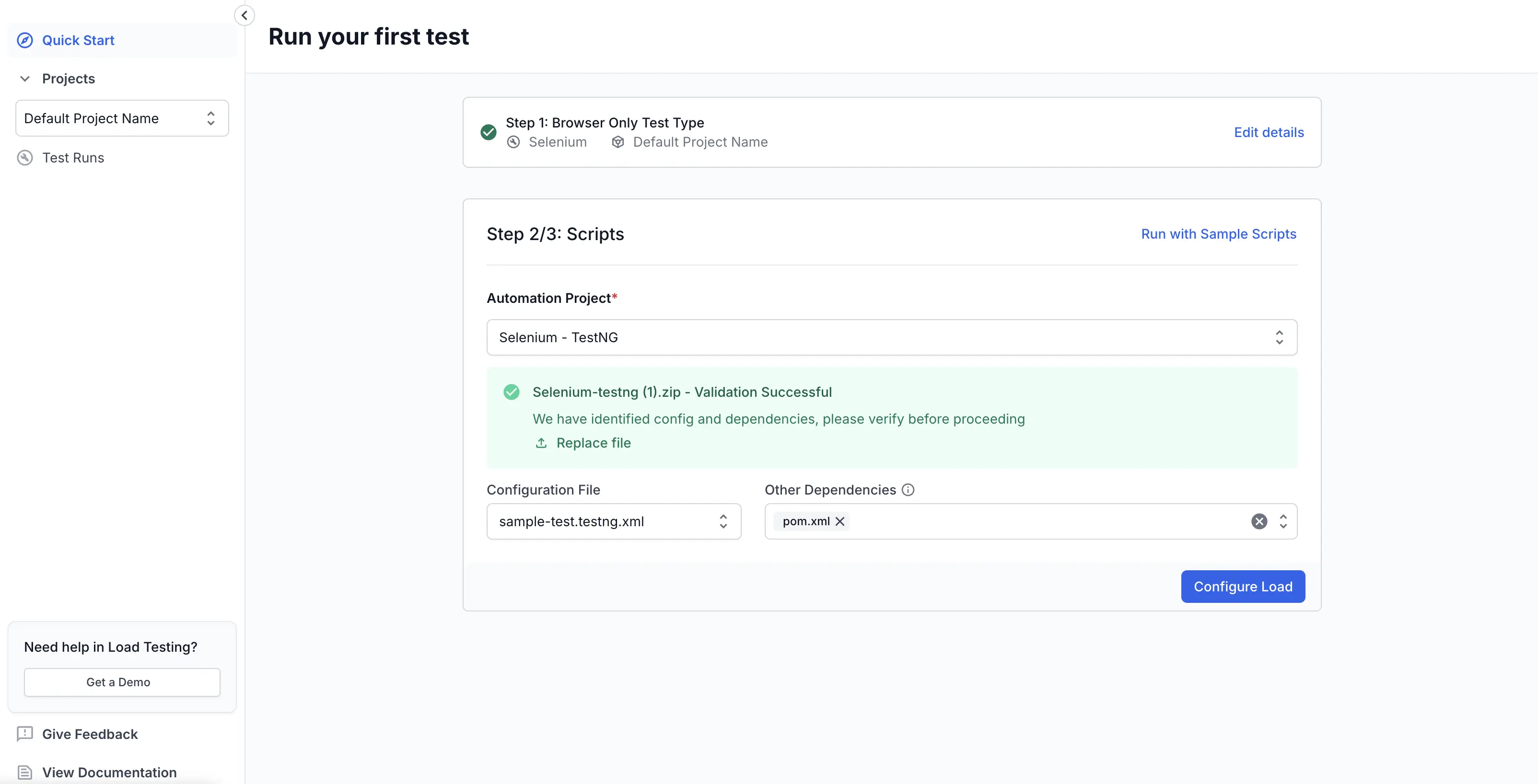

After you upload your ZIP file, the dashboard automatically validates your project. If validation is successful, you will see a confirmation message and a summary of the detected configuration and dependencies.

Review and confirm the configuration file (your TestNG configuration file) and any dependency files (the path to pom.xml) detected in your project.

Ensure that your pom.xml and TestNG config files are placed at the root level of your project.
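If you prepare the ZIP file from a script rather than by hand, the following Java sketch checks that pom.xml and your TestNG configuration file sit at the project root, zips the project with entry names relative to that root, and compares the archive size with the 250MB upload limit. It uses only the JDK. The class name ProjectZipper, the file name testng.xml used in the example, and the reading of 250MB as 250 × 1024 × 1024 bytes are assumptions for illustration; none of this is part of BrowserStack's tooling.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;
import java.util.zip.ZipEntry;
import java.util.zip.ZipOutputStream;

/** Hypothetical pre-upload helper; not part of BrowserStack's tooling. */
final class ProjectZipper {
    // Assumption: the "250MB" limit is read as 250 MiB (250 * 1024 * 1024 bytes).
    static final long MAX_ZIP_BYTES = 250L * 1024 * 1024;

    private ProjectZipper() {}

    /** Returns the required root-level files (pom.xml and the test config) that are missing. */
    static List<String> missingRootFiles(Path projectRoot, String testConfigName) {
        return Stream.of("pom.xml", testConfigName)
                .filter(name -> !Files.isRegularFile(projectRoot.resolve(name)))
                .collect(Collectors.toList());
    }

    /** Zips every regular file under projectRoot, using entry names relative to the root. */
    static void zip(Path projectRoot, Path zipFile) throws IOException {
        Path target = zipFile.toAbsolutePath().normalize();
        List<Path> files;
        try (Stream<Path> walk = Files.walk(projectRoot)) {
            files = walk.filter(Files::isRegularFile)
                    // Skip a previous copy of the archive if it lives inside the project.
                    .filter(p -> !p.toAbsolutePath().normalize().equals(target))
                    .collect(Collectors.toList());
        }
        try (ZipOutputStream zos = new ZipOutputStream(Files.newOutputStream(zipFile))) {
            for (Path file : files) {
                // Entry names use forward slashes on every OS, so pom.xml lands at the archive root.
                String entryName = projectRoot.relativize(file).toString().replace('\\', '/');
                zos.putNextEntry(new ZipEntry(entryName));
                Files.copy(file, zos);
                zos.closeEntry();
            }
        }
    }

    /** Returns true if the archive is within the assumed upload limit. */
    static boolean withinUploadLimit(Path zipFile) throws IOException {
        return Files.size(zipFile) <= MAX_ZIP_BYTES;
    }
}
```

For example, ProjectZipper.missingRootFiles(Path.of("."), "testng.xml") returns an empty list when both files are at the root. If the archive exceeds the limit, consider leaving out build output such as Maven's target/ directory.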

Once you have verified the configuration, click Configure Load to move to the next step.

You can also run load tests using the sample scripts if you want to try out the feature before uploading your own files.

You can configure the following load parameters to customize your load test:

Run test

After you finish configuring load parameters, click RUN TEST.

Download the BrowserStack Load Testing CLI

Download the CLI based on your operating system and place it at the root directory of your Selenium project:

Initialize your project for Load Testing

Run the following command from the root directory of your test project to generate the browserstack-load.yml file, which contains the configuration required to define and run your load test:

Use the framework and language arguments from the following table that match your project:

| Framework | Arguments |
|---|---|
| Selenium - TestNG | --framework testng --language java |
| Selenium - JUnit | --framework junit --language java |
| Selenium - Java | --framework selenium --language java |

Configure your Load Test

Open the generated browserstack-load.yml file and update it with the relevant test details. Here’s a sample configuration:

Specify the test type

testType defines the type of load test that you want to run. Currently, Playwright, Selenium, WebdriverIO, and Nightwatch are supported. Set this to Selenium for a load test that uses a Selenium test suite.

Specify the language

Set the language to java.

Currently, only Selenium with Java is supported, either as a Java (vanilla) project or with TestNG, TestNG Cucumber, or JUnit.

For a Java (vanilla) project, you need to specify only the language parameter.

For TestNG, TestNG Cucumber, or JUnit projects, you must also set the framework parameter to testng, cucumber-testng, or junit.

If you omit the framework parameter, the platform treats the project as a Java (vanilla) project.

Specify the test framework

Set framework to junit, testng, or cucumber-testng (for TestNG Cucumber), depending on your testing framework.
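The language and framework rules above can be summarized as a small resolver. This is a hypothetical Java sketch that mirrors the documented behavior of the browserstack-load.yml fields: language must be java, an omitted framework means a vanilla Java project, and otherwise framework is testng, junit, or cucumber-testng. ProjectType and ProjectTypeResolver are illustrative names, not part of any BrowserStack API.

```java
import java.util.Optional;

/** Effective project type derived from the language and framework fields. */
enum ProjectType { JAVA_VANILLA, TESTNG, JUNIT, CUCUMBER_TESTNG }

/** Hypothetical sketch of the documented language/framework rules. */
final class ProjectTypeResolver {
    private ProjectTypeResolver() {}

    static ProjectType resolve(String language, Optional<String> framework) {
        // Only Java is supported for Selenium load tests.
        if (!"java".equals(language)) {
            throw new IllegalArgumentException("Unsupported language: " + language);
        }
        // No framework parameter: the project is treated as vanilla Java.
        if (framework.isEmpty()) {
            return ProjectType.JAVA_VANILLA;
        }
        switch (framework.get()) {
            case "testng":
                return ProjectType.TESTNG;
            case "junit":
                return ProjectType.JUNIT;
            case "cucumber-testng":
                return ProjectType.CUCUMBER_TESTNG;
            default:
                throw new IllegalArgumentException("Unsupported framework: " + framework.get());
        }
    }
}
```

For example, resolve("java", Optional.empty()) yields JAVA_VANILLA, which matches the rule that omitting framework treats the project as vanilla Java.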

Specify the paths to your TestNG configuration file and pom.xml file

The files block specifies the key files required to install dependencies and define which tests should be executed.

- Under dependencies, list the paths to the files required for installing dependencies. For Java (Maven) projects, include the path to your pom.xml file. Note: Only Maven projects are currently supported.

- Under testConfigs, list the paths to test configuration files. For TestNG, add the path to your testng.xml file. Note: Only one configuration file is supported per test run. If you specify multiple files, only the first one is used.

Ensure all referenced files are relative to the project root.
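As a sketch of the files rules above, the following hypothetical Java helper checks that paths are relative to the project root, that dependencies includes a pom.xml (since only Maven projects are supported), and returns the single testConfigs entry that would be used. FilesBlock and its methods are illustrative and are not part of the BrowserStack CLI.

```java
import java.nio.file.Path;
import java.util.List;

/** Hypothetical checks for the files block of browserstack-load.yml. */
final class FilesBlock {
    private FilesBlock() {}

    /** Rejects any path that is not relative to the project root. */
    static void requireRelative(List<String> paths) {
        for (String p : paths) {
            if (Path.of(p).isAbsolute()) {
                throw new IllegalArgumentException("Path must be relative to the project root: " + p);
            }
        }
    }

    /** Only Maven projects are supported, so dependencies must list a pom.xml. */
    static void requireMavenDependency(List<String> dependencies) {
        requireRelative(dependencies);
        boolean hasPom = dependencies.stream()
                .map(p -> Path.of(p).getFileName())
                .anyMatch(name -> name != null && name.toString().equals("pom.xml"));
        if (!hasPom) {
            throw new IllegalArgumentException("dependencies must include a pom.xml");
        }
    }

    /** Returns the test config that will be used: only the first entry counts. */
    static String effectiveTestConfig(List<String> testConfigs) {
        if (testConfigs.isEmpty()) {
            throw new IllegalArgumentException("testConfigs must list at least one file");
        }
        requireRelative(testConfigs);
        return testConfigs.get(0);
    }
}
```

For example, effectiveTestConfig(List.of("testng.xml", "suites/regression.xml")) returns "testng.xml"; the second file would be ignored.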

Set reporting structure

Use projectName to group related tests under the same project on the dashboard. Use testName to group multiple runs of the same test.

Both projectName and testName must remain consistent across different runs of the same test.

You can use the following characters in projectName and testName:

- Letters (A–Z, a–z)

- Digits (0–9)

- Periods (.), colons (:), hyphens (-), square brackets ([]), forward slashes (/), at signs (@), ampersands (&), single quotes ('), and underscores (_)

All other characters are ignored.
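The following Java sketch applies the character rules above to a proposed projectName or testName. It assumes that "ignored" means disallowed characters are dropped from the name, which is an interpretation rather than documented behavior; NameSanitizer is a hypothetical name. Checking names with something like isClean before you put them in browserstack-load.yml helps keep them identical across runs.

```java
import java.util.regex.Pattern;

/** Hypothetical helper for projectName and testName values. */
final class NameSanitizer {
    // Anything outside the allowed set: letters, digits, . : - [ ] / @ & ' _
    private static final Pattern DISALLOWED = Pattern.compile("[^A-Za-z0-9.:\\[\\]/@&'_-]");

    private NameSanitizer() {}

    /** Drops every character outside the allowed set (assumed meaning of "ignored"). */
    static String sanitize(String name) {
        return DISALLOWED.matcher(name).replaceAll("");
    }

    /** True if the name contains only allowed characters and would be kept as-is. */
    static boolean isClean(String name) {
        return sanitize(name).equals(name);
    }
}
```

For example, sanitize("Checkout Flow #2 [v1.0]") returns "CheckoutFlow2[v1.0]", because spaces and # are not in the allowed set.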

You can configure the following load parameters to customize your load test:

- Load profiles

- Load zones

- Set thresholds

- Per-VU external inputs with test data

- Environment variables

- Configure multiple scenarios

- Capture response details

- Network throttling

Use RemoteWebDriver

Replace local WebDriver instances (such as new ChromeDriver()) with RemoteWebDriver to run tests on the remote setup.

Set the remote URL to http://localhost:4444/wd/hub.

Run the Load Test

Run the given command to start your test:

Check out the FAQs section to get answers to commonly asked questions.

View test results

When the test runs, you get a link to the results dashboard, where you can analyze key metrics such as:

- Response time

- Request throughput

- Web vitals (LCP, CLS, INP, etc.)

- Errors and bottlenecks

If you run into issues, try the troubleshooting steps or contact us for help.
