Most testers assume that if their application works flawlessly in one language, it will work just as well in others. After all, the logic is the same, right? Input fields accept text, buttons respond to clicks, and pages render perfectly on your test devices. It seems straightforward.

But what if I told you that this assumption can silently mask critical failures? Dates might flip, currencies might misalign, text might overflow, or right-to-left layouts could break the entire interface. These issues stay invisible unless you test in the exact international context where they occur.

Internationalization testing ensures your software genuinely adapts to the locales and languages it targets. Mastering it helps you catch hidden bugs before users ever see them, bugs that would otherwise frustrate people worldwide and damage your product's credibility.

Overview

What is Internationalization Testing?

Internationalization testing (I18N testing) is a type of software testing that checks whether an application is built to support multiple languages, cultures, and regional formats without changing the core code. It ensures the software has a global-ready foundation that can adapt seamlessly to different environments.

Key aspects of Internationalization Testing:

- Language Compatibility: Ensures the app handles and displays different character sets and languages correctly, including right-to-left (RTL) layouts.

- Functionality Across Environments: Verifies that core features work as expected in different locales, including correct handling of dates, times, currencies, numbers, and sorting rules.

- UI Scalability and Layout: Checks that the interface accommodates text expansion or contraction without truncation, overlapping elements, or broken layouts.

- Input Method Support: Confirms the app supports various input methods, including keyboards and Input Method Editors (IMEs) for complex languages.

- Database Unicode Support: Ensures the database can store and retrieve Unicode characters without data loss or corruption.

- Interoperability: Tests consistent behavior across platforms, operating systems, and app versions.

- Installation Testing: Verifies that installation prompts and messages display correctly in different language environments.
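
The database bullet above is one of the easiest aspects to check automatically. The sketch below assumes Python's built-in `sqlite3` module as a stand-in for your real database; the `people` table, the sample strings, and the `find_roundtrip_failures` helper are hypothetical. It writes multilingual text, reads it back, and flags any value that did not survive unchanged.

```python
import sqlite3

# Hypothetical multilingual samples; extend them with scripts your users actually enter.
SAMPLES = [
    "José Müller",                          # Latin with diacritics
    "山田 太郎",                             # Japanese (CJK)
    "محمد عبد الله",                         # Arabic (right-to-left)
    "नमस्ते",                                 # Devanagari with combining vowel signs
    "\U0001F469\U0001F3FD\u200D\U0001F4BB",  # emoji ZWJ sequence outside the BMP
]


def find_roundtrip_failures(conn: sqlite3.Connection, samples: list[str]) -> list[str]:
    """Hypothetical helper: insert each sample, read it back, return those that changed."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS people (id INTEGER PRIMARY KEY, name TEXT NOT NULL)"
    )
    failures = []
    for text in samples:
        cur = conn.execute("INSERT INTO people (name) VALUES (?)", (text,))
        row = conn.execute(
            "SELECT name FROM people WHERE id = ?", (cur.lastrowid,)
        ).fetchone()
        # Compare exact code points: substitution, truncation, or "?" replacement all count.
        if row is None or row[0] != text:
            failures.append(text)
    return failures


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    try:
        print(find_roundtrip_failures(conn, SAMPLES))  # expected: []
    finally:
        conn.close()
```

SQLite stores these strings as UTF-8 and should return an empty list. Running the same check against a MySQL table that uses the legacy `utf8` (utf8mb3) character set is a common way to surface failures, because that charset cannot store four-byte characters such as the emoji sample.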

How Does Internationalization Testing Differ from Localization Testing?

Internationalization focuses on building the software for adaptability, while localization is the process of customizing it for a specific language or region with translations, local currencies, and formats. Internationalization testing usually comes first to ensure the software can be localized without issues.

In this article, I will show how to perform internationalization testing effectively and avoid common pitfalls.

What is Internationalization Testing?

Internationalization testing, often abbreviated as I18N testing, is the process of verifying that a software application has been designed and developed to adapt to different languages, cultures, and regional formats without requiring changes to the source code.

Internationalization testing ensures the product is global-ready, meaning it can display text, process data, and function correctly across multiple locales once localized. It is used when a product is intended for multiple regions or languages and needs to work correctly in each environment.

Importance of Internationalization Testing in 2026

Internationalization testing is important because it validates whether a product can operate correctly across regions. It creates confidence that features, layouts, and data formats hold up when the language or locale changes.

Key reasons why internationalization testing matters:

- Hidden format failures become visible: Even a small shift like switching from MM/DD/YYYY to DD/MM/YYYY can break booking flows, payment systems, or analytics. Testing reveals logic that silently depends on a single culture.

- UI design is pushed beyond English constraints: Text expansion in German or French, or vertical and right-to-left scripts, can expose weak layout structures. Designers learn which elements need flexibility before localization begins.

- Core functionality is validated, not just translation: Sorting, search, validation, and calculations often change per locale. For example, alphabetical sorting rules differ by language, and currency rounding may follow local rules.

- Data handling is future-proofed: Unicode storage, encoding, and retrieval are tested so multilingual inputs do not corrupt the database. This prevents failures when users enter names or addresses in non-Latin scripts.

- Operational consistency is preserved across platforms: Browsers, operating systems, and devices each interpret locale rules differently. Internationalization testing confirms that the feature behaves the same on all target environments.

- Expansion becomes a product strategy, not an engineering struggle: Once global readiness is proven, new markets can be added through configuration rather than re-engineering. Releases become faster, more predictable, and more scalable.
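
To see how a format switch can silently change meaning, the sketch below parses one string under both the US and the day-first convention using only Python's standard library. The `is_ambiguous` helper is a hypothetical test utility, not part of any framework.

```python
from datetime import datetime

# The same string means different dates under MM/DD/YYYY and DD/MM/YYYY.
RAW = "03/04/2026"

us_reading = datetime.strptime(RAW, "%m/%d/%Y").date()  # March 4, 2026
eu_reading = datetime.strptime(RAW, "%d/%m/%Y").date()  # April 3, 2026


def is_ambiguous(raw: str) -> bool:
    """Hypothetical helper: True if raw is valid under both conventions but means different dates."""
    try:
        us = datetime.strptime(raw, "%m/%d/%Y").date()
        eu = datetime.strptime(raw, "%d/%m/%Y").date()
    except ValueError:
        return False  # only one interpretation (or neither) is a valid date
    return us != eu


if __name__ == "__main__":
    print(us_reading.isoformat(), eu_reading.isoformat())  # 2026-03-04 2026-04-03
    print(is_ambiguous("13/04/2026"))  # False: month 13 does not exist
```

A common mitigation is to store and exchange dates in an unambiguous form such as ISO 8601 (`YYYY-MM-DD`) and apply locale-specific formatting only when displaying them to the user.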

Where is Internationalization Testing Done?

Internationalization testing is performed in environments that simulate different language, locale, and regional settings. It is typically done on a combination of real devices, operating systems, browsers, and backend systems configured with target locale data.

Key environments where internationalization testing takes place:

- Operating system locale settings: Changing system language, regional formats, input methods, and keyboard layouts to validate how the application responds.

- Browser environments: Testing locale-dependent rendering, date and number formatting, and font fallback behavior across browsers like Chrome, Firefox, Safari, and Edge.

- Mobile devices: Verifying behavior on real Android and iOS devices where language, region, and text-direction settings impact UI layout and input handling.

- Server and backend configurations: Testing APIs and services that rely on locale-specific logic such as sorting, formatting, or currency conversion.

- Database layers: Validating that the database can store and retrieve Unicode data when records contain multilingual text.

- Cross-platform setups: Running tests across desktop, mobile, web, and hybrid applications to ensure consistent global behavior across platforms.
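
Because each of these environments interprets locale settings differently, it can help to generate the combinations explicitly instead of picking them ad hoc. A minimal sketch, assuming hypothetical locale and OS/browser lists; the `build_matrix` helper only produces test configurations and does not call any real device cloud or browser API.

```python
from itertools import product

# Hypothetical representative locales, one per stress category.
LOCALES = {
    "en-US": "baseline",
    "de-DE": "text expansion",
    "ja-JP": "CJK / IME input",
    "ar-SA": "right-to-left",
}

# Hypothetical OS/browser pairs; replace with your own support matrix.
ENVIRONMENTS = [
    ("Windows 11", "Chrome"),
    ("macOS", "Safari"),
    ("Android", "Chrome"),
    ("iOS", "Safari"),
]


def build_matrix(
    locales: dict[str, str], environments: list[tuple[str, str]]
) -> list[dict[str, str]]:
    """Cross every locale with every OS/browser pair into flat test configurations."""
    return [
        {"locale": loc, "reason": reason, "os": os_name, "browser": browser}
        for (loc, reason), (os_name, browser) in product(locales.items(), environments)
    ]


matrix = build_matrix(LOCALES, ENVIRONMENTS)

if __name__ == "__main__":
    for config in matrix:
        print(config)
```

Choosing locales by category (baseline, expansion, CJK, RTL) rather than by market keeps the matrix small while still covering the most stressful text conditions.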


Difference between Internationalization and Localization Testing

While internationalization testing focuses on making a product usable by a global audience, localization testing makes it usable by an audience in a specific region.


Below is a table of key differences between both:

| Aspect | Internationalization Testing | Localization Testing |
|---|---|---|
| Purpose | Prepares the software for global use without code changes. | Customizes the software for a specific region or culture. |
| Focus | Global compatibility and flexibility. | Region-specific features like language and culture. |
| Examples | Character encoding, time zones, UI scalability. | Translations, local currencies, regional formats. |
| Timing | Done before localization to enable easy adaptations. | Done after internationalization to tailor for specific regions. |
| Goal | Ensure the software can be adapted to various regions. | Ensure the software works correctly in a specific locale. |

In fact, internationalization and localization testing together form globalization testing, a mainstay of modern application development.


Example of a Scenario for Internationalization Testing

Consider the following scenario.

Suppose you are a Japanese speaker living in the USA. You prefer to browse apps and websites in Japanese, but most software there defaults to English.

You buy a new phone and open Amazon on it for the first time. The language is set to English by default, but when you set your preferred language to Japanese, all the content switches to Japanese without affecting any features or offerings.

Not only will the language change to Japanese, but the recommendations might also change based on the specific preferences, occasions, norms, and mores in Japanese culture.

All these changes are made possible by internationalization efforts and testing. To be successful, sites and apps must account for what users from different cultures want and prioritize. For example, an app for an Indian audience is best offered in multiple Indian languages, given the country's linguistic and cultural diversity. This would be the purview of localization testing.
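
Behind a switch like this, the application has to read the user's preferred languages and fall back sensibly when there is no exact match. On the web, that preference is commonly sent in the HTTP `Accept-Language` header. The sketch below is a simplified negotiation, assuming a subset of the header syntax (no `*` wildcard handling) and hypothetical helper names; it is not how Amazon or any particular framework implements it.

```python
def parse_accept_language(header: str) -> list[tuple[str, float]]:
    """Hypothetical helper: parse 'ja-JP,ja;q=0.9' into (tag, q) pairs, highest q first."""
    entries = []
    for part in header.split(","):
        part = part.strip()
        if not part:
            continue
        tag, _, params = part.partition(";")
        q = 1.0  # a missing weight means full preference
        params = params.strip()
        if params.startswith("q="):
            try:
                q = float(params[2:])
            except ValueError:
                q = 0.0  # treat a malformed weight as "not acceptable"
        entries.append((tag.strip().lower(), q))
    # sorted() is stable, so equal weights keep the client's original order.
    return sorted(entries, key=lambda entry: entry[1], reverse=True)


def negotiate(header: str, supported: list[str], default: str = "en") -> str:
    """Hypothetical helper: pick the best supported language, falling back from 'ja-jp' to 'ja'."""
    supported_lower = {s.lower(): s for s in supported}
    for tag, q in parse_accept_language(header):
        if q <= 0:
            continue  # q=0 explicitly rejects a language
        if tag in supported_lower:
            return supported_lower[tag]
        primary = tag.split("-")[0]  # 'ja-jp' -> 'ja'
        if primary in supported_lower:
            return supported_lower[primary]
    return default


if __name__ == "__main__":
    # Example header for a user who prefers Japanese, then English.
    print(negotiate("ja-JP,ja;q=0.9,en-US;q=0.8", ["en", "ja"]))  # ja
```

Testing this path means checking both the happy case and the fallbacks: a regional tag that only matches its base language, an unsupported language, and an explicit `q=0` rejection.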

Challenges of Internationalization Testing

Internationalization testing often reveals issues that are not obvious during regular functional testing. These challenges usually stem from assumptions made during development, inconsistencies across platforms, and the complexity of supporting multiple scripts and formats.

Key challenges include:

- Assumptions baked into the codebase: Many systems are built with implicit expectations such as English text length, left-to-right layout, or U.S. formatting rules. These assumptions cause unexpected failures when the same UI or logic is used in other languages or regions.

- Unpredictable text expansion and contraction: Languages like German, French, or Russian expand UI text significantly, while East Asian scripts may shrink it. Testing must account for how labels, buttons, and error messages reshape layouts in ways designers never planned for.

- Complexity of right-to-left support: RTL languages require a mirrored UI, reversed navigation patterns, flipped icons, and bidirectional text handling. Even small elements like arrows, sliders, and pagination can break if mirroring is not implemented correctly.

- Inconsistent locale behavior across platforms: The same locale may behave differently on Android vs iOS or Chrome vs Safari. Date formatting, font fallback, input behavior, and sorting rules often change by platform, which increases testing scope.

- Font rendering and fallback issues: Some languages require specific font families or fallback rules. Characters may render incorrectly, break alignment, or appear as tofu blocks when fonts are not configured correctly across platforms.

- Complex data validation rules: What counts as a valid address, phone number, postal code, or name varies dramatically between countries. Validation logic often fails when tested against real global data.

- Content encoding and Unicode handling problems: Text corruption, broken characters, and truncated strings occur when the database or API layer does not manage Unicode consistently. These failures are often subtle and hard to trace.

- Environment setup difficulties: Accurate internationalization testing needs correct locale settings, IMEs, input methods, and multilingual test devices. Setting up and maintaining these test environments becomes time-consuming without dedicated infrastructure.
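
Bidirectional text is where many RTL bugs start, for example when an Arabic sentence begins with a Latin brand name. The sketch below estimates a string's base direction from its first strong character, a simplified form of rules P2 and P3 of the Unicode Bidirectional Algorithm (UAX #9) that ignores isolates and embeddings. It uses only `unicodedata` from Python's standard library; the `base_direction` helper is hypothetical.

```python
import unicodedata


def base_direction(text: str, default: str = "ltr") -> str:
    """Hypothetical helper: guess direction from the first strong character (simplified UAX #9 P2/P3)."""
    for ch in text:
        bidi_class = unicodedata.bidirectional(ch)
        if bidi_class == "L":
            return "ltr"  # strong left-to-right (e.g. Latin, CJK)
        if bidi_class in ("R", "AL"):
            return "rtl"  # strong right-to-left (Hebrew) or Arabic letter
    # Only weak or neutral characters (digits, spaces, punctuation) were found.
    return default


if __name__ == "__main__":
    print(base_direction("مرحبا بكم"))     # rtl
    print(base_direction("2026 مرحبا"))    # rtl: digits are weak, so the Arabic letter decides
    print(base_direction("iPhone للبيع"))  # ltr, even though most of the text is Arabic
```

The last case shows why guessing is fragile. Browsers apply a similar first-strong heuristic for HTML `dir="auto"`, so it is often safer to set direction explicitly from the active locale and reserve heuristics for user-generated content.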

Best Practices for Internationalization Testing

Internationalization testing works best when the product is evaluated early, repeatedly, and across environments that reflect true global conditions. The goal is to ensure that the foundation of the application is flexible enough to support localization without redesign or code rewrites.

Key best practices include:

- Start testing internationalization during development: Early detection of layout inflexibility, hardcoded strings, or locale-specific assumptions prevents expensive refactoring later. Developers and testers can identify patterns that restrict global readiness before they spread across the codebase.

- Use pseudo-localization systematically: Pseudo-localized text exaggerates expansion, introduces accented characters, and simulates script behavior. Techniques such as bracketing strings, adding diacritics, or mirroring text reveal layout failures and string concatenation issues long before real translations exist.

- Validate UI behavior across multiple locale profiles: Instead of testing only one or two languages, test representative categories like RTL scripts, CJK languages, and languages with heavy word expansion. This exposes how your UI behaves under the most stressful text conditions.

- Test functional features with real locale data: Apply actual date formats, currencies, numbering systems, and sorting rules from the target regions, such as Eastern Arabic digits, the Japanese era-based calendar, Indian digit grouping (1,00,000), and the comma decimal separator common in Europe. This ensures functional logic does not break under unfamiliar formats.

- Check for complete Unicode support across the stack: Verify that APIs, databases, logs, file systems, and UI layers consistently process multilingual text. This avoids corruption when users enter names, addresses, or payment data in non-Latin scripts.

- Confirm input method handling on real devices: IMEs for languages like Chinese, Japanese, and Korean behave differently from Latin keyboards. Testing on real Android and iOS devices reveals issues like delayed input, broken suggestions, or cursor misalignment.

- Review layout adaptability with fluid design principles: Ensure constraints, auto-layouts, and responsive components can handle dynamic text sizes and orientation flips. Fixed-width components often break first, so this check reduces UI fragility.

- Run tests across operating systems and browsers: Locale behavior differs subtly between platforms. Testing Chrome, Safari, Firefox, iOS, and Android ensures consistent formatting, font fallback, and text rendering.


- Automate core internationalization checks where possible: Automate format validations, Unicode handling, and locale-specific functional flows to speed up regression cycles. Combine automation with manual checks for layout and usability issues.
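
Pseudo-localization is straightforward to prototype. The sketch below is one possible approach, not a standard algorithm: it swaps Latin letters for accented look-alikes, pads each string by a configurable expansion ratio, and wraps it in brackets so truncation is easy to spot. The accent map, the 40% default expansion, and the `{name}`-style placeholder syntax are assumptions; placeholders are left untouched so string formatting keeps working.

```python
import re

# Assumed accent map: Latin letters -> visually similar accented letters.
ACCENTS = str.maketrans({
    "a": "á", "e": "é", "i": "í", "o": "ö", "u": "ü",
    "A": "Å", "E": "É", "I": "Î", "O": "Ø", "U": "Û",
    "c": "ç", "n": "ñ", "y": "ý",
})

# Assumed placeholder syntax: {name}-style fields as used by str.format.
PLACEHOLDER = re.compile(r"(\{[^{}]*\})")


def pseudo_localize(text: str, expansion: float = 0.4) -> str:
    """Hypothetical helper: accent, expand by ~expansion of the length, and bracket a UI string."""
    # re.split with a capturing group puts placeholders at the odd indices.
    pieces = PLACEHOLDER.split(text)
    body = "".join(
        piece if i % 2 else piece.translate(ACCENTS) for i, piece in enumerate(pieces)
    )
    padding = "~" * round(len(text) * expansion)
    return f"[{body}{padding}]"


if __name__ == "__main__":
    print(pseudo_localize("Save"))                                  # [Sávé~~]
    print(pseudo_localize("Hello, {name}!").format(name="Ana"))     # [Héllö, Ana!~~~~~~]
```

If a bracket is missing on screen, the string was truncated; if a string appears without accents, it was probably hardcoded rather than loaded from resources. On Android, the built-in pseudolocales `en-XA` (accented, expanded text) and `ar-XB` (right-to-left) provide a similar check without custom code.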

How to Perform Internationalization Testing on Real Browsers in 2026

Internationalization issues often appear only when the application runs on actual devices and browsers because each platform handles fonts, input methods, locale settings, and rendering rules differently.

Simulators cannot fully replicate RTL behavior, font fallback, text expansion effects, or locale-driven layout changes. Real environments are the only way to see how global users will truly experience the product.

This is why platforms like BrowserStack, with access to 3500+ real browsers and devices, are essential. They allow testers to verify how their website or application behaves across real device–browser–OS combinations.

Let’s proceed further with an internationalization testing example. Follow the steps below to check common aspects of internationalization on BrowserStack:

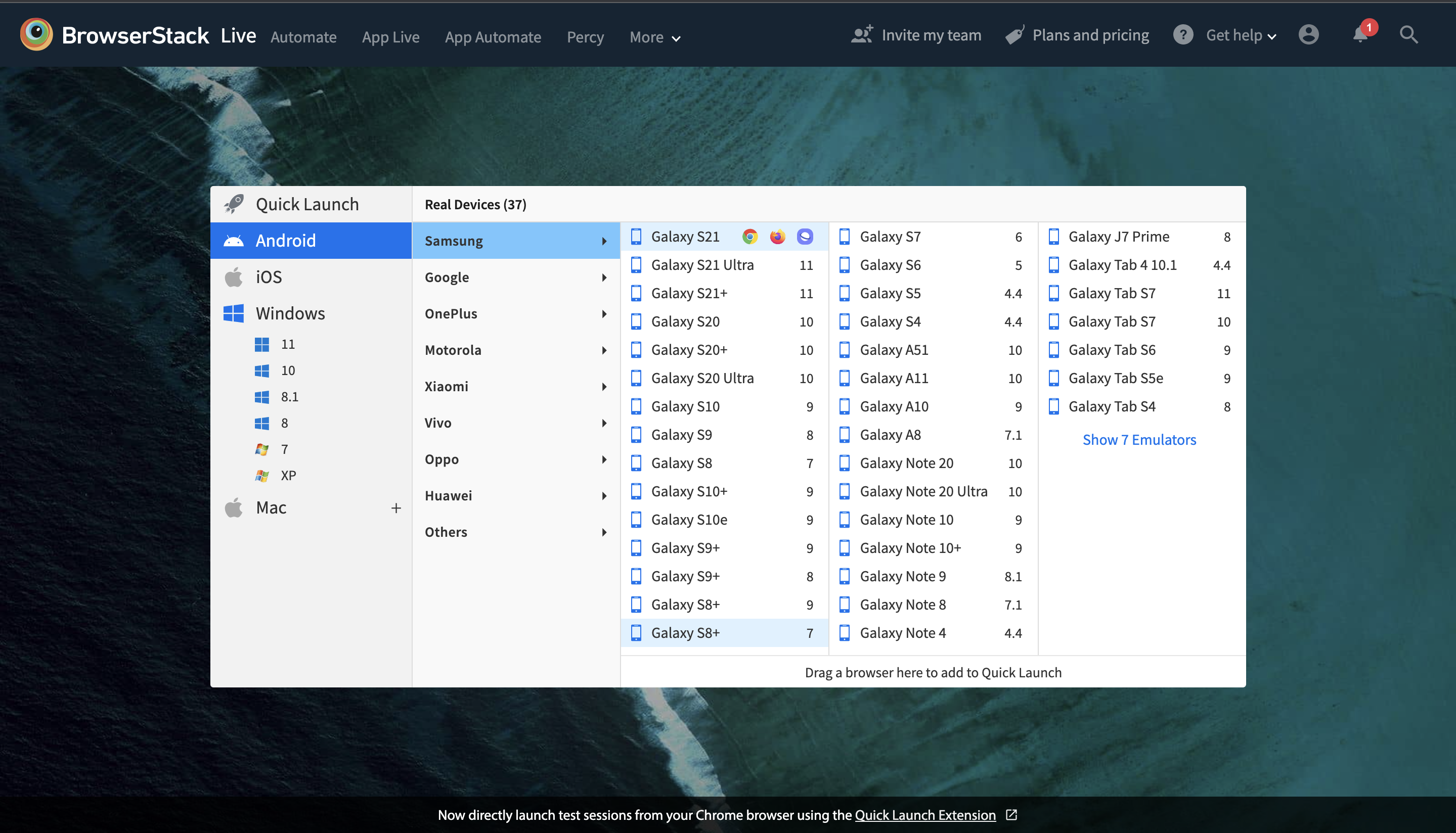

- Sign up for free on BrowserStack Live, or log in if you already have an account.

- You will be taken to the dashboard, where you can choose a device, browser, and OS.

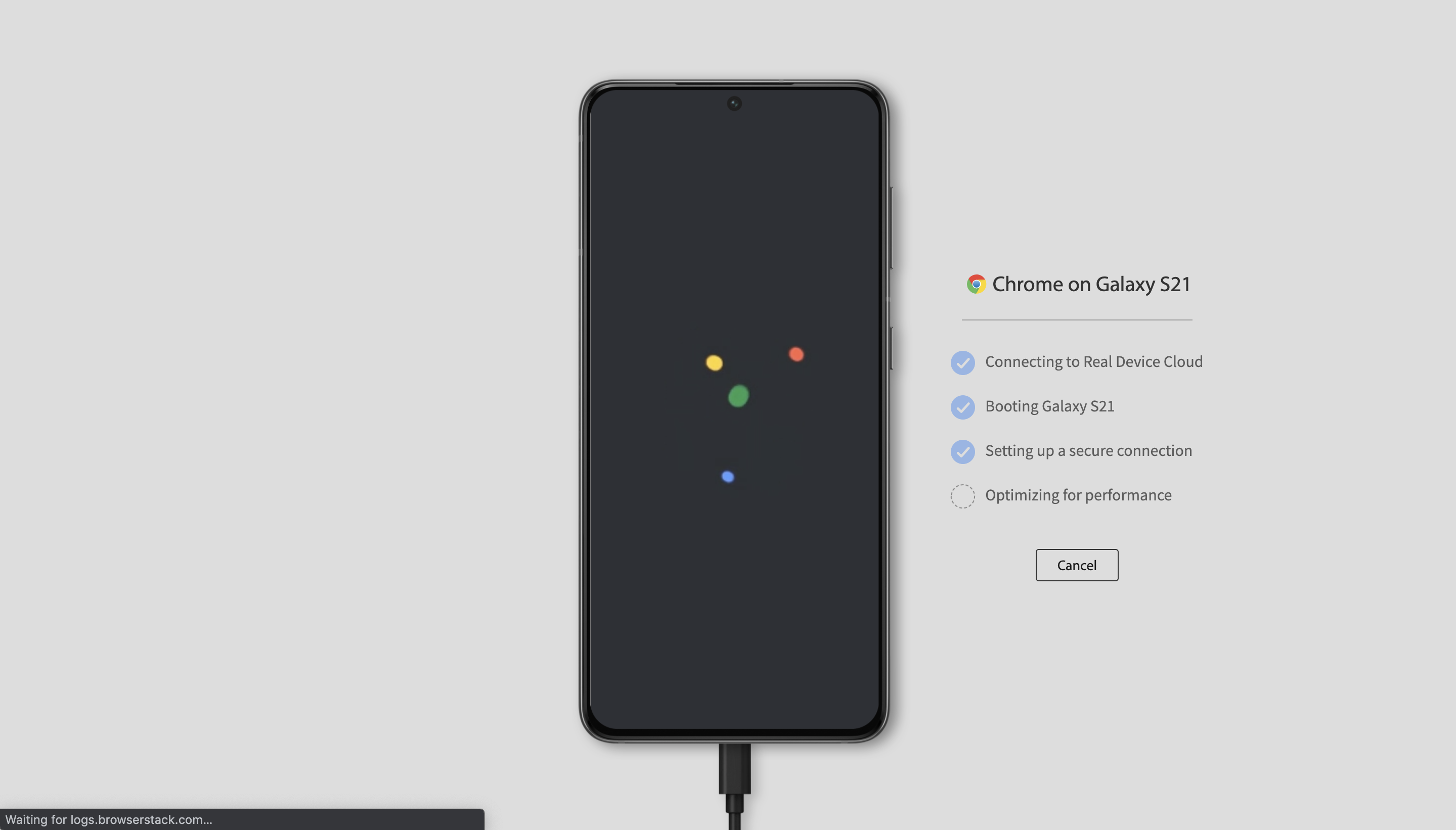

- Choose the browser, device, and OS you want to test on. In this article, we will test a website on Chrome running on a Samsung Galaxy S21.

- Click the browser icon. This launches Chrome on a physical Galaxy S21 in our real device cloud.

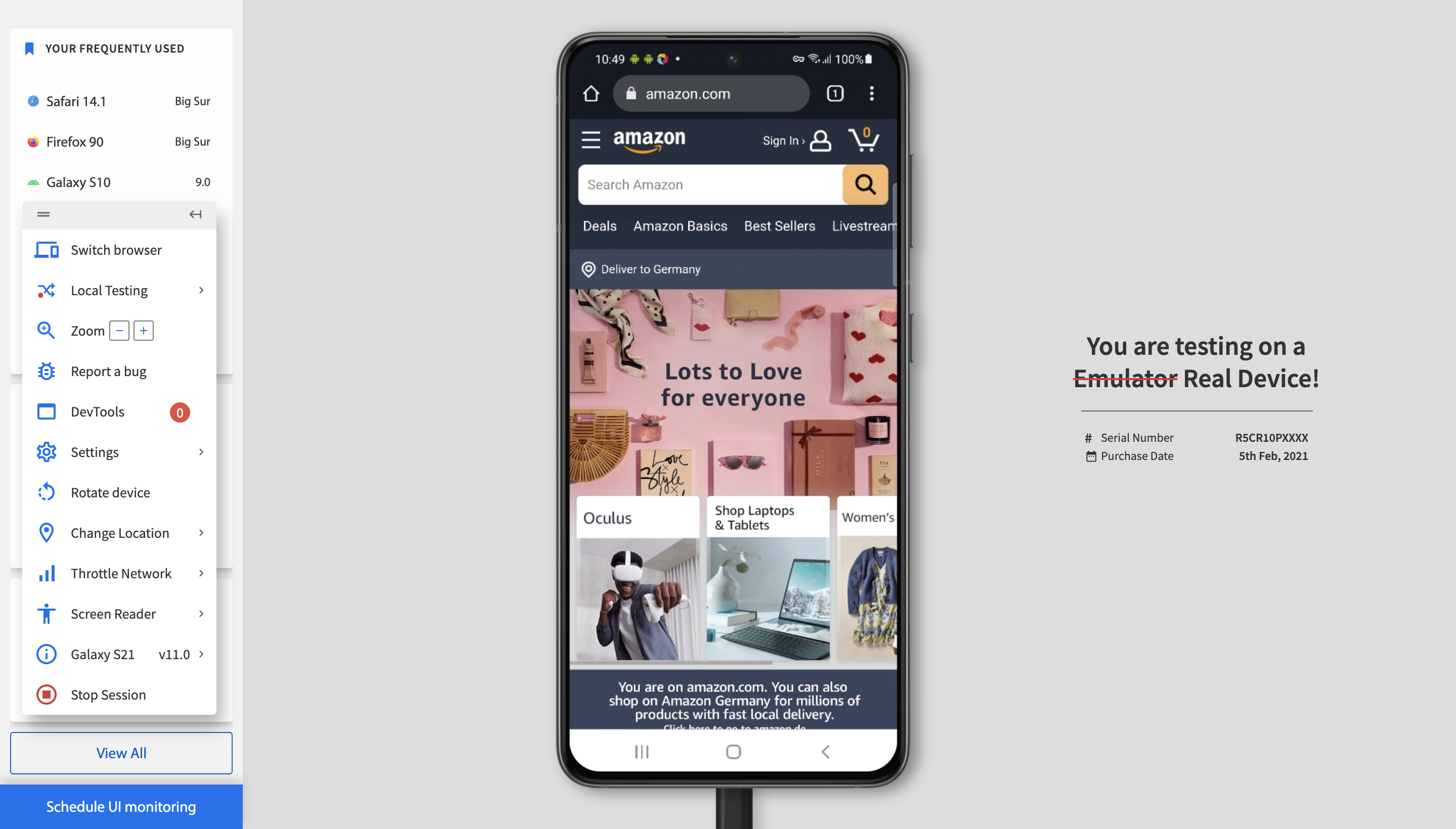

- Once the device loads, navigate to the website to be tested. In this example, we are testing amazon.com.

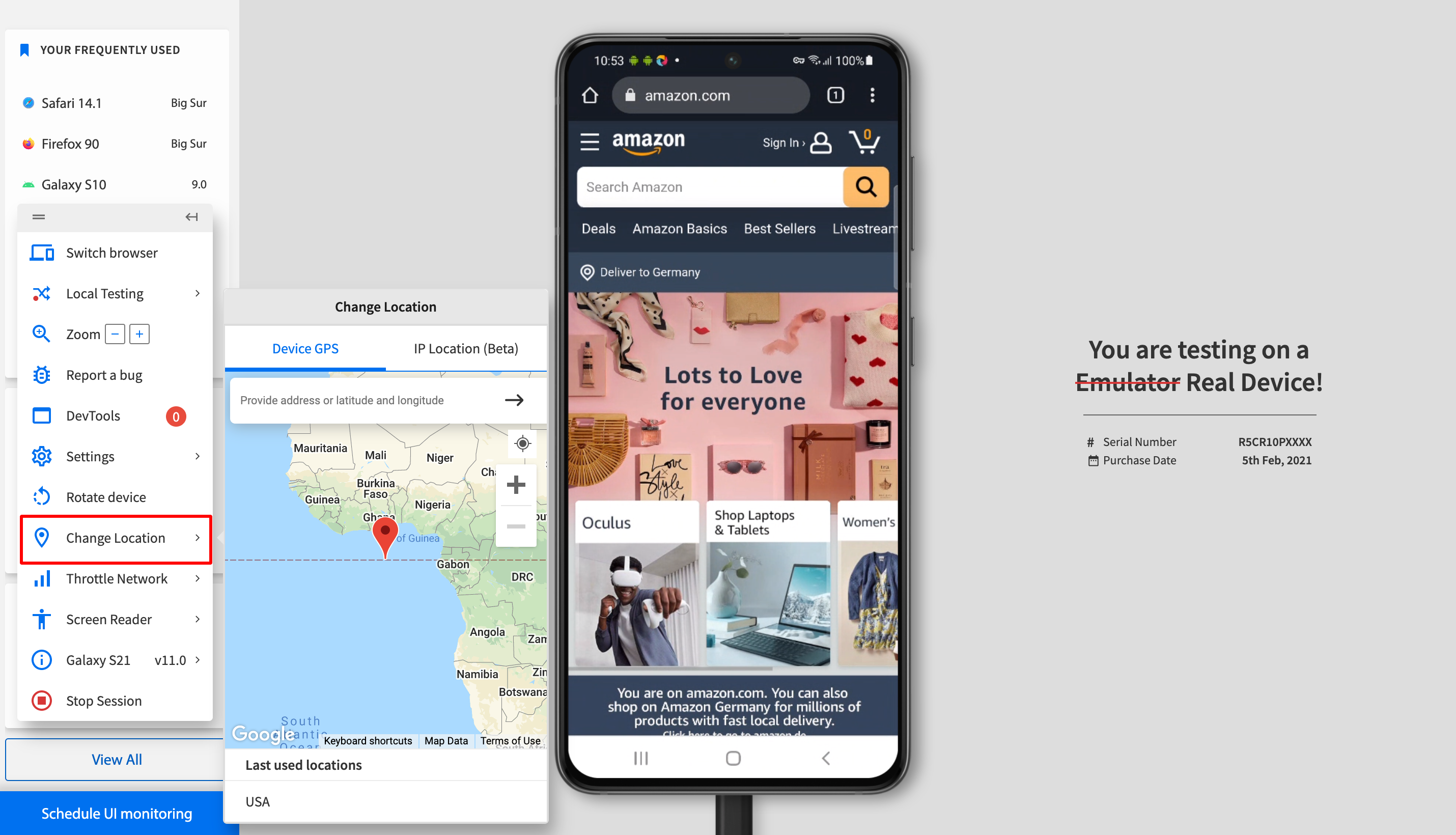

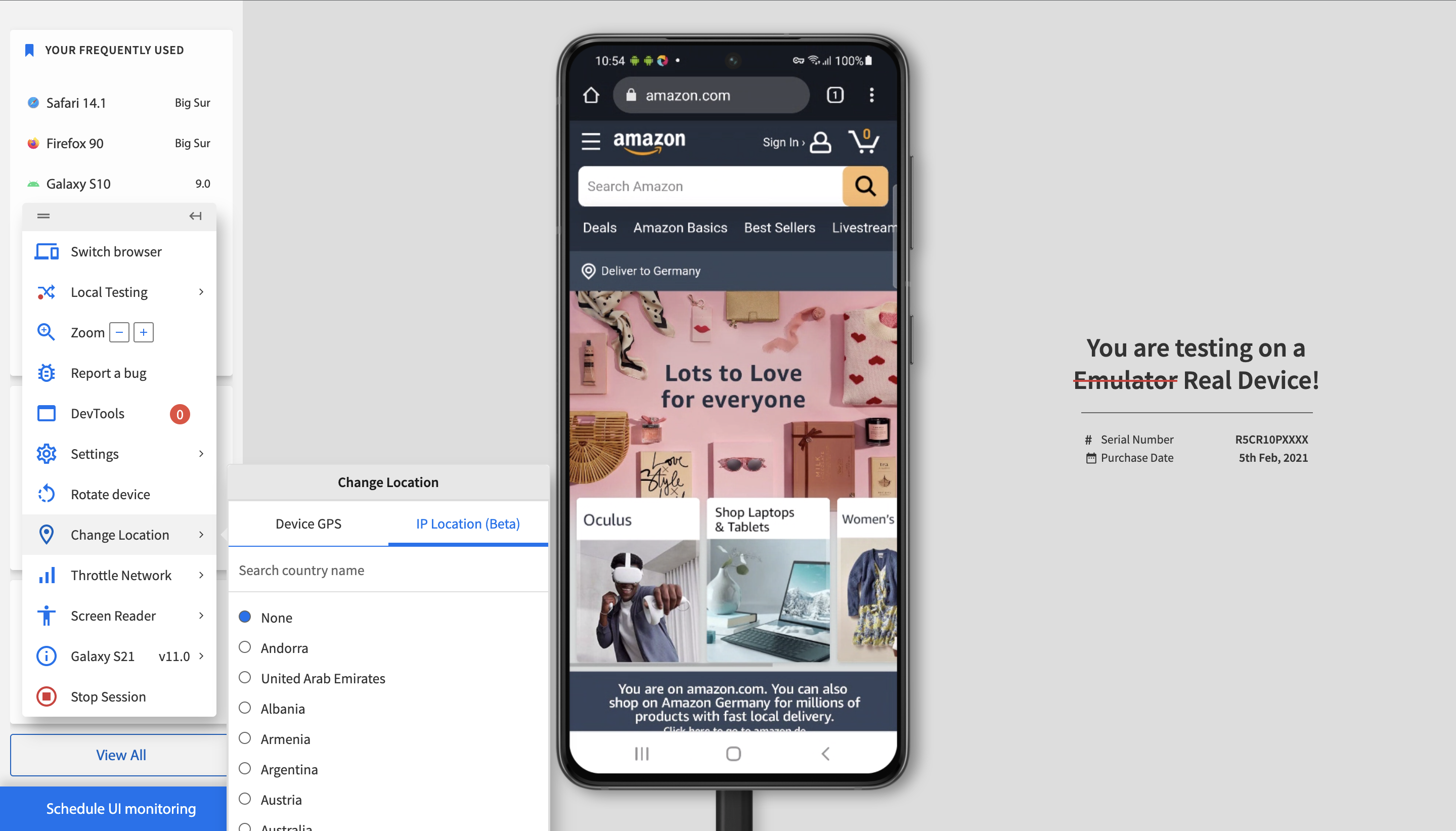

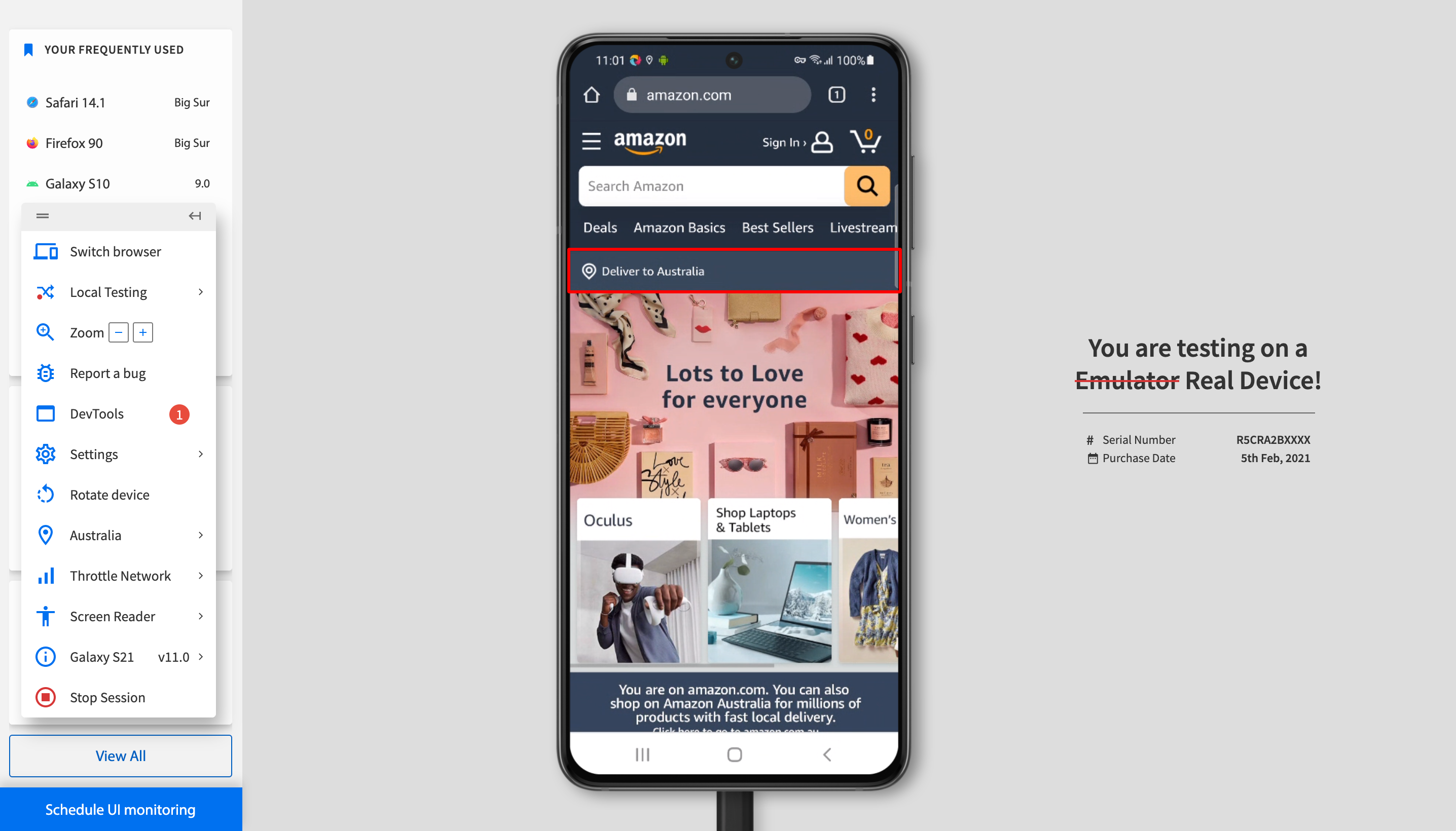

- To start with, let's test how the website appears from different locations. Notice the Change Location option on the menu to the left.

- Choose the country from which you want to check the website. In this case, we have chosen Australia.

Notice that the delivery location has changed to Australia, indicating that you are now viewing the Australian version of the website. From here, you can test other functions of the site as an Australian user would, or repeat the check from any other location you choose.

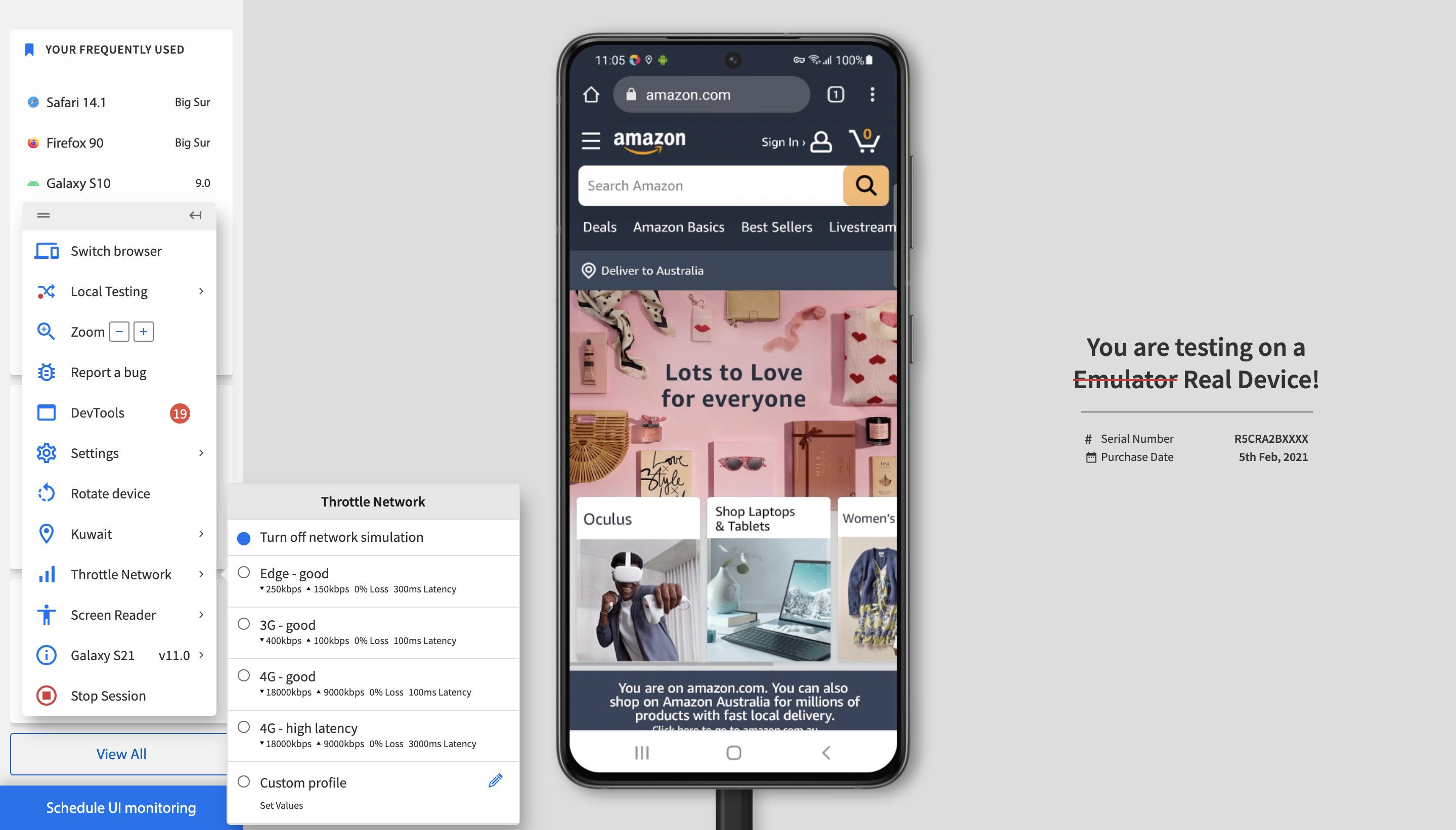

- Now, let's check how the website functions at different internet speeds. An international app will be used by people with varying network connections, so optimizing for low-speed internet is essential.

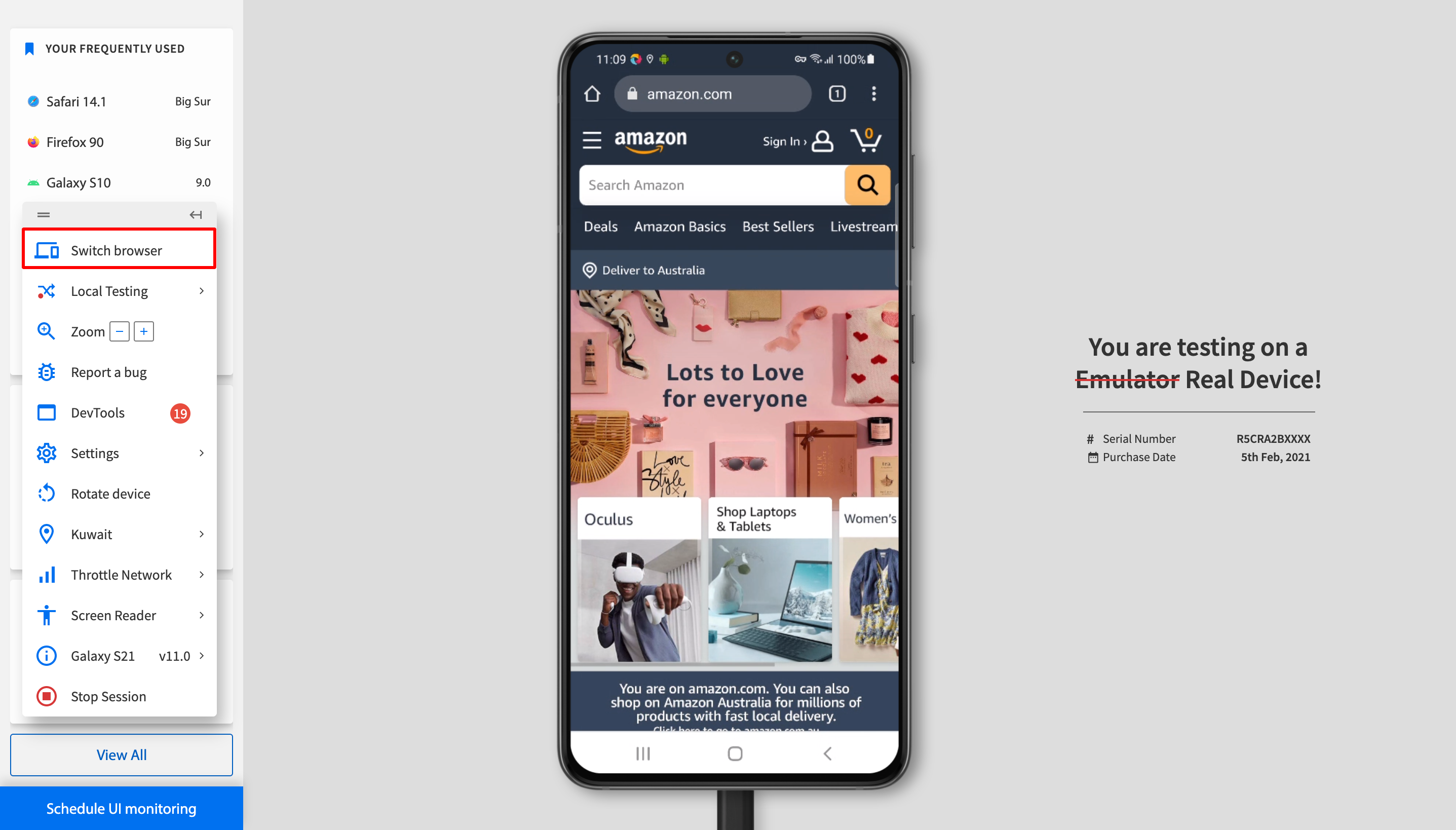

- You can check these features from multiple locations and at different internet speeds, across different browsers and devices. Notice the Switch Browser option on the menu.

It will take you back to the dashboard, and you can select another browser-device-OS combination to test the same site.

On BrowserStack, internationalization tests are fairly easy to run. They can also be automated with Selenium on BrowserStack Automate. Apps can similarly be tested manually on App Live or automated with Appium on App Automate.


What Industry Experts Recommend for Internationalization Testing

Internationalization testing is often treated as a late-stage localization check. Experts who work closely with global products consistently recommend addressing internationalization risks early, as many defects originate in core design and data handling rather than translated content.

- Sanjay Vij, a specialist in internationalization and localization testing, recommends validating Unicode support, locale handling, and data formats early in development, since most i18n defects originate from foundational design choices rather than translated text.

- Natasha Murashev, an internationalization engineer and former Google developer advocate, advises testing applications with multiple locales enabled to catch issues like text expansion, date and number formatting, and right-to-left layouts before they reach localization.

- John Yunker, a globalization and international UX expert, emphasizes testing products for global readiness by validating cultural assumptions, regional workflows, and locale-specific user expectations, not just language translation.

- Elisabeth Hendrickson, a software testing consultant and author, highlights that internationalization testing should focus on how real users across regions interact with the system, ensuring localized behavior works correctly within complete user workflows.

Conclusion

Internationalization testing helps teams ensure that software behaves reliably across languages, regions, and cultural settings. It exposes issues with formatting, layout, input, and data handling that only appear when the product moves beyond its default locale, allowing teams to build experiences that feel consistent and native worldwide.

Since many of these issues surface only in real environments, testing on actual devices and browsers becomes essential. BrowserStack provides the required accuracy and scale with over 3500 real device–browser–OS combinations, making it easier to validate global scenarios and ship software that works seamlessly across markets.