For any agile testing team, the most challenging part is achieving seamless regression testing. The challenge multiplies once cross-browser and cross-device testing come into play. Naturally, now more than ever, development teams are considering scaling their automation test stack. First, automation takes over cumbersome manual activity. Second, it helps avoid oversights: when we interact with an application on a regular basis, our eyes adapt to its screen behavior, and we can unknowingly overlook very obvious bugs. Of course, there are more good reasons why development teams leverage automation; that is a deep topic on its own.

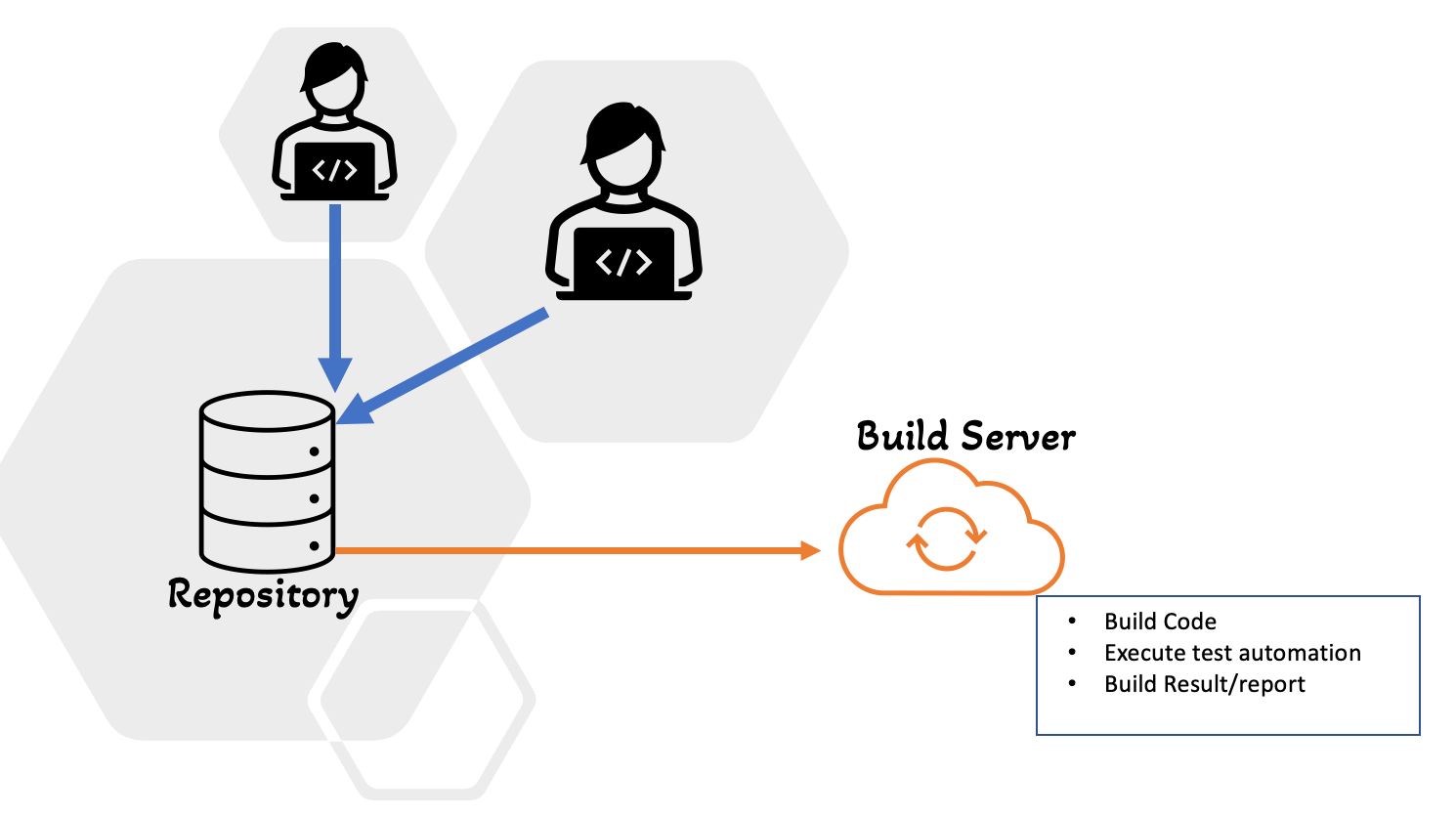

The broad idea is to integrate automation into the CI/CD pipeline so that regression runs automatically whenever code is merged (for example, through a pull request), reducing manual follow-up and execution. However, it's not as rosy as it sounds. While the test suite is small, it poses no challenge. But tests are added to the suite regularly, the suite grows massively, and the build time grows with it. The pain multiplies when the suite is executed across browsers, because every additional browser adds another full run.

Below are a few good practices to follow while automating tests. They reduce the number of clicks and page loads, which in turn stops excessive time leaks and leads to faster test builds.

1. Skip UI-based Login/Logout

The infamous "login sequence": everybody's favourite. Whether you are trying out something new or checking feasibility, the login screen is always the guinea pig. But do we ever think about the time consumed each time the test suite runs the login sequence through the UI?

Remember the "beforeEach" hook that runs before every test? A UI login placed there is repeated for every single test in the suite.

Instead, use API calls to log in to the application, skipping the UI interaction altogether. Some login scenarios need a little more than a username and password, such as cookies. Understand the login's security scheme and pass all the relevant parameters in a backend call to gain access.

cy.request({

method: 'POST',

url: 'https://makemyBrow.com/logIn',

form: true,

body: {

username: 'Automation_tester',

password: 'Automate123',

},

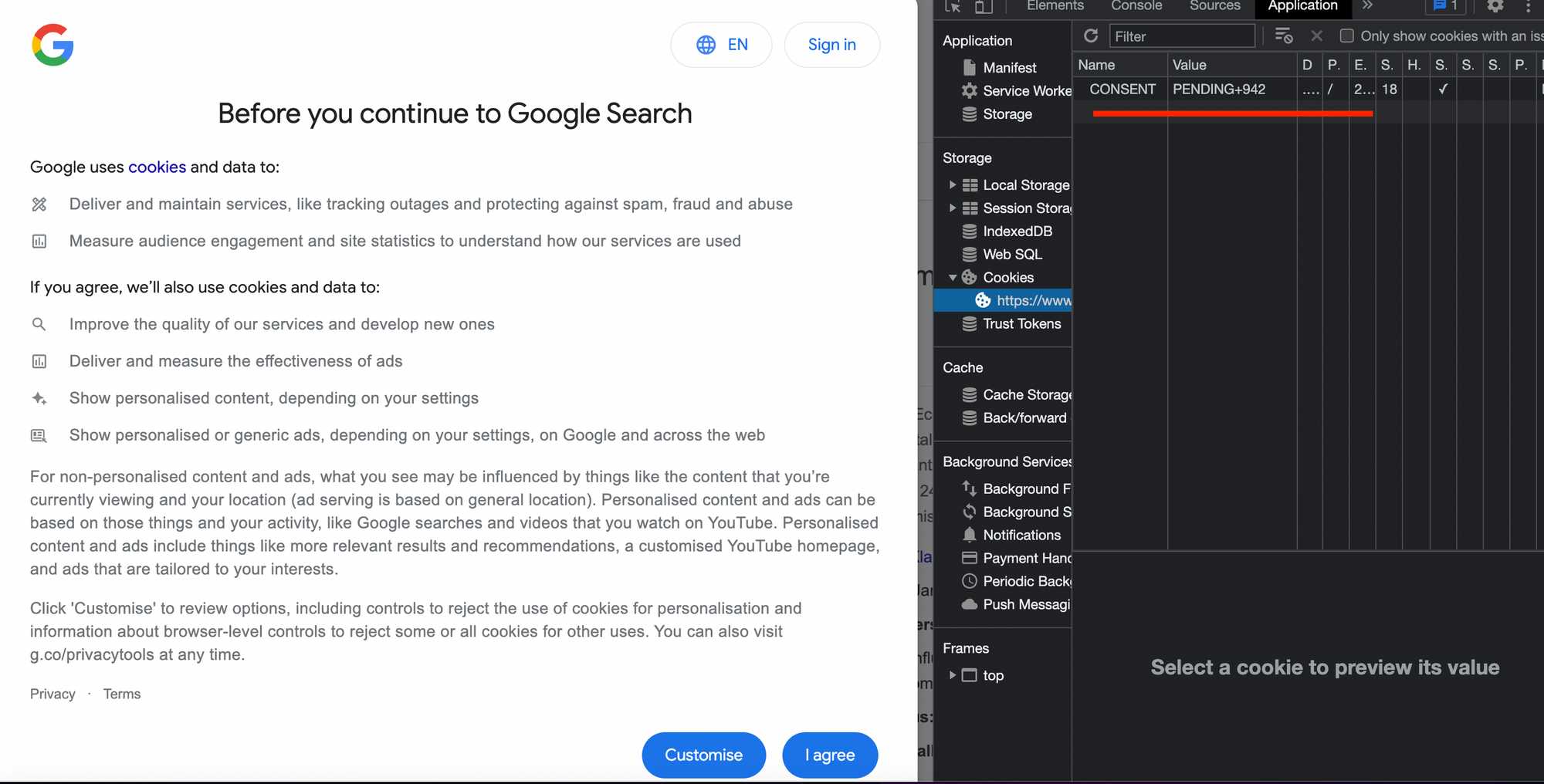

}) 2. Avoid Consent banner interaction

I bet every test engineer gets annoyed by the consent banner pop-up. Imagine having to interact with it every single time just to close the dialog before you can do anything else. It's overkill. Most consent banners can be suppressed by setting the required cookie before the page loads. Identify the right cookie, set it, and you are sorted: the test goes straight to the application.

For example, in the screenshot below, Google sets a cookie called "CONSENT". If this cookie is set to the right value, the consent banner dialog is not displayed.
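A minimal sketch of setting the cookie before the first page load. `seedCookies` is a hypothetical helper: it takes Cypress's `cy` object as a parameter (so it can live in a shared support file) and forwards each cookie to `cy.setCookie(name, value, options)`. The cookie name comes from the example above, but the value and domain are assumptions; copy the real value from the browser's developer tools after accepting the banner once.

```javascript
// Hypothetical helper: pre-seed cookies (such as a consent cookie) so the
// banner is never rendered. `cyLike` is Cypress's global `cy` inside a spec;
// passing it in keeps the helper free of globals.
function seedCookies(cyLike, cookies, options = {}) {
  for (const [name, value] of Object.entries(cookies)) {
    // cy.setCookie(name, value, options); options may carry `domain`, `path`, etc.
    cyLike.setCookie(name, value, options);
  }
}
```

In a spec, calling `seedCookies(cy, { CONSENT: '<value copied from DevTools>' }, { domain: '.google.com' })` in `beforeEach`, before `cy.visit('https://www.google.com')`, should let the test land directly on the page without the dialog.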

3. Always use Direct Link for interaction

Automating test flows demands some attention, because test engineers tend to translate every manual sequence into test steps. Instead of reaching a specific page by clicking through the navigation links, load its URL directly. This reduces the number of clicks and page loads, and it also reduces test script flakiness to a large extent.

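Avoid This

```javascript
// Navigating through the menu: extra clicks, extra page loads, more chances to flake
cy.visit('https://www.browserstack.com');
cy.get('.nav_item_name').click().within(() => {
  cy.get('.event').should('be.visible').click();
});
```

Try This

```javascript
// Load the target page directly
cy.visit('https://www.browserstack.com/events');
```

4. Prep using API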

Not all scenarios can be executed directly; some need the SUT (system under test) to be prepared first. Use the underlying APIs to do this preparation for you, since setting up the required state through the UI takes a lot of time.

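```javascript
// `requestHeaders()` and `apiLink` are project-specific helpers defined
// elsewhere: the first is assumed to yield the auth headers for the API
// as a Cypress chainable, the second to hold the API base URL.
export const Images = () => {
  return requestHeaders().then((headers) => {
    // Send the preparation request through the API instead of the UI
    return cy.request({
      method: 'POST',
      headers: headers,
      url: `${apiLink}/images/suit`,
      body: { page: 0, size: 15 },
    });
  });
};
```

The example above is a utility method used to upload images into the application, which are later used in further user journeys. It avoids the many clicks needed to upload multiple images through the UI.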

5. Avoid 3rd party interaction

Friction is high when test scripts have to interact with 3rd party applications, because we are dealing with code we are not responsible for. These weak links cause frequent failures and flaky tests, so it's best to skip such interactions as much as possible.

Payment scenarios are an infamous example. Most e-commerce applications integrate with one payment portal or another. Once the system is prepped, carry out the payment through backend API calls, bypassing the 3rd party UI. This way you avoid external waits entirely.
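A sketch of that backend payment step, assuming the application exposes its own endpoint that completes an order's payment against the provider's sandbox. The helper name `completePaymentViaApi`, the endpoint path and the `paymentMethod` field are all hypothetical; substitute whatever your backend actually offers. The helper takes Cypress's `cy` as a parameter and returns the `cy.request` chain so the test can assert on the response.

```javascript
// Hypothetical helper: pay for an already-prepared order through the
// application's backend instead of the third-party payment UI.
// The endpoint path and body fields are assumptions, not a real API.
function completePaymentViaApi(cyLike, { apiUrl, orderId, headers = {} }) {
  return cyLike.request({
    method: 'POST',
    url: `${apiUrl}/orders/${encodeURIComponent(orderId)}/payment`,
    headers,
    body: { paymentMethod: 'sandbox-card' },
  });
}
```

In a spec, `completePaymentViaApi(cy, { apiUrl, orderId }).its('status').should('eq', 200)` would check the outcome without ever loading the payment portal.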

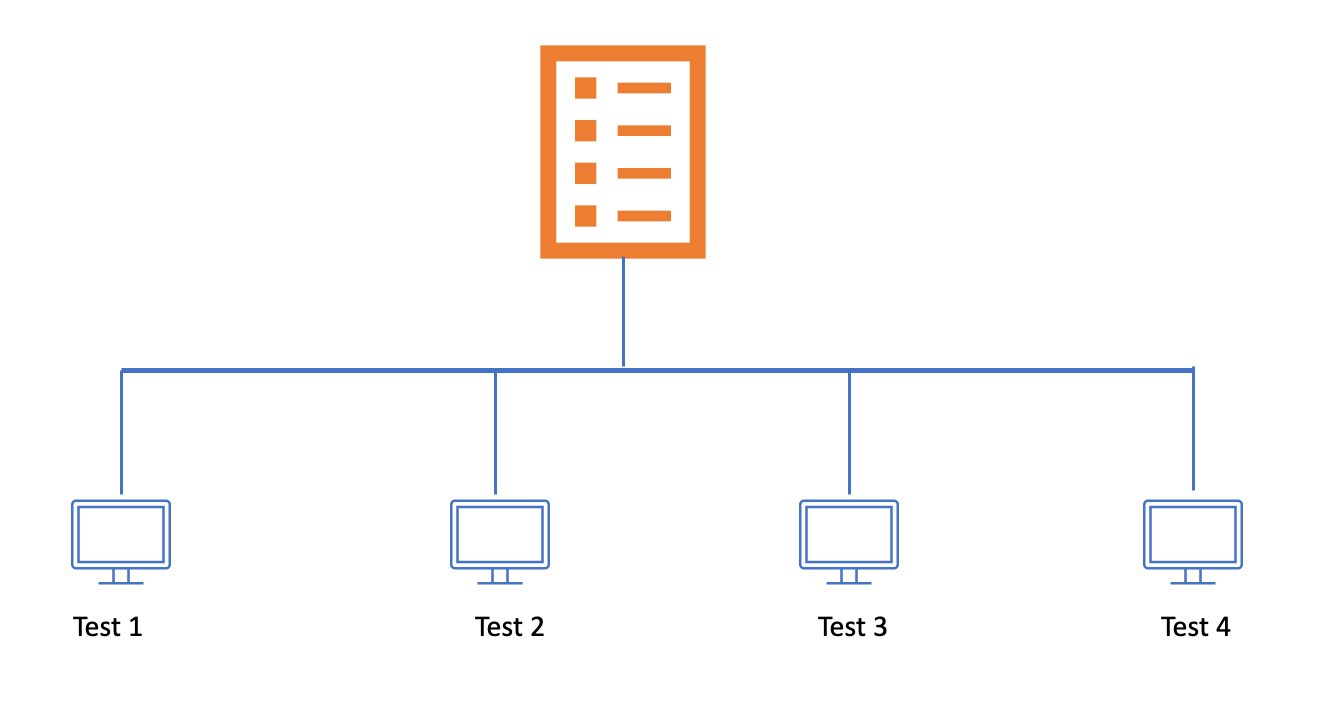

6. Atomic Tests

To harness parallelization to its full potential, each automated test should be executable on its own, without depending on any other test.

Parallelization runs tests simultaneously on multiple machines, cutting the overall execution time roughly in proportion to the number of machines.

This takes meticulous planning. Brainstorming sessions with the wider team help identify dependent scenarios and the preparation each one needs. Most of these prep activities become utility methods that can be called when necessary.
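Once tests are atomic, spec files can be spread freely across machines. As an illustration of a related technique (not one the article prescribes), the sketch below balances spec files over a fixed number of runners using each spec's previous duration: it sorts specs longest first and hands each one to the least-loaded runner, a greedy heuristic known as longest-processing-time first. The wall-clock time of the parallel run is then roughly the largest runner total rather than the sum of all specs. `shardSpecs` and the sample durations are hypothetical.

```javascript
// Hypothetical helper: split spec files across `machines` parallel runners,
// balancing by each spec's previous duration (seconds). Greedy
// longest-processing-time first: longest spec goes to the least-loaded runner.
function shardSpecs(specDurations, machines) {
  const shards = Array.from({ length: machines }, () => ({ specs: [], total: 0 }));
  const sorted = Object.entries(specDurations).sort((a, b) => b[1] - a[1]);
  for (const [spec, seconds] of sorted) {
    // Find the runner with the smallest total so far.
    let target = shards[0];
    for (const shard of shards) {
      if (shard.total < target.total) target = shard;
    }
    target.specs.push(spec);
    target.total += seconds;
  }
  return shards;
}

// Hypothetical sample: durations from a previous run, in seconds.
const durations = {
  'login.cy.js': 30,
  'checkout.cy.js': 120,
  'search.cy.js': 60,
  'profile.cy.js': 45,
  'cart.cy.js': 90,
};
const shards = shardSpecs(durations, 2);
for (const [i, shard] of shards.entries()) {
  console.log(`runner ${i}: ${shard.total}s`, shard.specs);
}
```

With these sample durations, five specs totalling 345 seconds split into runners of 165 and 180 seconds, so the parallel run takes about 180 seconds. Each runner could then receive its list through `cypress run --spec`. The balancing only works because the tests are atomic: any spec may land on any machine, in any order.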

7. Avoid dynamic sections of the UI

When there are animations or other dynamic elements on the screen, try to avoid interacting with them. Either you have to beef up the tests with synthetic waits, or the element is so quick and fragile that steps get skipped. One example is the shopping bag animation: when the user adds a product to the shopping bag, the little bag icon jumps, or a small drop-down opens, shows the added product, and closes again.
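Where a test cannot avoid such an element, one common complementary technique (not part of the advice above) is to switch off CSS transitions and animations in the page under test, so the drop-down settles instantly instead of forcing a synthetic wait. `disableAnimations` is a hypothetical helper that injects a style sheet into the window it receives; it only affects CSS-driven motion, not animations driven by JavaScript.

```javascript
// Hypothetical helper: disable CSS transitions and animations in the
// application window so dynamic elements reach their final state at once.
const NO_MOTION_CSS = `
*, *::before, *::after {
  transition: none !important;
  animation: none !important;
}`;

function disableAnimations(win) {
  const style = win.document.createElement('style');
  style.textContent = NO_MOTION_CSS;
  win.document.head.appendChild(style);
  return style;
}
```

Cypress's `cy.visit(url, { onLoad: disableAnimations })` passes the application's window to the callback once the page has loaded; every new `cy.visit` needs the option again.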

Quick Recap

- Skip UI-based Login/Logout

- Avoid Consent banner interaction

- Always use Direct Link for interaction

- Prep using API

- Avoid 3rd party interaction

- Atomic Tests

- Avoid dynamic sections of the UI

Keep these golden nuggets in mind, and you will start noticing an improvement in the execution time of your automated tests.