"What good is a system if it’s unavailable?"

Floyd Piedad puts what we all think into words in his book High Availability: Design, Techniques, and Processes. In systems design, especially for systems built to be used at scale, a key objective is to ensure that every end user gets the experience they expect, without fail, every time they access the system.

In other words, users expect a system to be available.

What exactly is availability?

Availability is a system characteristic, measured from the user's point of view. It covers both real and perceived outages: if a system is inaccessible, slow, or likely to fail unexpectedly, it is unavailable.

Availability is the biggest (hidden) contributor to the TCO (Total Cost of Ownership) of your service/product.

How do you achieve high availability?

A system is ‘highly available’ if it delivers a high level of operational performance. It should respond to requests within an acceptable timeframe, continuously, for a given period of time.

There are three principles of reliability engineering that help achieve availability. Broadly speaking, the process involves:

- removing single points of failure,

- reliable crossover to redundant resources, and

- early detection of failure points.

As the software testing infrastructure for more than 25,000 teams across the world, we couldn't afford unexpected system outages that would delay our customers' test feedback and releases. To support their continuous testing, we had to keep our components continuously available, whether they run in the cloud or on physical machines.

Here's how we applied the principles of reliability engineering to make a non-AWS (or non-EC2) component highly available.

Making a non-EC2 component highly available: A BrowserStack case study

The setup

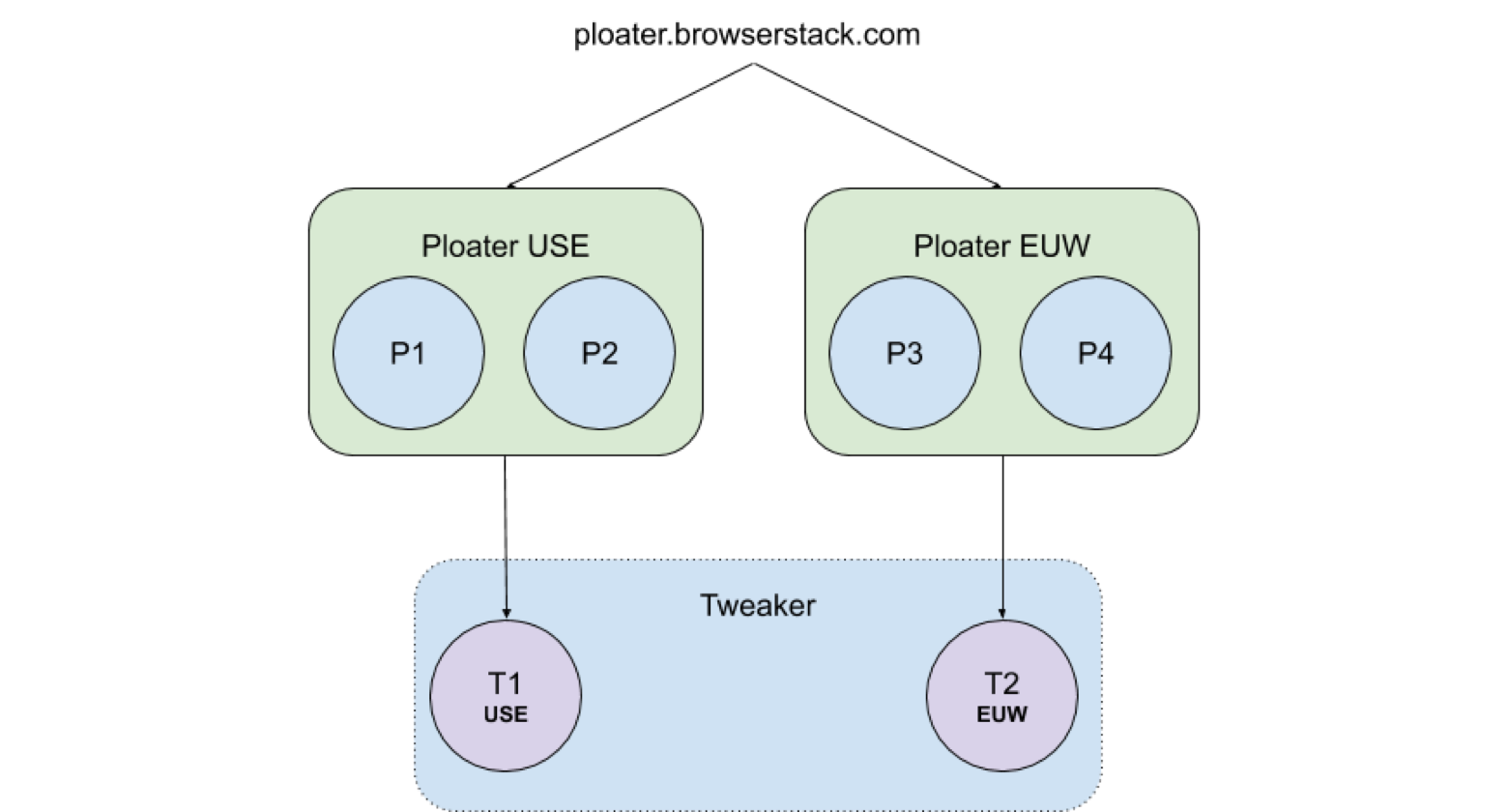

In our system, there is an EC2-hosted, load-balanced component called Ploater. For simplicity, we'll say the Ploater instances are in two locations (US-East and EU-West). Any request to ploater.browserstack.com is routed to one of these regions (via latency-based routing).

Ploater makes internal requests to another component called Tweaker, which is a Node.js Express server. Due to a few application-specific requirements, Tweakers couldn’t be hosted on conventional AWS EC2 instances. We had to use our data center's physical Mac machines for this purpose. So, we have two data center machines (Tweakers)—one each in the US and the EU.

In Ploater, we used to hardcode the config and IPs that specified which Tweaker machine to hit, based on region. Ploater would then make an HTTP request directly to that Tweaker.

As illustrated, a request to ploater.browserstack.com is served by one of the machines in the closest region (say, P1). P1 knows the public IPs of the Tweakers (T1 and T2) and makes an HTTP request directly to one of them, say T1.

The problem

Since there is only one Tweaker each in the US and the EU (T1 and T2, respectively), each is a single point of failure for its region. When one fails, the disruption spills over into the Ploater layer, making the entire service inaccessible to every user in that region.

At the very least, we want to make sure that even if T1 in the US is down, T2 in the EU can serve its requests, without any service interruption at the Ploater level. Routing US traffic to the EU isn't ideal, but it keeps Ploater available.

The fix (part I)

We began by adding redundancy within each region: two machines per region ought to do the trick. But that means doing away with the hardcoded IP of T1 in P1's config. How would that work?

One option is to add a domain with A records pointing to both machines. The answer section for that domain would look like this:

;; ANSWER SECTION:

tweaker-use.browserstack.com. 300 IN A 65.100.100.101

tweaker-use.browserstack.com. 300 IN A 65.100.100.102

[Note: These are dummy IPs used for explaining the system’s architecture.]

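With this solution, we distribute requests across two Tweakers. That solves redundancy, but it's not enough to achieve high availability. Plain DNS has no notion of health: the domain keeps returning both IPs even when one machine is down, so clients still send requests to it. And what would happen if both Tweakers in the US were down?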
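To make the gap concrete, here is a minimal TypeScript simulation, assuming the client simply rotates through the A records in the answer section and has no health information (real resolvers and clients differ in how they order and cache records). The function and variable names are hypothetical.

```typescript
// Minimal simulation: DNS round robin over A records with no health awareness.
const answerIps: string[] = ["65.100.100.101", "65.100.100.102"]; // dummy IPs from the answer section

// Returns the IP each of `requests` consecutive requests would use, rotating through the records.
function simulateRoundRobin(records: string[], requests: number): string[] {
  const picks: string[] = [];
  for (let i = 0; i < requests; i++) {
    picks.push(records[i % records.length]);
  }
  return picks;
}

// Counts the requests that land on a machine listed as down.
function countFailures(picks: string[], down: string[]): number {
  return picks.filter((ip) => down.indexOf(ip) !== -1).length;
}

const tenRequests = simulateRoundRobin(answerIps, 10);
// One US Tweaker down: DNS still hands out its IP for half of the requests.
console.log(countFailures(tenRequests, ["65.100.100.101"])); // 5
// Both US Tweakers down: every request fails, and nothing redirects traffic to the EU.
console.log(countFailures(tenRequests, answerIps)); // 10
```

Redundancy only pays off once something stops handing out the addresses of dead machines.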

The fix (part II)

We still need to handle single-point failures. Ploater should automatically stop sending HTTP requests to a Tweaker that can’t serve them.

Additionally, if both Tweakers in the US are down, we would like requests in US-East to be served by the Tweakers in EU-West. Latency would increase temporarily, but the service would remain operational and our users' tests would not be dropped, which was half the battle.

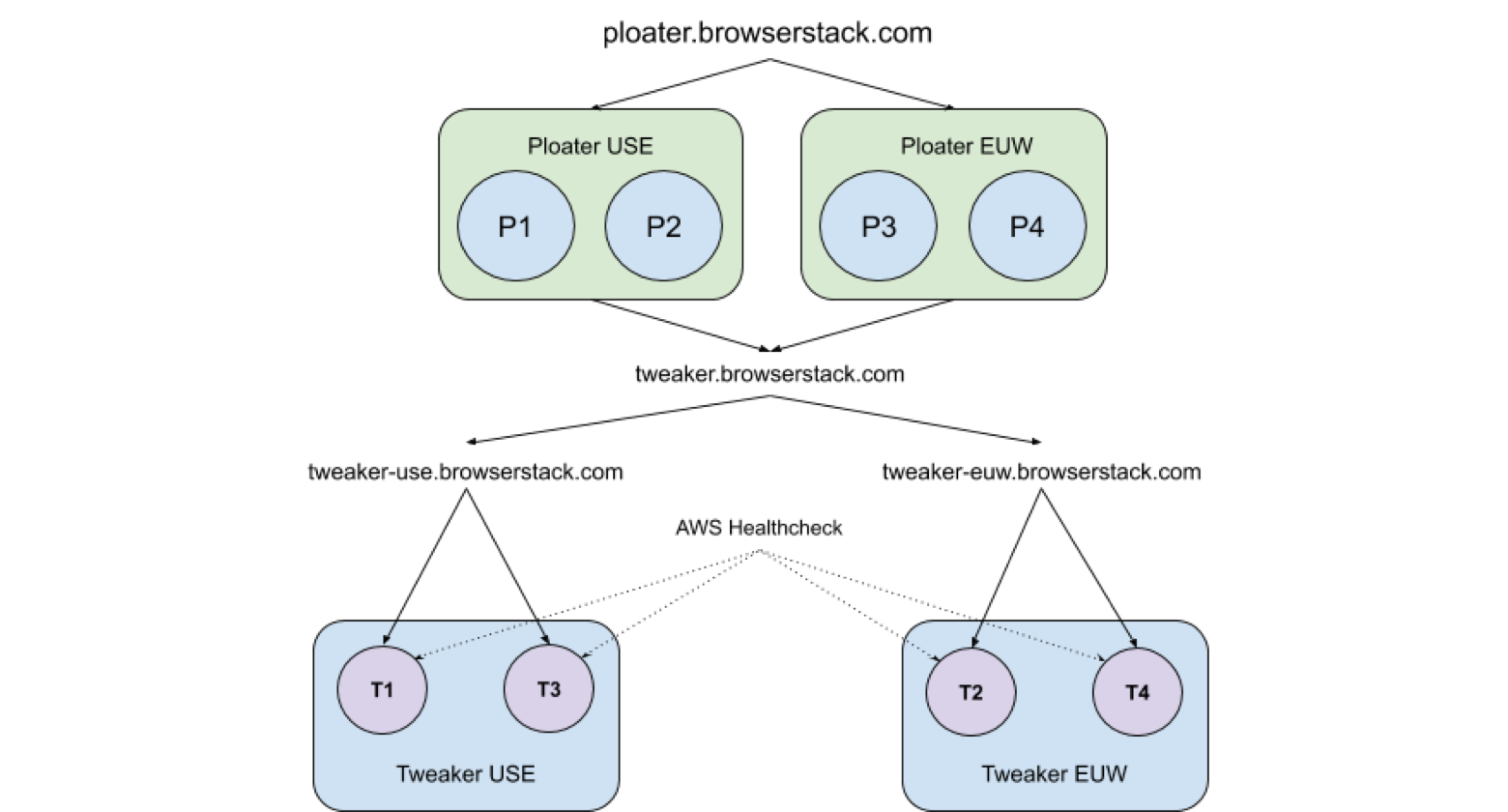

It would be great if we could architect a solution to handle both of those scenarios. So we did, using AWS Route 53 for a configuration that looks something like this:

In this setup:

- A request to ploater.browserstack.com resolves to one of the available EC2 machines in the closest region (say, P1).

- The config on each of the four Ploater machines points to tweaker.browserstack.com.

- The domain tweaker.browserstack.com is an AWS Route 53 resource that CNAMEs to tweaker-use.browserstack.com and tweaker-euw.browserstack.com under a latency-based routing policy. This ensures that the closest resource in terms of latency is assigned: in the happy path, requests from a US-East Ploater resolve to one of the US-East Tweakers. Route 53 also has a toggle (Evaluate Target Health) that continuously evaluates target health, and we can set up alerting for unhealthy resources using Amazon SNS.

- Each of these region-based domains, in turn, points to its region's two machines with equal weights under a weighted routing policy, so traffic is split evenly between them, much like a round robin.
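The two-level setup above could be expressed as Route 53 record sets. The sketch below builds them as plain objects whose field names follow the Route 53 `ChangeResourceRecordSets` API (`SetIdentifier`, `Region`, `Weight`, `AliasTarget`, `HealthCheckId`); it does not call AWS. Assumptions, all hypothetical: the top level uses alias records (Route 53's Evaluate Target Health setting exists only on alias records), the hosted zone ID and health-check IDs are placeholders, the EU IPs are invented dummies, and the regions are `us-east-1` and `eu-west-1`.

```typescript
// Sketch only: builds Route 53-style record sets as plain objects; nothing is sent to AWS.
// Field names follow the Route 53 ChangeResourceRecordSets API. IDs and EU IPs are placeholders.
interface AliasTarget {
  HostedZoneId: string;
  DNSName: string;
  EvaluateTargetHealth: boolean;
}

interface ResourceRecordSet {
  Name: string;
  Type: "A";
  SetIdentifier: string;
  Region?: string; // latency-based records only
  Weight?: number; // weighted records only
  TTL?: number;
  ResourceRecords?: { Value: string }[];
  AliasTarget?: AliasTarget;
  HealthCheckId?: string;
}

const HOSTED_ZONE_ID = "ZPLACEHOLDER"; // hypothetical hosted zone ID

const tweakerRegions = [
  { region: "us-east-1", domain: "tweaker-use.browserstack.com.", ips: ["65.100.100.101", "65.100.100.102"] },
  { region: "eu-west-1", domain: "tweaker-euw.browserstack.com.", ips: ["65.100.200.101", "65.100.200.102"] }, // invented dummy IPs
];

function buildRecordSets(): ResourceRecordSet[] {
  const records: ResourceRecordSet[] = [];
  for (const { region, domain, ips } of tweakerRegions) {
    // Level 1: latency-based alias. With EvaluateTargetHealth, Route 53 skips a region
    // whose weighted records are all unhealthy.
    records.push({
      Name: "tweaker.browserstack.com.",
      Type: "A",
      SetIdentifier: `latency-${region}`,
      Region: region,
      AliasTarget: { HostedZoneId: HOSTED_ZONE_ID, DNSName: domain, EvaluateTargetHealth: true },
    });
    // Level 2: one equally weighted A record per Tweaker, each tied to its own health check.
    ips.forEach((ip, i) => {
      records.push({
        Name: domain,
        Type: "A",
        SetIdentifier: `${region}-tweaker-${i + 1}`,
        Weight: 1,
        TTL: 300, // same TTL as the answer section above; a lower TTL lets clients see failover sooner
        ResourceRecords: [{ Value: ip }],
        HealthCheckId: `hc-${region}-${i + 1}`, // hypothetical health-check ID
      });
    });
  }
  return records;
}

console.log(buildRecordSets().length); // 6: two latency aliases plus four weighted A records
```

Attaching a health check to every weighted record matters: without one, Route 53 treats the record as healthy, and the alias above it can never fail over.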

At first glance, all this setup achieves is a reasonably even traffic distribution, albeit one that minimizes client-side latency.

The key is the health checking in the third point. Route 53 health checks continuously evaluate target health, and when a resource is down, Route 53 stops routing traffic to it. In our scenario, this ensures that traffic requested on tweaker.browserstack.com is always routed to a healthy resource, while still following the routing policy at each layer: latency-based between regions and weighted within a region.
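Route 53 health checks need an endpoint on each Tweaker to probe. Since Tweaker is an Express server, one option is a dedicated health route. The sketch below keeps the logic framework-free so it runs standalone: `healthResponse` is a hypothetical handler that returns 200 or 503 from hypothetical probe results, and `trackStatus` is a simplified model of a single checker. Route 53 HTTP health checks treat 2xx and 3xx responses as healthy, and its FailureThreshold setting (default 3) is the number of consecutive checks needed to flip an endpoint's status; real Route 53 also aggregates results from many checkers, which this model ignores.

```typescript
// Sketch: Tweaker-side health handler plus a simplified single-checker status model.
interface ProbeResult {
  name: string; // hypothetical probe name, e.g. "can-accept-sessions"
  ok: boolean;
}

interface HealthResponse {
  statusCode: number;
  body: string;
}

// 200 when every probe passes, 503 otherwise. Route 53 counts only 2xx/3xx as healthy.
function healthResponse(probes: ProbeResult[]): HealthResponse {
  const failing = probes.filter((p) => !p.ok).map((p) => p.name);
  return failing.length === 0
    ? { statusCode: 200, body: JSON.stringify({ status: "ok" }) }
    : { statusCode: 503, body: JSON.stringify({ status: "unhealthy", failing }) };
}
// In an Express app this might be mounted as (hypothetical path and runProbes helper):
//   app.get("/health", (req, res) => {
//     const r = healthResponse(runProbes());
//     res.status(r.statusCode).type("application/json").send(r.body);
//   });

// Simplified model: the status flips only after `threshold` consecutive results
// that disagree with the current status, in either direction.
function trackStatus(codes: number[], threshold = 3): boolean[] {
  let healthy = true;
  let streak = 0;
  const history: boolean[] = [];
  for (const code of codes) {
    const passed = code >= 200 && code < 400;
    streak = passed === healthy ? 0 : streak + 1;
    if (streak >= threshold) {
      healthy = !healthy;
      streak = 0;
    }
    history.push(healthy);
  }
  return history;
}

console.log(healthResponse([{ name: "can-accept-sessions", ok: false }]).statusCode); // 503
console.log(trackStatus([200, 503, 503, 503, 200, 200, 200]).join(",")); // true,true,true,false,false,false,true
```

The threshold trades detection speed for stability: a single slow response doesn't pull a Tweaker out of rotation, but a machine that is really down stops receiving traffic after a few checks.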

Now, let’s run through some likely scenarios in the EU region:

- All the machines are up and running

  All's well with the world. The system prioritizes latency, so requests go to the EU Tweakers, split evenly between them (roughly round-robin) because their Route 53 weights are equal.

- When one machine in the EU is down (say, T2)

  Route 53 health checks mark it unhealthy and Route 53 stops routing traffic to it: tweaker.browserstack.com won't resolve to T2 until T2 is marked healthy again. (Clients that already cached T2's address keep using it until the record's TTL expires.)

- When all machines in the EU are down

  If both EU machines are marked unhealthy, the EU region as a whole is treated as unhealthy, and only the US machines are available for resolution until one or both EU machines are up again.
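The three scenarios can be checked against a small model of the two-level lookup. This is a simplified sketch, not Route 53's actual algorithm: it assumes the client's regions are already sorted by latency, treats each machine's health as a known boolean, and ignores DNS caching. Machine IDs such as `euw-1` are hypothetical.

```typescript
// Simplified model of resolving tweaker.browserstack.com: take the lowest-latency region
// that still has a healthy machine, then pick a machine with probability weight / total.
interface TweakerMachine {
  id: string; // hypothetical machine ID
  weight: number; // assumed positive
  healthy: boolean; // in reality, decided by Route 53 health checks
}

interface TweakerRegion {
  name: string;
  machines: TweakerMachine[];
}

// `regionsByLatency` is assumed to be sorted from closest to farthest for this client.
function resolveTweaker(regionsByLatency: TweakerRegion[], rand: () => number = Math.random): string | null {
  for (const region of regionsByLatency) {
    const healthy = region.machines.filter((m) => m.healthy);
    if (healthy.length === 0) continue; // whole region down: fall back to the next-closest region
    const total = healthy.reduce((sum, m) => sum + m.weight, 0);
    let r = rand() * total;
    for (const m of healthy) {
      r -= m.weight;
      if (r < 0) return m.id;
    }
    return healthy[healthy.length - 1].id; // guards against floating-point rounding
  }
  return null; // no healthy machine in any region
}

// The view from an EU client: EU first, then US.
function euClientView(euw1Up: boolean, euw2Up: boolean, usUp: boolean = true): TweakerRegion[] {
  return [
    {
      name: "eu-west",
      machines: [
        { id: "euw-1", weight: 1, healthy: euw1Up },
        { id: "euw-2", weight: 1, healthy: euw2Up },
      ],
    },
    {
      name: "us-east",
      machines: [
        { id: "use-1", weight: 1, healthy: usUp },
        { id: "use-2", weight: 1, healthy: usUp },
      ],
    },
  ];
}

// Fixed "random" values make the picks deterministic for illustration.
console.log(resolveTweaker(euClientView(true, true), () => 0.75)); // euw-2 (all up: split across the EU)
console.log(resolveTweaker(euClientView(true, false), () => 0.99)); // euw-1 (euw-2 down: never chosen)
console.log(resolveTweaker(euClientView(false, false), () => 0)); // use-1 (EU down: US takes over)
```

Only when every machine in every region is down does the model return `null`, which matches the guarantee the setup is after.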

By combining latency-based routing with weighted routing, we achieved both inter-region and intra-region high availability. Weighted routing also gives us load balancing across the Tweaker machines.
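A rough way to quantify the gain, assuming each Tweaker is independently available a fraction $a$ of the time, and ignoring correlated failures (such as a whole data center going down) and DNS caching delays: a region is unavailable only if both of its machines are down, and the service only if all four are.

$$
A_{\text{region}} = 1 - (1 - a)^2, \qquad A_{\text{service}} = 1 - (1 - a)^4
$$

For a hypothetical $a = 0.99$, that gives $A_{\text{region}} = 0.9999$ and $A_{\text{service}} = 0.99999999$, compared with $0.99$ for the original single Tweaker per region.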

As we scale horizontally, we can continue to add new machines to the Route 53 configuration of the appropriate domain, without needing to modify configuration on any other services.
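Under weighted routing, Route 53 gives each record a share of traffic equal to its weight divided by the total weight of the records in the set. A minimal sketch of that arithmetic (machine IDs such as `use-3` are hypothetical) shows what horizontal scaling does: adding a third equally weighted machine to a region drops each machine's share from 1/2 to 1/3, and only that region's record set changes.

```typescript
// Expected fraction of a region's traffic each machine receives under weighted routing:
// its weight divided by the total weight of the records passed in (pass only healthy ones).
function trafficShares(weights: { [id: string]: number }): { [id: string]: number } {
  const ids = Object.keys(weights);
  const total = ids.reduce((sum, id) => sum + weights[id], 0);
  const shares: { [id: string]: number } = {};
  for (const id of ids) {
    // Route 53 spreads traffic evenly when every weight in the set is 0.
    shares[id] = total === 0 ? 1 / ids.length : weights[id] / total;
  }
  return shares;
}

// Adding a third US-East machine with the same weight changes only that region's record set.
const beforeScaling = trafficShares({ "use-1": 1, "use-2": 1 });
const afterScaling = trafficShares({ "use-1": 1, "use-2": 1, "use-3": 1 });
console.log(beforeScaling["use-1"]); // 0.5
console.log(afterScaling["use-1"]); // 0.3333333333333333
```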

tl;dr

We started with a problem statement: making a non-AWS component highly available. We achieved this by adding redundancy and fail-safe features such as alerting and automatic switching to a machine that is up and running.

Some concepts we played with:

- Load distribution across the available machines (with Weighted Routing Policy)

- Making a geo-distributed Tweaker component (on non-EC2 machines) highly available, with health checks that trigger failover.

So as long as even one machine is up and running, requests will resolve successfully and our users' tests will run.