Cypress is a popular testing framework that offers developers an easy and efficient way to write end-to-end tests. However, even with the best efforts, test failures can still occur.

Overview

What are Test Failures in Cypress?

Test failures in Cypress occur when the application’s actual behavior differs from the expected result. Cypress provides detailed error messages to help quickly identify the cause of the failure.

Common Causes of Test Failure in Cypress

- Not making commands or assertions retryable

- Failing to create page objects

- Not using specific test selectors

- Avoiding deterministic testing

- A surplus of end-to-end testing

- Failing to conduct the tests on each PR (CI)

- Lack of expertise in debugging Cypress tests

- Failing to mock external dependencies

- Not retrying fragile tests in flaky environments

Debugging Failed Tests in Cypress

- Use the Cypress Test Runner to run and debug tests in real-time, providing detailed failure insights.

- Leverage Chrome DevTools to inspect elements, network requests, and pinpoint issues during test execution.

- Explore test steps and application state using the Cypress UI to identify where tests fail.

- Analyze failed tests with Cypress’ automatic screenshots and video capture.

- Track commands and assertions using logging to identify the cause of failures easily.

- Use browser dev tools to inspect the DOM, network activity, and console logs for additional debugging context.

This guide will explore common reasons behind Cypress test failures and provide practical solutions for troubleshooting and resolution.

Understanding Test Failure in Cypress

Test failures in Cypress occur when the expected outcome doesn’t match the actual behavior of the application. When a test fails, Cypress displays a clear error message highlighting what went wrong.

The most common reasons for failures include:

- Issues in the Application Under Test (AUT): A bug or unexpected behavior in the app can cause the test to fail.

- Flaws in the Test Logic: Incorrect assumptions or poorly written test cases can also lead to failures.

Maintaining automated tests requires effort. Tests can become brittle over time, especially with frequent UI changes. Proper debugging and test design are essential to keep them reliable and cost-effective.

Also Read: How to manage Cypress Flaky Tests?

Common Causes of Test Failure in Cypress

Test failures in Cypress often stem from unstable test design, environmental dependencies, or neglecting Cypress-specific best practices.

Here are the most frequent issues:

- Unreliable Element Selectors: Using dynamic or overly generic selectors increases the chance of tests breaking when the UI changes. Stable, custom attributes should be used for targeting elements.

- Ignoring Retry Behavior: Cypress auto-retries commands and assertions, but tests that aren’t structured to take advantage of this may fail due to timing issues or race conditions.

- Lack of Isolation or Mocking: Relying on real external services can lead to flaky tests. Proper mocking of APIs ensures more stable and consistent test outcomes.

- Overuse of End-to-End Tests: Relying heavily on E2E tests can slow down test suites and increase flakiness. A balanced testing strategy with unit and integration tests is more efficient.

- Skipping CI Checks: Not running tests on every pull request increases the risk of regressions slipping into production. Regular CI execution is key to catching issues early.
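Two of the causes above, unstable selectors and unmocked dependencies, can be addressed with small helpers. The sketch below is a minimal, hypothetical helper module: the file name, the `/api/lists` endpoint, and the `data-cy` value are assumptions for illustration and do not come from a real application. It stubs a backend response with `cy.intercept()` and builds selectors from dedicated `data-cy` attributes.

```javascript
// cypress/support/board-helpers.js (hypothetical file name)

// Replace the real backend call with a fixed, empty response so the test
// does not depend on network speed or on data in a live database.
// The endpoint '/api/lists' is an assumed example.
function stubEmptyLists() {
  return cy
    .intercept('GET', '/api/lists', { statusCode: 200, body: [] })
    .as('lists');
}

// Build a selector from a dedicated test attribute instead of a CSS class
// or generated id that may change when the UI is restyled.
function byTestId(id) {
  return `[data-cy="${id}"]`;
}

module.exports = { stubEmptyLists, byTestId };

// Possible usage inside a spec:
//   stubEmptyLists();
//   cy.visit('/board/1');
//   cy.wait('@lists');
//   cy.get(byTestId('list')).should('not.exist');
```

Because the response is stubbed, the assertion no longer depends on what the real service happens to return at the time of the run.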

Also Read: How to handle Errors in Cypress

How to force fail a test in Cypress.io

Whether a missing verification or something else is to blame for a test that passes when it should not, you can “force fail” a test and purposefully mark it as failing.

Here is one way to do that in Cypress when a specific condition is met. Suppose you want the test to fail if the phrase “Sorry!” appears on your website.

/// <reference types="cypress" />

describe("These tests are designed to fail if certain criteria are met.", () => {

beforeEach(() => {

cy.visit("");

});

specify("If 'Sorry!' is present on page, FAIL ", () => {

cy.contains("Sorry!");

});

});

The test currently passes if the word “Sorry!” is found. How can you make it fail in that case?

You can throw a JavaScript exception to fail the test:

throw new Error("test fails here")

However, in this situation, it is better to use the .should('not.exist') assertion instead:

cy.contains("Sorry!").should('not.exist')

Debugging Failed Tests in Cypress

Debugging failed tests in Cypress involves using various built-in tools like the Cypress Test Runner, console logs, and browser DevTools to identify and fix issues in your test code. With these tools, you can pinpoint the root cause of test failures and make the necessary adjustments to ensure your tests are accurate and reliable.

Read More: How to start with Cypress Debugging?

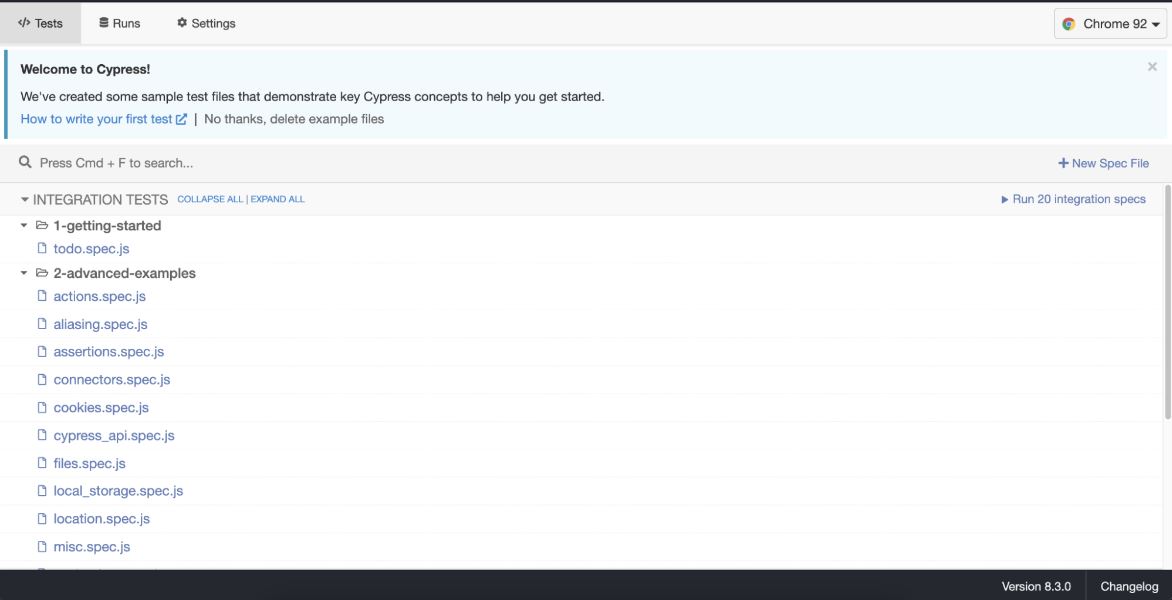

Debugging with the Cypress Test Runner

The Test Runner is the Cypress user interface for executing tests. Open it with the following command:

./node_modules/.bin/cypress open

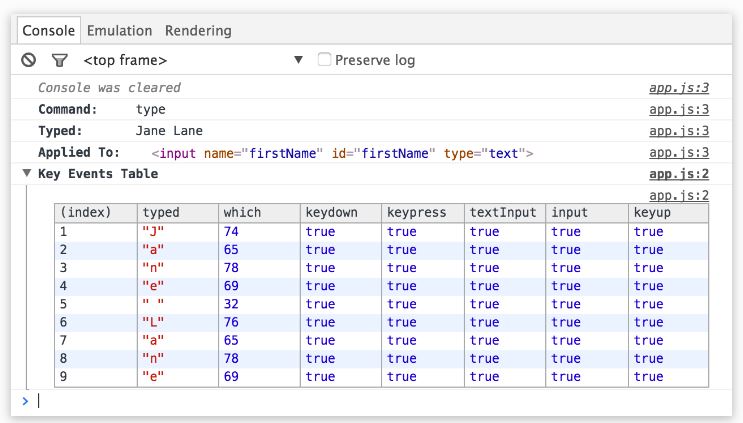

Debugging with Chrome DevTools

Chrome DevTools is a powerful suite of developer tools built into the Google Chrome browser.

While Cypress provides robust in-app debugging, leveraging DevTools enhances visibility into application behavior, DOM state, and network activity.

Cypress integrates seamlessly with DevTools, allowing developers to debug tests using familiar, browser-native tools.

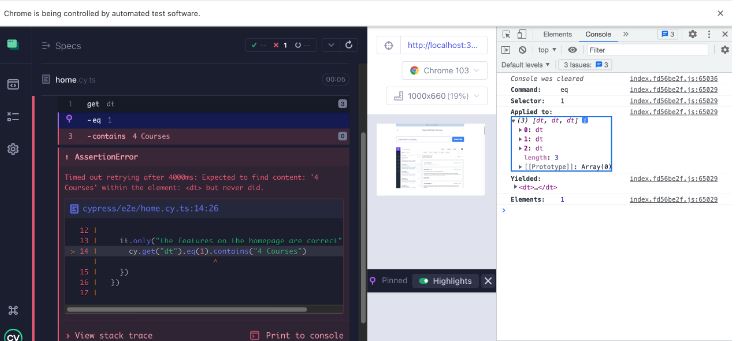

Debugging using Cypress UI

One of the quickest ways to determine why your tests failed is to use the Cypress UI. It shows every step your test took before the issue occurred, and Cypress outputs helpful data to the browser’s console when you click on a step.

For instance, when you click a failed assertion step, Cypress prints the values involved in that assertion to the console.

Debugging failing Cypress tests is one of the most important and rewarding skills to master, and Cypress offers simple techniques for debugging test scripts.

Debugging using Screenshots & Videos

When Cypress runs in headless mode (cypress run), it automatically takes a screenshot whenever a test fails. It can also record a video of each spec file; video recording is controlled by the video configuration option, which is disabled by default in recent Cypress versions. Having a screenshot and a video of a failing test is especially handy when running tests in continuous integration.
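As a minimal sketch, the configuration below turns on both kinds of artifacts explicitly. It assumes a `cypress.config.js` file that exports a plain object (wrapping it in `defineConfig()` is optional), and the folder values shown are the Cypress defaults, listed only for clarity.

```javascript
// cypress.config.js
const config = {
  // Take a screenshot automatically when a test fails during `cypress run`.
  screenshotOnRunFailure: true,
  // Record a video of each spec file during `cypress run`.
  video: true,
  // Where the artifacts are written (these are the default locations).
  screenshotsFolder: 'cypress/screenshots',
  videosFolder: 'cypress/videos',
};

module.exports = config;
```

In a CI pipeline, these two folders are the ones you would typically upload as build artifacts.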

Also Read: How to Perform Screenshot Testing in Cypress

Debugging using Logging

You can log information from your tests in two practical ways: cy.log() and console.log(). cy.log() prints a message in the Cypress Command Log, while console.log() writes to the browser’s console. Remember that Cypress tests are essentially JavaScript, so you can apply all the debugging techniques you already use in JS.
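The two logging options can be combined in a small helper. This is a hedged sketch: the file name and the `logStep` function are hypothetical, not part of Cypress. It also illustrates one thing to watch for: Cypress commands are queued and run later, whereas a bare `console.log()` runs immediately, so the helper wraps it in `.then()` to keep the console output in step with the surrounding commands.

```javascript
// cypress/support/log-helpers.js (hypothetical file name)

// Write the same message to the Command Log and to the browser console.
function logStep(message) {
  // Appears as an entry in the Cypress Command Log.
  cy.log(message);

  // Queue the console output as part of the command chain so that it is
  // printed when this step actually runs, not when the test is parsed.
  cy.wrap(message, { log: false }).then((text) => {
    console.log(text);
  });
}

module.exports = { logStep };

// Possible usage inside a spec:
//   logStep('board page opened');
```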

Debugging using Browser Dev Tools

Since Cypress is a browser-based application, you have complete access to all of the data provided by your browser’s developer tools. This implies that you may troubleshoot your failing Cypress tests using the same methods and tools you use to troubleshoot problems with your application code.


Irrespective of the method you choose to debug your Cypress tests, you must remember that Cypress testing must be executed on real browsers for accurate results.

Start running tests on 30+ versions of the latest browsers across Windows and macOS with BrowserStack. Use instant, hassle-free parallelization to get faster results without compromising on accuracy. Detect bugs before users do by testing software in real user conditions with BrowserStack.


Frequently Occurring Cypress Test Failures and Solutions

Even well-written Cypress tests can occasionally fail due to various factors like timing issues, application instability, or improper test structure.

Recognizing common patterns in these failures and knowing how to resolve them can drastically improve test reliability and reduce debugging time.

Below are frequent failure scenarios along with practical solutions to handle them effectively.

1. Explicit waiting

cy.wait() is a Cypress command that either pauses test execution for a fixed period of time or waits for an aliased resource, such as an intercepted network request, to resolve. It is typically used to synchronize test code with the application under test.

The cy.wait() command takes either a number (a time in milliseconds) or a string alias starting with @. For example, cy.wait(5000) pauses execution for 5 seconds, while cy.wait('@lists') waits for the request aliased as lists to complete. A string such as '2 seconds' is not a valid argument.

// ❌ incorrect way, don't use

cy.visit('/')

cy.wait(10000)

cy.get('button')

.should('be.visible')

However, this forces the test to wait the full 10 seconds on every run, even if the page loads sooner. Instead, you can leverage the built-in retry ability of Cypress.

cy.visit('/')

cy.get('button', { timeout: 10000 })

.should('be.visible')

This way, the test waits at most 10 seconds for the button to display. If the button renders sooner, the test moves right on to the next command, which saves time.

2. Unreadable selectors

Selectors give you first-hand information about what your test does. This is why it’s critical to make them readable.

Cypress has several recommendations for choosing selectors, and their primary goal is to make your tests stable. First on the list is to use dedicated data-* attributes as selectors, which you add to your application’s markup.

Read More: Find elements using Cypress Locators

Unfortunately, testers don’t always have access to the code of the application being tested. This makes selecting elements challenging, especially when trying to locate one particular element, and people in this situation resort to a variety of selection strategies.

One of these techniques is XPath, which is available in Cypress through a plugin that adds the cy.xpath() command. Its biggest drawback is that the syntax is hard to read: from the XPath selector alone, it is often hard to tell which element you are selecting. XPath also doesn’t make your Cypress tests any more capable. Everything XPath can do, you can accomplish with Cypress commands, which are easier to read.

// ❌ selecting elements using xpath

// Select an element by text

cy.xpath('//*[text()[contains(.,"My Boards")]]')

cy.xpath('//div[contains(@class, "list")][.//div[contains(@class, "card")]]')

// Filter an element by index

cy.xpath('(//div[contains(@class, "board")])[1]')

// Select an element after a specific element

cy.xpath('//div[contains(@class, "card")][preceding::div[contains(., "milk")]]')

// ✅ selecting elements using cypress commands

// Select an element by text

cy.contains('h1', 'My Boards')

cy.get('.card').parents('.list')

// Filter an element by index

cy.get('.board').eq(0)

// Select an element after a specific element

cy.contains('.card', 'milk').next('.card')

3. Ignoring requests in your app

Let’s examine this code example:

cy.visit('/board/1')

cy.get('[data-cy=list]')

.should('not.exist')

A number of requests are fired when you open a page. The frontend app processes the responses to these requests and renders them on your page. In this example, the [data-cy=list] elements are rendered once a response arrives from the /api/lists endpoint.

The issue with this test, however, is that Cypress is not instructed to wait for these requests. As a result, the assertion can run before the lists are rendered, so the test may pass as a false positive even if lists are present in your application.

Cypress will not wait for the requests!

To make Cypress wait, register the request with the intercept command, give it an alias, and wait on that alias:

cy.intercept('GET', '/api/lists')

.as('lists')

cy.visit('/board/1')

cy.wait('@lists')

cy.get('[data-cy=list]')

.should('not.exist')

4. Overlooking DOM re-rendering

Modern web apps constantly send requests to retrieve data and then render it in the DOM. The following example tests a search bar in which each keystroke initiates a new request, and the page’s content is updated with each response. The goal of the test is to verify that the first search result has the text “search for critical bugs” when the word “for” is typed. The cy.realPress() command used here comes from the cypress-real-events plugin. Following is the test code:

cy.realPress(['Meta', 'k'])

cy.get('[data-cy=search-input]')

.type('for')

cy.get('[data-cy=result-item]')

.eq(0)

.should('contain.text', 'search for critical bugs')

This test can fail with an “element detached from DOM” error. The reason is that while you are still typing, you initially get two results, and once you finish, only one result remains, so the page re-renders between the query and the assertion.

It’s important to keep in mind that, in Cypress versions before 12, the .should() command retries only the preceding command, not the entire chain. As a result, cy.get('[data-cy=result-item]') is not called again. You can add a guarding assertion to counteract this issue: first ensure that you get the expected number of results, and only then assert the result’s content.

cy.realPress(['Meta', 'k'])

cy.get('[data-cy=search-input]')

.type('for')

cy.get('[data-cy=result-item]')

.should('have.length', 1)

.eq(0)

.should('contain.text', 'search for critical bugs')

But what if you cannot assert the number of results? In short, the solution is to use the .should() command with a callback, like this:

cy.realPress(['Meta', 'k'])

cy.get('[data-cy=search-input]')

.type('for')

cy.get('[data-cy=result-item]')

.should(items => {

expect(items[0]).to.have.text('search for critical bugs')

})

5. Inefficient command chains

The chaining syntax in Cypress is convenient. Because each command passes its subject to the one that follows, your test scenario has a one-way flow. There is logic to these commands, however: a Cypress command can be a parent, child, or dual command, so some commands always begin a new chain.

Consider this command chain:

cy.get('[data-cy="create-board"]')

.click()

.get('[data-cy="new-board-input"]')

.type('new board{enter}')

.location('pathname')

.should('contain', '/board/')

Such a chain is hard to read because it disregards the parent/child chaining logic. Every .get() command begins a new chain, so the .click().get() chain is illogical. Using chains correctly makes your Cypress tests more readable and less surprising:

cy.get('[data-cy="create-board"]') // parent

.click() // child

cy.get('[data-cy="new-board-input"]') // parent

.type('new board{enter}') // child

cy.location('pathname') // parent

.should('contain', '/board/') // child

6. Overusing UI

When building UI tests, use the UI as little as possible. This speeds up your tests while giving you just as much confidence in your app, if not more. Imagine your navigation bar has links and looks like this:

<nav> <a href="/blog">Blog</a> <a href="/about">About</a> <a href="/contact">Contact</a> </nav>

The test’s objective is to ensure that all of the links inside the <nav> element lead to live pages. The most obvious approach is to use the .click() command on each link and then check the opened page’s location or content.

The downside of this strategy is that it is slow and can be misleading.

Instead of clicking through the links, you can use the cy.request() command to verify that each page is live:

cy.get('a').each(link => {

cy.request(link.prop('href'))

})

7. Repeating the same set of actions

You often hear that code should be DRY (don’t repeat yourself). Although this is good advice for writing code, it is followed only loosely during the test run itself. Here is an example of a cy.login() command that carries out the login procedure before each test:

Cypress.Commands.add('login', () => {

cy.visit('/login')

cy.get('[type=email]')

.type('filip+example@gmail.com')

cy.get('[type=password]')

.type('i<3slovak1a!')

cy.get('[data-cy="logged-user"]')

.should('be.visible')

})

Condensing this set of actions into a single command is unquestionably useful, and it makes the code more “DRY.” However, if you call it in every test, the same set of actions is still carried out over and over during test execution.

Cypress offers a solution to this problem. With the cy.session() command, this series of steps can be cached and restored. In Cypress versions before 12, the command was experimental and had to be enabled with the experimentalSessionAndOrigin: true option in your cypress.config.js file. The sequence in the custom command can be wrapped in cy.session() as follows:

Cypress.Commands.add('login', () => {

cy.session('login', () => {

cy.visit('/login')

cy.get('[type=email]')

.type('filip+example@gmail.com')

cy.get('[type=password]')

.type('i<3slovak1a!')

cy.get('[data-cy="logged-user"]')

.should('be.visible')

})

})

As a result, the login sequence in your custom command runs only once per spec file. With the cypress-data-session plugin, you can cache it for the duration of your entire test run.

There is a lot more you can do, but caching your steps is probably the most valuable change, as it can easily shave a couple of minutes off the whole test run.

What are Cypress Test Retries?

Cypress test retries are a built-in feature that automatically re-runs a failing test a configurable number of times before marking it as failed. This helps with flaky tests, that is, failures caused by temporary issues like timing, network delays, or the DOM not being ready rather than by actual bugs.

- Retries apply to failed tests only, not passed ones.

- The number of retry attempts can be configured globally or per test.

- Cypress retries the entire test block (it()), not just individual commands.

- Retries are useful for making CI runs more stable by reducing false failures.

This feature improves test reliability and minimizes unnecessary debugging caused by transient issues.

Best Practices for Handling Test Failures in Cypress

Test automation can dramatically boost efficiency, but only when implemented thoughtfully. Many failures are preventable with the right practices.

Below are key guidelines to improve stability, reduce flakiness, and make debugging faster in Cypress:

1. Define Clear Goals for Automation: Not all tests must be automated. Focus automation on scenarios directly contributing to delivering quality at speed. Set realistic timelines and objectives for automation, ensuring each test adds measurable value.

2. Build Modular and Isolated Test Cases: Each test should validate only one feature or functionality. This approach makes failures easier to diagnose and prevents dependencies between tests.

3. Ensure Test Independence and Order Flexibility: Design tests that can run in any sequence without affecting results. Avoid relying on shared states or test interdependencies to reduce debugging overhead.

4. Use Assertions Strategically: Assertions are critical for verifying test outcomes. With Cypress, assertions help:

- Improve observability and ease of failure analysis.

- Validate that elements, states, and data are present or correct.

- Ensure dynamic content behaves as expected under different conditions.

5. Document Tests Clearly: Maintain accurate documentation of test steps, objectives, and expected results. Well-documented test cases help new team members understand logic and reduce ramp-up time.

6. Handle Flakiness with Retries and Timeouts: Use Cypress’ built-in retries and adjustable timeouts to manage unpredictable issues like network delays or external service failures.

Implement recovery strategies for flaky scenarios to keep CI pipelines stable and efficient.

Testing on Real Devices with BrowserStack Automate

Running Cypress tests on real devices is crucial for uncovering issues that don’t surface in local or simulated environments. Device-specific behaviors, inconsistent browser rendering, and OS-level quirks can often lead to test failures that are hard to replicate on local setups.

BrowserStack Automate provides access to a cloud of real devices and browsers, enabling teams to run Cypress tests in parallel across real user conditions.

- Uncover real-world issues: Detect device-specific bugs that emulators and simulators often miss.

- Improved test reliability: Validate application behavior across actual hardware and browsers.

- Faster execution: Run Cypress tests in parallel across multiple devices to reduce test cycle time.

- Seamless CI/CD integration: Automate testing within your existing development workflow.

- Actionable debugging insights: Access logs, screenshots, and video recordings for quicker root cause analysis.

Conclusion

Understanding and effectively managing test failures in Cypress is crucial for maintaining the reliability of your tests.

By implementing best practices, using real devices for testing, and properly analyzing failures, you can significantly improve test accuracy and speed up your development process.

With Cypress’ powerful features, addressing failures becomes a streamlined process, ensuring smoother releases and better-quality software.