Regression testing is essential for any organisation that is serious about shipping quality code, quickly. But it's tricky to get right.

That's because a regression test suite needs to be comprehensive as well as fast—and balancing the two is like a tightrope walk.

Why should you care about the speed?

Imagine your test suite takes 48 hours to complete. By the time it finishes, the devs and testers have moved on to other (better) things.

In the meantime, if a test fails, they need to drop whatever context they have picked up and come back to the test and the failure. It's a waste of precious human time, and a waste that can be minimized, if not entirely avoided.

Can’t we just drop some of those lengthy tests?

If only it were that simple. You want to make sure not to compromise code coverage.

In this post, we'll take you through some ways you can create regression test suites that are both quick and comprehensive. Some of these techniques are pretty simple; others will need more effort. Nothing to be wary of. As JFK once said, "We choose to go to the Moon in this decade and do the other things, not because they are easy, but because they are hard." And by the end of it, you'll not only get better test suites; you'll also get a new outlook on testing.

Alright, let's get into the meat of the post, which is broken down into three parts. We will start by optimizing elements of a test script, then break test scenarios down into faster, independent tests, and finally look at test suite execution.

By the end of the three parts, you should find yourself with:

- Faster test scripts

- Faster test cases

- Faster test suite execution

...all of which will lead to a more productive and happier QA team—and subsequently, a happier organisation.

Part 1: Faster test scripts

1.1 Use of selectors

Selectors are the bread and butter of all Selenium tests. They let you search for a particular element on the page you are testing. You can then interact with the element by clicking it, sending keys to it, and so on.

There are multiple types of selectors, e.g., find by id, find by class, find by name, etc.

```ruby
require "selenium-webdriver"

driver = Selenium::WebDriver.for :chrome

# navigate to browserstack.com
driver.get("https://www.browserstack.com")

# find element by id
driver.find_element(:id, "menu-item-555")

# find element by class
driver.find_element(:class, "menu-item-555")

# find element by link text
driver.find_element(:link_text, "Live")

# find element by xpath
driver.find_element(:xpath, "//footer/div/div/div[1]/div/ul/li[1]")

driver.quit
```

All of the selectors listed above find the same element, but there are a few differences in their underlying implementation.

Find by ID

- An ID should be unique for a given element on a web page.

- Find by ID relies on the browser's native getElementById lookup (document.getElementById in JavaScript), which is highly optimized in almost all browsers.

Find by XPath

- XPath search is based on traversing the DOM tree and trying to find the element which matches the expression.

- This takes more time than finding by ID. XPath evaluation is also poorly optimized in some browsers, mostly older ones, and especially older versions of Internet Explorer.

- On the other hand, not every element has an ID, while XPath can locate any element.

Comparison

We benchmarked find_element by ID against find_element by XPath, running each lookup 50 times on the same element. All timings below are in seconds; real is the elapsed wall-clock time.

First, we ran it on the Instagram app and found that finding by ID was ~14% faster than XPath.

```
                                   user     system      total        real
Appium find_element by ID      0.030000   0.020000   0.050000 ( 11.363210)
Appium find_element by XPath   0.030000   0.010000   0.040000 ( 13.227601)
```

We did the same on the Wikipedia app. In this case, finding by ID was ~19% faster. (You can find the script here.)

```
                                   user     system      total        real
Appium find_element by ID      0.040000   0.020000   0.060000 ( 12.979250)
Appium find_element by XPath   0.040000   0.020000   0.060000 ( 16.035685)
```
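The benchmark script isn't reproduced in this post. As a minimal sketch of how such a comparison can be timed in Ruby, the block below uses the standard library's Benchmark.realtime. The helper names time_lookups and percent_faster are hypothetical, and the lookup block is where a real find_element call would go. Plugging in the real column from the two runs above reproduces the ~14% and ~19% figures.

```ruby
require "benchmark"

# Hypothetical helper: run a lookup `runs` times and return the total
# wall-clock time in seconds (the "real" column reported above).
def time_lookups(runs: 50, &lookup)
  Benchmark.realtime { runs.times { lookup.call } }
end

# How much faster `fast` is than `slow`, as a percentage of `slow`.
def percent_faster(fast, slow)
  ((slow - fast) / slow * 100).round(1)
end

# Against a live Appium session this might look like:
#   by_id    = time_lookups { driver.find_element(:id, "some-element-id") }
#   by_xpath = time_lookups { driver.find_element(:xpath, "//some/xpath") }
#   puts percent_faster(by_id, by_xpath)

# Using the "real" timings from the two runs above:
puts percent_faster(11.363210, 13.227601) # Instagram: prints 14.1
puts percent_faster(12.979250, 16.035685) # Wikipedia: prints 19.1
```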

Conclusion

XPath can traverse up the DOM (e.g. from child to parent), whereas CSS can only traverse down the DOM (e.g. from parent to child). In modern browsers and mobile devices (for Appium), CSS performs better than XPath.

For faster test runs, your order of preference should be: find by ID first, followed by CSS selectors (e.g. find by class or name), and XPath only as a last resort.
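One way to make that preference explicit is a small helper that picks the first available strategy for an element. This is only a sketch. preferred_locator and the shape of its input hash are hypothetical. The returned [how, what] pair uses the same two-argument form passed to find_element in the examples above.

```ruby
# Hypothetical helper: choose the fastest locator strategy available for an
# element, in the order ID -> CSS -> XPath.
def preferred_locator(attrs)
  if attrs[:id]
    [:id, attrs[:id]]
  elsif attrs[:css]
    [:css, attrs[:css]]
  elsif attrs[:xpath]
    [:xpath, attrs[:xpath]]
  else
    raise ArgumentError, "no locator available for #{attrs.inspect}"
  end
end

# The returned pair can be splatted straight into find_element:
#   driver.find_element(*preferred_locator(id: "menu-item-555"))
```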

1.2 Implicit vs Explicit Waits

Elements in the DOM might load at different times, for example because they are loaded via Ajax or added by JavaScript events. Selenium's 'waits' are used to synchronise your code's state with the browser's state.

Selenium throws a NoSuchElementException if we try to find an element before it has loaded (and an ElementNotVisibleException if we try to interact with an element that is present but not yet visible). To tackle this problem, Selenium has two types of waits.

Implicit waits

An implicit wait tells the driver how long to keep polling the DOM when it tries to find an element that isn't immediately present. It is a global setting and can be set once at the start of the script.

```ruby
driver.manage.timeouts.implicit_wait = 33 # seconds
```

Explicit waits

An explicit wait works similarly, except that you set it up for an individual command: the script waits until a given condition is met (or the timeout expires) before continuing. Explicit waits add flexibility over implicit ones, as you can define different timeouts for different elements.

```ruby
wait = Selenium::WebDriver::Wait.new(:timeout => 10) # seconds
element = wait.until { driver.find_element(:id => "dynamic-element") }
```

Comparison

- An implicit wait is global and applies to every find_element command, whereas an explicit wait can be set for an individual command.

- Explicit waits are handled on the client side of Selenium (e.g. the Selenium language bindings, Watir), whereas implicit waits are handled by the remote part of Selenium (e.g. the individual browser drivers, the Selenium server jar).

Conclusion

- Prefer explicit waits over implicit ones. Implicit timeouts are applied in the remote part of Selenium, while explicit waits run in the local part, so using both together can lead to unexpected behaviour. You can read more here.

- Implicit waits can increase testing time dramatically because they apply globally to every find_element(s) call. For example, every check that an element is absent waits for the full timeout.

- Explicit waits do make your code more verbose, which can affect readability, as the code example above shows.

- Avoid Thread.sleep (sleep in Ruby): it blocks the executing thread for a fixed amount of time, even when the element is already available.
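To see why a polling wait beats a fixed sleep, here is a minimal, dependency-free sketch of what an explicit wait does under the hood. It re-checks a condition at a short interval and returns as soon as the condition holds, or gives up after a timeout. wait_until and WaitTimeout are hypothetical names. In a real suite you would use Selenium::WebDriver::Wait as shown above, which works on the same principle.

```ruby
class WaitTimeout < StandardError; end

def monotonic_now
  Process.clock_gettime(Process::CLOCK_MONOTONIC)
end

# Poll the block every `interval` seconds until it returns a truthy value,
# raising WaitTimeout after `timeout` seconds. Exceptions whose class is in
# `ignore` (e.g. "element not found yet") are treated as "not ready".
def wait_until(timeout: 10, interval: 0.2, ignore: [])
  deadline = monotonic_now + timeout
  loop do
    begin
      result = yield
      return result if result
    rescue StandardError => e
      raise unless ignore.any? { |klass| e.is_a?(klass) }
    end
    raise WaitTimeout, "condition not met within #{timeout}s" if monotonic_now >= deadline
    sleep interval
  end
end

# With Selenium this could wrap a lookup, for example:
#   wait_until(ignore: [Selenium::WebDriver::Error::NoSuchElementError]) do
#     driver.find_element(:id, "dynamic-element")
#   end
```

A fixed sleep always costs its full duration. A polling wait costs at most one extra interval beyond the moment the element appears.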

Once your individual test scripts are performing at their best, we can look at how best to put them together.

Part 2: Faster test cases

According to Software Testing Fundamentals, a good test case is:

- accurate: Does what it's supposed to do, i.e., once the test is done you should be sure that whatever you tested works.

- economical: No unnecessary steps or words.

- traceable: Capable of being traced to requirements.

- repeatable: Can be used to perform the test over and over.

- reusable: Can be reused if necessary.

Atomic test cases fit those criteria well.

Atomic test cases

An atomic test case focuses on one testable unit: a sign-up function, a login function, a payment function, and so on. This way, a scenario is not one monolithic test case but is built from multiple atomic cases that are mutually independent. This has several advantages:

- Building a test suite takes less time—a huge advantage for developing new test flows.

- Failure reports point to exact sources, so fixing those failures becomes easier.

- It's good hygiene: the whole test code base is cleaner and easier to understand and scale.

- New test scenarios can be added without re-testing existing functionalities.

How do you write atomic tests?

Start by moving common steps out of individual test scripts, so that you:

- avoid the trouble of writing them again for other test scenarios

- avoid retesting them once they have been tested for a build

For best results:

- use APIs to set up a scenario's preconditions before running a particular test.

- ensure that no test case depends on the result of another test.

Example test scenario

As part of our own regression suite, we test whether a Live session can be run by a premium BrowserStack user. If such a test were to be done manually, the steps involved would be as follows:

- Navigate to BrowserStack

- Sign Up

- Navigate to Pricing page

- Choose a product and proceed to checkout

- Enter card details and purchase

- Run a Live test

The above scenario can be split into three smaller (atomic) tests:

- Test "SignUp"

- Test "Purchase"

- Test "Run a Live Test"

How does splitting the scenario help us?

SignUp and Purchase could be required for other flows. Testing them independently means they don't have to be re-tested as part of those flows; for all other flows, we can simply spoof SignUp and Purchase via backend APIs (and by setting the appropriate session cookies).

Since we are no longer re-writing or re-running those steps, testing different flows is considerably faster.

Running a single script to test a huge flow leads to debugging nightmares. In the example scenario above, it is difficult to know what broke the automation: SignUp? Checkout? The Live test? If we split the flow, breakages can be localized, fixed and tested again, quickly.
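Here is a minimal, self-contained sketch of the idea. It assumes a backend API that can create users and record purchases. FakeBackend, premium_session and the cookie name in the comment are all hypothetical stand-ins. Each test builds its own state, so the three tests can run in any order.

```ruby
require "securerandom"

# Stand-in for your application's backend API (hypothetical). In a real
# suite these calls would hit internal endpoints for sign-up and purchase.
class FakeBackend
  def initialize
    @users = {}
  end

  def create_user(email)
    token = SecureRandom.hex(8) # pretend session token
    @users[token] = { email: email, plan: :free }
    token
  end

  def purchase(token, plan)
    @users.fetch(token)[:plan] = plan
  end

  def plan_for(token)
    @users.fetch(token)[:plan]
  end
end

# Hypothetical fixture: reach the "premium user" state through the API
# instead of clicking through Sign Up, Pricing and Checkout in the browser.
def premium_session(api)
  token = api.create_user("qa+#{SecureRandom.hex(4)}@example.com")
  api.purchase(token, :premium)
  token
end

# Three atomic tests; each builds its own state, so none depends on another.
ATOMIC_TESTS = {
  "SignUp" => ->(api) { api.plan_for(api.create_user("signup@example.com")) == :free },
  "Purchase" => ->(api) {
    token = api.create_user("purchase@example.com")
    api.purchase(token, :premium)
    api.plan_for(token) == :premium
  },
  "Run a Live Test" => ->(api) {
    token = premium_session(api) # spoofed SignUp + Purchase
    # A browser test would now navigate to the site, set the session cookie,
    # e.g. driver.manage.add_cookie(name: "session", value: token),
    # and start the Live session directly. This line is a stand-in assertion.
    api.plan_for(token) == :premium
  }
}.freeze
```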

Flaky Tests

As you increase the number of tests to improve your coverage, you will find that the regression suite is almost always red. The team will work as hard as it can to fix existing flaky test cases and make them stable, but this won't scale, because people will be committing new tests faster than you can fix the flaky ones.

Flaky tests are worse than no tests. If a test passed before but fails later with everything else the same (environment, Jenkins, application branch, etc.), that test is flaky and should be disabled. This reduces coverage, but it keeps the regression suite stable and maintains the confidence and velocity of the engineering team.
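The "passed before, fails later, with everything else the same" rule can be checked mechanically against your execution history. This is a minimal sketch. It assumes each run is recorded with the application commit and the environment it ran against. TestRun and flaky_tests are hypothetical names. The tests it returns are the candidates for disabling.

```ruby
# One recorded run of a test. `commit` and `env` capture "everything same"
# (application build, environment); these field names are assumptions.
TestRun = Struct.new(:test, :commit, :env, :passed, keyword_init: true)

# Flag a test as flaky if, for the same commit and environment, its history
# contains both a pass and a failure. A failure on a different commit is
# not flagged, since it may be a genuine regression.
def flaky_tests(runs)
  runs.group_by { |r| [r.test, r.commit, r.env] }
      .select { |_key, group| group.map(&:passed).uniq.size > 1 }
      .map { |key, _group| key.first }
      .uniq
end
```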

Another important piece is to have an on-call engineer who keeps an eye on all test runs, including disabled test cases, and makes sure those are actively fixed and enabled again.

Surprisingly, a lot of test flakiness comes from:

- Environment issues (your pre-prod, UAT environment etc.)

- CI server stability (more load than it can take)

- Dependent test cases introduced into your test suite

- Selenium and browser stability

- Application timeouts because of involved network calls

As these tests are now independent of each other, we can get faster results by running them in parallel.

Part 3: Faster test suite execution

Once your individual tests are in a state where you can trust their results, you will have to start thinking about putting them together as part of a larger test suite. Test suites are designed to test a single component end-to-end. This means that all the tests connected to that component become part of a single test suite.

If you managed to keep the tests independent (as described in the previous section), running them serially is unnecessary and wasteful (assuming more than 1,000 tests per regression suite).

So, in this section, we'll look at executing these tests all at once (parallelization) and making sure that you have:

- an infrastructure setup, that is, a sufficient number of browsers and devices on which to run the parallel tests, and

- a CI box (Jenkins, TeamCity, etc.) capable of handling the load of parallel test execution.

3.1 Infrastructure setup

The goal here is to minimize the test suite's execution time. Now that your suite is made up of individual tests that are atomic and independent, running the whole suite at once should take no longer than the slowest test.

Total time of the parallelized test suite ≈ time of the slowest test case (given one browser instance per test)

Every UI test should get its own browser instance. Whether you want to run on your CI box, run your own Selenium grid, or use a Selenium grid on the cloud (like the one we have on BrowserStack) is up to you.
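Here is a toy illustration of that timing argument. One Ruby thread stands in for each test's own browser instance, and sleeps stand in for real UI tests. run_in_parallel and the durations are made up. Three tests of 0.1 s, 0.2 s and 0.3 s finish in roughly 0.3 s when run in parallel, instead of 0.6 s when run serially.

```ruby
# Run independent tests concurrently, one thread per test, and collect
# each test's result (or the exception it raised) by name.
def run_in_parallel(tests)
  threads = tests.map do |name, test|
    Thread.new do
      begin
        [name, test.call]
      rescue StandardError => e
        [name, e] # record the failure instead of aborting the whole run
      end
    end
  end
  threads.map(&:value).to_h
end

SAMPLE_TESTS = {
  "SignUp"          => -> { sleep 0.1; :passed },
  "Purchase"        => -> { sleep 0.2; :passed },
  "Run a Live Test" => -> { sleep 0.3; :passed }
}.freeze

started = Process.clock_gettime(Process::CLOCK_MONOTONIC)
results = run_in_parallel(SAMPLE_TESTS)
elapsed = Process.clock_gettime(Process::CLOCK_MONOTONIC) - started
puts results.inspect
puts format("%.1f s", elapsed) # close to the slowest test, not the 0.6 s sum
```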

- Local Selenium Grid

  - In this case, the Hub and Nodes of the grid are hosted within your network, or even on your local system

  - You can test only on the browsers and devices you can hook up to your nodes

  - As the number of tests in your suite increases, you may need more nodes, depending on how large your nodes are

  - This is generally only viable when the scope of the testing is limited, or if you have a lot of money to burn

- Selenium Grid on the cloud

  - This lets you avoid the maintenance costs of a local grid.

  - You get instant access to a multitude of OS-browser combinations (for example: OS-browser combinations on BrowserStack)

You can run your automated test cases on BrowserStack using Automate.

3.2 Scaling the CI Box

Challenges

- Running tests on hundreds of parallel browsers or devices is not possible from a single CI machine because of its resource limits.

- False alarms: tests will fail if the CI machine is overloaded.

- Aggregating test results takes extra effort when tests are executed on a distributed system.

Solution

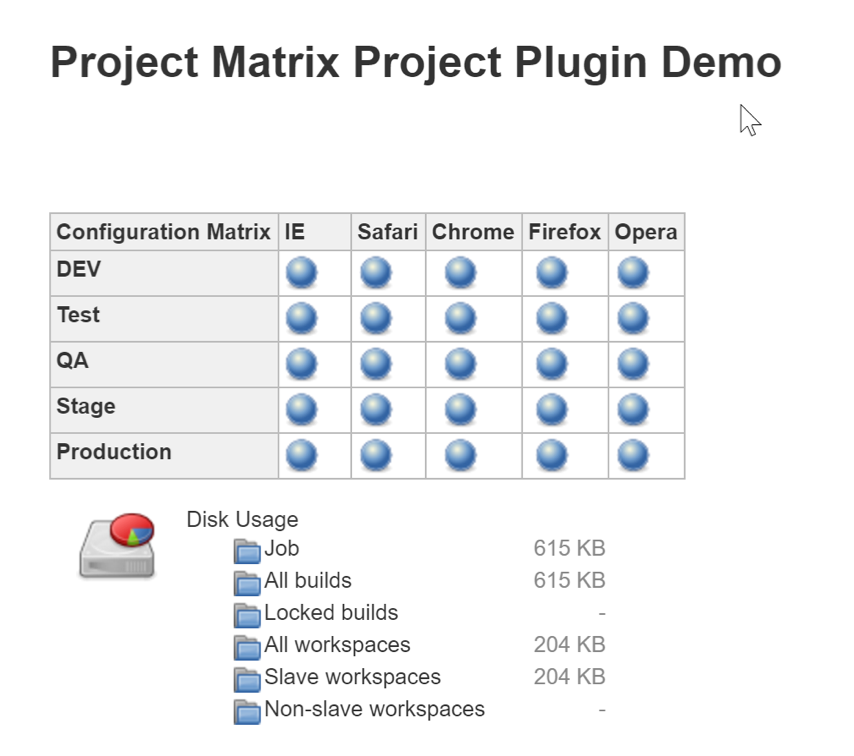

Most CI tools support a master-slave configuration, which allows you to increase your testing capacity.

- Create a master-slave configuration and define the number of executors on each machine. (Recommendation: use on-demand cloud machines as slaves.)

- Then execute your tests (all at once) on different slaves. This prevents the master machine from being overloaded.

Example: If you want to execute your test suite on different browsers, you can:

- Configure a slave for each browser

- Spawn those slaves on demand (through a docker instance or an AWS AMI)

- If a slave goes down, the cases assigned to it should go back into a queue

- Another slave instance should be spawned to start executing those cases
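The queue-and-respawn behaviour in the last two points can be sketched in plain Ruby, with threads standing in for slaves. Everything here is a simulation under stated assumptions. run_with_requeue, SlaveDown and the crash_on parameter are hypothetical, and a real setup would spawn Docker containers or AWS instances rather than threads.

```ruby
class SlaveDown < StandardError; end

# Cases wait in a shared queue; each round "spawns" a batch of slaves that
# drain it in parallel. A slave that goes down puts its current case back in
# the queue and retires; the next round's fresh slaves pick the case up.
# `crash_on` maps a slave name to the case on which it fails (simulation only).
def run_with_requeue(cases, slaves_per_round: 2, crash_on: {})
  queue = Queue.new
  cases.each { |c| queue << c }
  results = Queue.new
  spawned = 0

  until queue.empty?
    names = Array.new(slaves_per_round) { "slave-#{spawned += 1}" }
    threads = names.map do |name|
      Thread.new do
        loop do
          test_case = (queue.pop(true) rescue nil) # non-blocking; nil once empty
          break if test_case.nil?
          begin
            raise SlaveDown if crash_on[name] == test_case
            results << [test_case, name] # "executed" on this slave
          rescue SlaveDown
            queue << test_case # back into the queue for another slave
            break              # this slave is gone
          end
        end
      end
    end
    threads.each(&:join)
  end

  Array.new(results.size) { results.pop }.to_h
end
```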

Divide cases into multiple jobs and run those jobs in parallel on slaves:

- Say you have 10 modules, each containing 100 cases. Create one job per module, which gives you 10 jobs.

- Create a pipeline job in CI that runs those 10 jobs in parallel. The pipeline job runs on the master, while the child jobs run on slaves.
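The module-per-job split above amounts to a simple grouping step before the pipeline triggers its child jobs. This is a minimal sketch. It assumes test case IDs follow a module/case naming convention, which is hypothetical. It also includes a round-robin alternative for when modules differ a lot in size. The pipeline configuration itself is CI-specific and not shown.

```ruby
# One CI job per module: group case IDs by the part before the first "/".
def jobs_by_module(test_cases)
  test_cases.group_by { |id| id.split("/").first }
end

# Alternative for uneven modules: deal cases round-robin into `n` jobs
# of (almost) equal size.
def round_robin_jobs(test_cases, n)
  jobs = Array.new(n) { [] }
  test_cases.each_with_index { |tc, i| jobs[i % n] << tc }
  jobs
end

# 10 modules x 100 cases, as in the example above.
all_cases = (1..10).flat_map do |m|
  (1..100).map { |c| "module_#{m}/case_#{c}" }
end

jobs = jobs_by_module(all_cases)
jobs.each { |mod, cases| puts "#{mod}: #{cases.size} cases" }
```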

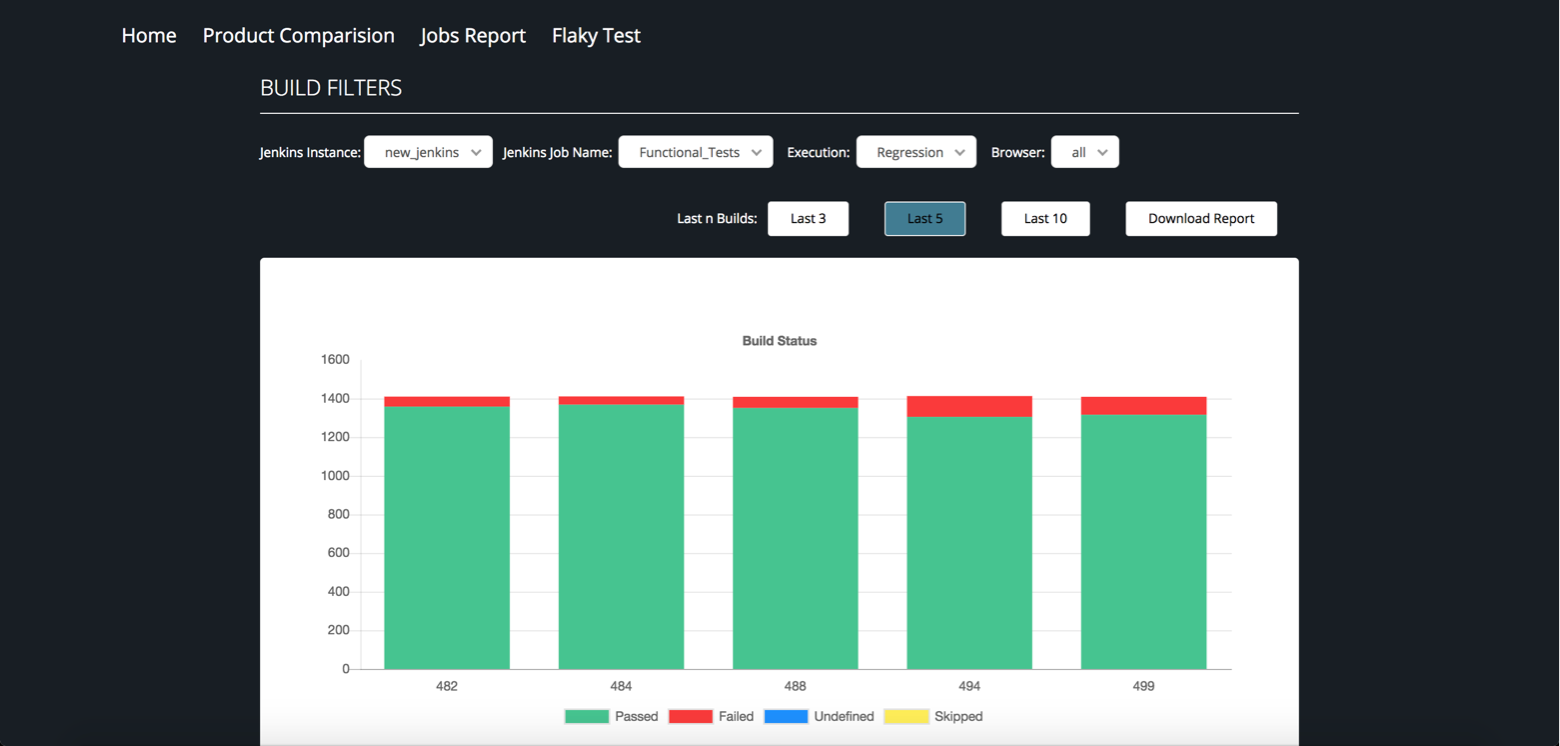

Analyzing test failures quickly

Failures have a major impact on regression and sanity suites because they directly impact deliverables.

To fix failures, you need to analyze and debug them. This needs a good reporting and alerting strategy, consisting of:

- a centralized system to collect data,

- stacktrace for failure cases,

- test/suite execution times,

- alerting with build results,

- count of passed and failed cases, and

- bucketing of failed tests.
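The block below illustrates the last few points: counts, execution time and bucketing. It sketches the aggregation step a central reporting system might perform. The TestResult fields and the choice to bucket by error class name are assumptions, not a prescribed format.

```ruby
# One test outcome as collected from a CI slave (hypothetical fields).
TestResult = Struct.new(:name, :status, :duration, :error, keyword_init: true)

# Aggregate results from all slaves into one summary: pass/fail counts,
# total execution time, and failed tests bucketed by error so that a single
# root cause (say, one broken locator) shows up as one bucket.
def summarize(results)
  failed = results.select { |r| r.status == :failed }
  {
    passed: results.count { |r| r.status == :passed },
    failed: failed.size,
    total_time: results.sum(&:duration).round(2),
    buckets: failed.group_by(&:error).transform_values { |rs| rs.map(&:name) }
  }
end
```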

Tests can also fail without an error in application code. This is called flakiness.

Here are some factors that contribute to flakiness (and how to isolate them).

Environment issues

- Put monitoring and alerting in place for the AUT (application under test) during a run.

- Collect and mine AUT network and application logs.

Flaky test scripts

- Keep the execution history of each test case.

- Rerun failed test cases, either automatically or manually, to filter out flaky failures.
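Here is a minimal sketch of automatic reruns. It assumes each test is a callable that returns true on a pass. rerun_failures and the retry count are hypothetical. A test that fails and then passes on a rerun is reported as flaky rather than silently counted as passed. That way it can still be recorded in its execution history and fixed.

```ruby
# Run each test once; rerun failures up to `retries` times. A test that
# passes on a rerun is reported as flaky, one that never passes as failed.
def rerun_failures(tests, retries: 2)
  report = { passed: [], flaky: [], failed: [] }
  tests.each do |name, test|
    if test.call
      report[:passed] << name
    elsif retries.times.any? { test.call } # stops at the first passing rerun
      report[:flaky] << name
    else
      report[:failed] << name
    end
  end
  report
end
```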

An honest-to-goodness code bug

- Refer to locators by key (from a central locator repository) instead of hardcoding them

- Log failures properly, with test data and other details
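Here is a minimal sketch of both points. Tests look up locators by key in one place, and failure messages carry the locator and test data. LOCATORS, its entries, locator and failure_message are all hypothetical examples, not part of Selenium.

```ruby
# Hypothetical central locator repository: tests refer to elements by key,
# so a changed locator is fixed in one place instead of in every script.
LOCATORS = {
  signup_button: [:id, "signup"],
  email_field:   [:name, "user[email]"],
  plan_card:     [:css, ".pricing-card.premium"]
}.freeze

def locator(key)
  LOCATORS.fetch(key) do
    raise KeyError, "unknown locator key #{key.inspect}; add it to LOCATORS"
  end
end

# Build a failure message that includes the locator and the test data,
# so a failure log is actionable on its own.
def failure_message(test_name, key, test_data, error)
  how, what = locator(key)
  "#{test_name} failed at #{key} (#{how}: #{what}) with data " \
    "#{test_data.inspect}: #{error.class}: #{error.message}"
end

# Usage with Selenium would look like:
#   driver.find_element(*locator(:signup_button))
```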

Selenium or browser stability issues

- Selenium session start failure

- Page load failures

- Selenium stale elements

- Locator changes or product flow changes

CI server issues

- Dependency and code checkout issues

- CI machine memory and CPU issues

- Network connectivity issues

We also recommend centralizing your reporting so you can analyze all cases quickly from one screen.

Endnote

Let’s take a breath and remember that a perfect regression suite doesn’t exist. The solutions listed above are only optimizations. And at the heart of these optimizations is the understanding that completeness, speed and accuracy are key to a successful testing strategy.

Regression test suites keep your application (and your engineering team) sane. But they can turn into a chaotic mess themselves. It's up to you to improve them, continuously, and treat them with the care they deserve.