Before every deployment, QA teams need to validate test automation results. One common way to do this is to manually compare two builds to spot new failures, confirm fixes, and check for regressions. It’s a tedious process that involves jumping between tabs, sifting through test logs, and trying to work out what changed between builds.

We built Test Reporting & Analytics to improve visibility into test executions and help teams validate test runs faster. Now we’re taking that a step further with Build Comparison, a new feature that changes how teams compare builds. By showing at a glance what changed between test runs, Build Comparison helps QA teams detect regressions, anomalies, and unexpected changes faster and more accurately than manual comparison.

Why teams need Build Comparison

Comparing build runs to validate test results is still largely a manual process, and that creates several challenges:

- The process is time-consuming, because users have to keep both build runs open side by side and compare them by hand

- Human error can cause important anomalies or failures to be missed

- Some teams maintain multiple tabs or spreadsheets to track and log changes between test runs, an approach that doesn't scale

Here’s how one of our customers, a Senior QA Lead, describes it: "Comparing builds is crucial for our sign-off process, but manually tracking changes across hundreds of tests is becoming unsustainable as we scale our automation."

Seamless comparisons for faster releases

Build Comparison introduces a comprehensive, side-by-side analysis of test runs that enables teams to:

Compare builds across historical runs

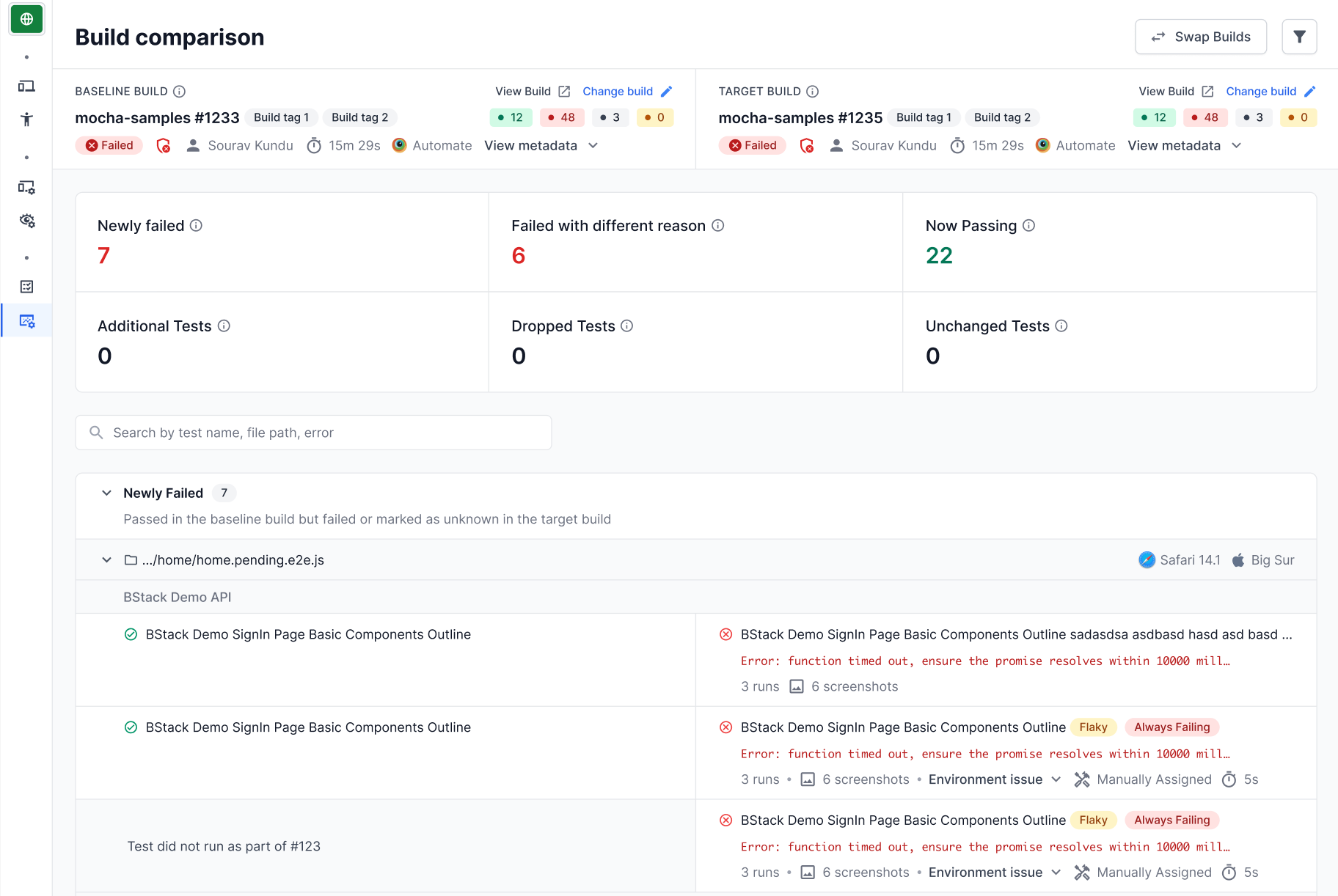

Compare any two build runs with a detailed side-by-side view that instantly highlights changes in test results. Whether you're validating fixes, analyzing the impact of code changes, or investigating performance regressions, you get immediate visibility into what changed between runs.

Analyze test result changes

The comparison view shows which tests newly failed, which tests started passing, and which tests failed in both builds but with a different root cause. This granular visibility helps teams quickly identify patterns and make informed decisions about release readiness.
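To make these three categories concrete, here is a minimal sketch of how results from a baseline build and a current build could be bucketed. It is not BrowserStack's implementation or API: the `TestResult` shape, the `failure_reason` field (standing in for a normalized error signature), and the `compare_builds` function are all hypothetical, and only tests that ran in both builds are compared.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, List, Optional


class Status(Enum):
    PASSED = "passed"
    FAILED = "failed"


@dataclass(frozen=True)
class TestResult:
    status: Status
    # Hypothetical field: a normalized failure reason, e.g. an error signature.
    failure_reason: Optional[str] = None


def compare_builds(baseline: Dict[str, TestResult],
                   current: Dict[str, TestResult]) -> Dict[str, List[str]]:
    """Bucket tests present in both builds by how their outcome changed."""
    changes: Dict[str, List[str]] = {
        "newly_failed": [],
        "newly_passing": [],
        "different_failure_reason": [],
    }
    # Only tests that ran in both builds can be compared directly.
    for name in sorted(baseline.keys() & current.keys()):
        before, after = baseline[name], current[name]
        if before.status is Status.PASSED and after.status is Status.FAILED:
            changes["newly_failed"].append(name)
        elif before.status is Status.FAILED and after.status is Status.PASSED:
            changes["newly_passing"].append(name)
        elif (before.status is Status.FAILED and after.status is Status.FAILED
              and before.failure_reason != after.failure_reason):
            changes["different_failure_reason"].append(name)
    return changes


baseline = {
    "login": TestResult(Status.PASSED),
    "checkout": TestResult(Status.FAILED, "TimeoutError"),
    "search": TestResult(Status.FAILED, "AssertionError"),
}
current = {
    "login": TestResult(Status.FAILED, "ElementNotFound"),
    "checkout": TestResult(Status.PASSED),
    "search": TestResult(Status.FAILED, "TimeoutError"),
}
print(compare_builds(baseline, current))
# {'newly_failed': ['login'], 'newly_passing': ['checkout'], 'different_failure_reason': ['search']}
```

Tests that keep passing, or keep failing for the same reason, land in no bucket, which is what keeps the view focused on changes.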

Debug anomalies faster

Drill into specific test changes with our timeline debugger view, which gives you access to execution logs, screenshots, and historical data so you can understand the root cause of each change.

Automate deployment decisions with Quality Gates

Quality Gates in BrowserStack Test Reporting & Analytics help QA teams automate build verification, automate PR merges and deployments, and set quality standards. They let you define thresholds for flakiness, performance, and failure categories that must be met before code is merged or an automated deployment is triggered.

In Build Comparison, teams can set up custom rules that trigger alerts when specific comparison results are detected. These rules can also be tied to Quality Gates, so a build can pass, fail, or notify users when certain thresholds are met, ensuring that only builds that meet predefined standards move forward.
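The pass/fail/notify behavior can be pictured as a small rule evaluator over comparison counts. This is a minimal sketch under stated assumptions, not how Quality Gates are configured in BrowserStack: the `Rule` fields, the metric names, and `evaluate_gate` are hypothetical, and it assumes any triggered "fail" rule blocks the build while triggered "notify" rules only raise an alert.

```python
from dataclasses import dataclass
from typing import Dict, List, Literal


@dataclass(frozen=True)
class Rule:
    # Hypothetical rule shape: the comparison metric to watch, the maximum
    # allowed count, and the action to take when the count exceeds it.
    metric: str
    max_allowed: int
    action: Literal["fail", "notify"]


def evaluate_gate(counts: Dict[str, int], rules: List[Rule]) -> str:
    """Return "fail", "notify", or "pass" for one build comparison."""
    outcome = "pass"
    for rule in rules:
        if counts.get(rule.metric, 0) > rule.max_allowed:
            if rule.action == "fail":
                return "fail"  # a triggered fail rule blocks the build outright
            outcome = "notify"  # keep checking in case a later rule fails
    return outcome


counts = {"newly_failed": 3, "different_failure_reason": 1}
rules = [
    Rule("newly_failed", 0, "notify"),
    Rule("different_failure_reason", 5, "fail"),
]
print(evaluate_gate(counts, rules))  # notify
```

Returning early on the first triggered "fail" rule reflects the assumption that a single hard failure is enough to stop a build from moving forward.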

Getting started

To start using Build Comparison:

- Navigate to your Test Reporting & Analytics dashboard

- Select any build run you want to analyze

- Click the "Compare" button and choose your baseline build

- Explore the detailed comparison view to identify changes and make informed decisions

Looking ahead

We're already working on automated comparisons, which will let teams compare each build run against preconfigured benchmarks for even faster quality validation.

For more information on how to get started, visit the Build Comparison documentation.