In software development, release velocity is the driving force behind delivering updates faster, enhancing user experience, and staying ahead in the market.

Overview

What is Release Velocity?

Release velocity measures how quickly a development team delivers new features or updates to production, reflecting the speed of software delivery.

What is a Good Release Velocity?

A good release velocity balances speed and quality, ensuring frequent, reliable updates without compromising user experience or introducing bugs.

Benefits of Accelerating Release Velocity

- Faster Time-to-Market

- Improved User Satisfaction

- Increased Team Productivity

- Competitive Advantage

This article will provide a broad outlook on the challenges commonly encountered during product release and how debugging and testing can help accelerate product release cycles.

Challenges to Optimal Product Release Velocity

Achieving optimal product release velocity comes with its own set of challenges. Here are some of them:

1. Infrastructure costs associated with in-house cross browser testing

Take the example of a site that archives something as trivial as gifs for every meme in existence. All a user has to do is search by topic and they’ll get all the memes they want. The company making this site uses Windows-based systems to develop and test it.

Now consider a user who opens the site in Safari on a MacBook Air. Suddenly they find that none of the buttons work and the gifs are scattered all over the page. In this situation, they would either assume the site has a temporary issue and return later, or simply leave for good to find a better alternative.

In either case, the site risks losing a valuable customer because it was not tested thoroughly enough on all the browsers and devices its users rely on.

To Product Release managers, this represents the perfect storm, i.e. a product that works selectively. Though browser vendors follow Open Web Standards, they have their own interpretations of them.

Since they each render HTML, CSS, and JavaScript in unique ways, thoroughly debugging the website or app’s source code is not enough to ensure that it will look and behave as intended on different browsers (or different versions of a single browser).

For cases like this, teams depend on cross-browser testing to help pinpoint browser-specific compatibility errors so that they can be debugged quickly.

Product teams scope their testing with a test specification document (test specs), which outlines the broad essentials: a list of features to test, the browsers, versions, and platforms to test on in order to meet the compatibility benchmark, test scenarios, timelines, and budget.
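As a rough illustration, the browser and platform portion of such a test spec can be expanded into an explicit list of combinations to cover. The sketch below is only one way to do this; the feature names, browser versions, platforms, and the `build_test_matrix` helper are all hypothetical and not taken from any particular tool.

```python
from itertools import product

# Hypothetical spec values, for illustration only.
FEATURES = ["search", "signup"]
BROWSERS = {"Chrome": ["120", "121"], "Safari": ["17"]}
PLATFORMS = ["Windows 11", "macOS Sonoma"]
# Browser/platform pairs that cannot be tested together.
UNSUPPORTED = {("Safari", "Windows 11")}


def build_test_matrix(features, browsers, platforms, unsupported=frozenset()):
    """Expand a test spec into one entry per feature/browser/version/platform."""
    matrix = []
    for feature, (browser, versions), platform in product(
        features, browsers.items(), platforms
    ):
        if (browser, platform) in unsupported:
            continue  # skip pairs that do not exist in the real world
        for version in versions:
            matrix.append(
                {
                    "feature": feature,
                    "browser": browser,
                    "version": version,
                    "platform": platform,
                }
            )
    return matrix


matrix = build_test_matrix(FEATURES, BROWSERS, PLATFORMS, UNSUPPORTED)
print(len(matrix), "combinations to cover")
```

Even this tiny spec already produces ten combinations, which hints at how quickly the matrix grows once real browser and device lists are plugged in.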

Cross browser testing encapsulates a wide range of activities including –

- Base functionality tests

- Visual testing for checking the UI Design

- Accessibility testing to check compliance with Web Content Accessibility Guidelines (WCAG)

- Responsiveness testing

Given that there is a veritable plethora of browsers and browser versions active on the market, creating in-house infrastructure to test a website on all of them can be both time-consuming as well as expensive.

Emulators, simulators, and Virtual Machines (VMs) can be used for this purpose, but these setups are not easily scalable and can be unreliable on virtual mobile platforms (Android and iOS). Moreover, they do not take real user conditions into account while testing, so the chances of missing bugs are higher.

Running cross-browser tests on a cloud-based testing infrastructure lets you run your tests on a remote lab of secure devices and browsers, at a fraction of the cost of setting up your own device lab.

Pro Tip: You don’t have to know how to code to use interactive cross browser testing tools. BrowserStack’s Live, for instance, is also used by marketers and web designers to quickly test landing pages and new designs for cross-browser rendering and responsiveness.


2. Communication gaps between Developers and testers

Another major roadblock to product release velocity is the lack of proper communication between developers and the QA team. A situation where a bug is identified by the latter, and yet cannot be reproduced by the former is best avoided. Tools that help QAs deliver objective information to devs to replicate bugs are critical to prevent this back and forth.


Then there is the case of bugs that the software industry considers “urban legends”. A number of teams, and even whole organizations, believe these bugs exist, but they are very hard to reproduce. In these cases, it is vital to have effective debugging tools that work across system configurations and enable the identification, reporting, and resolution of defects.

BrowserStack’s debugging toolkit makes it possible to easily verify, debug, and fix different aspects of software quality, from UI functionality and usability to performance and network consumption. Its text logs, video logs, console logs, network logs, and telemetry logs make debugging easier, and bugs can be reported clearly via channels like Slack, JIRA, etc.

3. Differences between Staging and Production environments

Sometimes testers and even the organizational QA teams consider staging environments to be sufficiently equipped replicas of a production environment. Though this can be correct if both environments are in full synchronization with each other, it is often difficult to achieve this sync due to a number of reasons, viz –

- The staging cluster is usually much smaller than the production cluster. This means that configuration options for things like queues, databases, load balancers, etc. are going to be different.

If these configurations are stored in a database or a key/value store (such as Consul or ZooKeeper), then auxiliary systems also need to be set up in the staging environment to ensure that it interacts with them in the same way as the production environment does. This leads to increased overhead and, potentially, higher resource costs.

- The other risk arises from inadequate monitoring of the staging environment. The primary caveat here is that the signals monitored in staging can be inaccurate, because it is still a completely different environment from the one used in production.
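One simple way to make the configuration differences described above visible is to compare the two environments' settings key by key. The sketch below assumes the settings have already been exported as flat dictionaries; the keys, values, and the `config_drift` helper are hypothetical and do not reflect any specific store's API.

```python
def config_drift(staging, production):
    """Return {key: (staging_value, production_value)} for every differing key.

    A key that is missing from one environment is reported with None.
    """
    drift = {}
    for key in sorted(staging.keys() | production.keys()):
        staging_value = staging.get(key)
        production_value = production.get(key)
        if staging_value != production_value:
            drift[key] = (staging_value, production_value)
    return drift


# Hypothetical exported settings, for illustration only.
STAGING = {"db.pool_size": 5, "queue.workers": 2, "lb.algorithm": "round_robin"}
PRODUCTION = {
    "db.pool_size": 50,
    "queue.workers": 16,
    "lb.algorithm": "round_robin",
    "cache.ttl_seconds": 300,
}

for key, (staging_value, production_value) in config_drift(STAGING, PRODUCTION).items():
    print(f"{key}: staging={staging_value} production={production_value}")
```

A report like this does not close the gap between the environments, but it makes clear which test results from staging should be treated with caution.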

Mimicking the conditions of a production environment can be very difficult, and some test cases only give realistic results when run against real web traffic. For example, soak testing verifies a service’s stability and reliability over a long period under realistic levels of load and concurrency, and it surfaces memory leaks, GC pause times, CPU utilization, and more. This can only be done by testing on the same real device-browser-OS combinations that the production environment serves.


4. Suboptimal leveraging of test automation suites

Test automation plays a major role in increasing release velocity. If automation testing options are not leveraged optimally, or their scope is curtailed to internal issues, then the entire release cycle risks derailment.

A very apparent example comes from regression testing. Once any change has been pushed into the system, it has to undergo regression testing and the same set of tests has to be executed repeatedly. Automating these tests reduces the time required for execution, and also increases test efficiency.

Pro Tip: Testing on a cloud-based grid is easier since updating and maintenance would be taken care of by the organization offering the grid. For example, BrowserStack offers a cloud Selenium grid that is connected to 3500+ real devices and browsers for testing. Users simply have to sign up and start testing on a robust Selenium grid that is constantly updated for the best possible results.

However, to ensure that the automation test suite provides optimal test coverage, it is essential to avoid flaky tests. A flaky test is one with a non-deterministic outcome: when executed, it sometimes passes and sometimes fails. Because the results are inconsistent even in the same environment, the failure is hard to reproduce, which makes it harder to pinpoint an actual error in the code.
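A common way to spot such tests is simply to run each one several times in the same environment and check whether the outcome changes. The following is a minimal sketch of that idea, not a feature of any specific framework; `classify_test` and the two sample tests are hypothetical names used only for illustration.

```python
def classify_test(test_fn, runs=10):
    """Run test_fn repeatedly and label it 'pass', 'fail', or 'flaky'."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    outcomes = set()
    for _ in range(runs):
        try:
            test_fn()
            outcomes.add("pass")
        except AssertionError:
            outcomes.add("fail")
    # Mixed outcomes in an unchanged environment indicate a flaky test.
    if len(outcomes) == 2:
        return "flaky"
    return outcomes.pop()


def stable_test():
    assert 1 + 1 == 2


def make_alternating_test():
    """Build a test that fails on every second call, to simulate flakiness."""
    calls = {"count": 0}

    def test():
        calls["count"] += 1
        assert calls["count"] % 2 == 1

    return test


print(classify_test(stable_test))
print(classify_test(make_alternating_test()))
```

Tests labelled flaky can then be quarantined or fixed before they erode confidence in the rest of the suite.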


5. Time costs from Debugging issues in production

As discussed above, some software defects arise from non-code issues. These are the edge cases that depend on user traffic or device configurations, and they are almost impossible to identify and replicate unless tested meticulously in production.

Even if a company has invested the resources to create a fully-functional test lab for production environments, it may still end up taking a lot of time to iron out these defects if the proper tools are not used.

Now that the challenges to Product Release Velocity have been described, the rest of the article will focus on the means to accelerate it by overcoming these issues and outline some best practices to follow in order to achieve the same.

How to accelerate Product Release Velocity

1. Reduce the time between development and release

Gartner’s Product Manager Survey identified “delays in product development (bugs, errors, feature creep), failure to meet customer requirements, product quality” as some of the critical factors hindering on-time product release by as much as 45%.

In order to overcome these issues, the QA team must endeavor to reduce the time between development and release. This can be achieved in a number of steps –

- Optimize automation test suites: Test automation has gone from being an elitist option deployed by isolated teams with exceptional skills to an industry buzzword. Every software development team takes automation into serious consideration. In fact, in most cases, test automation isn’t a negotiable option. Test automation can work wonders for certain types of tests like Regression Testing, Data-Driven Testing, Performance Testing, and Functional Testing.

Before jumping into automation testing, it is important to have a well-defined automation strategy. Some key considerations here are:

- The nature of the software – Some software is web-based and some is mobile-based. For the former, a tool like Selenium can be used, whereas for the latter Appium can be more suitable.

- Programmer Experience – It is important to choose frameworks that match testers’ comfort and experience. Some of the most popular frameworks support commonly used languages like Java, JavaScript, Ruby, C#, etc.

- Selecting the Right Test Grid – The test grid refers to the infrastructure that all automated tests will run on. It comprises a series of devices, browsers, and operating systems (multiple versions) that software is to be tested on. It is always best to automate tests on real devices and browsers. This will ensure that software is being tested in real user conditions.

Something else to decide is whether the test grid should be hosted on-premise or on the cloud. Keep in mind that on-premise infrastructure is almost always expensive to set up and maintain. It requires keeping track of, updating, and maintaining new devices, browsers, and operating systems.

This is a challenge since multiple versions of each are released every month. Conversely, testing on a cloud-based grid is easier, since updating and maintenance would be taken care of by the organization offering the grid.

- Leverage the power of parallel testing: The ideal test grid should enable parallel testing. This means that testers should be able to run multiple tests on multiple devices simultaneously. This cuts down on test time and delivers results within shorter deadlines.

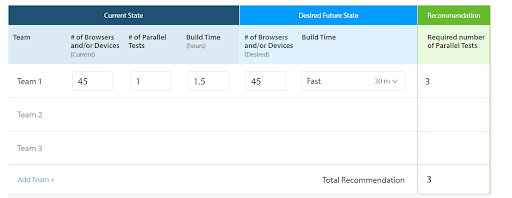

To better understand how parallel testing boosts standard automation testing capabilities, take a simple example: an automated functional test of a signup form. If this test is performed for 45 different Browser/OS configurations, with each test taking an average of 2 minutes, the total run time would be 90 mins, or 1.5 hrs, when the tests run successfully in sequence.

Now imagine running 3 tests in parallel: the total execution time would come down to 30 mins.

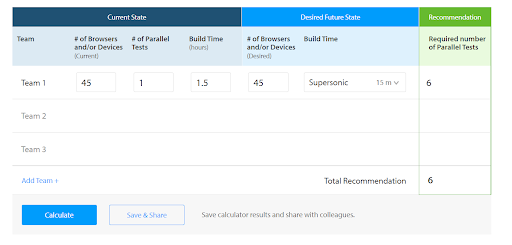

And with 6 parallels, it would be reduced even further to about 15 mins, a far cry from the original 90 mins.
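The arithmetic behind these figures can be sketched in a few lines. The function names below are hypothetical. The first function is the idealized estimate used in the example above, and the second assumes tests run in whole batches of equal length, which gives a slightly higher figure when the number of configurations is not a multiple of the number of parallels.

```python
import math


def ideal_run_minutes(num_configs, minutes_per_test, parallels):
    """Idealized estimate: total sequential time divided by the parallel count."""
    return num_configs * minutes_per_test / parallels


def batched_run_minutes(num_configs, minutes_per_test, parallels):
    """Estimate assuming tests run in whole batches of `parallels` tests each."""
    batches = math.ceil(num_configs / parallels)
    return batches * minutes_per_test


for parallels in (1, 3, 6):
    print(
        parallels,
        ideal_run_minutes(45, 2, parallels),
        batched_run_minutes(45, 2, parallels),
    )
```

With 6 parallels the idealized estimate is 15 minutes, while the batched estimate is 16 minutes, because 45 configurations need 8 batches of up to 6 tests.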


The number of parallels required to optimize test times depends on the following key factors:

- The current number of browsers and/or devices on which the scenario needs to be tested

- Number of parallel tests currently being run (this is 1 if only sequential tests are being run)

- Current Build Time

- The desired number of browsers and/or devices on which the scenario needs to be tested, and

- Desired Build Time

For more complex scenarios involving a shift in any of these parameters, it is best to use a Parallel Test Calculator to understand the number of parallel sessions required to achieve test coverage and build execution time goals.
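To give a feel for how the five factors listed above interact, here is a rough back-of-the-envelope estimate. It assumes build time grows linearly with the number of browsers/devices and shrinks in proportion to the number of parallels. This is a simplifying assumption for illustration, not the formula used by any particular calculator, and `estimate_required_parallels` is a hypothetical name.

```python
def estimate_required_parallels(
    current_configs,
    current_parallels,
    current_build_minutes,
    desired_configs,
    desired_build_minutes,
):
    """Estimate the parallels needed to hit a desired build time.

    Assumes build time scales linearly with configurations and inversely
    with parallels. Uses integer ceiling division to avoid rounding issues.
    """
    numerator = current_parallels * desired_configs * current_build_minutes
    denominator = current_configs * desired_build_minutes
    return max(1, -(-numerator // denominator))


# Signup-form example: 45 configurations, run sequentially in 90 minutes.
print(estimate_required_parallels(45, 1, 90, 45, 15))
# Growing coverage to 60 configurations with a 30-minute target.
print(estimate_required_parallels(45, 1, 90, 60, 30))
```

Real builds rarely scale perfectly linearly, so an estimate like this is best treated as a starting point to validate against actual build times.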

Pro Tip: With Parallel Testing, BrowserStack Automate and App Automate allow you to run multiple tests in parallel across various browsers/devices and OS combinations. In this way, more tests can be run at a time, thereby decreasing the overall time spent on testing. Faster build times mean faster releases and less time spent waiting for builds to complete.

- Automate Visual Testing: Visual Testing, sometimes called visual UI testing, verifies that the software user interface (UI) appears correctly to all users. Essentially visual tests –

- Check that each element on a web page appears in the right shape, size, and position

- Check that these elements appear and function perfectly on a variety of devices and browsers.

Visual testing fills gaps left by functional testing with regard to the visual side of things. In tandem with functional tests, it ensures that not only does the software work perfectly, but it also looks visually on point.

Visual tests generate, analyze, and compare browser snapshots to detect if any pixels have changed.
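The core comparison step can be pictured as follows. This is a deliberately simplified sketch that models each snapshot as a grid of RGB tuples and reports which pixels differ. Real visual testing tools work on actual image files and typically apply more sophisticated comparison, so `diff_snapshots` and the sample data are purely illustrative.

```python
def diff_snapshots(baseline, candidate):
    """Return the (row, column) positions of pixels that differ.

    Each snapshot is a list of rows, and each row is a list of RGB tuples.
    """
    same_shape = len(baseline) == len(candidate) and all(
        len(base_row) == len(cand_row)
        for base_row, cand_row in zip(baseline, candidate)
    )
    if not same_shape:
        raise ValueError("snapshots must have the same dimensions")
    return [
        (row, col)
        for row, (base_row, cand_row) in enumerate(zip(baseline, candidate))
        for col, (base_px, cand_px) in enumerate(zip(base_row, cand_row))
        if base_px != cand_px
    ]


WHITE, RED = (255, 255, 255), (255, 0, 0)
baseline = [[WHITE, WHITE], [WHITE, WHITE]]
candidate = [[WHITE, WHITE], [RED, WHITE]]

changed = diff_snapshots(baseline, candidate)
print("visual change detected" if changed else "no visual change", changed)
```

An empty result means the new snapshot matches the baseline, while any reported positions point to the region a reviewer should look at.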

Visual testing requires a test runner to write and run tests. Test runners enable testers or developers to create code that reproduces user actions. Each test carries assertions defining a condition that will either pass or fail.

Once tests are written, use a tool like Selenium or Cypress to interact with the browser. They support visual tests and allow for the generation of screenshots of web pages.

Finally, there must be a tool for managing the testing process. Percy by BrowserStack is one of the best-known tools for automating visual testing. It captures screenshots, compares them against the baseline images, and highlights visual changes. With increased visual coverage, teams can deploy code changes with confidence with every commit.

Percy provides the following advantages for accelerating product release:

- Testers can increase visual coverage across the entire UI and eliminate the risk of shipping visual bugs

- They can avoid false positives and get quick, deterministic results with reliable regression testing.

- They can release software faster with DOM snapshotting and advanced parallelization capabilities designed to execute complex test suites at scale.

2. Use Debugging Tools to share and resolve bugs across teams

One of the challenges to the product release discussed above was the oft-recurring issue of testers and developers not being on the same page due to the lack of clarity in detailing and reproducing bugs uncovered during testing.

The best way to detect all bugs is to run software on real devices and browsers. Websites should be covered by both manual testing and automation testing. In the absence of an in-house device lab, the best option is a cloud-based testing service that provides real devices, browsers, and operating systems.

BrowserStack offers real devices for mobile app testing and automated app testing. Simply upload the app to the required device-OS combination and check to see how it functions in the real world.

BrowserStack offers a wide range of debugging tools that make it easy to share and resolve bugs.

The range of debugging tools offered by BrowserStack’s mobile app and web testing products are as follows:

- Live: Pre-installed developer tools on all remote desktop browsers and Chrome developer tools on real mobile devices (exclusive on BrowserStack)

- Automate: Screenshots, Video Recording, Video-Log Sync, Text Logs, Network Logs, Selenium Logs, Console Logs

- App Live: Real-time Device Logs from Logcat or Console

- App Automate: Screenshots, Video Recording, Video-Log Sync, Text Logs, Network Logs, Appium Logs, Device Logs, App Profiling

3. Adapt Real-Device Cloud-Based Test Tools for Production Testing

Using a staging environment as a substitute for production is suboptimal. Staging and production clusters differ, and maintaining parity adds overhead.

Moreover, certain defects tend to slip through the cracks because of non-code issues that can only be replicated under real user load in a real environment, as in soak testing.

It is impossible to gauge how the software works without placing it in a real-world context. Emulators and simulators cannot replicate real user conditions and therefore should not be considered a viable option for testing.

BrowserStack offers 3500+ real browsers and devices for both manual interactive testing and automated Selenium testing. They are hosted on the cloud which allows them to be accessible from anywhere at any time. One simply has to sign up, log in, and start testing for free.

Conclusion

Timely and effective Product Release is key to the operations of any organization. Delays in release can cost a go-to-market advantage and allow competitors to corner market share, while a buggy product can lead to customer dissatisfaction and, ultimately, customer loss.

Thus, following a set of practices that allows for accelerated product release while maintaining product quality is imperative to stay ahead of the curve and keep the customers happy.