Effective automated testing starts with clear, structured test cases. These define what to test, how to test it, and what outcome to expect, leaving no room for guesswork. Unlike manual testing, automation demands precision. A well-crafted test case ensures consistent, repeatable results and reduces maintenance overhead.

Overview

Automated test cases are predefined sets of steps and validations executed by testing tools to verify that software behaves as expected, without manual intervention. They form the core of reliable, scalable test automation.

How to Write Test Cases in Automation Testing

- Setup Conditions: Define the required application state, including browser launch, environment, and login steps

- Sync & Waits: Add wait conditions to ensure the application is ready before interacting with elements

- Test Steps: Specify input data, navigation steps, and how to reset the app state after execution

- Comments: Use inline comments to clarify logic and intent for better readability

- Debug Hooks: Insert debug points or logs to assist in troubleshooting flaky tests

- Result Logging: Include output statements to capture and store test results effectively

Benefits of Test Cases in Automated Testing:

- Ensure consistency across repeated test runs

- Reduce ambiguity by clearly defining inputs and expected outcomes

- Improve the maintainability of test scripts

- Support faster debugging with traceable test logic

- Enable easier onboarding for new team members

- Help prioritize and organize test coverage systematically

This article explores how to write test cases for automation testing.

Understanding Test Automation

Test automation involves executing tests automatically, managing test data, and using the results to improve software quality. Although it is a quality assurance practice, it succeeds only with commitment from the entire software production team. From business analysts to developers and DevOps engineers, getting the most out of test automation requires everyone's involvement.

It reduces the pressure on manual testers and lets them focus on higher-value tasks such as exploratory testing and reviewing test results. Automation helps maximize test coverage and effectiveness, shorten testing cycles, and improve the accuracy of results.

Before developing automation test cases, decide which tests are worth automating. Good candidates are:

- Tests that need to be executed across multiple test data sets

- Tests that give maximum test coverage for complex and end-to-end functionalities

- Tests that need to be executed across several hardware or software platforms and on multiple environments

- Tests that consume a lot of time and effort to execute manually
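The first factor above, running one test against multiple data sets, is commonly handled with data-driven testing: the test logic is written once and driven by a table of inputs and expected outcomes. Here is a minimal, framework-free sketch in Java. The `isValidUsername` rule and its data rows are hypothetical stand-ins for real application behavior; in a TestNG suite, the same table would typically be supplied through a `@DataProvider` method.

```java
import java.util.ArrayList;
import java.util.List;

public class DataDrivenSketch {

    // Hypothetical rule under test (illustration only):
    // 3 to 15 characters, letters, digits, or underscores.
    static boolean isValidUsername(String name) {
        return name != null && name.matches("[A-Za-z0-9_]{3,15}");
    }

    // Each row pairs an input with the outcome the test case expects.
    static final Object[][] TEST_DATA = {
        {"alice", true},
        {"bo", false},                       // too short
        {"user_42", true},
        {"name with spaces", false},         // invalid characters
        {"a_very_long_username_123", false}  // too long
    };

    // Runs the same check against every data row and collects one message per mismatch.
    static List<String> runAll() {
        List<String> failures = new ArrayList<>();
        for (Object[] row : TEST_DATA) {
            String input = (String) row[0];
            boolean expected = (Boolean) row[1];
            boolean actual = isValidUsername(input);
            if (actual != expected) {
                failures.add("input=" + input + " expected=" + expected + " actual=" + actual);
            }
        }
        return failures;
    }

    public static void main(String[] args) {
        List<String> failures = runAll();
        System.out.println(failures.isEmpty() ? "All data sets passed" : "Failures: " + failures);
    }
}
```

Adding a new data set means adding one row to the table, with no change to the test logic.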


Which tests should be automated?

Here are the different types of tests that should be automated for a faster and more efficient testing cycle:

Role of Test Cases in Automated Testing

Test cases play a crucial role in automated testing. They are the building blocks for designing, executing, and validating automated tests. Here are the key functions test cases fulfill in automated testing:

- Test Coverage: Test cases define the specific scenarios, inputs, and expected outputs that must be tested.

- Test Script Creation: Test cases serve as a blueprint for creating automated test scripts. Each test case is typically mapped to one or more test scripts.

- Test Execution: Automated tests are executed based on the instructions provided by test cases. Test cases define the sequence of steps performed during test execution.

- Test Maintenance: When the software changes due to bug fixes, new features, or updates, the existing test cases must be updated accordingly. Test cases clearly show what needs to be modified or added.

- Test Result Validation: After test execution, automated tests compare the actual and expected results defined in the test cases. This comparison helps identify discrepancies, errors, or bugs in the software under test, allowing the test team to investigate and address any issues.

- Regression Testing: By executing the same test cases repeatedly, automated tests can quickly catch regressions introduced by new code changes or updates.

- Test Reporting and Analysis: Test cases provide a structured framework for test reporting and analyzing test results. By associating test cases with specific test outcomes, defects, or issues, it becomes easier to track the overall progress.
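Result validation and regression testing both come down to re-running the same test cases and comparing actual results with expected ones. The framework-free sketch below illustrates this under stated assumptions: each test case holds an ID, an input, and an expected output, and the same suite runs against two versions of a hypothetical discount function, where version 2 contains a deliberately introduced bug.

```java
import java.util.List;
import java.util.function.IntUnaryOperator;

public class RegressionSketch {

    // A test case: an ID, an input, and the expected output.
    record TestCase(String id, int input, int expected) {}

    // Hypothetical behavior under test: 10% off orders of 100 or more.
    static final IntUnaryOperator V1 = price -> price >= 100 ? price * 90 / 100 : price;

    // Hypothetical "new release" that accidentally changed the threshold from >= to >.
    static final IntUnaryOperator V2 = price -> price > 100 ? price * 90 / 100 : price;

    static final List<TestCase> SUITE = List.of(
        new TestCase("TC-01", 50, 50),
        new TestCase("TC-02", 100, 90),
        new TestCase("TC-03", 200, 180)
    );

    // Runs every test case against the given implementation and
    // returns the IDs whose actual result differs from the expected one.
    static List<String> failedIds(IntUnaryOperator impl) {
        return SUITE.stream()
            .filter(tc -> impl.applyAsInt(tc.input()) != tc.expected())
            .map(TestCase::id)
            .toList();
    }

    public static void main(String[] args) {
        System.out.println("v1 failures: " + failedIds(V1));
        System.out.println("v2 failures: " + failedIds(V2));
    }
}
```

Running `main` prints `v1 failures: []` followed by `v2 failures: [TC-02]`. The boundary case TC-02 is what catches the changed threshold, which is why test cases that pin down edge values pay off when the suite is re-run after every change.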

How to write Test Cases in Automation Testing?

Writing automated test cases is a more structured and detailed process than creating manual ones. Automation requires breaking down workflows into smaller, precise actions, ensuring that each step is clearly defined for repeatable execution.

While templates may vary across automation tools, effective automated test cases generally include the following components:

- Specifications: Define the required application state, including environment setup, browser launch, and login steps

- Sync & Waits: Add wait conditions to ensure the application is ready before interactions

- Test Steps: Detail input data, navigation flows, and how to reset the application after test execution

- Comments: Document the reasoning behind key steps to improve maintainability

- Debugging Statements: Insert hooks or logs to troubleshoot flaky behavior during execution

- Output Statements: Specify how and where to capture test results for reporting
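The Sync & Waits component is usually implemented as a polling wait: check a condition repeatedly until it holds or a timeout expires, rather than sleeping for a fixed time. Selenium ships this as `WebDriverWait`; the sketch below shows only the underlying mechanism in plain Java. The `waitUntil` helper and the simulated "page ready" condition are hypothetical names for illustration.

```java
import java.time.Duration;
import java.util.function.BooleanSupplier;

public class PollingWait {

    // Hypothetical helper: polls `condition` every `interval` until it returns true
    // or `timeout` elapses. Returns true if the condition was met in time, false otherwise.
    static boolean waitUntil(BooleanSupplier condition, Duration timeout, Duration interval) {
        long deadline = System.nanoTime() + timeout.toNanos();
        while (true) {
            if (condition.getAsBoolean()) {
                return true;
            }
            if (System.nanoTime() >= deadline) {
                return false; // timed out: report failure instead of hanging the test
            }
            try {
                Thread.sleep(interval.toMillis());
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // preserve the interrupt and stop waiting
                return false;
            }
        }
    }

    public static void main(String[] args) {
        // Simulated page that becomes "ready" about 300 ms after the test starts.
        long readyAt = System.currentTimeMillis() + 300;
        boolean ready = waitUntil(() -> System.currentTimeMillis() >= readyAt,
                Duration.ofSeconds(2), Duration.ofMillis(50));
        System.out.println("Page ready: " + ready);

        // A condition that never holds times out after roughly 200 ms.
        boolean never = waitUntil(() -> false, Duration.ofMillis(200), Duration.ofMillis(50));
        System.out.println("Impossible condition met: " + never);
    }
}
```

Because the wait returns as soon as the condition holds, a fast page costs almost nothing, while a slow one still gets the full timeout. A fixed sleep can only trade one of those against the other.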

While both manual and automated tests aim to validate functionality, their development approaches differ significantly. Automated tests demand greater granularity and structure to ensure reliability and scalability.


Creating a Test Case for an Automated Test

To understand how to create a test case for test automation, consider a scenario in which the user navigates to the google.com website in the Chrome browser.

- Test Scenario: Verify that the user can navigate to google.com in the Chrome browser

- Test Steps:

  - Launch the Chrome browser.

  - Navigate to the google.com URL.

- Browser: Chrome v86

- Test Data: Google URL (https://www.google.com/)

- Expected Result: After the Chrome browser launches and navigates to the URL, the google.com home page loads.

- Actual Result: As expected

- Test Status (Pass/Fail): Pass
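Since automated test cases feed directly into scripts and reports, it can help to keep fields like these in a structured form instead of free text. The sketch below is one hypothetical way to do that in Java: a `TestCaseRecord` type (an illustrative name, not part of any tool) holds the fields of the Google example, and the status is derived by comparing expected and actual results instead of being entered by hand.

```java
import java.util.List;

public class TestCaseModel {

    // Hypothetical structure whose fields mirror the test case template in the example.
    record TestCaseRecord(String scenario, List<String> steps, String browser,
                          String testData, String expectedResult, String actualResult) {

        // Status is computed, so it can never disagree with the recorded results.
        String status() {
            return expectedResult.equals(actualResult) ? "Pass" : "Fail";
        }
    }

    public static void main(String[] args) {
        TestCaseRecord googleNavigation = new TestCaseRecord(
            "Verify that the user can navigate to google.com in Chrome",
            List.of("Launch the Chrome browser", "Navigate to the google.com URL"),
            "Chrome 86",
            "https://www.google.com/",
            "google.com home page loads",
            "google.com home page loads");

        System.out.println(googleNavigation.scenario() + " -> " + googleNavigation.status());
    }
}
```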


Converting a Test Case to a Test Script for Automated Tests

To turn the test case above into a test script, use Selenium WebDriver to launch a Chrome browser instance and navigate to google.com, and structure the script with TestNG annotations. The test steps themselves go in the method marked with the @Test annotation.

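To use TestNG annotations, either add the TestNG library to your project's build path or add the Maven dependency to the dependencies section of your pom.xml file. The script also uses Selenium WebDriver, so it needs the selenium-java dependency as well:

```xml
<dependency>
    <groupId>org.testng</groupId>
    <artifactId>testng</artifactId>
    <version>7.5</version>
    <scope>test</scope>
</dependency>
<dependency>
    <groupId>org.seleniumhq.selenium</groupId>
    <artifactId>selenium-java</artifactId>
    <version>4.21.0</version>
</dependency>
```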

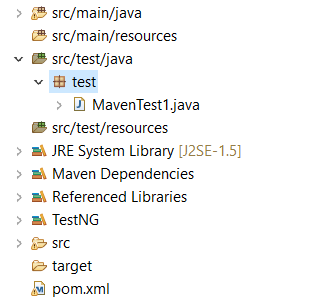

You need to create the test file under src/test/java, as shown below:

package test;

import java.util.concurrent.TimeUnit;

import org.openqa.selenium.WebDriver;

import org.openqa.selenium.chrome.ChromeDriver;

import org.testng.annotations.AfterTest;

import org.testng.annotations.BeforeTest;

import org.testng.annotations.Test;

public class MavenTest1{

public String baseUrl = "https://www.google.com/";

String driverPath = "C:\\Softwares\\chromedriver_win32 (1)\\chromedriver.exe";

public WebDriver driver ;

@Test

public void test() {

// set the system property for Chrome driver

System.setProperty("webdriver.chrome.driver", driverPath);

// Create driver object for CHROME browser

driver = new ChromeDriver();

driver.manage().timeouts().implicitlyWait(20, TimeUnit.SECONDS);

driver.manage().window().maximize();

driver.get(baseUrl);

// get the current URL of the page

String URL= driver.getCurrentUrl();

System.out.print(URL);

//get the title of the page

String title = driver.getTitle();

System.out.println(title);

}

@BeforeTest

public void beforeTest() {

System.out.println("before test");

}

@AfterTest

public void afterTest() {

driver.quit();

System.out.println("after test");

}

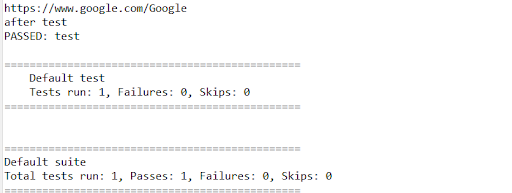

}On executing the test case as TestNG test, it will launch the browser, navigate to google.com and give the following output on your IDE Console as seen below:

- To scale the test automation suite, teams can run tests on a cloud-based platform such as BrowserStack, which offers a cloud Selenium Grid of 3500+ real browsers and devices.

- Testing on a cloud grid lets QA teams test under real user conditions, which improves test accuracy and makes debugging easier.

- You can also utilize multiple features for more comprehensive testing scenarios – Geolocation Testing, Network Simulation, Testing on dev environments.

- With BrowserStack Test Management, you can manage manual and automated test cases in a central repository and speed up testing by organizing them with folders and tags.

- Create and track manual & automated test runs. Upload test results either from Test Observability or JUnit-XML/BDD-JSON reports.

Conclusion

Creating test cases for automation is not just about translating manual tests into code—it’s about designing clear, structured, and maintainable scripts that can run reliably at scale.

Well-written test cases reduce flakiness, improve test coverage, and make debugging easier. By focusing on precision, synchronization, and clarity, teams can build a robust automation suite that consistently validates critical functionality and accelerates the release cycle.