Automated test runs

An automated test run records test results from the report that software tools generate when they execute automated test cases, typically in a repetitive and data-intensive manner. Automated tests are triggered manually or scheduled to run on events and actions. The results of the linked test cases are updated in the BrowserStack Test Management tool.

Supported tools for automation test runs

Test Management allows integrating automation test run results from:

- JUnit-XML or BDD-JSON based report upload using curl commands.
- BrowserStack Test Reporting & Analytics using BrowserStack SDK

JUnit-XML or BDD-JSON based report upload

JUnit-XML or BDD-JSON based report upload in Test Management is supported with multiple frameworks.

Generate and import test results using TestNG

Generate and import test results using WebdriverIO

Generate and import test results using Nightwatch JS

Generate and import test results using Appium

Generate and import test results using Cypress

Generate and import test results using Mocha

Generate and import test results using PyTest

Generate and import test results using Playwright

Generate and import test results using Espresso

Generate and import test results using XCUITest

Generate and import test results using Cucumber

You can use CLI commands to upload these reports and see the results automatically in the Test Runs section.
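As a rough illustration of a CLI-based upload, the sketch below assembles a curl command for a multipart report upload. The endpoint URL is a placeholder, and the form field names (`project_name`, `test_run_name`, `file_path`) and the helper `build_upload_command` are assumptions made for this example; check the Test Management import reference for the actual endpoint and fields before using it.

```python
import shlex
from pathlib import Path


def build_upload_command(endpoint, api_token, project_name, run_name, report_path):
    """Return the curl argument list for a multipart report upload."""
    suffix = Path(report_path).suffix.lower()
    if suffix not in {".xml", ".json"}:
        raise ValueError(f"expected a JUnit-XML or BDD-JSON report, got {report_path}")
    return [
        "curl", "-X", "POST", endpoint,
        "-u", api_token,                        # credentials, e.g. "username:access_key"
        "-F", f"project_name={project_name}",   # assumed form field name
        "-F", f"test_run_name={run_name}",      # assumed form field name
        "-F", f"file_path=@{report_path}",      # '@' tells curl to attach the file
    ]


if __name__ == "__main__":
    cmd = build_upload_command(
        endpoint="https://example.invalid/import/junit",  # placeholder URL
        api_token="USERNAME:ACCESS_KEY",
        project_name="Demo project",
        run_name="Nightly regression",
        report_path="reports/junit.xml",
    )
    # Print a shell-quoted command that can be reviewed before it is run.
    print(shlex.join(cmd))
```

Building the argument list separately from running it keeps the credentials and report path easy to review, and the list can be passed to `subprocess.run` once the endpoint and field names are confirmed.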

Test Reporting & Analytics

BrowserStack Test Reporting & Analytics currently supports multiple automation test frameworks including:

Integrate E2E WebdriverIO tests running locally or on any cloud service.

Integrate unit or E2E TestNG tests running locally or on any cloud service.

Integrate unit or E2E Cypress tests running locally or on any cloud service.

Integrate unit or end-to-end Jest tests running locally or on any cloud service.

Integrate unit or E2E CodeceptJS tests running locally or on any cloud service.

Integrate unit or E2E Mocha tests running locally or on any cloud service.

Integrate unit or E2E Cucumber JS tests running locally or on any cloud service.

Integrate unit, API, or E2E Playwright tests running locally or on any cloud service.

Integrate unit or E2E Nightwatch.js tests running locally or on any cloud service.

Integrate unit or E2E Serenity tests running locally or on any cloud service.

Integrate unit or E2E JUnit5 tests running locally or on any cloud service.

Integrate unit or E2E JUnit4 tests running locally or on any cloud service.

Integrate unit or E2E NUnit tests running locally or on any cloud service.

Integrate unit or end-to-end xUnit tests running locally or on any cloud service.

Integrate unit or E2E MSTest tests running locally or on any cloud service.

Integrate unit or E2E Specflow tests running locally or on any cloud service.

Integrate unit or E2E Behave tests running locally or on any cloud service.

Integrate unit or E2E Pytest tests running locally or on any cloud service.

Integrate unit or E2E Robot tests running locally or on any cloud service.

Integrate unit or E2E Espresso tests running locally or on any cloud service.

Integrate by uploading any JUnit XML Report generated by any test framework.

Integrate by uploading Allure Reports generated by any test framework.

The native integration of Test Reporting & Analytics with Test Management makes test management processes more efficient and effective. This integration is achieved through the BrowserStack SDK and supports a wide range of testing frameworks and environments, including local devices, browsers, CI/CD pipelines, and cloud platforms.

Test Reporting & Analytics empowers Test Management as follows:

- Integration with Various Testing Frameworks

Test Reporting & Analytics supports automated test runs for numerous frameworks like Node.js WebdriverIO, Java TestNG, JavaScript Cypress, Jest, Mocha, Playwright, Nightwatch.js, Java Serenity, C# NUnit, Python Pytest, and JUnit XML Reports. This compatibility ensures Test Management can read and show Test Reporting & Analytics’s build run details and test cases that are run using any of these frameworks.

- Setup and Configuration

The process begins with configuring the project repository. This involves verifying and updating the pom.xml file with the latest BrowserStack SDK, installing the SDK, and configuring the browserstack.yml file with the necessary details, such as the BrowserStack username, access key, build name, and project name, and enabling Test Reporting & Analytics.

- Accessing Test Reporting & Analytics data

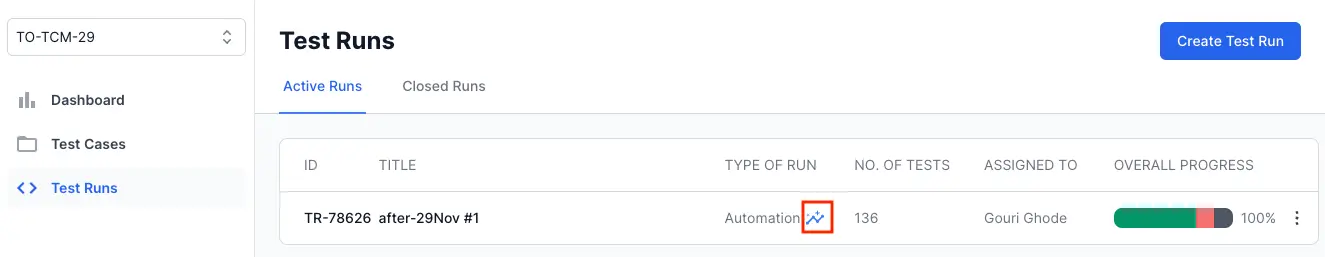

To access Test Reporting & Analytics data, log in to BrowserStack Test Management and select the project to which the test report was exported. Then navigate to the Test Runs section and click the graph icon in the Type of Run column to view detailed observability data.

- Integration with CI/CD for Report Generation

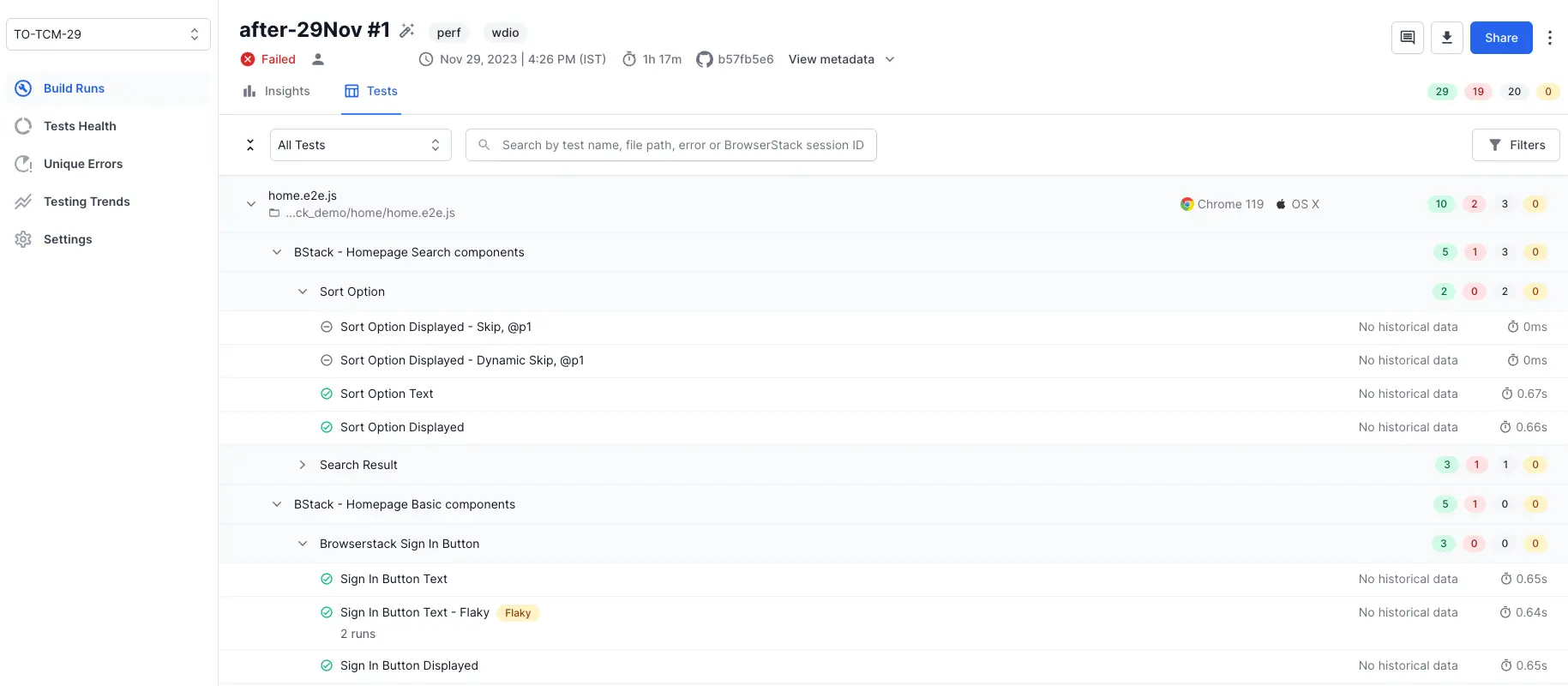

Before you can generate and export Test Reporting & Analytics reports, create a CI/CD pipeline using a tool such as Jenkins, CircleCI, or Travis CI. After setting up the pipeline and pushing the codebase to a version control system such as GitHub or Bitbucket, initiate a test run through the CI/CD tool. Once the build is complete, you can check the generated report in Test Management. For detailed debugging and analytics, navigate to the corresponding build run in Test Reporting & Analytics from the Test Runs page in Test Management.

Integrating Test Reporting & Analytics into Test Management provides a comprehensive view of the testing process, enabling teams to track, analyze, and optimize their testing efforts more effectively.
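To make the setup step more concrete, here is a minimal sketch that renders a browserstack.yml body containing the details listed under Setup and Configuration. The key names (`userName`, `accessKey`, `buildName`, `projectName`, `testObservability`) and the helper `render_browserstack_yml` are assumptions made for illustration, not a confirmed schema; verify them against the SDK documentation for your framework.

```python
import json
import os

# Assumed key names for the details the setup step lists.
REQUIRED_KEYS = ("userName", "accessKey", "buildName", "projectName")


def render_browserstack_yml(settings, enable_reporting=True):
    """Render a minimal browserstack.yml body from a settings dict."""
    missing = [key for key in REQUIRED_KEYS if not settings.get(key)]
    if missing:
        raise ValueError("missing settings: " + ", ".join(missing))
    # json.dumps produces double-quoted strings, which YAML also accepts.
    lines = [f"{key}: {json.dumps(settings[key])}" for key in REQUIRED_KEYS]
    # Assumed flag name for enabling Test Reporting & Analytics.
    lines.append(f"testObservability: {str(enable_reporting).lower()}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    settings = {
        # Read credentials from the environment rather than hard-coding them.
        "userName": os.environ.get("BROWSERSTACK_USERNAME", "YOUR_USERNAME"),
        "accessKey": os.environ.get("BROWSERSTACK_ACCESS_KEY", "YOUR_ACCESS_KEY"),
        "buildName": "nightly-regression",
        "projectName": "Demo project",
    }
    print(render_browserstack_yml(settings), end="")
```

Generating the file from environment variables is one way to keep the access key out of version control when the same configuration is used in a CI/CD pipeline.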

Test Reporting & Analytics to Test Management mapping

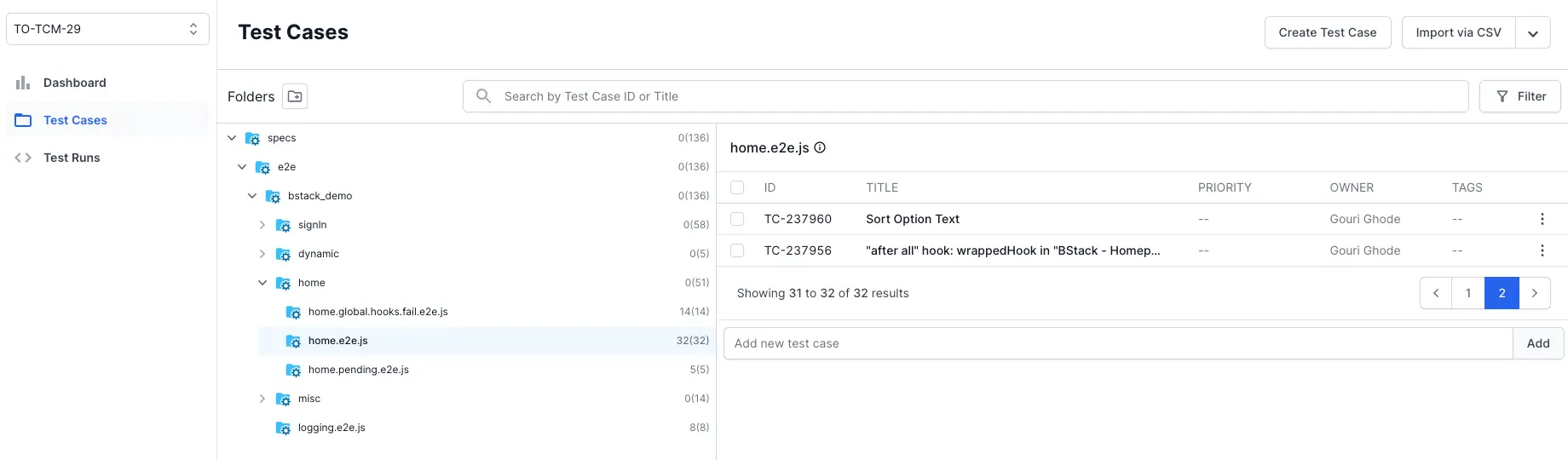

Because Test Reporting & Analytics integrates natively with Test Management, the folder structure and associated test cases in Test Reporting & Analytics are copied to Test Management. Each test case is copied along with its title, folder, execution status, execution time, configuration, and, in case of failure, its stack trace. The stack trace or error information is recorded in the response field of the corresponding test case in Test Management.

An example of mapping between Test Reporting & Analytics and Test Management is as follows.

Test Reporting & Analytics

Test Management
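The field mapping described in this section can be sketched as a small data structure. The example below uses a JUnit-XML report as a stand-in input and converts each test case into a record with a title, folder, status, execution time, and response. The `MappedTestCase` class, the use of `classname` as the folder, and the status labels are assumptions for illustration; the configuration field is omitted because JUnit-XML has no standard place for it.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass


@dataclass
class MappedTestCase:
    title: str
    folder: str
    status: str
    execution_time: float
    response: str  # stack trace or error text; empty unless the test failed


def map_junit_report(xml_text):
    """Convert JUnit-XML <testcase> elements into MappedTestCase records."""
    records = []
    for case in ET.fromstring(xml_text).iter("testcase"):
        # Compare against None explicitly: childless elements are falsy.
        problem = case.find("failure")
        if problem is None:
            problem = case.find("error")
        if problem is not None:
            status = "failed"
            response = (problem.text or "").strip() or problem.get("message", "")
        elif case.find("skipped") is not None:
            status, response = "skipped", ""
        else:
            status, response = "passed", ""
        records.append(MappedTestCase(
            title=case.get("name", ""),
            folder=case.get("classname", ""),  # assumption: classname stands in for the folder
            status=status,
            execution_time=float(case.get("time") or 0),
            response=response,
        ))
    return records


SAMPLE = """<testsuite name="checkout">
  <testcase classname="cart.checkout" name="applies coupon" time="0.42"/>
  <testcase classname="cart.checkout" name="rejects expired card" time="1.30">
    <failure message="AssertionError">Traceback: expected 402, got 200</failure>
  </testcase>
</testsuite>"""

if __name__ == "__main__":
    for record in map_junit_report(SAMPLE):
        print(record)
```

Keeping the failure text in a single `response` field mirrors how the stack trace is recorded against the test case, so a failed record carries everything needed to start debugging.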
