Most teams approach a Playwright agent as a quicker way to produce tests. Describe a flow, let the agent generate Playwright code, and move on. That assumption feels natural because automation has long been judged by how efficiently it turns intent into scripts.

That framing breaks down the moment the agent runs inside CI. At that point, it is no longer just generating code. It is deciding what to explore, what to rerun, and what to change across executions, while the generated scripts fade into the background.

In practice, a Playwright agent takes on responsibility during execution itself. Once introduced, the focus moves away from reviewing generated scripts and toward defining scope, authority, and feedback, because those boundaries determine how coverage and stability evolve over time.

Overview

What are Playwright Agents?

Playwright Agents, introduced in Playwright 1.56, are a set of AI-driven agents that plan, generate, and maintain end-to-end tests. Instead of treating automation as static code generation, the agents work against the running application and adapt tests based on its behavior.

How Does a Playwright Agent Work?

Playwright Agents work as a coordinated flow across planning, generation, and repair.

- Planner Agent: Converts high-level goals into a structured, human-readable test plan by exploring the application and outlining steps, expectations, and edge cases.

- Generator Agent: Transforms the plan into executable Playwright tests while validating Playwright selectors and Playwright assertions against a live browser.

- Healer Agent: Responds to test failures by re-evaluating the UI, updating affected selectors or steps, and re-running the test when the failure is caused by non-functional changes.

Benefits of Playwright Agents

- Save time on test creation: Automates planning and script generation, reducing manual coding effort.

- Maintain test stability: Self-healing helps handle minor UI changes without failing tests unnecessarily.

- Focus on strategy: Teams can prioritize test coverage, intent, and quality insights rather than repetitive scripting.

- Increase reliability: Tests are generated and validated against a live browser, making them more robust.

- Augment human expertise: Agents assist engineers but do not replace review, which remains necessary so that regressions are not masked.

In this article, I will explain how Playwright agents operate during test execution and what fundamentally changes when they are introduced into CI.

What is a Playwright Agent?

A Playwright Agent is a system that operates alongside Playwright tests and actively manages parts of the test lifecycle that are normally handled through static scripts or manual decisions.

Instead of only executing predefined steps, the agent observes application behavior, test outcomes, and execution context, then takes actions based on that information.

These actions can include deciding which flows to explore, adjusting selectors or steps when the UI changes, rerunning failed scenarios with modified inputs, or generating new test logic when gaps are detected.

Why are Playwright Agents Important?

Modern test pipelines deal with frequent UI changes, asynchronous behavior, and large test suites that cannot be fully maintained through static scripts alone. Playwright Agents address these constraints by introducing execution-time reasoning and cross-run awareness into how tests are run and evolved.

This is why they’re important:

- Reduced maintenance pressure: Agents detect selector breakage, DOM structure changes, and timing shifts during execution and adjust test logic or retry strategies accordingly, which limits the need for constant manual script updates after minor UI changes.

- Better handling of non-deterministic behavior: Real applications often fail due to race conditions, delayed network responses, or reactive UI updates. Agents analyze failure patterns across runs and distinguish between genuine regressions and environmental or timing-related noise.

- Continuous test coverage growth: Instead of relying only on prewritten scenarios, agents identify untested paths based on runtime signals such as navigation patterns, API responses, and UI state transitions, then generate or extend tests to cover those gaps.

- Smarter CI execution decisions: Agents decide what to rerun, skip, or prioritize based on recent changes, historical failures, and risk signals, which improves feedback speed without reducing confidence in test results.

- Closer alignment with real application behavior: By observing how the application behaves during execution rather than assuming ideal conditions, agents produce tests that reflect actual user flows, dynamic content updates, and integration dependencies more accurately.
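The idea of separating timing noise from genuine regressions across runs can be illustrated with a small heuristic. This is a minimal sketch under stated assumptions, not how Playwright Agents classify failures: the `classify` function, the five-run window, and the "two consecutive trailing failures" threshold are all hypothetical choices made for illustration.

```typescript
// Illustrative heuristic only; not part of Playwright or its agents.
type RunStatus = "passed" | "failed";
type Classification = "stable" | "likely-flaky" | "likely-regression";

// `history` is ordered oldest to newest; only the last `window` runs are considered.
function classify(history: RunStatus[], window = 5): Classification {
  const recent = history.slice(-window);
  const failures = recent.filter((status) => status === "failed").length;
  if (failures === 0) return "stable";

  // Count the unbroken run of failures at the end of the window.
  let trailingFailures = 0;
  for (let i = recent.length - 1; i >= 0 && recent[i] === "failed"; i--) {
    trailingFailures++;
  }

  // All failures are consecutive and most recent: behavior probably changed.
  if (trailingFailures === failures && trailingFailures >= 2) {
    return "likely-regression";
  }
  // Intermittent failures, or a single latest failure, are treated as noise for now.
  return "likely-flaky";
}

const intermittent = classify(["passed", "failed", "passed", "failed", "passed"]);
const sustained = classify(["passed", "passed", "failed", "failed", "failed"]);
console.log(intermittent, sustained);
```

A single most-recent failure is deliberately left as "likely-flaky" here because one data point cannot distinguish noise from a new defect; a real pipeline would likely rerun before deciding.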

Core Components of Playwright Agents

Introduced in Playwright v1.56, Playwright Agents break the end-to-end testing workflow into clearly defined AI-driven components. Each agent has a focused responsibility and produces concrete outputs that testers can review, control, and integrate into existing pipelines.

1. Planner Agent

The Planner is the first stage in Playwright’s AI-assisted testing pipeline. Its role is to understand what you want to test in human terms and to explore the application’s structure and behavior to translate that intent into a concrete, sequenced plan.

- Role: Take a testing objective expressed in natural language (for example, “Test the guest checkout flow”) and convert it into a structured test blueprint. To do this, it may run a seed test that initializes your application context, navigates pages, and collects relevant UI state while exploring flows programmatically.

- How It Works: The Planner uses MCP tools that drive a real browser through Playwright to interact with the app, collect element information, and break the user journey into discrete steps. It can also absorb contextual documents like product requirement specs or user stories if provided.

- Output: A human-readable Markdown test plan (typically saved under specs/), with clear sections for scenarios, steps, and expected outcomes. This plan is crafted to be both precise for automation generation and understandable to other testers and stakeholders.

- Tester Value: The Markdown plan helps testers review intent, see gaps before automation, document logic, and catch misunderstood or missing criteria early in the pipeline.
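To make the shape of that output concrete, the sketch below models a plan as scenarios with steps and expected outcomes and renders it as Markdown. The `TestPlan` and `Scenario` types, the `renderPlan` helper, and the sample checkout content are hypothetical; the Planner's actual output format may differ.

```typescript
// Hypothetical model of a Planner-style test plan; not a Playwright API.
interface Scenario {
  title: string;
  steps: string[];
  expected: string[];
}

interface TestPlan {
  goal: string;
  scenarios: Scenario[];
}

// Render the plan as Markdown with one numbered section per scenario.
function renderPlan(plan: TestPlan): string {
  const lines: string[] = [`# Test plan: ${plan.goal}`, ""];
  plan.scenarios.forEach((scenario, index) => {
    lines.push(`## ${index + 1}. ${scenario.title}`, "", "Steps:");
    scenario.steps.forEach((step, i) => lines.push(`${i + 1}. ${step}`));
    lines.push("", "Expected outcomes:");
    scenario.expected.forEach((outcome) => lines.push(`- ${outcome}`));
    lines.push("");
  });
  return lines.join("\n");
}

// Sample content invented for illustration.
const guestCheckoutPlan: TestPlan = {
  goal: "Guest checkout flow",
  scenarios: [
    {
      title: "Guest completes a purchase",
      steps: [
        "Add an item to the cart",
        "Choose guest checkout",
        "Enter shipping and payment details",
        "Place the order",
      ],
      expected: [
        "An order confirmation is shown",
        "The cart is empty afterwards",
      ],
    },
  ],
};

const markdown = renderPlan(guestCheckoutPlan);
console.log(markdown);
```

Keeping steps and expected outcomes in separate lists mirrors what reviewers need to check: whether the flow is right, and whether the validations are the ones that matter.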

2. Generator Agent

The Generator builds on the Planner’s output by producing executable test scripts that conform to Playwright’s test format.

- Role: Translate the structured Markdown plan into runnable Playwright Test files (.spec.ts / .spec.js). It doesn’t simply produce pseudo-code; it verifies selectors and assertions in the running application as it generates code to ensure reliability.

- How It Works: The Generator reads each scenario from the test plan and resolves locators, interaction steps, and expected outcomes against the live UI. It chooses appropriate selectors and constructs valid Playwright statements with proper setup/teardown and assertions.

- Output: A set of test files under your project’s tests/ directory that correspond to the plan’s sections. These tests are formatted and structured to be executed directly by npx playwright test.

- Tester Value: This agent accelerates test creation by removing manual script writing and ensures that selectors and assertions match what the app actually renders at generation time. You still review generated code and patterns for maintainability and best practices.
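For orientation, a generated test for the guest checkout example might look roughly like the sketch below. The URL, field labels, button names, and the referenced plan path are assumptions invented for illustration; actual Generator output depends on your application and plan.

```typescript
// Illustrative sketch of a generated spec; all application details are hypothetical.
// Hypothetical source plan: specs/guest-checkout.md
import { test, expect } from '@playwright/test';

test.describe('Guest checkout', () => {
  test('guest can place an order', async ({ page }) => {
    // 1. Open the cart page (hypothetical URL)
    await page.goto('https://shop.example.com/cart');

    // 2. Start checkout as a guest
    await page.getByRole('button', { name: 'Checkout as guest' }).click();

    // 3. Fill in contact details
    await page.getByLabel('Email').fill('guest@example.com');

    // 4. Place the order
    await page.getByRole('button', { name: 'Place order' }).click();

    // Expected outcome: the confirmation heading becomes visible
    await expect(
      page.getByRole('heading', { name: 'Order confirmed' })
    ).toBeVisible();
  });
});
```

Role- and label-based locators such as `getByRole` and `getByLabel` tend to survive markup changes better than CSS selectors, which is one of the things worth checking when you review generated code.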

3. Healer Agent

The Healer addresses the hardest part of large test suites: maintenance when UI changes, flakiness, or timing issues cause failures.

- Role: Automatically detect failing tests, analyze what went wrong, and suggest or apply fixes to selectors, waits, or other unstable parts of the test logic. The Healer can re-run tests to verify any adjustments.

- How It Works: When a test fails, the Healer replays the failure steps while inspecting the current DOM and application state, and attempts to locate equivalent elements or flows through contextual reasoning. It then proposes updates (for example, new locators or timing adjustments) and verifies whether the test passes with those changes.

- Output: A passing test or, if the functionality itself appears broken, a skipped test with documentation explaining why it could not be healed.

- Tester Value: The Healer reduces the manual burden of updating tests after UI changes. Testers still review healed changes and set guardrails so that the test's intent stays intact and real product regressions are not masked.
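One way to make that review manageable is to sort healed changes by how likely they are to alter test intent. The sketch below is a hypothetical triage helper, not a Playwright feature: the `ChangeKind` categories and the `triageHeal` function are assumptions about how a team might label healed diffs.

```typescript
// Hypothetical review guardrail; these types are not part of Playwright.
type ChangeKind =
  | "locator"
  | "wait"
  | "assertion"
  | "step-removed"
  | "test-skipped";

interface HealedChange {
  testTitle: string;
  kinds: ChangeKind[];
}

type ReviewPriority = "routine-review" | "priority-review";

// Changes that alter what the test verifies, not just how it finds elements.
const INTENT_AFFECTING: ReadonlySet<ChangeKind> = new Set<ChangeKind>([
  "assertion",
  "step-removed",
  "test-skipped",
]);

// Every heal is still reviewed; this only decides which ones to look at first.
function triageHeal(change: HealedChange): ReviewPriority {
  return change.kinds.some((kind) => INTENT_AFFECTING.has(kind))
    ? "priority-review"
    : "routine-review";
}

const locatorOnly = triageHeal({
  testTitle: "guest can place an order",
  kinds: ["locator"],
});
const assertionTouched = triageHeal({
  testTitle: "guest can place an order",
  kinds: ["locator", "assertion"],
});
console.log(locatorOnly, assertionTouched);
```

A heal that only swaps a locator usually preserves intent, while one that edits an assertion or skips a test changes what is being verified and deserves a closer look.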

Technical Architecture of Playwright Agents

Playwright Agents are built as a layered system where each layer has a distinct technical responsibility. The design separates execution, reasoning, and state management to make automation scalable, maintainable, and auditable.

- Playwright Execution Layer: Executes browser automation, DOM interactions, network requests, tracing, and reporting using standard Playwright APIs. All agent actions are routed through this layer to ensure consistency with regular Playwright tests.

- Model Context Protocol (MCP) Layer: Provides a structured interface for agents to interact with the Playwright execution layer. MCP defines allowed actions, shared context, and result formats, enforcing controlled communication between AI reasoning and browser automation.

- Planner Agent Layer: Analyzes testing goals and explores the application to identify user flows, page states, and UI elements. Produces structured Markdown test plans that capture sequences of actions and expected results.

- Generator Agent Layer: Converts Planner outputs into executable Playwright test scripts. It resolves locators, constructs interactions, and embeds assertions by verifying the live UI state, ensuring generated code matches the actual application.

- Healer Agent Layer: Monitors test execution, identifies failures caused by UI or timing changes, and attempts targeted fixes such as updating selectors or adding synchronization logic. Re-executes tests to confirm fixes or flags genuine application issues.

- Shared Context and Artifact Layer: Stores test plans, generated scripts, execution logs, traces, and healing updates. This persistence allows reviewers and testers to inspect decisions, maintain control, and trace test evolution over time.

How Playwright Agents Work Together

Playwright Agents function as a coordinated workflow where each agent performs a distinct role and hands off a concrete output to the next stage, keeping intent, execution, and maintenance clearly separated.

- Planning the test intent and scope: The workflow starts with the Planner Agent, which explores the application and translates a testing goal into a structured Markdown plan. This plan defines user flows, step sequences, validations, and assumptions, creating a stable reference for what the tests are meant to cover before any code exists.

- Generating executable automation: The Generator Agent consumes the Planner’s Markdown output and converts each scenario into runnable Playwright tests. It resolves selectors and assertions against the live UI during generation, ensuring the resulting code reflects actual application behavior rather than inferred structure.

- Executing and monitoring outcomes: The generated tests are executed using Playwright’s standard runner, producing pass, fail, and diagnostic signals such as DOM state, timing behavior, and interaction failures that feed into the next stage.

- Healing failures and validating fixes: When tests fail, the Healer Agent analyzes the failure context, replays affected steps, and attempts targeted repairs such as updating selectors or synchronization logic. Any change is validated by rerunning the test to confirm stability.

- Feeding results back into the loop: Outcomes from execution and healing inform future planning and generation decisions, allowing test logic to evolve based on real application changes while keeping test intent explicit and reviewable.
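The handoffs above can be sketched as a bounded loop. This is a simplified illustration under stated assumptions: the `Agents` interface, the `runWorkflow` function, and the stub implementations are hypothetical stand-ins rather than Playwright APIs, and the calls are kept synchronous for brevity even though real agent and browser calls would be asynchronous.

```typescript
// Hypothetical orchestration sketch; the agent interfaces are stand-ins.
interface RunResult {
  passed: boolean;
  failure?: string;
}

interface Agents {
  plan(goal: string): string; // returns a Markdown plan
  generate(plan: string): string; // returns test source
  run(testSource: string): RunResult;
  heal(testSource: string, failure: string): string | null; // null = cannot heal
}

interface Outcome {
  status: "passed" | "needs-investigation";
  testSource: string;
  healAttempts: number;
  failure?: string;
}

function runWorkflow(goal: string, agents: Agents, maxHealAttempts = 2): Outcome {
  const plan = agents.plan(goal);
  let testSource = agents.generate(plan);
  let healAttempts = 0;

  while (true) {
    const result = agents.run(testSource);
    if (result.passed) return { status: "passed", testSource, healAttempts };

    const failure = result.failure ?? "unknown failure";
    // Stop healing once the budget is spent so real defects stay visible.
    if (healAttempts >= maxHealAttempts) {
      return { status: "needs-investigation", testSource, healAttempts, failure };
    }
    const healed = agents.heal(testSource, failure);
    healAttempts++;
    if (healed === null) {
      return { status: "needs-investigation", testSource, healAttempts, failure };
    }
    testSource = healed; // rerun with the proposed fix on the next iteration
  }
}

// Stub agents: the first run fails on a stale selector, and one heal fixes it.
const stubAgents: Agents = {
  plan: (goal) => `# Plan: ${goal}`,
  generate: () => "click('#old-button')",
  run: (src) =>
    src.includes("#old-button")
      ? { passed: false, failure: "locator '#old-button' not found" }
      : { passed: true },
  heal: (src) => src.replace("#old-button", "role=button[name='Pay']"),
};

const outcome = runWorkflow("Test the guest checkout flow", stubAgents);
console.log(outcome.status, outcome.healAttempts);
```

Capping heal attempts is the important design choice here: it bounds CI cost and ensures that a failure the Healer cannot resolve is surfaced for investigation instead of being retried indefinitely.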

How to Set Up Playwright Agents

Setting up Playwright Agents involves enabling an agent-driven workflow on top of an existing Playwright test setup so that planning, generation, and healing can run as coordinated steps. The setup keeps Playwright as the execution engine while agents operate as control and reasoning layers.

Follow these steps to configure Playwright Agents in a way that testers can run, review, and control each stage.

Step 1: Set up a standard Playwright project

Install Playwright and initialize a project with the required browsers and test runner configuration. A working Playwright setup is mandatory because agents rely on Playwright APIs to explore the app, generate tests, and rerun failures.
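As a starting point, a minimal configuration might look like the sketch below. The `baseURL` value and the retry count are assumptions to adjust for your project; the options themselves are standard Playwright Test settings.

```typescript
// playwright.config.ts: a minimal sketch, not a recommended baseline for every project.
import { defineConfig } from '@playwright/test';

export default defineConfig({
  // Directory where hand-written and generated tests live.
  testDir: './tests',
  // Retry only on CI so local failures surface immediately.
  retries: process.env.CI ? 2 : 0,
  reporter: 'html',
  use: {
    // Hypothetical local URL; replace with your application's address.
    baseURL: 'http://localhost:3000',
    // Record a trace on the first retry to help diagnose failures.
    trace: 'on-first-retry',
  },
});
```

Traces are worth enabling early, since they give both reviewers and the Healer's human supervisors a record of what the page looked like when a test failed.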

Step 2: Enable Model Context Protocol (MCP) support

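Configure MCP so the agent system can safely interact with Playwright tools. MCP acts as the contract between the language model and Playwright, defining what actions can be executed, what context is shared, and how results are returned in a controlled manner. In current Playwright releases, the agent definitions are added to a project with npx playwright init-agents --loop=<client>, where the client is the AI tool you use (for example, vscode or claude). Regenerate the definitions whenever you upgrade Playwright.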

Step 3: Configure the Planner Agent inputs

Define the testing goal, entry URLs, authentication requirements, and any supporting documents such as user flows or requirements. These inputs guide the Planner in exploring the application and producing meaningful, scoped test plans.
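Entry URLs and authentication are commonly captured in a seed test that the Planner can run before exploring. The sketch below assumes a hypothetical login page, field labels, and environment variable names; none of them come from a real application.

```typescript
// tests/seed.spec.ts: illustrative seed test; all application details are hypothetical.
import { test, expect } from '@playwright/test';

test('seed', async ({ page }) => {
  // Hypothetical entry URL for the application under test.
  await page.goto('https://shop.example.com/login');

  // Credentials come from environment variables (names are assumptions).
  await page.getByLabel('Email').fill(process.env.TEST_USER_EMAIL ?? 'tester@example.com');
  await page.getByLabel('Password').fill(process.env.TEST_USER_PASSWORD ?? '');
  await page.getByRole('button', { name: 'Sign in' }).click();

  // Confirm the app reached a known state before exploration starts.
  await expect(page.getByRole('heading', { name: 'Dashboard' })).toBeVisible();
});
```

Ending the seed with an assertion on a known landing state gives the Planner a reliable starting point and makes setup failures obvious before any exploration happens.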

Step 4: Run the Planner to generate test plans

Execute the Planner Agent to explore the application and produce Markdown test plans. Review these plans to validate coverage, assumptions, and scenario sequencing before moving to automation.

Step 5: Run the Generator to create Playwright tests

Use the Generator Agent to convert approved plans into executable Playwright test files. The generated tests should be reviewed like any hand-written automation for structure, assertions, and maintainability.

Step 6: Execute tests and enable the Healer

Run the generated tests with the Playwright test runner, then hand any failures to the Healer Agent. The Healer analyzes each failure and attempts targeted repairs, rerunning the test to confirm stability.

Step 7: Review healed changes and lock intent

Inspect any healed updates to ensure they preserve the original test intent and do not mask real defects. Accepted fixes become part of the test suite, while unresolved failures remain visible for investigation.

Limitations and Considerations of Playwright Agents

While Playwright Agents automate planning, generation, and healing, there are important technical and practical considerations testers must be aware of. Understanding these limitations helps teams set realistic expectations and maintain control over test quality.

- Dependency on application stability: Agents rely on consistent UI structures and predictable flows. Frequent or unpredictable DOM changes, dynamic content, or feature flags can lead to incomplete plans, incorrect selectors, or excessive healing attempts.

- Learning curve for MCP and configuration: Proper setup of the Model Context Protocol (MCP) and agent inputs requires understanding the contract between agents and Playwright. Misconfiguration can cause incomplete exploration or failed test generation.

- Review overhead: Although agents produce plans, code, and healed tests automatically, human review is essential to ensure test intent is preserved. Blindly accepting agent outputs can mask functional regressions or misaligned coverage.

- Not a full replacement for manual testing: Agents excel at repetitive flows and stabilization, but they cannot replace exploratory, edge-case, or context-driven testing that requires human judgment.

- Resource and execution considerations: Running Planner exploration, Generator validation, and Healer iterations can increase CI/CD runtime and compute usage, especially for large or complex applications.

- Limited handling of complex business logic: Agents may struggle with flows that require conditional reasoning, multi-user interactions, or integrations outside the browser environment unless explicitly modeled in test inputs.

Why Do Automated Tests Often Fail in Real-World Environments?

Automated tests often pass locally, but they can fail in real-world conditions because devices, browsers, and operating systems differ. In addition, variations in screen sizes or browser engines can break selectors and assertions.

Slow networks can also cause timing issues, and dynamic content, reactive frameworks, or hardware-dependent features make tests flaky and unreliable in CI pipelines.
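Some of these differences can be surfaced earlier by running the same tests across several browser engines and an emulated mobile viewport. The sketch below uses Playwright's built-in device descriptors; the project names are arbitrary, and the choice of devices is an assumption about what your users run.

```typescript
// playwright.config.ts: sketch of a multi-browser project matrix.
import { defineConfig, devices } from '@playwright/test';

export default defineConfig({
  projects: [
    // Desktop engines: Chromium, Firefox, and WebKit.
    { name: 'chromium', use: { ...devices['Desktop Chrome'] } },
    { name: 'firefox', use: { ...devices['Desktop Firefox'] } },
    { name: 'webkit', use: { ...devices['Desktop Safari'] } },
    // Emulated mobile viewport and user agent, not a physical device.
    { name: 'mobile-chrome', use: { ...devices['Pixel 5'] } },
  ],
});
```

Emulated projects catch engine and viewport differences, but they still run on the CI machine's own hardware and network, so device-specific behavior is not reproduced.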

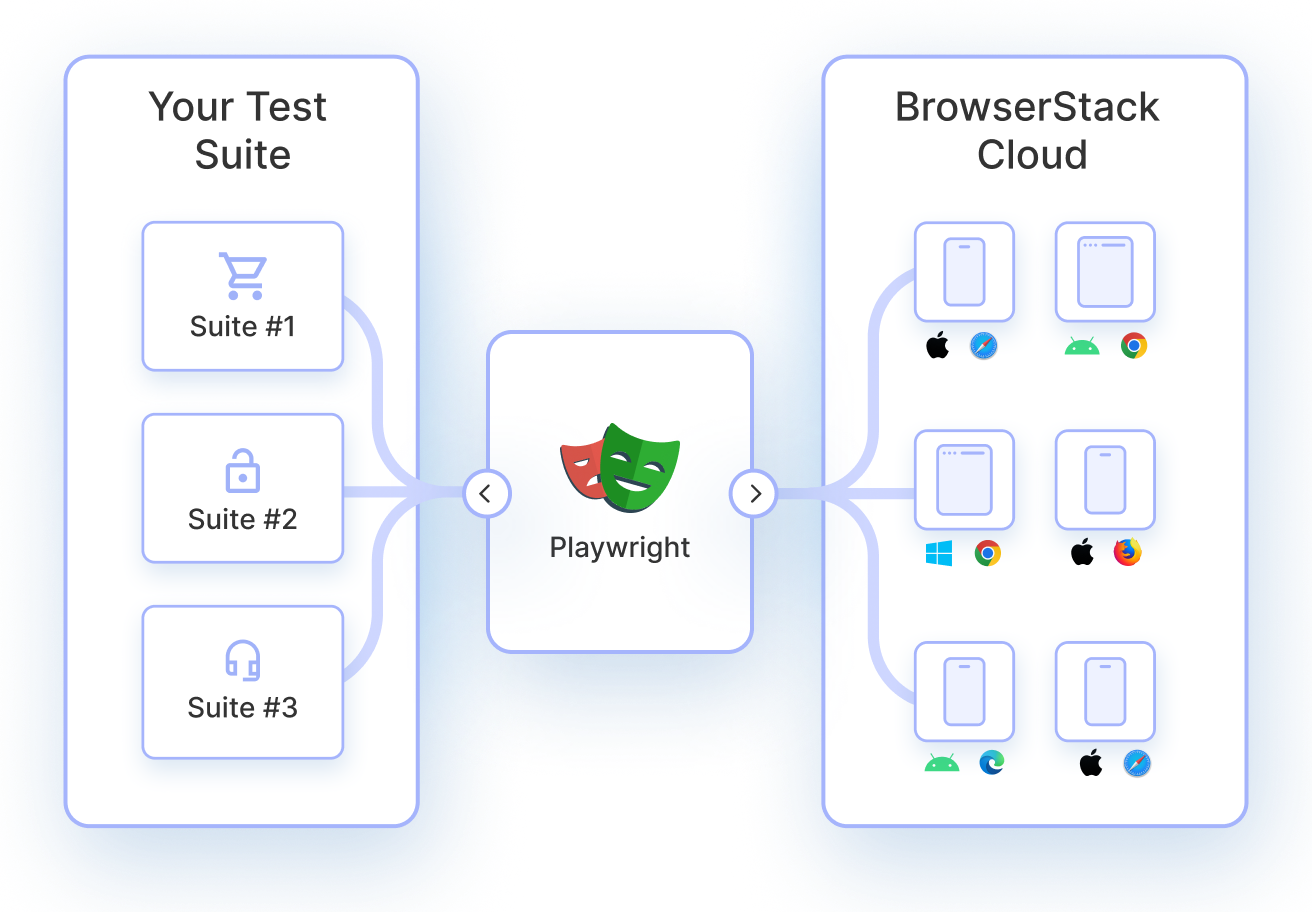

Platforms like BrowserStack solve this by providing a cloud-based infrastructure that lets teams execute automated tests on real devices and browsers at scale. This ensures that tests generated by Playwright Agents are validated in conditions that closely mimic end users’ environments, reducing flakiness and improving confidence in automation results.

By integrating real-world testing into the automation workflow, teams can detect issues earlier, optimize coverage across browsers and devices, and maintain stable end-to-end pipelines.

Here are the features that help Playwright Agent-generated tests run reliably in real-world environments:

- Real Device Cloud: Provides access to a wide range of real devices, ensuring tests run on the exact hardware and OS configurations that users have.

- Parallel Testing: Enables multiple tests to run simultaneously across devices and browsers, reducing overall execution time and accelerating feedback in CI/CD pipelines.

- Local Environment Testing: Allows tests to run against applications hosted locally or behind firewalls, ensuring test coverage in development and staging environments before production deployment.

- Test Reporting & Analytics: Offers detailed logs, video recordings, and performance metrics to help identify why a test failed and verify fixes applied by Playwright Agents’ Healer.

- SIM Enabled Devices: Supports mobile network conditions and SIM-based features, enabling validation of workflows that depend on real-world connectivity or carrier configurations.

Conclusion

Playwright Agents generate, execute, and heal end-to-end tests while handling dynamic content, reactive frameworks, and repetitive workflows. Despite this, real-world differences in devices, browsers, and network conditions can still cause tests to fail.

Run Playwright Agent-generated tests on real devices and browsers through BrowserStack to validate them under actual user conditions. This approach reduces flakiness, provides detailed logs and recordings for troubleshooting, and covers device- or network-specific scenarios, resulting in more stable and reliable CI pipelines.