Generative AI is redefining software testing by moving beyond automation to intelligent creation. From generating test cases and data to adapting test scripts and environments, it empowers QA teams to achieve faster, smarter, and more resilient testing workflows.

Overview

Generative AI in software testing refers to the use of AI models that can create new test cases, scripts, data, and environments automatically.

Key Applications of GenAI in Software Testing:

- Test Case Generation: Automatically generates relevant and diverse test cases based on the application’s behavior and code, reducing manual test creation.

- Test Script Maintenance: Uses AI to automatically update test scripts when application changes occur, minimizing maintenance effort.

- Bug Detection and Prediction: Identifies potential defects or bugs in the early stages of development using predictive AI models.

- Regression Testing Optimization: Focuses testing on areas most likely to be impacted by changes, ensuring faster and more efficient regression testing.

- Automated Test Execution: Runs tests across different environments and configurations, ensuring broad coverage with minimal human intervention.
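The regression testing optimization described above can be illustrated with a small sketch. It assumes a coverage map (which source files each test exercises) is already available; the test names, file names, and the `select_tests` helper are hypothetical, and a real tool would likely use richer signals than plain file overlap.

```python
from typing import Dict, List, Set


def select_tests(changed_files: Set[str], coverage_map: Dict[str, Set[str]]) -> List[str]:
    """Return tests whose covered files overlap the changed files, most overlap first."""
    scored = []
    for test_name, covered_files in coverage_map.items():
        overlap = len(changed_files & covered_files)
        if overlap > 0:
            scored.append((overlap, test_name))
    # Sort by overlap (descending), then by name so the order is stable.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [test_name for _, test_name in scored]


# Hypothetical coverage data: which source files each test exercises.
COVERAGE_MAP = {
    "test_login": {"auth.py", "session.py"},
    "test_checkout": {"cart.py", "payment.py"},
    "test_profile": {"auth.py", "profile.py"},
}

if __name__ == "__main__":
    # A commit touching auth.py and profile.py skips the checkout test.
    print(select_tests({"auth.py", "profile.py"}, COVERAGE_MAP))
    # prints ['test_profile', 'test_login']
```

Tests with no overlap are left out entirely, which is where the time saving comes from; a cautious team might still run the full suite on a schedule.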

Benefits for QA Teams:

- Faster Test Creation: Automatically generates test cases and scripts from user stories or code, cutting design time.

- Comprehensive Coverage: Produces diverse scenarios and edge cases that manual methods often miss.

- Synthetic Data Generation: Creates realistic test data, enabling broader and more reliable test validation.

- Reduced Maintenance: Self-healing capabilities keep test suites stable as applications change.

- Early Defect Detection: Identifies high-risk areas proactively, minimizing costly post-release bugs.

- Improved Efficiency: Frees testers from repetitive tasks, allowing focus on exploratory and strategic testing.

This article explores how generative AI is transforming software testing by automating test creation, enhancing coverage, and empowering QA teams with smarter, faster workflows.

What is Generative AI in Software Testing?

Generative AI in software testing refers to the use of AI models that can create new test assets automatically, from test cases and scripts to synthetic data and even environment configurations.

Unlike traditional automation, which executes pre-written instructions, generative AI learns from requirements, codebases, and historical defects to generate meaningful, context-aware test scenarios.

By simulating real-world usage patterns and adapting to application changes, generative AI goes beyond repetitive automation. It acts as an intelligent assistant that helps QA teams achieve broader coverage, reduce effort in test design, and detect issues earlier in the development lifecycle.

Types of Generative AI Testing Tools

Generative AI has inspired a new class of testing tools, each designed to tackle different aspects of the QA lifecycle. Broadly, these tools can be grouped into the following categories:

- Test Case Generation Tools: Automatically create functional, regression, or exploratory test cases from user stories, requirements, or source code.

- Test Script Automation Tools: Generate executable scripts (e.g., Selenium, Playwright) that adapt to changes in applications, reducing scripting and maintenance effort.

- Synthetic Data Generation Tools: Produce realistic and varied datasets for testing, covering sensitive or rare scenarios without exposing production data.

- Self-Healing Test Tools: Continuously monitor application changes and automatically fix broken locators or workflows, ensuring stable test execution.

- Visual Testing Tools: Use AI to detect UI regressions and highlight meaningful changes while filtering out noise. For example, the BrowserStack Visual Review Agent flags only meaningful visual changes, such as content shifts, layout modifications, or broken elements, which makes visual reviews faster, clearer, and more reliable.

- Predictive Analytics & Optimization Tools: Analyze historical test runs and code changes to prioritize high-risk areas, optimize execution, and reduce redundant tests. For example, the BrowserStack Test Selection Agent chooses the most relevant test cases to run based on recent code changes, giving faster feedback and more efficient regression cycles.

By combining these types of tools, teams can automate much of test design, execution, and validation, making QA more adaptive and less labor-intensive.
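To make the self-healing category more concrete, here is a minimal sketch of the underlying idea: when the primary locator stops matching, fall back to alternatives recorded earlier. This is a simple rule-based fallback, not an AI model and not how any particular product works; the page is simulated as a list of dictionaries, and `find_with_healing` and the sample locators are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Element = Dict[str, str]
Locator = Tuple[str, str]  # (attribute name, expected value)


def find_element(dom: List[Element], locator: Locator) -> Optional[Element]:
    """Return the first element whose attribute equals the expected value."""
    attribute, expected = locator
    for element in dom:
        if element.get(attribute) == expected:
            return element
    return None


def find_with_healing(
    dom: List[Element], locators: List[Locator]
) -> Tuple[Optional[Element], Optional[Locator]]:
    """Try locators in priority order; return the element and the locator that matched."""
    for locator in locators:
        element = find_element(dom, locator)
        if element is not None:
            return element, locator
    return None, None


# Hypothetical page after a release renamed the button's id.
DOM_AFTER_CHANGE = [
    {"id": "btn-submit-v2", "text": "Submit", "role": "button"},
    {"id": "lnk-help", "text": "Help", "role": "link"},
]

# Primary locator first, then a fallback captured when the test was authored.
SUBMIT_LOCATORS = [("id", "btn-submit"), ("text", "Submit")]

if __name__ == "__main__":
    element, used = find_with_healing(DOM_AFTER_CHANGE, SUBMIT_LOCATORS)
    print(element, used)
```

Returning the locator that matched lets the test report that it "healed", so a reviewer can decide whether to promote the fallback to the primary locator.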

Core Capabilities of Generative AI in Testing

Generative AI brings intelligence and adaptability into the QA process, equipping teams with capabilities that go beyond traditional automation:

- Automated Test Design: Transforms requirements, code, or user stories into executable test cases and scripts, accelerating test creation and reducing manual effort.

- Self-Healing Automation: Detects changes in UI elements, APIs, or workflows and updates tests automatically, minimizing flaky failures and maintenance overhead.

- Synthetic Data Creation: Produces realistic, diverse, and compliant datasets, including edge cases, that enhance test coverage without relying only on production data.

- Defect Prediction & Prioritization: Analyzes historical defects, code commits, and execution logs to identify high-risk areas, ensuring critical issues are tested first.

- Dynamic Test Environment Setup: Configures test environments on demand, aligning infrastructure with the application’s evolving needs.

- Visual & UX Validation: Generates scenarios to assess layout changes, detect regressions, and maintain consistency across browsers and devices.

Together, these capabilities enable QA teams to move from repetitive scripting to intelligent, adaptive, and proactive testing workflows.
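As an illustration of synthetic data creation, the sketch below produces user records that always include a couple of boundary cases alongside ordinary rows. It uses Python's seeded random generator as a stand-in for a generative model; the field names, value ranges, and `generate_users` function are assumptions made for the example.

```python
import random
from typing import Dict, List


def generate_users(count: int, seed: int = 0) -> List[Dict[str, object]]:
    """Generate fake user records; the first rows are fixed edge cases."""
    rng = random.Random(seed)  # seeded so test runs are reproducible
    edge_cases = [
        # Empty name and minimum age.
        {"name": "", "age": 0, "email": "a@b.co"},
        # Very long name and maximum age.
        {"name": "X" * 255, "age": 120, "email": "long@example.com"},
    ]
    first_names = ["Ana", "Bo", "Chidi", "Dee"]
    records = list(edge_cases[:count])
    while len(records) < count:
        name = rng.choice(first_names)
        records.append(
            {
                "name": name,
                "age": rng.randint(18, 90),
                "email": f"{name.lower()}{rng.randint(1, 999)}@example.com",
            }
        )
    return records


if __name__ == "__main__":
    for user in generate_users(4, seed=42):
        print(user["age"], user["email"])
```

Because no record is copied from production, the data can be shared freely across environments, and the fixed seed means a failing test can be reproduced with the same inputs.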

Types of Generative AI Models in Software Testing

Different generative AI models power applications in software testing, each with unique strengths. The most relevant include:

- Large Language Models (LLMs): Trained on vast text datasets, LLMs like GPT excel at generating test cases, scripts, and documentation from natural language requirements. They are widely used for test automation and code generation.

- Generative Adversarial Networks (GANs): GANs create highly realistic synthetic data by training two neural networks against each other: a generator that produces samples and a discriminator that tries to tell them apart from real data. In testing, they are valuable for producing diverse test datasets, including edge cases and rare user scenarios.

- Diffusion Models: These models iteratively refine random noise into structured outputs, most commonly images. In testing, they could be used to generate UI variations or rendered screens for visual and usability testing.

- Transformers: The architecture behind LLMs, transformers are also used more broadly for sequence modeling, such as generating structured API test flows or handling dependencies in complex workflows.

By leveraging these models, generative AI supports tasks ranging from data creation and script generation to environment simulation and visual validation, making QA more robust and adaptive.
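For the LLM case, much of the practical work is shaping the request and validating what comes back. The sketch below builds a prompt from a user story and filters a model's JSON reply down to well-formed test cases. It deliberately makes no call to any model API; the prompt wording, the `title`/`steps`/`expected` keys, and both function names are assumptions for illustration.

```python
import json
from typing import Dict, List


def build_prompt(user_story: str, max_cases: int = 3) -> str:
    """Compose a prompt asking a model for test cases as a JSON array."""
    return (
        "You are a QA engineer. Write up to "
        f"{max_cases} test cases for the user story below.\n"
        'Respond with a JSON array of objects with keys "title", "steps", "expected".\n\n'
        f"User story: {user_story}"
    )


def parse_test_cases(model_output: str) -> List[Dict[str, object]]:
    """Parse model output, keeping only objects that have all required keys."""
    try:
        data = json.loads(model_output)
    except json.JSONDecodeError:
        return []  # the model did not return valid JSON
    if not isinstance(data, list):
        return []
    required = {"title", "steps", "expected"}
    return [item for item in data if isinstance(item, dict) and required <= item.keys()]


if __name__ == "__main__":
    print(build_prompt("As a user, I can reset my password."))
    # Hypothetical model reply: the second object is incomplete and gets dropped.
    reply = (
        '[{"title": "Reset with valid email", "steps": ["Open reset page"], '
        '"expected": "Email sent"}, {"title": "missing fields"}]'
    )
    print(parse_test_cases(reply))
```

Treating the model's reply as untrusted input, and discarding anything malformed, keeps incomplete or hallucinated cases out of the test suite until a person has reviewed them.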

Key Benefits for QA Teams

Generative AI introduces practical advantages that directly impact the speed, coverage, and reliability of QA processes:

- Faster Test Creation: Automatically generates test cases and scripts from requirements or code, cutting the time spent on manual design.

- Comprehensive Coverage: Produces diverse test scenarios, including edge cases and negative paths, ensuring broader application validation.

- Smarter Test Data: Creates synthetic yet realistic datasets that mimic production conditions without risking sensitive information.

- Reduced Maintenance Effort: Self-healing capabilities adapt scripts to UI or workflow changes, keeping test suites stable with minimal manual updates.

- Early Defect Detection: Identifies high-risk areas by analyzing code changes, past bugs, and execution patterns, allowing issues to be caught sooner.

- Optimized QA Efficiency: Frees testers from repetitive tasks so they can focus on exploratory testing, usability validation, and strategic quality initiatives.
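The early defect detection benefit rests on ranking parts of the application by risk. A rough sketch of that ranking, using a hand-weighted score in place of a trained model, might look like this; the module names, statistics, and weights are all illustrative assumptions.

```python
from typing import Dict, List, Tuple


def risk_score(recent_commits: int, past_defects: int, failure_rate: float) -> float:
    """Combine three signals into one score; the weights are illustrative only."""
    return 0.3 * recent_commits + 0.5 * past_defects + 10.0 * failure_rate


def rank_modules(stats: Dict[str, Tuple[int, int, float]]) -> List[str]:
    """Return module names ordered from highest to lowest risk score."""
    return sorted(stats, key=lambda name: risk_score(*stats[name]), reverse=True)


# Hypothetical per-module data: (recent commits, past defects, test failure rate).
MODULE_STATS = {
    "payment": (12, 8, 0.20),
    "search": (3, 1, 0.02),
    "auth": (7, 4, 0.10),
}

if __name__ == "__main__":
    print(rank_modules(MODULE_STATS))
    # prints ['payment', 'auth', 'search']
```

A predictive model would learn these weights from historical data rather than fixing them by hand, but the output is used the same way: test the highest-ranked modules first.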

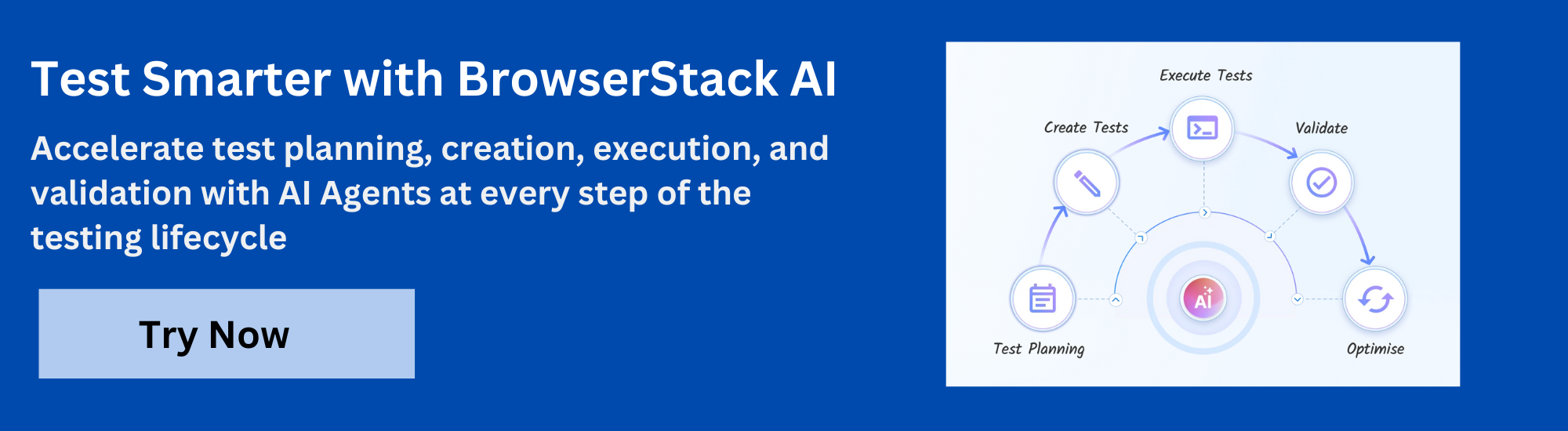

Enhance Software Testing with BrowserStack Generative AI

BrowserStack brings Generative AI directly into the testing lifecycle, helping teams accelerate test creation, reduce maintenance, and optimize execution. Instead of relying on static scripts or manual effort, BrowserStack AI agents generate, adapt, and refine tests in real time.

Key Capabilities of BrowserStack Generative AI:

- AI-Driven Automated Tests Without Coding: Create robust tests quickly using an intuitive recorder that captures browser actions and converts them into automated flows.

- No Learning Curve, Fast Test Creation: Build your first test in just 2-3 minutes, accelerating onboarding and boosting team productivity.

- Intuitive Test Recorder: Record user interactions directly in the browser, with support for complex validations covering both functionality and visual states.

- AI-Driven Self-Healing Tests: Automatically adapt tests when UI elements or workflows change, reducing maintenance and increasing reliability.

- Data-Driven Testing & Modular Design: Use reusable modules and AI-powered data-driven scenarios to expand coverage efficiently.

By combining Generative AI capabilities with enterprise-grade infrastructure, BrowserStack enables QA teams to transform testing into a faster, smarter, and more resilient process. And for complete coverage across functional, regression, accessibility, and visual testing, teams can further extend their strategy with BrowserStack AI Agents:

- Test Case Generator Agent: Creates automated test cases from requirements or user flows in minutes, accelerating coverage.

- Self-Healing Agent: Adapts tests to UI or workflow changes automatically, reducing flaky failures and maintenance.

- Test Selection Agent: Runs only the most relevant tests based on code changes, ensuring faster, optimized feedback.

- Test Deduplication Agent: Identifies and removes redundant test cases, keeping test suites lean and efficient.

- A11y Issue Detection Agent: Finds accessibility issues early, helping teams meet WCAG standards and deliver inclusive apps.

- Visual Review Agent: Highlights meaningful UI changes while filtering out noise, making visual reviews faster and clearer.

Conclusion

Generative AI is reshaping software testing by moving beyond static automation to intelligent creation. From generating test cases and synthetic data to enabling self-healing scripts and smarter test optimization, it empowers QA teams to work faster and with greater accuracy.

Yet, the true value of generative AI lies in pairing its creativity with reliable execution at scale. With BrowserStack’s Generative AI capabilities and AI Agents, teams can streamline test creation, minimize maintenance, and ensure robust coverage across functional, regression, accessibility, and visual testing.