As software systems grow more complex, traditional automation struggles to keep pace. Agentic AI offers a new model: self-directed agents that handle testing tasks end-to-end, with the aim of faster and more reliable releases.

Overview

AI agents are autonomous programs that can plan, execute, and adapt test cases based on goals, code changes, or system behavior, without needing step-by-step instructions.

How It Works:

- Test Execution Automation: AI agents autonomously execute test cases, interacting with the application just as a human tester would.

- Data Analysis: Analyzes test results to identify patterns, trends, and potential issues, and suggests improvements or next steps.

- Self-Learning: Learns from previous tests and adapts test strategies based on outcomes, continuously improving its testing approach.

- Decision-Making: Makes real-time decisions about which tests to run, when to pause, or which areas to focus on based on previous analysis.

- Error Detection & Reporting: Identifies and reports bugs, defects, or inconsistencies, providing actionable insights to developers.
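The steps above can be pictured as one small loop. The sketch below is only an illustration under simple assumptions, not any vendor's implementation: `run_agent`, the `history` dictionary, and the toy tests are hypothetical names, and "learning" is reduced to counting past failures so that failure-prone tests run first.

```python
from typing import Callable, Dict, List


def run_agent(tests: Dict[str, Callable[[], bool]],
              history: Dict[str, int],
              budget: int) -> List[str]:
    """Run up to `budget` tests, most failure-prone first; return failed names."""
    # Decision-making: rank tests by how often they failed in earlier runs.
    ranked = sorted(tests, key=lambda name: history.get(name, 0), reverse=True)
    failures = []
    for name in ranked[:budget]:
        # Execution: call the test; an exception counts as a failure.
        try:
            passed = tests[name]()
        except Exception:
            passed = False
        if not passed:
            # Self-learning: record the failure so the next run prioritizes it.
            history[name] = history.get(name, 0) + 1
            # Reporting: collect the failing test for the caller.
            failures.append(name)
    return failures


# Hypothetical toy suite: "checkout" always fails, "search" failed twice before.
tests = {"login": lambda: True, "checkout": lambda: False, "search": lambda: True}
history = {"search": 2}
print(run_agent(tests, history, budget=3))  # ['checkout']
```

A real agent would replace the failure counter with richer signals (code changes, logs, model output), but the plan, execute, record, re-plan shape stays the same.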

Key Use Cases in Testing:

- Regression Testing: Automates regression testing to ensure that new code changes don’t break existing functionality, learning and adapting over time.

- Performance Testing: Monitors application performance under various conditions, analyzing system behavior and predicting performance bottlenecks.

- User Interface Testing: Identifies visual defects and UI inconsistencies, automating visual checks and adapting to design changes.

- API Testing: Automatically generates test cases for APIs, executes them, and analyzes responses to ensure API functionality.

- Security Testing: Detects vulnerabilities in applications by simulating various attack scenarios and identifying potential security risks.
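To make the API testing case concrete, here is a rule-based stand-in for what an agent might generate. It assumes a hypothetical parameter spec that maps each integer parameter to an inclusive `(min, max)` range; `boundary_cases` is an illustrative name, and a real agent would derive such cases from an API description or observed traffic rather than a hand-written dictionary.

```python
from typing import Dict, List, Tuple

Case = Tuple[Dict[str, int], bool]  # (request parameters, expected to be valid?)


def boundary_cases(params: Dict[str, Tuple[int, int]]) -> List[Case]:
    """Build boundary-value requests, varying one parameter at a time."""
    # Baseline request: the midpoint of every range, expected to be accepted.
    base = {name: (lo + hi) // 2 for name, (lo, hi) in params.items()}
    cases: List[Case] = [(dict(base), True)]
    for name, (lo, hi) in params.items():
        # Edges of the range should pass; one step outside should be rejected.
        for value, valid in ((lo, True), (hi, True), (lo - 1, False), (hi + 1, False)):
            request = dict(base)
            request[name] = value
            cases.append((request, valid))
    return cases


# Hypothetical endpoint accepting a quantity between 1 and 10.
for request, expected_valid in boundary_cases({"quantity": (1, 10)}):
    print(request, expected_valid)
```

Each pair can then be sent to the API under test, with the response status compared against `expected_valid`.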

This article explores how Agentic AI is reshaping software testing by introducing autonomous AI agents that accelerate test creation, adapt to changes, and optimize QA workflows.

Understanding Agentic AI in Software Testing

Agentic AI in software testing refers to artificial intelligence systems that operate with autonomy, reasoning, and adaptability to carry out testing tasks with minimal human oversight. Unlike traditional automation that depends on predefined scripts, agentic AI behaves like an intelligent tester, capable of making context-driven decisions, exploring applications, and refining its approach based on feedback.

Key Characteristics of Agentic AI in Testing

- Autonomy: Designs, executes, and optimizes test cases independently, reducing reliance on fixed scripts.

- Goal-Oriented Behavior: Focuses on achieving the broader testing objective rather than just executing steps.

- Adaptability: Adjusts seamlessly to UI changes, new features, or evolving workflows.

- Reasoning Capability: Uses natural language understanding, reinforcement learning, and advanced logic to replicate human-like decision making.

Primary Trends in Agentic Testing

As agentic AI gains traction in QA, several trends are shaping how it’s being applied in real-world testing environments:

- Autonomous Test Generation: AI agents are creating test cases directly from requirements, code, or user journeys, cutting down the time spent on manual test design.

- Self-Healing Test Scripts: When applications change, agents can automatically update locators, workflows, or assertions to keep tests running smoothly.

- End-to-End Workflow Testing: Beyond isolated cases, agents now test complete user journeys, such as checkout or onboarding, without manual intervention.

- Adaptive Execution: Tests adjust dynamically to unexpected UI or API behavior, making them more resilient in agile, fast-changing development cycles.

- Defect Management Automation: Agents can analyze logs, cluster issues, file bugs, and even suggest fixes, streamlining defect triage.

- Integration with CI/CD Pipelines: Agentic testing tools are increasingly embedded into DevOps workflows, enabling continuous, intelligent quality checks.
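Of the trends above, self-healing is the easiest to illustrate in isolation. The sketch below assumes a page is a plain list of attribute dictionaries and that the test stored a snapshot of the element's attributes the last time it passed. The names `locate` and `heal` and the 0.5 similarity threshold are assumptions made for illustration; commercial tools use richer signals than simple attribute overlap.

```python
from typing import Dict, List, Optional, Tuple

Element = Dict[str, str]


def heal(page: List[Element], snapshot: Element,
         min_score: float = 0.5) -> Optional[Element]:
    """Return the element sharing the largest fraction of snapshot attributes."""
    if not snapshot:
        return None
    best, best_score = None, 0.0
    for element in page:
        matches = sum(1 for key, value in snapshot.items() if element.get(key) == value)
        score = matches / len(snapshot)
        if score > best_score:
            best, best_score = element, score
    # Refuse to heal when even the best candidate is too dissimilar.
    return best if best_score >= min_score else None


def locate(page: List[Element], snapshot: Element,
           key: str = "id") -> Tuple[Optional[Element], bool]:
    """Try the primary locator first; fall back to healing. Returns (element, healed)."""
    for element in page:
        if element.get(key) == snapshot[key]:
            return element, False
    healed = heal(page, snapshot)
    return healed, healed is not None


# The button's id changed from "submit-btn" to "pay-button" in a new build.
snapshot = {"id": "submit-btn", "tag": "button", "text": "Pay now", "class": "primary"}
page = [
    {"id": "pay-button", "tag": "button", "text": "Pay now", "class": "primary"},
    {"id": "cancel", "tag": "button", "text": "Cancel", "class": "secondary"},
]
element, was_healed = locate(page, snapshot)
print(element["id"], was_healed)  # pay-button True
```

Returning the `healed` flag matters in practice: a healed locator is a guess, so it is worth surfacing for review rather than silently rewriting the test.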

How Agentic AI Enhances the Software Development Lifecycle (SDLC)

Agentic AI doesn’t just improve isolated testing tasks; it influences the entire SDLC by making quality assurance more proactive, adaptive, and continuous.

- Requirements Phase: Converts user stories or acceptance criteria into ready-to-run test cases, ensuring early alignment between business goals and QA.

- Development Phase: Assists developers by generating unit and integration tests on the fly, catching defects before code moves downstream.

- Testing Phase: Executes autonomous regression, functional, and exploratory tests that adapt to evolving features without constant script maintenance.

- Deployment Phase: Integrates into CI/CD pipelines for continuous validation, accelerating release cycles while maintaining reliability.

- Maintenance Phase: Learns from past defects and system logs to predict high-risk areas, guiding smarter test prioritization over time.

By embedding intelligence across the lifecycle, agentic AI shifts QA from a reactive checkpoint to a strategic enabler of faster, higher-quality software delivery.

Advantages of Agentic AI in Software Testing

Agentic AI brings intelligence, adaptability, and resilience into software testing, addressing the limitations of both manual and scripted automation.

- Faster Test Creation: AI agents can automatically generate test cases from requirements, user stories, or source code. This reduces the need for manual scripting, accelerates coverage, and helps ensure that new features are tested from the earliest stages.

- Adaptive Execution: Unlike brittle test scripts, agentic AI adapts dynamically when UI elements, APIs, or workflows change. It can update selectors or paths on the fly, preventing common test failures and reducing downtime in continuous testing.

- Reduced Maintenance: Test suites often require heavy upkeep in traditional automation. Agentic AI uses self-healing techniques to keep tests functional, drastically lowering maintenance costs and freeing QA teams to focus on strategic tasks.

- Smarter Defect Detection: By analyzing logs, patterns, and historical issues, AI agents can identify bugs more intelligently. They not only detect failures but also group related defects and highlight high-priority issues, improving triage efficiency.

- Continuous Learning: Each test run adds to the system’s knowledge. Over time, agents refine their strategies, prioritize high-risk areas, and optimize regression cycles, leading to progressively better outcomes with minimal human intervention.

- End-to-End Coverage: Beyond isolated tests, agentic AI validates entire user journeys, such as sign-ups or checkouts. This ensures that critical workflows continue to function seamlessly, even as the application evolves.
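The defect-grouping idea mentioned under Smarter Defect Detection can be approximated without any machine learning. The sketch below is a deliberately simple stand-in: it normalizes the variable parts of an error message (numbers and hex addresses) and groups failures that share the resulting signature. The function names and sample messages are hypothetical.

```python
import re
from collections import defaultdict
from typing import Dict, List


def signature(message: str) -> str:
    """Normalize an error message so variable details do not split clusters."""
    normalized = re.sub(r"0x[0-9a-fA-F]+", "<hex>", message)  # memory addresses
    normalized = re.sub(r"\d+", "<n>", normalized)            # ids, durations, ports
    return normalized.strip().lower()


def cluster_failures(failures: Dict[str, str]) -> List[List[str]]:
    """Group test names by error signature, largest cluster first."""
    groups = defaultdict(list)
    for test_name, message in failures.items():
        groups[signature(message)].append(test_name)
    return sorted(groups.values(), key=len, reverse=True)


failures = {
    "test_a": "Timeout after 3000 ms on /cart/41",
    "test_b": "Timeout after 5000 ms on /cart/7",
    "test_c": "NullPointerException at 0x7ffA12",
}
print(cluster_failures(failures))  # [['test_a', 'test_b'], ['test_c']]
```

Sorting by cluster size gives a crude priority order: one root cause that breaks many tests surfaces first.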

Manual Software Testing vs. Agentic AI Software Testing

To understand the impact of agentic AI, it’s helpful to compare how it differs from traditional manual testing across key dimensions.

| Aspect | Manual Software Testing | Agentic AI Software Testing |
| --- | --- | --- |
| Test Creation | Testers write cases manually, which is time-consuming and prone to gaps. | AI agents auto-generate tests from requirements, code, or user flows, ensuring faster and broader coverage. |
| Execution Speed | Relies on human effort, limiting scalability and slowing down release cycles. | Runs tests autonomously and continuously, keeping pace with rapid development and CI/CD pipelines. |
| Adaptability | Struggles with frequent UI or workflow changes, often requiring test redesign. | Adapts dynamically with self-healing capabilities, reducing failures from minor changes. |
| Defect Detection | Depends on tester expertise and manual log analysis, which can miss subtle issues. | Identifies defects intelligently using pattern recognition, clustering, and prioritization for faster triage. |
| Maintenance | Requires ongoing effort to update and manage test suites, especially in agile environments. | Minimizes maintenance with self-updating test cases and learning from past executions. |
| Scalability | Limited by team size and effort, making large-scale testing difficult. | Scales seamlessly through autonomous agents capable of running tests across environments in parallel. |

Best Tools: Boost Your Testing with AI Agents

The adoption of agentic AI is accelerating, with several platforms introducing intelligent agents that make testing faster, more adaptive, and less reliant on human intervention. Among the leading solutions are:

- BrowserStack AI: A set of purpose-built agents that bring autonomy into the testing lifecycle. They can generate test cases, execute them across real devices and browsers, and adapt dynamically to application changes, helping teams deliver reliable software at scale.

- Testsigma Atto: An AI-powered testing assistant launched in 2025 that uses autonomous agents to generate test cases, adapt scripts, and manage execution seamlessly.

- UiPath Test Suite: Extends robotic process automation with agentic testing features, allowing autonomous test orchestration across enterprise systems.

- Virtuoso: Focuses on AI-driven functional and regression testing with self-healing capabilities, enabling agents to adjust to UI and workflow changes.

- AccelQ Agentic Automation: Offers adaptive test workflows that learn from execution history, ensuring faster regression cycles and lower maintenance overhead.

- SoftServe RAG Framework: Applies retrieval-augmented generation to reuse past test scripts, reducing redundancy and accelerating coverage.

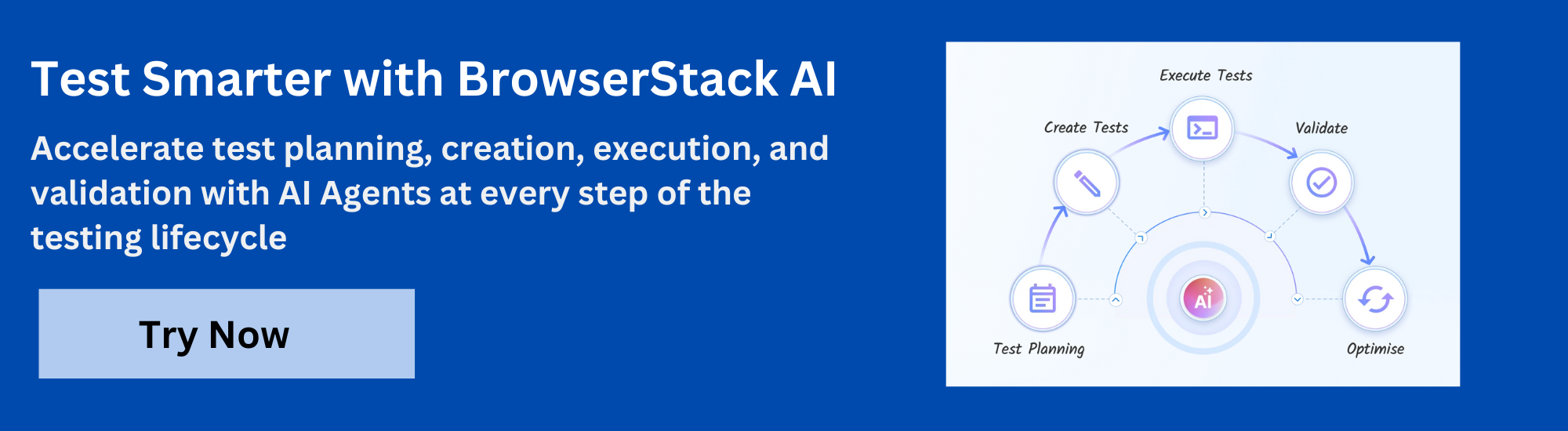

Enhance Your Software Testing with BrowserStack AI Agents

BrowserStack AI Agents bring autonomy and intelligence to every stage of the testing lifecycle. Each agent is purpose-built to solve a critical QA challenge, helping teams test smarter, faster, and at scale.

- Test Case Generator Agent: Automatically creates test cases from user stories, requirements, or code. This reduces manual effort and ensures comprehensive coverage from the start of development.

- Self-Healing Agent: Detects and adapts to changes in the application, such as modified locators, UI shifts, or API updates, keeping test suites stable and reducing flaky failures.

- Low-Code Authoring Agent: Simplifies test creation by allowing teams to write and maintain tests with minimal coding knowledge. It empowers non-technical users to contribute effectively to QA.

- Test Selection Agent: Identifies and runs only the most relevant test cases for a given code change, accelerating feedback loops and optimizing CI/CD pipelines.

- Test Deduplication Agent: Eliminates redundant or overlapping test cases, improving efficiency and ensuring that test suites remain lean without sacrificing coverage.

- A11y Issue Detection Agent: Finds accessibility issues early by scanning for WCAG violations and usability gaps, helping teams deliver inclusive applications.

- Visual Review Agent: Highlights meaningful visual changes in the application while filtering out pixel-level noise. This reduces false positives and makes visual testing reviews faster and clearer.

By combining these specialized agents, BrowserStack enables QA teams to transition from traditional automation to agentic, intelligent, and resilient testing workflows – all within a unified platform.
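Two of the ideas in this list, test selection and test deduplication, reduce to simple set operations once the right data is available. The sketch below is a generic illustration and does not describe how BrowserStack implements its agents. It assumes a hypothetical coverage map from each test to the source files it exercises, and a map from each test to its list of steps.

```python
from typing import Dict, List, Set


def select_tests(coverage: Dict[str, Set[str]], changed_files: List[str]) -> List[str]:
    """Return tests whose covered files overlap the changed files."""
    changed = set(changed_files)
    return sorted(test for test, files in coverage.items() if files & changed)


def deduplicate(steps: Dict[str, List[str]]) -> List[str]:
    """Keep the first test for each distinct step sequence (case-insensitive)."""
    seen = set()
    kept = []
    for name, sequence in steps.items():
        key = tuple(step.strip().lower() for step in sequence)
        if key not in seen:
            seen.add(key)
            kept.append(name)
    return kept


coverage = {
    "test_checkout": {"cart.py", "payment.py"},
    "test_login": {"auth.py"},
    "test_profile": {"auth.py", "profile.py"},
}
print(select_tests(coverage, ["auth.py"]))  # ['test_login', 'test_profile']

steps = {
    "t1": ["Open cart", "Click pay"],
    "t2": ["open cart ", "click pay"],
    "t3": ["Open cart"],
}
print(deduplicate(steps))  # ['t1', 't3']
```

The hard part in practice is producing an accurate coverage map and recognizing near-duplicates that differ in wording, which is where learned models can add value over exact matching.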

Conclusion

Agentic AI is transforming software testing from scripted automation into an era of autonomous, intelligent, and adaptive QA. By introducing agents that can generate, heal, select, and optimize tests, teams can achieve faster feedback, stronger coverage, and more resilient pipelines. While manual testing still plays a role in exploratory and usability checks, the future of scalable, reliable QA lies in leveraging agentic AI.

With solutions like BrowserStack AI Agents, organizations can future-proof their testing strategies, reduce maintenance overhead, and deliver high-quality software at the speed modern development demands.