Most teams assume that end-to-end testing is a slow, repetitive process. Writing test scripts, maintaining Playwright selectors, and updating tests as the application changes feel like a grind that everyone simply accepts as part of the job.

But what if the most time-consuming parts of testing, including scripting, setup, and debugging flaky tests, could be largely automated? What if tests could almost write themselves while still reflecting real user behavior?

In fact, AI-powered tools like Playwright MCP (Model Context Protocol) change how end-to-end tests are created and maintained. Instead of manually scripting every step, tests are generated from intent, application structure, and runtime behavior.

Overview

How to automatically generate test cases in Playwright?

Playwright supports automatic test creation in two ways. Playwright Codegen records real user interactions and converts them into test code. Playwright Test Agents use AI and MCP to generate and maintain tests from high-level intent.

Built-in test generator (Codegen)

Playwright includes a built-in test generator called Codegen that converts browser interactions into executable test scripts. It is designed for quickly creating baseline tests by recording what a user does in the browser.

- How it works: You interact with the application in a real browser, and Playwright generates the corresponding test code in real time, prioritizing resilient locators such as roles, visible text, and test IDs.

- CLI usage: Run npx playwright codegen [URL] to launch a browser and the Playwright Inspector, where the test code is generated as actions are performed.

- VS Code usage: Use the Playwright for VS Code extension and select Record new from the Testing panel to start recording directly from the editor.

- What it generates: Actions like click and fill, along with assertions for visibility, text, and values.

- Environment coverage: Supports recording with different viewports, device emulation, color schemes, and geolocation settings.
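
The environment options above map to flags on the `playwright codegen` command. The sketch below assembles such a command from a small options object. The `CodegenOptions` type and `buildCodegenArgs` helper are hypothetical names used for illustration; the flags themselves (`--viewport-size`, `--device`, `--color-scheme`, `--geolocation`) are the ones the Codegen CLI documents, but check `npx playwright codegen --help` for your installed version.

```typescript
// Hypothetical helper: maps recording options to `playwright codegen` CLI flags.
interface CodegenOptions {
  url: string;
  viewport?: { width: number; height: number };
  device?: string; // a Playwright device descriptor name, e.g. "iPhone 13"
  colorScheme?: "light" | "dark";
  geolocation?: { latitude: number; longitude: number };
}

function buildCodegenArgs(options: CodegenOptions): string[] {
  const args: string[] = ["playwright", "codegen"];
  if (options.viewport) {
    args.push(`--viewport-size=${options.viewport.width},${options.viewport.height}`);
  }
  if (options.device) {
    args.push(`--device=${options.device}`);
  }
  if (options.colorScheme) {
    args.push(`--color-scheme=${options.colorScheme}`);
  }
  if (options.geolocation) {
    args.push(`--geolocation=${options.geolocation.latitude},${options.geolocation.longitude}`);
  }
  // The target URL goes last, after all flags.
  args.push(options.url);
  return args;
}

// Example: record against a placeholder URL in dark mode with a fixed location.
const codegenArgs = buildCodegenArgs({
  url: "https://example.com",
  colorScheme: "dark",
  geolocation: { latitude: 41.89, longitude: 12.49 },
});

// Values containing spaces (such as device names) need quoting when pasted into a shell.
console.log(["npx", ...codegenArgs].join(" "));
```

The returned array can be passed as an argument list to a process launcher, which avoids shell quoting issues for device names such as "iPhone 13".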

AI-powered test generation with Playwright Test Agents

For more advanced automation, Playwright provides Test Agents that use large language models through the Model Context Protocol. This approach focuses on intent-driven testing rather than step-by-step recording.

- Planner agent: Explores the application and produces a structured test plan in Markdown that outlines flows and validations.

- Generator agent: Translates the test plan into executable Playwright test code.

- Healer agent: Detects broken tests and adapts locators or steps when the UI changes.

- Level of automation: Enables high-level instructions such as testing a login flow, while the agents handle planning, code generation, and ongoing maintenance.
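
To make the Planner's Markdown output more concrete, here is a minimal TypeScript sketch that renders a test plan as Markdown. The `TestPlan` shape, the `renderPlan` helper, and the login scenario are assumptions made for illustration; the plan layout the Planner agent actually produces may differ.

```typescript
// Hypothetical plan shape; the real Planner agent's Markdown layout may differ.
interface PlanScenario {
  title: string;
  steps: string[];
  expected: string[];
}

interface TestPlan {
  feature: string;
  scenarios: PlanScenario[];
}

// Render one heading per scenario, numbered steps, and bulleted expectations.
function renderPlan(plan: TestPlan): string {
  const lines: string[] = [`# Test plan: ${plan.feature}`];
  for (const scenario of plan.scenarios) {
    lines.push("", `## ${scenario.title}`, "", "Steps:");
    scenario.steps.forEach((step, index) => lines.push(`${index + 1}. ${step}`));
    lines.push("", "Expected results:");
    for (const expectation of scenario.expected) {
      lines.push(`- ${expectation}`);
    }
  }
  return lines.join("\n");
}

const loginPlan: TestPlan = {
  feature: "Login",
  scenarios: [
    {
      title: "Valid credentials",
      steps: ["Open the login page", "Enter a valid email and password", "Submit the form"],
      expected: ["The dashboard is shown"],
    },
    {
      title: "Invalid password",
      steps: ["Open the login page", "Enter a valid email and a wrong password", "Submit the form"],
      expected: ["An error message is shown", "The user stays on the login page"],
    },
  ],
};

console.log(renderPlan(loginPlan));
```

Keeping the plan in a readable format like this is what makes the review step practical: a tester can correct a scenario before any test code is generated from it.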

In this article, I will explain how AI-driven test generation works with Playwright MCP, where it fits into real-world testing workflows, and what teams should watch out for when adopting it.

What Is Playwright MCP?

Playwright MCP is a Model Context Protocol (MCP) server for Playwright. It is the layer that enables AI models to work with Playwright in a structured and reliable way during test generation.

Instead of treating an AI model as a simple text generator, MCP defines how the model receives context about the application, the test framework, and the current execution state.

At its core, MCP acts as a bridge between intent and execution. It supplies the AI with details such as page structure, available Playwright locators, prior test steps, and execution feedback.

This allows the model to reason about what to test, how to navigate the application, and how to express those actions using Playwright’s APIs.

With MCP in place, Playwright AI workflows can explore an application, create test scenarios, generate executable test code, and react to failures with awareness of what changed. The protocol ensures that AI-generated tests stay grounded in the actual application and Playwright’s capabilities.
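
As a rough picture of what travels over the protocol, MCP messages are JSON-RPC 2.0, and a client asks the server to run a tool with the `tools/call` method. The sketch below builds two such requests. The `toolCall` helper and the example URL are hypothetical. `browser_navigate` and `browser_snapshot` are tool names exposed by the Playwright MCP server, but treat the exact argument shapes as assumptions and confirm them against the tool list your server version reports.

```typescript
// Shape of a JSON-RPC 2.0 request that MCP uses to invoke a server tool.
interface ToolCallRequest {
  jsonrpc: "2.0";
  id: number;
  method: "tools/call";
  params: { name: string; arguments: Record<string, unknown> };
}

let nextRequestId = 1;

// Hypothetical helper that wraps a tool name and its arguments in a request envelope.
function toolCall(name: string, args: Record<string, unknown> = {}): ToolCallRequest {
  return {
    jsonrpc: "2.0",
    id: nextRequestId++,
    method: "tools/call",
    params: { name, arguments: args },
  };
}

// Ask the server to open a page, then to return a structured snapshot of it.
const navigateRequest = toolCall("browser_navigate", { url: "https://example.com/login" });
const snapshotRequest = toolCall("browser_snapshot");

// Typical client-side registration of the Playwright MCP server.
const mcpClientConfig = {
  mcpServers: {
    playwright: { command: "npx", args: ["@playwright/mcp@latest"] },
  },
};

console.log(JSON.stringify(navigateRequest, null, 2));
console.log(JSON.stringify(snapshotRequest, null, 2));
console.log(JSON.stringify(mcpClientConfig, null, 2));
```

The snapshot response is what gives the model its grounding: it describes the elements that actually exist on the page, so later actions can refer to them instead of guessing at selectors.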

Why Use AI-Based Test Generation in Playwright

AI-based test generation in Playwright becomes relevant when test creation and maintenance start consuming more effort than validation itself. As applications evolve, step-driven scripts and recordings struggle to keep up with UI changes and branching user flows.

This is why teams turn to AI-based generation:

- Intent-driven test creation: AI generates Playwright tests from goals such as validating a checkout or onboarding flow, so test logic reflects user behavior rather than the exact sequence of DOM interactions captured at one point in time.

- Lower maintenance under UI change: By reasoning over page structure and runtime feedback, AI adapts locators and steps when the UI shifts, which reduces test breakage caused by layout refactors or component updates.

- Deeper functional coverage: AI explores alternate paths, state transitions, and negative scenarios that are rarely recorded manually, expanding coverage without proportionally increasing test authoring effort.

- Shift in tester effort: Teams spend less time writing and fixing scripts and more time validating assumptions, refining assertions, and deciding where automation adds real value.

- Faster test scalability: AI-generated tests scale across new features and flows without requiring linear increases in scripting time, which helps large applications keep pace with frequent releases.

- Consistent test structure: Generated tests follow consistent patterns and conventions, making large test suites easier to review, reason about, and extend over time.

Difference Between Playwright Codegen and Playwright MCP

Playwright Codegen and Playwright MCP solve different problems, even though both aim to reduce manual test writing. Codegen focuses on capturing actions. MCP focuses on understanding intent and application behavior.

Here are the key differences between Playwright Codegen and Playwright MCP:

| Aspect | Playwright Codegen | Playwright MCP |
| --- | --- | --- |
| How tests are created | Records browser interactions such as clicks, navigation, and form inputs and converts them directly into Playwright code. | Generates tests from high-level goals like validating a login or checkout flow using application context and runtime signals. |
| Level of abstraction | Operates at the interaction level and mirrors exactly what was recorded in a single session. | Operates at the intent and flow level and derives steps dynamically based on page structure and behavior. |
| Awareness of application state | Limited to the state observed during recording with no understanding beyond captured actions. | Reasons about DOM structure, available locators, navigation paths, and execution feedback across runs. |
| Response to UI changes | Tests often break when selectors or layouts change and require manual updates. | Can adapt locators and steps by re-evaluating the UI when changes occur. |
| Maintenance effort | Requires ongoing manual maintenance as the application evolves. | Reduces maintenance by regenerating or healing tests when failures occur. |
| Best-suited scenarios | Quick baselines, learning Playwright APIs, and prototyping simple flows. | Large or fast-changing applications where coverage and long-term maintainability matter. |

Core Building Blocks Behind Playwright AI Test Generation

AI-driven test generation in Playwright works because multiple components collaborate, each handling a specific responsibility in the test lifecycle. Together, these blocks allow tests to be planned, generated, executed, and corrected with minimal manual input.

This is how Playwright MCP turns intent into executable tests:

- Model Context Protocol (MCP): Provides structured context to the AI, including page structure, available locators, Playwright APIs, and execution feedback, so generated tests align with the actual application and framework behavior.

- Application exploration layer: Navigates the application autonomously to understand routes, states, and UI transitions instead of relying on a single recorded session.

- Intent-to-plan conversion: Translates high-level goals into a structured test plan that defines flows, validations, and checkpoints before any code is written.

- Test code generation engine: Converts the planned steps into executable Playwright tests that follow framework conventions and reusable patterns.

- Runtime feedback loop: Observes test execution results and feeds failures, DOM changes, and timing issues back into the system for correction.

- Self-healing logic: Adjusts locators and steps when UI changes occur, reducing manual intervention and test churn.
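
As a rough illustration of the self-healing idea, the sketch below checks an ordered list of candidate locators against a snapshot of the page and keeps the first one that still matches exactly one element. The `SnapshotNode` shape and the `healLocator` and `toLocatorCode` helpers are hypothetical. The Healer agent's real strategy is not described in this article and likely relies on model reasoning rather than a fixed fallback list.

```typescript
// Simplified view of one element in a page snapshot (an assumption for this sketch).
interface SnapshotNode {
  role: string;
  name: string;
  testId?: string;
}

type Candidate =
  | { kind: "testId"; value: string }
  | { kind: "role"; role: string; name: string };

function matchesCandidate(node: SnapshotNode, candidate: Candidate): boolean {
  return candidate.kind === "testId"
    ? node.testId === candidate.value
    : node.role === candidate.role && node.name === candidate.name;
}

// Returns the first candidate that matches exactly one node, or undefined if none does.
function healLocator(snapshot: SnapshotNode[], candidates: Candidate[]): Candidate | undefined {
  return candidates.find(
    (candidate) => snapshot.filter((node) => matchesCandidate(node, candidate)).length === 1,
  );
}

// Express the chosen candidate as Playwright locator source code.
function toLocatorCode(candidate: Candidate): string {
  return candidate.kind === "testId"
    ? `page.getByTestId(${JSON.stringify(candidate.value)})`
    : `page.getByRole(${JSON.stringify(candidate.role)}, { name: ${JSON.stringify(candidate.name)} })`;
}

// After a refactor the test ID is gone, but the button's role and name survive.
const snapshotAfterRefactor: SnapshotNode[] = [
  { role: "textbox", name: "Email" },
  { role: "button", name: "Sign in" },
];

const healed = healLocator(snapshotAfterRefactor, [
  { kind: "testId", value: "submit-btn" },
  { kind: "role", role: "button", name: "Sign in" },
]);

console.log(healed ? toLocatorCode(healed) : "no unique match; needs human review");
```

Requiring exactly one match is a deliberate choice in this sketch: a locator that matches several elements would let the test pass against the wrong target, which is worse than a clear failure.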

When to Use Playwright MCP and When It Is Not the Right Choice

Playwright MCP is designed for situations where writing and maintaining end-to-end tests becomes harder than validating application behavior. Its value depends on how dynamic the product is and how much flexibility teams need in test generation.

Here’s when to use the Playwright AI test case generator:

- Rapidly changing user interfaces: MCP adapts tests when layouts, components, or selectors change, which reduces test churn in fast-moving front-end codebases.

- Complex, multi-step user journeys: Flows like onboarding, payments, or role-based access benefit from intent-driven generation instead of rigid recorded steps.

- Large test surface areas: MCP helps scale coverage across features and paths without requiring a linear increase in manual scripting.

- Long-term test maintenance challenges: Teams spending more time fixing tests than reviewing failures gain the most from self-adapting generation.

Here’s when not to use the Playwright AI test case generator:

- Stable or static applications: When UI and flows rarely change, recorded or handwritten tests are simpler and easier to control.

- Strictly deterministic validations: Precise UI measurements, pixel-level assertions, or timing-sensitive checks require explicit test logic.

- Highly regulated test logic: Scenarios that demand full transparency and fixed steps may not align well with AI-generated flows.

How to Use the Playwright AI Test Generator

Using the Playwright AI test generator follows a step-based flow where intent is converted into executable tests through MCP. Each step builds on the previous one and moves from definition to execution.

Step 1: Define the test intent

Start by stating what needs to be validated, such as verifying a login flow, checking access control, or confirming a checkout journey. Focus on the outcome and rules, not individual UI actions.

Step 2: Allow application exploration

Let the AI explore the application to understand pages, navigation paths, available elements, and state transitions. This exploration provides the context required for accurate test planning.

Step 3: Generate a structured test plan

The AI produces a test plan that outlines flows, checkpoints, and validations. Review this plan to ensure it reflects expected user behavior and business logic.

Step 4: Convert the plan into Playwright tests

The approved plan is translated into executable Playwright test code that follows framework conventions and uses resilient locator strategies.
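
The plan-to-code step can be pictured as a mapping from structured steps to Playwright calls. The sketch below is a deliberately simple, template-based stand-in: the `PlanStep` type and the `renderStep` and `renderTest` helpers are hypothetical, and the actual generator uses a language model rather than fixed templates. The Playwright calls it emits (`page.goto`, `getByLabel`, `getByRole`, `getByText`, `expect(...).toBeVisible()`) are standard APIs.

```typescript
// Hypothetical structured steps that a reviewed plan might be reduced to.
type PlanStep =
  | { action: "goto"; url: string }
  | { action: "fill"; label: string; value: string }
  | { action: "click"; role: string; name: string }
  | { action: "expectVisible"; text: string };

// JSON.stringify yields a safely quoted and escaped string literal.
function quote(value: string): string {
  return JSON.stringify(value);
}

// Map each step to one line of Playwright code using user-facing locators.
function renderStep(step: PlanStep): string {
  switch (step.action) {
    case "goto":
      return `await page.goto(${quote(step.url)});`;
    case "fill":
      return `await page.getByLabel(${quote(step.label)}).fill(${quote(step.value)});`;
    case "click":
      return `await page.getByRole(${quote(step.role)}, { name: ${quote(step.name)} }).click();`;
    case "expectVisible":
      return `await expect(page.getByText(${quote(step.text)})).toBeVisible();`;
  }
}

// Wrap the rendered steps in a complete Playwright test file.
function renderTest(title: string, steps: PlanStep[]): string {
  const body = steps.map((step) => `  ${renderStep(step)}`).join("\n");
  return [
    'import { test, expect } from "@playwright/test";',
    "",
    `test(${quote(title)}, async ({ page }) => {`,
    body,
    "});",
  ].join("\n");
}

// Placeholder URL and credentials, for illustration only.
const generatedSource = renderTest("user can sign in", [
  { action: "goto", url: "https://example.com/login" },
  { action: "fill", label: "Email", value: "user@example.com" },
  { action: "fill", label: "Password", value: "example-password" },
  { action: "click", role: "button", name: "Sign in" },
  { action: "expectVisible", text: "Welcome back" },
]);

console.log(generatedSource);
```

The emitted locators target labels, roles, and visible text rather than CSS paths, which is the kind of resilient locator strategy this step refers to.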

Step 5: Execute and capture feedback

Run the generated tests and observe execution results, including failures, timing issues, and DOM changes, which are fed back into the system.

Step 6: Refine intent and regenerate if needed

Update the intent or constraints based on results, allowing the AI to adjust test logic without rewriting scripts manually.
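
Steps 4 to 6 form a loop: generate, run, feed failures back, and regenerate. The sketch below models that loop with a bounded number of attempts. The `LoopDeps` interface, the `generateUntilGreen` function, and the stubbed generator and runner are all hypothetical and synchronous for brevity; a real workflow would call an AI agent and the Playwright test runner asynchronously.

```typescript
interface RunResult {
  passed: boolean;
  failures: string[];
}

// Injected behaviour, so the loop itself stays independent of any specific tool.
interface LoopDeps {
  generate: (intent: string, feedback: string[]) => string; // returns test source
  run: (testSource: string) => RunResult;
}

interface LoopOutcome {
  passed: boolean;
  attempts: number;
  source: string | null;
}

// Regenerate with the previous run's failures as feedback, up to maxAttempts times.
function generateUntilGreen(intent: string, deps: LoopDeps, maxAttempts: number = 3): LoopOutcome {
  let feedback: string[] = [];
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const source = deps.generate(intent, feedback);
    const result = deps.run(source);
    if (result.passed) {
      return { passed: true, attempts: attempt, source };
    }
    feedback = result.failures;
  }
  // Out of attempts: hand the problem back to a person instead of looping forever.
  return { passed: false, attempts: maxAttempts, source: null };
}

// Stubs: the first draft fails, and the draft produced with feedback passes.
const stubDeps: LoopDeps = {
  generate: (_intent, feedback) => (feedback.length === 0 ? "draft-1" : "draft-2"),
  run: (source) =>
    source === "draft-2"
      ? { passed: true, failures: [] }
      : { passed: false, failures: ["locator for 'Sign in' not found"] },
};

const loopOutcome = generateUntilGreen("verify the login flow", stubDeps);
console.log(loopOutcome);
```

The attempt limit matters in practice: a failure that survives several regenerations is more likely a real application bug or an unclear intent than a test problem, and should reach a reviewer.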

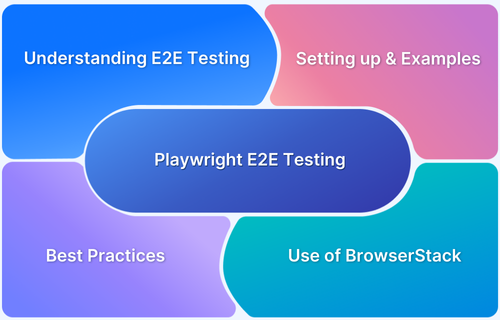

Running AI-Generated Playwright Tests Across Real Browsers

AI-generated tests can only fully validate application behavior when they run on real browsers, because rendering engines, event handling, and input behavior differ across browsers and can affect the user experience.

Cross-browser differences to consider:

- Rendering differences: UI elements may display differently across browsers, impacting layout or visibility checks.

- Interaction discrepancies: Actions like clicks, hovers, or drag-and-drop may behave differently depending on the browser engine.

- Timing and responsiveness issues: Animations, transitions, or dynamic content may cause tests to fail if not observed on actual browsers.
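
A common first step toward catching these differences is to run the same generated tests against each browser engine. The sketch below shows the relevant part of a Playwright configuration as a plain object. The `./tests` directory and the retry count are assumptions, and in a real `playwright.config.ts` this object would be the file's default export. Note that this covers Playwright's bundled Chromium, Firefox, and WebKit builds; running on real devices or a cloud grid needs additional, provider-specific setup that is not shown here.

```typescript
// Sketch of a Playwright config with one project per browser engine.
// In a real playwright.config.ts this object would be the default export.
const crossBrowserConfig = {
  testDir: "./tests", // assumed location of the generated tests
  retries: 1, // one retry helps separate flaky timing from consistent failures
  projects: [
    { name: "chromium", use: { browserName: "chromium" } },
    { name: "firefox", use: { browserName: "firefox" } },
    { name: "webkit", use: { browserName: "webkit" } },
  ],
};

// List the engines every test will run against.
const projectNames = crossBrowserConfig.projects.map((project) => project.name);
console.log(projectNames.join(", "));
```

With a configuration like this, `npx playwright test` runs every test once per project, and `npx playwright test --project=firefox` limits a run to a single engine while investigating a browser-specific failure.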

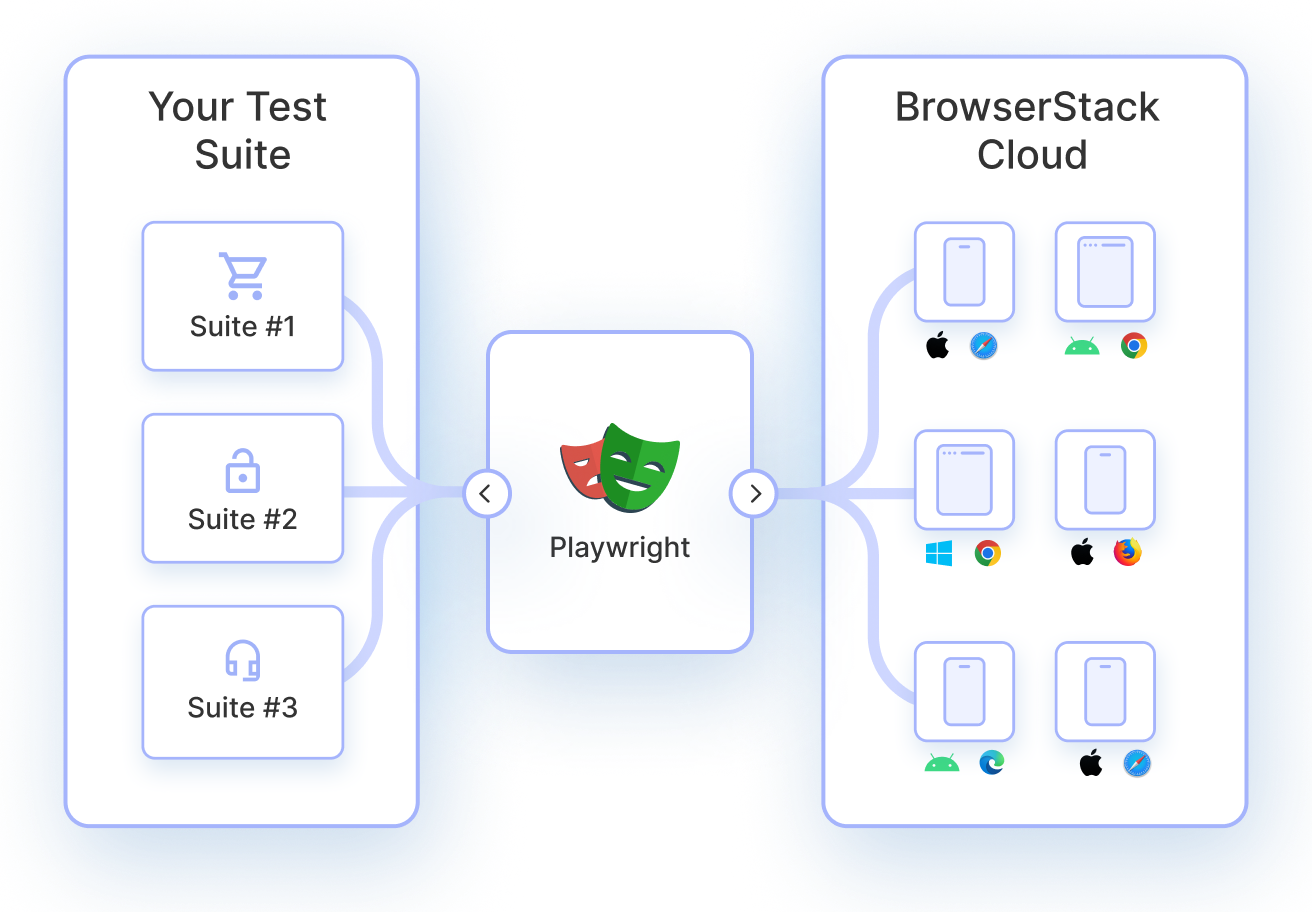

Platforms like BrowserStack allow teams to run AI-generated Playwright tests across real browsers and devices at scale. This helps ensure that tests reflect actual user conditions, verify true functionality, and catch edge-case failures that automated generation alone cannot anticipate.

Here are the core features that make BrowserStack ideal for validating AI-driven Playwright tests:

- Real Device Cloud: Access a wide range of real browsers and devices and ensure tests run in real user conditions.

- Parallel Testing: Execute multiple AI-generated tests simultaneously, reducing overall test cycles and speeding up validation of complex flows.

- Test Reporting & Analytics: Gain detailed insights into test outcomes, failures, and trends, helping teams refine AI-generated tests and detect hidden issues.

- Web Performance Testing: Monitor how pages perform under real conditions, validating not only functionality but also responsiveness and load behavior.

- Payment Workflows Testing: Safely test critical end-to-end flows like checkout and payment processes, which often involve multiple steps and edge cases that AI-generated tests can uncover.

Conclusion

AI-powered test generation with Playwright MCP transforms how teams approach end-to-end testing. By converting high-level intent into executable tests, it reduces manual scripting, adapts to UI changes, and expands coverage across complex flows and edge cases.

Running these AI-generated tests on real browsers ensures that the tests reflect true user behavior, catch hidden interaction or rendering issues, and validate critical workflows under realistic conditions. Platforms like BrowserStack provide the infrastructure, parallel execution, and analytics needed to scale and monitor these tests effectively.