Most testers assume that avoiding Playwright bot detection is just a matter of running tests in headless mode and adding a few retries.

I thought the same, until my “stable” Playwright tests started getting blocked in a production-like environment.

Pages redirected to challenge screens, logins failed silently, and entire test runs stalled. I tried increasing timeouts, slowing down steps, even rerunning the suite multiple times, but nothing worked. The more I tweaked the tests, the more unreliable they became.

The real shift happened when I realized the issue wasn’t what my tests were doing, but how they were doing it. My automation didn’t behave like a real user, and modern detection systems are built to catch exactly that.

Overview

Playwright bot detection refers to the mechanisms websites use to identify automated browser activity by analyzing browser fingerprints, execution patterns, network behavior, and user interactions that differ from real human users.

How to avoid bot detection with Playwright

Avoiding bot detection with Playwright isn’t about hiding automation; it’s about making your tests behave like real users in real environments. The following strategies align your automation’s setup and behavior with real user interactions.

Core Technical Adjustments

- Run Playwright in headed or real-browser environments where possible

- Use realistic viewport sizes, user agents, and OS/browser combinations

- Avoid automation-specific flags and unnecessary browser modifications

- Maintain persistent browser contexts instead of fresh profiles

Behavioral and Network Strategies

- Simulate natural user interactions with realistic delays, typing, and scrolling

- Avoid instant navigation and overly fast execution flows

- Respect normal page load and resource timing

- Keep request frequency and navigation patterns close to real-user behavior

This article explains how Playwright bot detection works, why Playwright’s defaults can trigger it, and how to build more realistic automation.

Understanding How Bot Detection Works for Playwright Users

Bot detection systems don’t detect Playwright directly; they detect non-human patterns. For Playwright users, these patterns usually come from how automation behaves, not from the tool itself.

- Client-Side Signals: Websites inspect browser-level details through JavaScript, such as headless indicators, browser properties, fingerprinting data (canvas, WebGL, fonts), and mismatches in timezone or language. Default Playwright configurations can unintentionally expose these signals.

- Server-Side Traffic Analysis: On the backend, applications monitor request timing, navigation flow, and session continuity. Playwright scripts often trigger red flags by sending requests too quickly, skipping intermediate pages, or starting every run with a fresh session.

- Behavioral Analysis: Real users hesitate, scroll, pause, and interact inconsistently. Automated flows that click and type instantly, with perfect precision, are easy to identify as non-human.


Ethical, Legal, and Compliance Considerations

Before focusing on how to avoid bot detection with Playwright, it’s important to clarify why and where these techniques should be applied. Bot detection exists to prevent abuse, fraud, and unauthorized access, not to block legitimate testing.

For QA engineers and developers, Playwright automation should be used within approved environments such as development, staging, or production systems you own or have explicit permission to test. Attempting to bypass safeguards on third-party platforms or protected user flows can violate terms of service and legal agreements.

From a compliance perspective:

- Respect application security controls and rate limits

- Avoid automating flows protected by explicit anti-bot policies without authorization

- Treat CAPTCHAs and challenge pages as signals to adjust test behavior, not obstacles to defeat

- Ensure automation aligns with internal security and governance standards

Ethical Playwright usage focuses on reducing false positives in legitimate test automation, not evading security mechanisms. When approached responsibly, improving realism in automation enhances test reliability without crossing legal or ethical boundaries.
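
One way to enforce the "approved environments only" rule in code is to refuse to start a run against a host that is not explicitly allowlisted. The sketch below is a minimal example under stated assumptions. The `APPROVED_HOSTS` set, its host names, and the `assertApprovedTarget` helper are hypothetical and are not part of Playwright.

```javascript
// Hypothetical allowlist: hosts you own or have written permission to test.
const APPROVED_HOSTS = new Set([
  'localhost',
  'staging.example.com',
  'qa.example.com',
]);

// Hypothetical helper (not a Playwright API): throws before any browser is
// launched if the target host is not on the allowlist. Returns the hostname.
function assertApprovedTarget(baseURL, approved = APPROVED_HOSTS) {
  const { hostname } = new URL(baseURL);
  if (!approved.has(hostname)) {
    throw new Error(`Refusing to run automation against unapproved host: ${hostname}`);
  }
  return hostname;
}
```

Calling this at the start of a global setup script, with the same base URL your tests use, stops a misconfigured run before it sends any traffic.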


Playwright and Bot Detection: The Default Behavior

Playwright is engineered to deliver fast, reliable, and deterministic browser automation. While these characteristics are highly effective for test execution, they can differ significantly from real-world user behavior and, as a result, may trigger bot detection systems.

By default, Playwright typically:

- Runs browsers in headless mode

- Creates new, stateless browser contexts for each execution

- Performs interactions instantly without natural pauses

- Exposes automation-related browser properties

Individually, these behaviors are not inherently problematic. However, when combined, they form a usage pattern that appears highly structured and non-human. In contrast, real users operate with persistent sessions, variable interaction timing, and imperfect navigation flows.

Recognizing these default behaviors is essential for Playwright users, as reducing bot detection risk requires aligning automation execution with realistic browser and user interaction patterns rather than modifying test logic itself.
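
To see which of these defaults your own setup exposes, you can read a few properties from inside the page. This is a minimal sketch, assuming a Playwright `page` is already open. `inspectAutomationDefaults` is a hypothetical helper name. The `HeadlessChrome` check is only a heuristic, and its result depends on the browser and Playwright version.

```javascript
// Hypothetical helper (not a Playwright API): reads properties that detection
// scripts commonly inspect. page.evaluate runs the callback inside the browser.
async function inspectAutomationDefaults(page) {
  const props = await page.evaluate(() => ({
    webdriver: navigator.webdriver,
    userAgent: navigator.userAgent,
    languages: navigator.languages,
    timeZone: Intl.DateTimeFormat().resolvedOptions().timeZone,
  }));

  return {
    ...props,
    // Heuristic: headless Chromium builds have reported "HeadlessChrome" in the UA.
    headlessUserAgent: /HeadlessChrome/.test(props.userAgent),
    // navigator.webdriver is typically true when the browser is under automation control.
    automationFlag: props.webdriver === true,
  };
}
```

Logging the result once in headless mode and once in headed mode makes the difference between the two configurations concrete.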

These default behaviors are often amplified by local machines and custom CI setups that don’t fully reflect real user environments.

Platforms that provide access to real, production-like browsers, such as BrowserStack Automate, help reduce these gaps by running Playwright tests in environments that closely mirror how real users access modern applications.

Running Playwright in a More Human-Like Way

Reducing bot detection with Playwright starts by configuring the browser environment and execution flow to better reflect real user behavior. Rather than relying on default automation settings, Playwright tests should be tuned to operate under conditions that closely resemble how users actually browse and interact with applications.

This includes running tests in realistic browser modes, maintaining consistent environments, and avoiding overly optimized execution paths that rarely occur in real-world usage. Small adjustments at this level can significantly improve test stability and reduce false positives caused by bot detection systems.
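
As a starting point, you can choose the browser mode in one place instead of hard-coding it in each test. This is a minimal sketch, assuming hypothetical `HEADED` and `BROWSER_CHANNEL` environment variables. `headless`, `channel`, and `slowMo` are real options of Playwright's `chromium.launch()`. `channel: 'chrome'` requires Google Chrome to be installed on the machine.

```javascript
// Hypothetical helper: builds options for chromium.launch() from environment
// variables. HEADED and BROWSER_CHANNEL are variable names assumed for this sketch.
function launchOptionsFrom(env = process.env) {
  const headed = env.HEADED === '1';
  const options = {
    // Headed where a display is available; headless otherwise (e.g. CI runners without a display).
    headless: !headed,
  };
  if (env.BROWSER_CHANNEL) {
    // e.g. 'chrome' or 'msedge' to use a branded browser install instead of bundled Chromium.
    options.channel = env.BROWSER_CHANNEL;
  }
  if (headed) {
    // Small fixed delay (ms) between Playwright operations; mainly useful for watching runs.
    options.slowMo = 50;
  }
  return options;
}
```

Usage would look like `const browser = await chromium.launch(launchOptionsFrom());`. Because the environment is passed in as an argument, the same function is easy to test without touching `process.env`.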

Simulating Human-Like Interactions

User interaction patterns are a critical signal for bot detection. Automated actions that execute instantly or with perfect precision often stand out as synthetic.

To make interactions more human-like:

- Introduce natural delays between actions such as clicks and navigation

- Simulate real typing behavior instead of instantly setting input values

- Scroll pages gradually rather than jumping directly to elements

- Allow time for visual rendering and content consumption before interaction

These interaction strategies not only reduce detection risks but also result in tests that more accurately reflect real user experiences, uncovering issues that purely deterministic automation might miss.
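
You could implement these interaction guidelines as two small helpers. One types character by character with variable pauses; the other scrolls in increments with the mouse wheel. This is a sketch, not a prescribed implementation. It uses the Playwright APIs `page.locator().click()`, `page.keyboard.type`, `page.mouse.wheel`, and `page.waitForTimeout`. The helper names, delay ranges, and step size are illustrative assumptions, and the `rng` parameter exists only so the timing can be made deterministic.

```javascript
// Random integer in [min, max]; rng defaults to Math.random.
function randomBetween(min, max, rng = Math.random) {
  return Math.floor(min + rng() * (max - min + 1));
}

// Hypothetical helper: clicks a field, then types one character at a time,
// pausing a variable 60-180 ms after each keystroke.
async function humanType(page, selector, text, rng = Math.random) {
  await page.locator(selector).click();
  for (const ch of text) {
    await page.keyboard.type(ch);
    await page.waitForTimeout(randomBetween(60, 180, rng));
  }
}

// Hypothetical helper: scrolls down `distance` pixels in wheel steps of at most
// `step` pixels, pausing 80-250 ms between steps instead of jumping at once.
async function gradualScroll(page, distance, step = 120, rng = Math.random) {
  let remaining = distance;
  while (remaining > 0) {
    const delta = Math.min(step, remaining);
    await page.mouse.wheel(0, delta);
    remaining -= delta;
    await page.waitForTimeout(randomBetween(80, 250, rng));
  }
}
```

When a fixed per-key delay is enough, Playwright's `locator.pressSequentially(text, { delay })` is the simpler option. The loop above trades that simplicity for variable timing.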


Browser Fingerprinting and How to Reduce It

Browser fingerprinting is one of the most common ways websites distinguish automated sessions from real users. Instead of relying on a single “bot flag,” fingerprinting collects multiple browser and device characteristics and combines them into a unique identity.

If your Playwright session looks inconsistent, unusual, or overly uniform across runs, it can trigger risk scoring and lead to blocks or verification challenges.

What Sites Commonly Fingerprint

Detection systems often evaluate a mix of these signals:

- Device and browser identity: user-agent, platform, browser version

- Locale signals: language, timezone, region settings

- Rendering fingerprints: canvas and WebGL output differences

- Fonts and media capabilities: available fonts, audio/video support

- Hardware hints: screen size, CPU cores, memory indicators

Playwright can be flagged when these signals do not align-for example, a user-agent that claims “Windows Chrome” while other properties suggest a different platform, or a timezone that doesn’t match the IP region.
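
You can check for the kind of inconsistency described above before a run starts. The sketch below compares a snapshot of browser properties against the profile the test intends to present. You could collect the snapshot with `page.evaluate`, reading `navigator.userAgent`, `navigator.platform`, `navigator.languages`, and `Intl.DateTimeFormat().resolvedOptions().timeZone`. The snapshot shape, the `auditFingerprint` name, and the simple OS rules are assumptions for illustration. They do not describe how any particular detection vendor scores sessions, and the sketch does not check IP geolocation.

```javascript
// Pairs an OS token in the user-agent with the navigator.platform prefix it implies.
// Deliberately minimal: only desktop Windows and macOS are covered.
const UA_PLATFORM_RULES = [
  { os: 'Windows', ua: /Windows NT/, platform: /^Win/ },
  { os: 'macOS', ua: /Macintosh/, platform: /^Mac/ },
];

// Hypothetical helper: compares a browser snapshot with the intended profile.
// snapshot: { userAgent, platform, timeZone, languages }
// expected: { timeZone, locale } (either may be omitted)
// Returns human-readable mismatch descriptions (empty array if consistent).
function auditFingerprint(snapshot, expected = {}) {
  const issues = [];

  for (const rule of UA_PLATFORM_RULES) {
    if (rule.ua.test(snapshot.userAgent) && !rule.platform.test(snapshot.platform)) {
      issues.push(`user-agent claims ${rule.os} but navigator.platform is "${snapshot.platform}"`);
    }
  }

  if (expected.timeZone && snapshot.timeZone !== expected.timeZone) {
    issues.push(`timezone is ${snapshot.timeZone}, expected ${expected.timeZone}`);
  }

  const primaryLanguage = (snapshot.languages || [])[0];
  if (expected.locale && primaryLanguage !== expected.locale) {
    issues.push(`primary language is ${primaryLanguage}, expected ${expected.locale}`);
  }

  return issues;
}
```

A non-empty result is a cue to fix the configuration, typically by removing an override, rather than to add more spoofing.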

How to Reduce Fingerprinting Risk in Playwright

For legitimate testing, the objective is not to “spoof everything,” but to maintain consistency and realism.

Practical approaches include:

- Keep browser identity coherent: Ensure user-agent, viewport, platform, and locale are aligned.

- Use stable execution environments: Run tests on consistent browser versions and OS images.

- Avoid excessive customization: Unnecessary overrides often create suspicious combinations.

- Prefer persistence over fresh profiles: Persistent contexts reduce “new user every run” patterns.

- Match regional signals: Align timezone and language settings with the expected test geography.
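
One way to keep these signals aligned is to derive every locale-related context option from a single region profile instead of setting each one separately. The region table and the `contextOptionsFor` helper below are hypothetical. `viewport`, `locale`, and `timezoneId` are real `browser.newContext()` options. Per the Playwright docs, `locale` affects `navigator.language` and the `Accept-Language` header. Leaving `userAgent` unset lets the browser report its own identity, which stays consistent with its other properties.

```javascript
// Hypothetical region profiles; extend with the geographies your tests target.
const REGION_PROFILES = {
  'us-east': { locale: 'en-US', timezoneId: 'America/New_York' },
  de: { locale: 'de-DE', timezoneId: 'Europe/Berlin' },
  jp: { locale: 'ja-JP', timezoneId: 'Asia/Tokyo' },
};

// Hypothetical helper: returns options for browser.newContext() that keep
// locale, timezone, and viewport coherent for one region.
function contextOptionsFor(region, viewport = { width: 1366, height: 768 }) {
  const profile = REGION_PROFILES[region];
  if (!profile) {
    throw new Error(`Unknown region profile: ${region}`);
  }
  // userAgent is intentionally not overridden (avoids mismatched combinations).
  return { ...profile, viewport: { ...viewport } };
}
```

For example, `const context = await browser.newContext(contextOptionsFor('de'));` produces a context whose language, timezone, and screen size all describe the same plausible user.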


Handling Cookies, Storage, and Sessions

Playwright automation often gets flagged when every run starts with a completely fresh browser state. Repeated logins, missing cookies, and discarded sessions create behavior patterns that differ from how real users interact with applications.

To reduce detection risk:

- Reuse cookies and storage for returning-user test flows

- Avoid logging in repeatedly within the same execution

- Use persistent browser contexts where applicable

- Reset sessions only for tests that explicitly require a first-time user state

Playwright’s `storageState` API makes this approach practical by letting you capture a browser session’s cookies and local storage to a file and restore them in a new context.

```javascript
// Inside an async test or script where `browser` and `context` already exist.
// After login or other key interactions, save cookies and storage to a file
await context.storageState({ path: 'user-session.json' });

// Later, restore that state in a new context (cookies + storage preserved)
const restoredContext = await browser.newContext({
  storageState: 'user-session.json',
  userAgent: 'Mozilla/5.0 (Windows NT 10.0; Win64; x64)…',
});
```

Managing session state intentionally makes Playwright automation appear consistent and credible, improving test stability while reducing unnecessary bot detection signals.
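
A common pattern built on the same API is to log in once, save the state, and reuse the file on later runs. The sketch below assumes a caller-supplied `login(page)` function and a state file path such as `user-session.json`. `contextWithSession` is a hypothetical helper name. `browser.newContext({ storageState })` and `context.storageState({ path })` are Playwright APIs. For a longer-lived profile, Playwright also offers `chromium.launchPersistentContext(userDataDir)`, which keeps an on-disk user data directory between runs.

```javascript
const fs = require('node:fs');

// Hypothetical helper: reuses saved session state if the file exists;
// otherwise logs in once with the caller-supplied `login` and saves the state.
async function contextWithSession(browser, statePath, login) {
  if (fs.existsSync(statePath)) {
    // Returning-user flow: cookies and local storage come from the saved file.
    return browser.newContext({ storageState: statePath });
  }

  // First run: start clean, log in, then persist the resulting state.
  const context = await browser.newContext();
  const page = await context.newPage();
  await login(page);
  await context.storageState({ path: statePath });
  await page.close();
  return context;
}
```

Tests that need a first-time user state can simply create a plain `browser.newContext()` instead of calling the helper.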


Network Behavior and Request Patterns

From a server’s perspective, automated traffic is often identified by how quickly and consistently requests are made. Playwright scripts tend to execute actions back-to-back, creating request timing and navigation patterns that differ from real user behavior.

Real users pause between actions to read content, make decisions, or wait for pages to load. When automation removes these pauses entirely, it can generate unnaturally dense or uniform request sequences that increase the likelihood of detection.
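
To see whether a suite produces the uniform timing described above, you can record when each page navigation starts and measure how much the gaps vary. The coefficient of variation (standard deviation divided by mean) is 0 for perfectly regular gaps and grows as timing becomes more irregular. This is a self-audit sketch built on Playwright's `page.on('request', …)` event and `request.isNavigationRequest()`. The helper names are hypothetical, and any threshold you apply to the result is your own assumption, not a value published by detection vendors.

```javascript
// Hypothetical helper: records a timestamp for every navigation request the page
// issues (sub-resources such as images and scripts are ignored).
function recordNavigationTimes(page, now = () => Date.now()) {
  const times = [];
  page.on('request', (request) => {
    if (request.isNavigationRequest()) times.push(now());
  });
  return times;
}

// Coefficient of variation of the gaps between consecutive timestamps.
// Returns NaN when there are fewer than two gaps to compare, and 0 when all gaps are zero.
function gapVariation(times) {
  const gaps = [];
  for (let i = 1; i < times.length; i++) gaps.push(times[i] - times[i - 1]);
  if (gaps.length < 2) return NaN;
  const mean = gaps.reduce((a, b) => a + b, 0) / gaps.length;
  if (mean === 0) return 0;
  const variance = gaps.reduce((acc, g) => acc + (g - mean) ** 2, 0) / gaps.length;
  return Math.sqrt(variance) / mean;
}
```

A value very close to 0 across a long run means the suite navigates on a near-fixed cadence, which is the pattern the surrounding text warns about.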

To make network behavior more realistic, introduce natural delays between major actions and allow pages and resources to load fully before proceeding.

For example, adding a small, randomized pause can help simulate human “think time”:

```javascript
// Human-like think time (1-4 seconds)
await page.waitForTimeout(1000 + Math.random() * 3000);
```

Additional best practices include:

- Avoiding rapid or continuous navigation without pauses

- Following realistic user navigation paths

- Letting all required assets load naturally

- Keeping timing between actions variable rather than perfectly consistent

Aligning request patterns with normal user behavior improves both test reliability and credibility, reducing unnecessary bot detection triggers in Playwright automation.
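
These practices can be combined into a helper that walks a user-like path (for example home, then a category link, then a product link) instead of jumping straight to a deep URL. After each step it waits for the page to finish loading and then pauses for a variable think time. The step format, the `walkPath` and `thinkTime` names, and the 1 to 4 second range (matching the earlier example) are illustrative assumptions. `page.goto`, `page.getByRole`, `page.waitForLoadState`, and `page.waitForTimeout` are Playwright APIs.

```javascript
// Think time in milliseconds, drawn uniformly from [minMs, maxMs).
function thinkTime(minMs = 1000, maxMs = 4000, rng = Math.random) {
  return minMs + rng() * (maxMs - minMs);
}

// Hypothetical helper: walks a sequence of steps the way a user would.
// Each step is { goto: url } or { clickLink: accessibleLinkName }.
async function walkPath(page, steps, rng = Math.random) {
  for (const step of steps) {
    if (step.goto) {
      await page.goto(step.goto);
    } else if (step.clickLink) {
      // For clicks that trigger navigation, page.waitForURL can be a stricter wait.
      await page.getByRole('link', { name: step.clickLink }).click();
    } else {
      throw new Error(`Unknown step: ${JSON.stringify(step)}`);
    }
    // Let the page finish loading its resources before "reading" it.
    await page.waitForLoadState('load');
    await page.waitForTimeout(thinkTime(1000, 4000, rng));
  }
}
```

A call such as `await walkPath(page, [{ goto: baseURL }, { clickLink: 'Products' }, { clickLink: 'Pricing' }])` reaches the same page a deep link would, but through the intermediate pages a real visitor passes.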

Dealing with CAPTCHA and Challenge Pages

CAPTCHA and challenge pages are designed to stop suspicious traffic. When they appear during legitimate Playwright automation, they usually point to configuration or behavior issues rather than functional problems in the application under test, and they should be reported as such.

For QA workflows, the correct approach is prevention through environment configuration, not attempting to solve or bypass challenges during execution. CAPTCHAs should be disabled, bypassed via test keys, or explicitly handled in staging and test environments with support from development and security teams.

When a challenge page does appear, Playwright tests should detect it and fail fast so the issue can be investigated. This avoids unpredictable test behavior and prevents false positives.

```javascript
// Detect a CAPTCHA or challenge page and stop execution
const challenge = page.locator('[data-sitekey], iframe[src*="captcha"]');
if ((await challenge.count()) > 0) {
  console.warn('CAPTCHA detected: verify test environment configuration');
  throw new Error('CAPTCHA encountered during automated test');
}
```

Best practices for reliable QA automation include:

- Disabling CAPTCHAs or using test keys in non-production environments

- Whitelisting automation IPs where appropriate

- Coordinating with development teams to enable CAPTCHA bypass flags for testing

- Avoiding CAPTCHA handling logic in production test runs

Treating CAPTCHA occurrences as configuration signals rather than automation problems keeps Playwright tests stable, compliant, and aligned with security best practices.
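
On the application side, the "test keys in non-production environments" practice usually comes down to choosing the CAPTCHA configuration from the deployment environment. The minimal sketch below assumes hypothetical `APP_ENV`, `CAPTCHA_DISABLED`, `CAPTCHA_SITE_KEY`, and `CAPTCHA_TEST_SITE_KEY` variables. Real test key values come from your CAPTCHA provider's documentation. The key design choice is that production, including an unset environment, always keeps the real protection.

```javascript
// Hypothetical helper: chooses CAPTCHA settings per environment.
// All environment variable names here are assumptions for this sketch.
function captchaConfig(env = process.env) {
  // Unset APP_ENV is treated as production, so the safe default wins.
  const appEnv = env.APP_ENV || 'production';

  if (appEnv === 'production') {
    // Never relax protection in production, regardless of other flags.
    return { enabled: true, siteKey: env.CAPTCHA_SITE_KEY };
  }

  if (env.CAPTCHA_DISABLED === 'true') {
    // Explicit opt-out for local development or isolated test environments.
    return { enabled: false, siteKey: null };
  }

  // Staging/QA: keep the widget but use the provider's test key.
  return { enabled: true, siteKey: env.CAPTCHA_TEST_SITE_KEY };
}
```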

Using Proxies and IP Reputation Wisely

IP reputation is a key signal in bot detection. Even well-configured Playwright tests can be flagged if traffic consistently comes from IPs that are low-trust, overused, or geographically inconsistent with browser settings.

For legitimate QA automation, proxies should be used intentionally, not as a blanket solution. Excessive IP rotation or switching IPs mid-session often raises more suspicion than running tests from a stable, reputable network.

Recommended practices include:

- Prefer stable, trusted IPs over frequent rotation

- Keep IP location aligned with browser locale and timezone

- Avoid changing IPs during an active or authenticated session

- Use proxies only when testing geo-specific behavior or network conditions

When proxies are required, Playwright allows explicit configuration at browser launch:

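```javascript
const { chromium } = require('playwright');

(async () => {
  // Route all traffic from this browser through one proxy for the whole session
  const browser = await chromium.launch({
    proxy: {
      server: 'http://proxy.example.com:3128',
    },
  });
  // ... create a context and run the test flow ...
  await browser.close();
})();
```

This approach ensures the proxy is applied consistently for the entire browser session, which better reflects real user behavior.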

Used correctly, proxies help simulate realistic access scenarios without undermining session credibility or triggering unnecessary bot detection signals.
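
To keep the IP location aligned with locale and timezone, you can derive both the proxy and the context settings from one profile. This is a minimal sketch. The profile table, the proxy host, the `geoOptions` helper, and the `PROXY_USER`/`PROXY_PASS` variable names are hypothetical. `server`, `username`, and `password` are fields of Playwright's `proxy` launch option, and `locale` and `timezoneId` are `browser.newContext()` options.

```javascript
// Hypothetical geo profile: one place defines where traffic exits and what
// the browser reports about language and timezone.
const GEO_PROFILES = {
  de: {
    proxyServer: 'http://de.proxy.example.com:3128',
    locale: 'de-DE',
    timezoneId: 'Europe/Berlin',
  },
};

// Hypothetical helper: returns matching options for chromium.launch()
// and browser.newContext() so IP, locale, and timezone agree.
function geoOptions(name, env = process.env) {
  const geo = GEO_PROFILES[name];
  if (!geo) throw new Error(`Unknown geo profile: ${name}`);

  const proxy = { server: geo.proxyServer };
  if (env.PROXY_USER) {
    // Credentials come from the environment and stay out of source control.
    proxy.username = env.PROXY_USER;
    proxy.password = env.PROXY_PASS;
  }

  return {
    launchOptions: { proxy },
    contextOptions: { locale: geo.locale, timezoneId: geo.timezoneId },
  };
}
```

Launching once with `launchOptions` and keeping a single context built from `contextOptions` for the whole authenticated flow also satisfies the "don't change IPs mid-session" guideline.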


Monitoring Detection Signals During Automation

Bot detection rarely appears without warning. In most cases, applications show early signals that automation is being flagged, often before a full block occurs.

Monitoring these signals helps you diagnose whether failures are caused by genuine application issues or by detection-driven interruptions.

Common detection indicators include:

- Unexpected redirects to verification or “access denied” pages

- Sudden increases in CAPTCHA or challenge prompts

- Login failures without clear UI or API errors

- Pages loading with missing content or partial rendering

- Repeated 403, 401, or 429 responses during normal flows

To make debugging easier, instrument your Playwright runs to capture what the application is returning at the network layer.

For example, logging suspicious response codes can quickly reveal whether detection controls are being triggered:

```javascript
// Log response codes that often indicate detection, auth problems, or rate limiting
page.on('response', (res) => {
  const status = res.status();
  if ([401, 403, 429].includes(status)) {
    console.warn(`Detection signal: ${status} on ${res.url()}`);
  }
});
```

When these signals appear, treat them as a prompt to review your setup: session persistence, interaction timing, browser consistency, and network behavior. Monitoring detection indicators early improves test reliability and prevents teams from wasting time debugging false failures that are not caused by the application itself.

Identifying detection signals early makes it clear when failures are environmental rather than functional. This is where running Playwright tests in consistent, real-browser environments becomes critical for reducing false positives and improving overall test reliability.
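
Building on the response listener above, you can collect the signals per test and summarize them. The report then shows at a glance whether a failure coincided with detection responses or redirects to challenge pages. The `createSignalRecorder` helper and the URL keywords it checks are assumptions for illustration. `page.on('response')`, `page.on('framenavigated')`, `response.status()`, `response.url()`, `frame.url()`, and `page.mainFrame()` are Playwright APIs.

```javascript
// Hypothetical URL fragments that often appear on challenge or block pages.
const CHALLENGE_URL_HINTS = ['captcha', 'challenge', 'access-denied', 'blocked'];
const DETECTION_STATUSES = new Set([401, 403, 429]);

// Hypothetical helper: collects detection-related signals from a Playwright page.
function createSignalRecorder(page) {
  const signals = [];

  page.on('response', (res) => {
    if (DETECTION_STATUSES.has(res.status())) {
      signals.push({ type: 'status', status: res.status(), url: res.url() });
    }
  });

  page.on('framenavigated', (frame) => {
    // Only top-level navigations count as redirects to a challenge page.
    if (frame !== page.mainFrame()) return;
    const url = frame.url().toLowerCase();
    if (CHALLENGE_URL_HINTS.some((hint) => url.includes(hint))) {
      signals.push({ type: 'redirect', url: frame.url() });
    }
  });

  return {
    signals,
    // Counts per status code plus redirects, e.g. { '403': 2, redirect: 1 }.
    summary() {
      const counts = {};
      for (const s of signals) {
        const key = s.type === 'status' ? String(s.status) : 'redirect';
        counts[key] = (counts[key] || 0) + 1;
      }
      return counts;
    },
  };
}
```

In `@playwright/test`, the summary could be attached to the report with `testInfo.attach('detection-signals', { body: JSON.stringify(recorder.summary()), contentType: 'application/json' })`.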

Enhance Playwright Automation with BrowserStack Automate

As bot detection becomes more sophisticated, many issues in Playwright automation stem not from test logic, but from inconsistent or synthetic execution environments. Local machines, custom Docker images, or lightly configured CI runners can introduce subtle differences in browser behavior that increase the likelihood of detection.

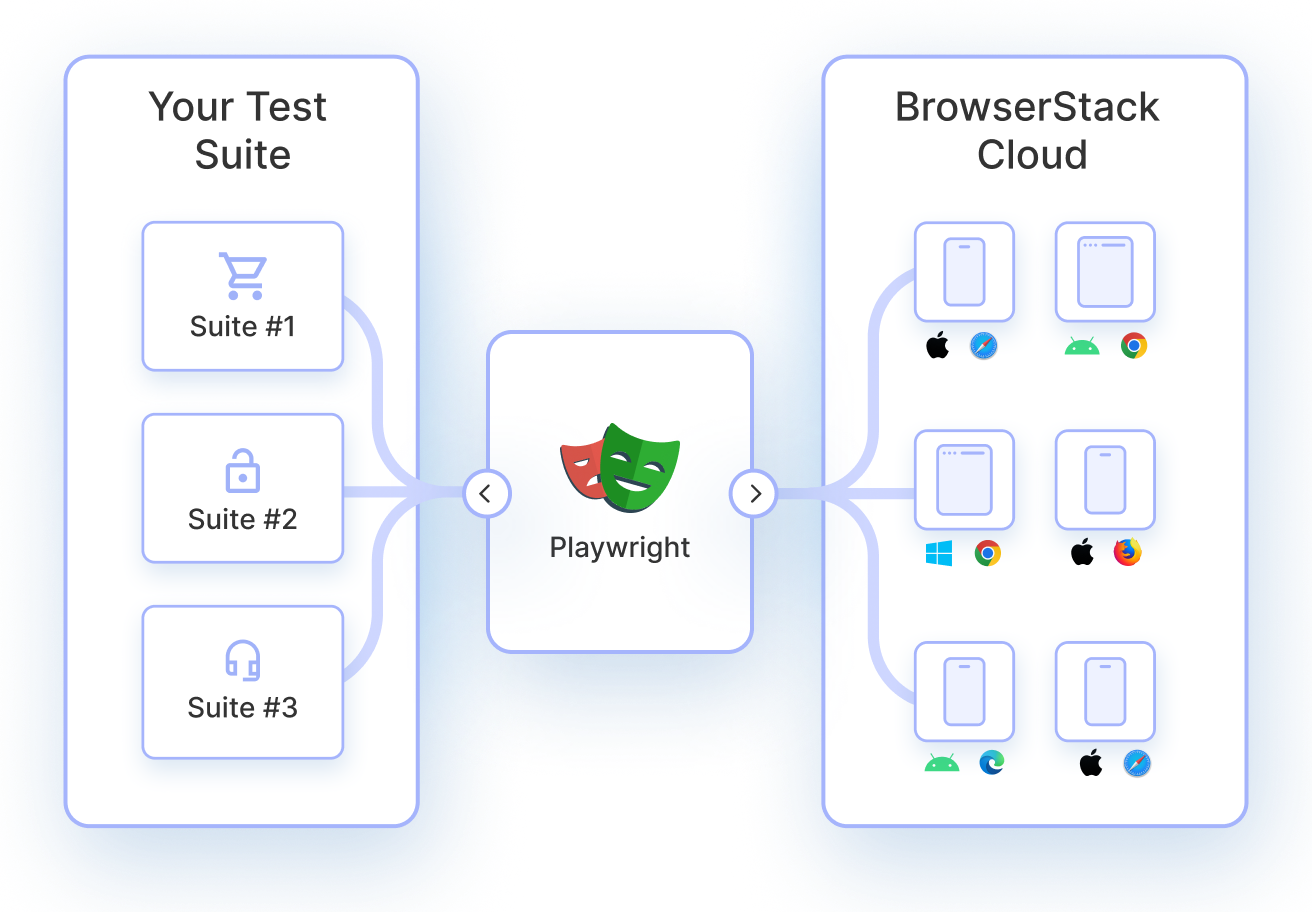

Running Playwright tests on BrowserStack Automate helps address this challenge by providing access to real desktop and mobile browsers running on real operating systems. This reduces discrepancies in browser fingerprints, rendering behavior, and network characteristics that are difficult to reproduce reliably in self-managed setups.

Key benefits include:

- Real browser execution: Run Playwright tests on real Chrome, Firefox, Edge, and Safari browsers instead of emulated or patched environments.

- Cross-OS and cross-browser coverage: Validate behavior across multiple operating systems and browser versions without managing local infrastructure.

- Seamless CI/CD integration: Integrate Playwright tests easily with popular CI tools, ensuring consistent environments across pipelines.

- Secure and stable test infrastructure: Avoid instability caused by shared runners, outdated browsers, or custom Docker images.

- Detailed debugging artifacts: Access logs, screenshots, videos, and network data to quickly identify whether failures are functional or environment-related.

- Scalable parallel execution: Run large Playwright suites in parallel without increasing detection risk from aggressive local execution.

By combining realistic browser environments with scalable infrastructure, BrowserStack Automate helps teams reduce false positives, improve test reliability, and ensure Playwright automation reflects real user behavior, especially in production-like testing scenarios.

Conclusion

Avoiding bot detection with Playwright is less about tricks or workarounds and more about realism, consistency, and responsible automation practices. Most detection issues arise when automated tests behave in ways real users never would-moving too fast, starting from a clean state every time, or running in environments that don’t reflect production conditions.

By understanding how detection systems work and adjusting Playwright’s browser setup, session handling, interaction timing, and network behavior, teams can significantly reduce false positives and improve test stability. Treating CAPTCHA and challenge pages as configuration signals rather than obstacles further reinforces ethical and compliant testing.

Ultimately, reliable Playwright automation depends on running tests in environments that closely match real user experiences. When execution conditions, behavior, and infrastructure align, automation becomes more trustworthy, allowing teams to focus on validating application quality instead of debugging detection-related failures.
