Many people assume that once you can send requests and parse HTML with Scrapy, you can scrape any website reliably. It feels simple, predictable, and sufficient for most tasks.

However, when I was scraping a job listings website, I noticed that some listings never appeared in my Scrapy spider's output. The page loaded normally in the browser, but part of the content was dynamic and appeared only after scrolling or applying filters.

That’s when I realized that standard Scrapy does not execute JavaScript, so it could not see dynamically loaded content, and my scripts were silently missing important data.

To solve this, I turned to Playwright. By combining it with Scrapy, I could finally interact with dynamic pages, wait for content to load, and scrape data that was previously invisible.

Overview

What is Scrapy Playwright?

Scrapy Playwright is an integration that allows Scrapy spiders to use Playwright to interact with websites using browser rendering engines. It enables Scrapy to scrape content loaded via JavaScript, dynamic updates, and client-side interactions while preserving Scrapy’s efficient crawling and scheduling model.

Key Benefits of Scrapy Playwright

- JavaScript rendering support: Enables Scrapy to extract data from pages where content is loaded through client-side JavaScript rather than static HTML.

- Page interaction capabilities: Allows spiders to click buttons, submit forms, scroll pages, and wait for specific elements or network activity before extraction.

- Controlled browser execution: Supports headed and headless browsers using Chromium, Firefox, and WebKit, while allowing screenshots and in-page JavaScript execution for debugging or advanced extraction.

- Efficient Scrapy integration: Operates as a download handler, so Playwright is only used when explicitly enabled, keeping the rest of the crawl fast and resource-efficient.

How to Use Scrapy Playwright?

To use Scrapy Playwright, install the integration and the required browser binaries.

```bash
pip install scrapy-playwright
playwright install
```

These commands install the scrapy-playwright package and Playwright's default browsers (Chromium, Firefox, and WebKit). Once the Playwright download handler is enabled in the project settings, Playwright can be turned on selectively for individual Scrapy requests.

In this tutorial, I’ll show you step by step how to use Scrapy Playwright to scrape modern websites reliably, including handling dynamic content, infinite scrolling, AJAX calls, and more.

What is Scrapy Playwright?

Scrapy is a Python framework for extracting data from websites. It is designed to crawl static pages, follow links across a site, and export structured data to formats such as CSV and JSON, or to databases.

Scrapy Playwright is an integration of Scrapy with Playwright. It allows Scrapy spiders to interact with modern web pages that use JavaScript, AJAX, or dynamic content loading.

Unlike standard Scrapy, which parses only the HTML returned by the server, Scrapy Playwright can wait for content to appear, execute scripts, and perform user-like interactions such as clicking buttons, filling forms, and scrolling pages.

Why Should You Use Scrapy Playwright?

Scrapy Playwright combines the best of both worlds. It extends Scrapy’s power to handle modern, dynamic web pages reliably.

Here’s why to use Scrapy Playwright:

- Dynamic Content Rendering: Scrapy Playwright can detect and wait for elements rendered after JavaScript execution to ensure your spider captures data that would otherwise be invisible.

- Precise Interaction Control: Beyond clicking buttons or filling forms, it allows conditional actions, such as triggering events only when certain page states or data conditions are met.

- Session and Context Management: Maintain multiple browser contexts with independent cookies and storage, enabling multi-user simulations or session-specific scraping without data contamination.

- Optimized Playwright Performance: Abort unnecessary requests, control resource loading, and reduce bandwidth usage for faster scraping of JavaScript-heavy sites.

- Advanced Playwright Debugging and Visibility: Capture detailed page logs, screenshots, and videos during scraping sessions to debug dynamic content or unexpected page behavior effectively.

- Infinite Scrolling and Lazy Loading Handling: Scroll programmatically or trigger events to load additional content while avoiding redundant page reloads, ensuring completeness without overloading servers.

- Custom JavaScript Execution: Inject scripts to manipulate or extract data in ways that traditional selectors cannot handle, offering flexibility for highly dynamic or interactive sites.

Prerequisites to Install Scrapy Playwright

Before you start scraping websites with Playwright, you need to prepare your environment. Ensuring the right setup will save time and prevent common errors.

1. Python Installation

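Scrapy Playwright requires a recent Python 3 release. Current versions of Scrapy and Playwright no longer support Python 3.7, so check the scrapy-playwright documentation for the exact minimum. If Python is not installed, download it from the official website. Verify the installation with: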

```bash
python --version
```

2. Install Scrapy

Scrapy provides the core crawling and parsing capabilities. Install it using pip:

```bash
pip install scrapy
```

Confirm the installation by running:

```bash
scrapy version
```

3. Install Playwright and Scrapy Playwright

Playwright powers browser automation for dynamic content. Install both Scrapy Playwright and the required browser binaries:

```bash
pip install scrapy-playwright
playwright install
```

The playwright install command downloads browser engines (Chromium, Firefox, WebKit) so your spiders can simulate real user interactions.
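Installing the packages is not enough on its own: according to the scrapy-playwright documentation, requests are routed through Playwright only after the project replaces Scrapy's HTTP and HTTPS download handlers and switches Twisted to its asyncio-based reactor. A minimal settings.py sketch, assuming a standard Scrapy project layout:

```python
# settings.py: send HTTP(S) downloads through scrapy-playwright's handler
DOWNLOAD_HANDLERS = {
    "http": "scrapy_playwright.handler.ScrapyPlaywrightDownloadHandler",
    "https": "scrapy_playwright.handler.ScrapyPlaywrightDownloadHandler",
}

# Playwright's async API requires Twisted to run on top of asyncio
TWISTED_REACTOR = "twisted.internet.asyncioreactor.AsyncioSelectorReactor"
```

Requests without the "playwright" meta key still pass through this handler but are downloaded with Scrapy's regular HTTP client, which is what keeps non-browser requests cheap.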


4. Optional: Virtual Environment

It is recommended to use a Python virtual environment to manage dependencies and avoid conflicts:

```bash
python -m venv venv
source venv/bin/activate   # Linux/macOS
venv\Scripts\activate      # Windows
```

This ensures a clean environment for your Scrapy Playwright project.

Core Scraping Operations with Scrapy Playwright

Once your environment is ready, it’s time to start scraping. Scrapy Playwright combines Scrapy’s crawling power with Playwright’s browser automation, allowing you to interact with dynamic web pages as if you were a real user.

Below are the core use cases of Scrapy Playwright, each with an example.

1. Opening a URL

In a standard Scrapy workflow, opening a URL means sending an HTTP request and immediately parsing the returned response. This works well when the server delivers complete HTML that already contains the data of interest.

However, many modern websites do not return fully populated HTML responses. Instead, the initial response often contains only a basic page structure, while the actual content is loaded later through JavaScript. Because Scrapy does not execute client-side code, it parses the page too early and never sees the rendered data.

Scrapy Playwright changes this behavior by opening the URL inside a real browser. This allows JavaScript to execute and network requests to complete before Scrapy begins parsing.

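```python
import scrapy


class ExampleSpider(scrapy.Spider):
    name = "example"

    def start_requests(self):
        # The "playwright" meta key routes this request through a real browser
        yield scrapy.Request(
            url="https://example.com",
            meta={"playwright": True},
        )

    def parse(self, response):
        # response.text holds the DOM as rendered by the browser
        yield {"title": response.css("title::text").get()}
```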

In this example, the "playwright" meta key flags the request for browser rendering. By default, the response is passed to the spider once the page's load event has fired, so it contains the DOM as rendered at that point rather than the raw server HTML. When data becomes available without changing selectors, it usually indicates that rendering, not crawling logic, was the missing step.

2. Extracting Text from Elements

Extracting text is usually the simplest part of scraping. With standard Scrapy, once a response is received, the HTML is assumed to be complete and ready to parse. However, this assumption breaks on dynamic pages.

On many modern websites, the initial HTML loads first, while key elements appear only after JavaScript finishes running or after additional network requests complete. Because of this, the DOM can exist in an incomplete state when extraction starts.

Scrapy Playwright solves this by rendering the page in a browser before passing the response to Scrapy. This means the DOM you work with already includes dynamically loaded elements, not just the initial HTML shell.

The following code extracts text from elements after the page has been rendered:

```python
async def parse(self, response):
    # Selectors run against the DOM as rendered by the browser
    title = response.css("h1::text").get()
    salary = response.css(".salary::text").get()
    yield {"title": title, "salary": salary}
```

In this example, the parse method runs only after Playwright finishes loading the page. The selectors query the final DOM state, which includes elements created by JavaScript. The extracted values are then returned as structured data.

However, extraction can still fail if it starts before the page reaches a stable state. This usually shows up as missing values or partially populated fields, even though the data is visible in the browser. When this happens, it indicates that additional waits or load-state checks are required before extracting data.
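One way to catch these partially populated items is to check required fields before yielding, so an incomplete page shows up in the logs instead of in the dataset. A minimal sketch; REQUIRED_FIELDS and missing_fields are hypothetical names, and the field list mirrors the keys yielded by the parse example:

```python
# Hypothetical field list, matching the keys yielded by the parse example
REQUIRED_FIELDS = ("title", "salary")


def missing_fields(item, required=REQUIRED_FIELDS):
    """Return the required keys whose values are absent or only whitespace."""
    return [
        field
        for field in required
        if not (item.get(field) or "").strip()
    ]
```

Inside parse, a non-empty result (for example, logged with self.logger.warning) is a signal to add a wait or retry the request rather than to loosen the selectors.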

3. Automating Form Interactions

Many websites expose data only after user interaction. Search boxes, filters, and pagination controls often trigger JavaScript events rather than URL-based navigation. With standard Scrapy, these interactions cannot be simulated because no browser context exists.

Because of this, attempting to modify query parameters or follow links often fails to reproduce the behavior seen in the browser.

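Scrapy Playwright enables spiders to interact with page elements directly inside the browser, allowing form-driven workflows to be automated reliably. For this, the request must set "playwright_include_page": True in its meta, which exposes the live Playwright page to the callback as response.meta["playwright_page"].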

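```python
async def parse(self, response):
    # Present only when the request meta set "playwright_include_page": True
    page = response.meta["playwright_page"]
    try:
        await page.fill("input[name='keyword']", "engineer")
        await page.click("button[type='submit']")
        await page.wait_for_load_state("networkidle")
        # response predates the search, so read the updated DOM from the page
        html = await page.content()
    finally:
        # Pages exposed via playwright_include_page are not closed automatically
        await page.close()
    # Parse html from here, e.g. with scrapy.Selector(text=html)
```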

Here, the spider fills an input field, triggers a submit action, and waits until network activity settles. This sequence mirrors how a real user interacts with the page. When results do not appear after submission, the issue is usually related to missing waits or incomplete navigation rather than incorrect selectors.
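Playwright's own documentation discourages "networkidle" as a readiness check, because polling or analytics requests can keep the network busy indefinitely. A sketch that waits for a concrete result element instead; RESULTS_SELECTOR (".job-card") and run_search are assumptions, and page is any Playwright async Page, such as the one in response.meta["playwright_page"]:

```python
# Hypothetical selector for the result cards rendered after a search
RESULTS_SELECTOR = ".job-card"


async def run_search(page, keyword, timeout_ms=10_000):
    """Submit the search form and return the page HTML once results render."""
    await page.fill("input[name='keyword']", keyword)
    await page.click("button[type='submit']")
    # Wait for a result element rather than for network silence;
    # Playwright raises a TimeoutError if none appears in time
    await page.wait_for_selector(RESULTS_SELECTOR, timeout=timeout_ms)
    return await page.content()
```

A timeout here separates "the search returned nothing" from "the page never finished loading", which networkidle alone cannot distinguish.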


4. Capturing Screenshots

When scraping dynamic websites, missing data is not always caused by faulty logic. Pages may fail silently due to timing issues, blocked resources, or incomplete interactions. Logs alone often do not reveal what the browser actually rendered.

Scrapy Playwright allows screenshots to be captured at any point during execution, providing visual confirmation of the page state.

```python
async def parse(self, response):
    page = response.meta["playwright_page"]  # requires "playwright_include_page": True
    await page.screenshot(path="page.png")
    await page.close()
```

This captures the current browser view exactly as Playwright sees it. When extracted data does not match expectations, screenshots help confirm whether the page reached the intended state before parsing began.
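When a crawl visits many URLs, writing every capture to the same page.png overwrites earlier evidence. A small sketch that derives one file per URL and captures the whole scrollable page; screenshot_path and capture are hypothetical helpers, while page.screenshot(path=..., full_page=True) is Playwright's own API:

```python
import re
from pathlib import Path


def screenshot_path(url, directory="screenshots"):
    """Turn a URL into a filesystem-safe PNG path, one file per page."""
    slug = re.sub(r"[^A-Za-z0-9]+", "-", url).strip("-")[:100]
    return Path(directory) / f"{slug}.png"


async def capture(page, url, directory="screenshots"):
    """Save a full-page screenshot of the page's current state."""
    path = screenshot_path(url, directory)
    path.parent.mkdir(parents=True, exist_ok=True)
    # full_page=True captures the whole scrollable page, not only the viewport
    await page.screenshot(path=str(path), full_page=True)
    return path
```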

Scraping Dynamic and JavaScript-heavy Websites

Modern web applications rely heavily on JavaScript to fetch data, update layouts, and render content after the initial page load. Scraping these pages requires more than downloading HTML because critical data often appears only after scripts execute.

5. Scraping JavaScript-rendered Pages

In a standard Scrapy crawl, the response represents only what the server returns. This approach works when pages are server-rendered, but it fails when the visible content is injected by JavaScript after the page loads.

However, many modern websites return an almost empty HTML document and rely on client-side frameworks to fetch data and render components. When Scrapy parses such responses, selectors return empty or incomplete results because the DOM never reaches its final state.

Scrapy Playwright resolves this by loading the page in a real browser environment before Scrapy processes the response. JavaScript executes fully, network requests complete, and the DOM reflects what a user actually sees.

```python
yield scrapy.Request(
    url="https://example.com",
    meta={"playwright": True},
)
```

This configuration ensures that Scrapy receives a rendered response instead of a raw HTML shell. When previously missing elements become accessible without changing selectors, it indicates that JavaScript rendering was the blocking factor.

6. Waiting for Dynamic Elements

Even after JavaScript execution begins, individual elements may still load asynchronously based on user actions, API responses, or delayed scripts. Extracting data as soon as the page opens often results in missing nodes because the target elements are not yet attached to the DOM.

Scrapy Playwright allows spiders to wait explicitly for specific elements before parsing starts, which aligns extraction with the actual availability of data.

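```python
from scrapy_playwright.page import PageMethod

yield scrapy.Request(
    url="https://example.com",
    meta={
        "playwright": True,
        "playwright_page_methods": [
            # Runs after navigation: wait until a .job-card element is visible
            PageMethod("wait_for_selector", ".job-card"),
        ],
    },
)
```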

This approach ensures that parsing begins only after the required elements exist. When selectors intermittently return None despite correct logic, it usually indicates that the page structure was accessed before dynamic components finished rendering.
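wait_for_selector resolves as soon as one matching element is visible, which can still be too early when a list renders in batches. If a minimum number of items is expected, Playwright's page.wait_for_function can poll a JavaScript predicate instead. A sketch, where MIN_CARDS_JS and wait_for_min_cards are hypothetical names and ".job-card" comes from the example above:

```python
# JavaScript predicate, re-evaluated in the page until it returns true
MIN_CARDS_JS = "minCount => document.querySelectorAll('.job-card').length >= minCount"


async def wait_for_min_cards(page, min_count, timeout_ms=15_000):
    """Block until at least min_count '.job-card' elements are in the DOM."""
    await page.wait_for_function(MIN_CARDS_JS, arg=min_count, timeout=timeout_ms)
```

Inside a request's meta, the same wait can be expressed as PageMethod("wait_for_function", MIN_CARDS_JS, arg=10), since PageMethod forwards its arguments to the page method.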

7. Waiting for Page Load States

Some pages appear visually complete while still performing background work such as fetching data, hydrating components, or updating layouts. Relying solely on element presence can be misleading because the page may still be transitioning between states.

Playwright's wait_for_load_state method provides finer control over when Scrapy should proceed.

```python
PageMethod("wait_for_load_state", "networkidle")
```

Waiting for a stable load state reduces the risk of scraping transient DOM structures that change moments later. When extracted values vary across runs without changes in selectors, delayed network activity is often the underlying cause.

8. Waiting for a Specific Amount of Time

In certain cases, pages trigger delayed animations, polling-based updates, or timed content injections that do not expose reliable selectors or load signals. While fixed delays are not ideal, a controlled delay can act as a fallback when no deterministic condition exists.

Scrapy Playwright allows timed waits to accommodate such behavior.

```python
PageMethod("wait_for_timeout", 3000)
```

This technique should be used sparingly because it introduces fixed latency and reduces efficiency. When no selector or load state consistently signals readiness, timed waits can stabilize extraction but should be combined with retries or validation logic to avoid masking deeper issues.
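One way to combine timed waits with validation is to wait in fixed steps and stop once the number of items stops changing, with an upper bound on the total delay. A sketch; wait_until_stable and its defaults are hypothetical, built on Playwright's page.locator(selector).count() and page.wait_for_timeout:

```python
async def wait_until_stable(page, selector, interval_ms=1000, max_checks=10):
    """Wait in fixed steps until the number of matches stops changing.

    Returns the last observed count, even if it never stabilised.
    """
    previous = -1
    for _ in range(max_checks):
        count = await page.locator(selector).count()
        if count == previous:
            return count  # unchanged since the last check: treat as settled
        previous = count
        await page.wait_for_timeout(interval_ms)
    return previous
```

Comparing the returned count against an expected minimum gives the validation step; a low value is a reason to retry rather than to accept the page.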

9. Capturing AJAX Data

Many modern websites load critical data through background API calls rather than embedding it directly in the HTML. In these cases, scraping the rendered DOM may work, but it often adds unnecessary complexity and increases the risk of parsing fragile markup.

Scrapy Playwright exposes Playwright's network interception, so a spider can target the browser's background API calls and work with their JSON responses instead of the rendered markup.

```python
# Intercept requests to the jobs API; route.continue_() forwards each one unchanged
PageMethod("route", "**/api/jobs*", lambda route, request: route.continue_())
```

By monitoring specific endpoints, it becomes possible to parse clean JSON responses instead of relying on UI-level selectors. When DOM extraction feels brittle or breaks after minor UI changes, the underlying issue is often that the data originates from an API rather than the page itself.
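Routing intercepts requests but does not by itself keep the response bodies. To collect the JSON, a handler can be attached to the page's "response" event through scrapy-playwright's playwright_page_event_handlers meta key, which accepts a callable or the name of a spider method. A sketch, assuming the jobs API lives under a hypothetical /api/jobs path and returns JSON:

```python
# Hypothetical endpoint path, matching the route pattern above
API_PATH = "/api/jobs"

captured_payloads = []


async def handle_response(response):
    """Store JSON bodies from successful responses to the jobs API."""
    if API_PATH in response.url and response.ok:
        captured_payloads.append(await response.json())


# Request meta: scrapy-playwright attaches the handler before navigation
API_CAPTURE_META = {
    "playwright": True,
    "playwright_page_event_handlers": {"response": handle_response},
}
```

Event handlers can still fire after the rendered response reaches the callback, so pair this with a wait (for example on the results selector) before reading the captured data. In a real spider, the list would typically live on the spider instance.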

10. Running Custom JavaScript Code

Some scraping scenarios require logic that cannot be expressed through selectors alone. Pages may compute values client-side, transform text dynamically, or expose data only through JavaScript variables.

Scrapy Playwright allows execution of custom JavaScript within the page context, which enables direct access to in-memory data and browser APIs.

```python
PageMethod(
    "evaluate",
    "document.querySelectorAll('.job-card').length",
)
```

Executing JavaScript inside the browser removes the guesswork involved in reverse-engineering rendered output. When extracted values appear inconsistent with what is visible in the browser, it often indicates that the final data exists only within the JavaScript runtime.
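page.evaluate can also return structured data: any JSON-serializable value the script produces is passed back to Python. A sketch that pulls every job card in one call and then cleans the result; the h2 and .salary selectors inside each card are assumptions, and with scrapy-playwright the value returned by a PageMethod is stored on that PageMethod object's result attribute:

```python
# Hypothetical inner selectors; '.job-card' comes from the example above
EXTRACT_CARDS_JS = """
() => Array.from(document.querySelectorAll('.job-card'), (card) => ({
  title: card.querySelector('h2')?.textContent ?? null,
  salary: card.querySelector('.salary')?.textContent ?? null,
}))
"""


def clean_cards(raw_cards):
    """Trim whitespace, drop cards without a title, and map empty salaries to None."""
    items = []
    for card in raw_cards:
        title = (card.get("title") or "").strip()
        if not title:
            continue
        salary = (card.get("salary") or "").strip() or None
        items.append({"title": title, "salary": salary})
    return items
```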

11. Scrolling Infinite Pages

Infinite scrolling replaces traditional pagination with dynamic content loading triggered by scroll events. Scraping only the initial viewport results in incomplete datasets because additional items load progressively as the user scrolls.

Scrapy Playwright can simulate scrolling behavior to trigger content loading repeatedly.

```python
PageMethod(
    "evaluate",
    "window.scrollBy(0, document.body.scrollHeight)",
)
```

Scrolling must be paired with waits to allow new content to load before continuing. When spiders return a fixed number of records regardless of page size, it typically means that scroll-driven requests were never triggered.
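A sketch of a bounded scroll loop that pairs each scroll with a wait, assuming page is the live Playwright page from response.meta["playwright_page"] (which requires "playwright_include_page": True); scroll_to_end and its defaults are hypothetical. It stops when the document height stops growing or after max_rounds:

```python
async def scroll_to_end(page, pause_ms=1500, max_rounds=20):
    """Scroll until the page height stops growing or max_rounds is reached."""
    last_height = await page.evaluate("document.body.scrollHeight")
    for _ in range(max_rounds):
        await page.evaluate("window.scrollTo(0, document.body.scrollHeight)")
        # Give the site time to fetch and render the next batch
        await page.wait_for_timeout(pause_ms)
        new_height = await page.evaluate("document.body.scrollHeight")
        if new_height == last_height:
            break  # no new content appeared after the last scroll
        last_height = new_height
    return last_height
```

After it returns, await page.content() gives the HTML with every loaded item, which can be parsed with scrapy.Selector(text=html) before closing the page. The max_rounds cap keeps a feed that never ends from stalling the crawl.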


Optimizing Scrapy Playwright for Large-scale Scraping

As scraping workloads grow, using Playwright inside Scrapy requires deliberate optimization. Browser contexts, pages, and network requests can easily become bottlenecks if they are not managed carefully.

Let’s understand how to scale Scrapy Playwright reliably by controlling concurrency, reducing unnecessary browser work, and maintaining stability during long-running or high-volume crawls.

12. Scraping Multiple Pages

Scraping a single page is rarely the end goal. Most real-world targets involve navigating across category pages, pagination links, or dynamically generated URLs. When Playwright is introduced, it becomes important to control when browser automation is actually required.

Scrapy Playwright allows mixing browser-driven requests with standard Scrapy requests so that only pages requiring JavaScript incur the overhead of a browser.

```python
yield scrapy.Request(
    url=next_page,
    callback=self.parse,
    meta={"playwright": True},
)
```

Overusing Playwright for every request can slow crawls significantly. When performance drops unexpectedly, the usual cause is treating static and dynamic pages the same instead of selectively enabling browser rendering.
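A simple way to enable rendering selectively is to decide per URL whether a browser is needed. A sketch, assuming the site's JavaScript-heavy sections can be recognized by path; DYNAMIC_PATH_PREFIXES, needs_browser, and request_meta are hypothetical names:

```python
from urllib.parse import urlparse

# Hypothetical: path prefixes known to render their content with JavaScript
DYNAMIC_PATH_PREFIXES = ("/jobs", "/search")


def needs_browser(url):
    """Return True if the URL falls under a section that needs browser rendering."""
    return urlparse(url).path.startswith(DYNAMIC_PATH_PREFIXES)


def request_meta(url):
    """Enable Playwright only for dynamic sections; plain HTTP for everything else."""
    return {"playwright": True} if needs_browser(url) else {}
```

The pagination request then becomes scrapy.Request(next_page, callback=self.parse, meta=request_meta(next_page)), so static pages skip the browser entirely.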

13. Managing Playwright Sessions and Concurrency

At scale, each Playwright page consumes memory and CPU. Without session control, spiders can exhaust system resources or stall under load.

Scrapy Playwright supports named browser contexts: requests that share a context name reuse the same cookies, authentication state, and storage, while contexts with different names stay isolated from each other.

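```python
meta={
    "playwright": True,
    # Requests that share this name reuse one context (cookies, storage, login state)
    "playwright_context": "session_1",
}
```

Reusing a named context avoids creating a fresh context for every request and keeps long-running crawls stable. When spiders slow down over time or crash after several hundred requests, unmanaged browser contexts, or pages that are never closed, are a common cause.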
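Context reuse works best alongside explicit limits. The scrapy-playwright settings PLAYWRIGHT_MAX_CONTEXTS and PLAYWRIGHT_MAX_PAGES_PER_CONTEXT cap how many contexts and pages exist at once; the values below are illustrative, and session_meta is a hypothetical helper that spreads requests over a fixed pool of named contexts such as session_1:

```python
# settings.py: cap concurrent browser contexts and pages (illustrative values)
PLAYWRIGHT_MAX_CONTEXTS = 4
PLAYWRIGHT_MAX_PAGES_PER_CONTEXT = 4

# Hypothetical pool size, kept equal to the context cap
CONTEXT_POOL_SIZE = PLAYWRIGHT_MAX_CONTEXTS


def session_meta(request_index, pool_size=CONTEXT_POOL_SIZE):
    """Map requests onto a fixed pool of named contexts: session_0 ... session_N-1."""
    return {
        "playwright": True,
        "playwright_context": f"session_{request_index % pool_size}",
    }
```

A fixed pool bounds memory use. Requests that must share a login should use the same index rather than rotating.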

14. Running Playwright in Headless Mode

Headless execution is essential for production scraping where visual output is unnecessary. Running browsers with a UI increases resource consumption and limits concurrency.

Playwright runs headlessly by default, but explicit configuration ensures consistent behavior across environments.

```python
# settings.py
PLAYWRIGHT_LAUNCH_OPTIONS = {
    "headless": True,
}
```

If behavior differs between local and CI environments, the discrepancy often comes from inconsistent browser launch settings rather than scraping logic.
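One way to keep launch settings identical across machines is to define them once in settings.py and allow only an explicit opt-in for headed debugging. A sketch; the SCRAPY_HEADED environment variable is a hypothetical name, while PLAYWRIGHT_BROWSER_TYPE and PLAYWRIGHT_LAUNCH_OPTIONS are scrapy-playwright settings (the latter is passed to Playwright's browser launch call):

```python
import os

# settings.py: a single source of truth for how the browser is launched
PLAYWRIGHT_BROWSER_TYPE = "chromium"

PLAYWRIGHT_LAUNCH_OPTIONS = {
    # Headless unless SCRAPY_HEADED=1 (hypothetical variable) is set locally
    "headless": os.environ.get("SCRAPY_HEADED") != "1",
    # Maximum time in milliseconds to wait for the browser to start
    "timeout": 20_000,
}
```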

15. Aborting Unwanted Requests

Dynamic pages frequently load analytics scripts, ads, videos, and tracking pixels that are irrelevant to scraping. Allowing these requests to execute wastes bandwidth and slows page rendering.

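Scrapy Playwright can block unnecessary resource types before they are downloaded. Page methods run after the initial navigation, so a route registered this way affects only requests made afterward, such as content loaded by scrolling or clicking.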

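```python
from scrapy_playwright.page import PageMethod

PageMethod(
    "route",
    "**/*",
    # Abort images, fonts, and media; let every other request continue
    lambda route, request: route.abort()
    if request.resource_type in ["image", "font", "media"]
    else route.continue_(),
)
```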

Reducing network noise improves page stability and crawl speed. When pages take longer to load despite minimal scraping logic, excessive third-party requests are often the hidden cause.
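To filter requests made during the first page load as well, scrapy-playwright provides the PLAYWRIGHT_ABORT_REQUEST setting. It takes a predicate that receives each Playwright Request and returns True to abort it. A sketch reusing the resource types from the route example above; should_abort_request is a hypothetical name:

```python
# Resource types the scrape does not need (same list as the route example)
BLOCKED_RESOURCE_TYPES = {"image", "font", "media"}


def should_abort_request(request):
    """Return True for browser requests that can be skipped."""
    return request.resource_type in BLOCKED_RESOURCE_TYPES


# settings.py (or the spider's custom_settings)
PLAYWRIGHT_ABORT_REQUEST = should_abort_request
```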

16. Restarting Disconnected Browsers

Long-running crawls can encounter browser crashes, memory leaks, or lost connections. Without recovery logic, a single browser failure can halt the entire scrape.

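Recent versions of Scrapy Playwright restart a browser that disconnects, for example after a crash, so spiders can continue without manual intervention. This behavior is controlled by the PLAYWRIGHT_RESTART_DISCONNECTED_BROWSER setting, which is enabled by default.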

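```python
PLAYWRIGHT_BROWSER_TYPE = "chromium"
PLAYWRIGHT_RESTART_DISCONNECTED_BROWSER = True  # relaunch the browser after a disconnect
```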

If spiders stop responding after extended runtimes, the issue is usually browser lifecycle management rather than site blocking or parsing errors.

How to Use Proxies with Scrapy Playwright (Without Getting Blocked)

When scraping at scale, repeated requests from a single IP often lead to throttling or blocking. Proxies help distribute traffic, but using them with browser automation requires more than setting an HTTP proxy.

With Scrapy Playwright, proxies are best applied at the browser context level so that page loads, scripts, assets, and AJAX calls all originate from the same IP. This matters for keeping sessions consistent on dynamic websites.

Scrapy Playwright handles this by passing proxy settings directly to the Playwright browser context.

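```python
yield scrapy.Request(
    url="https://example.com/jobs",
    meta={
        "playwright": True,
        # Context kwargs apply only when this named context is first created
        "playwright_context": "proxy_session_1",
        "playwright_context_kwargs": {
            "proxy": {
                "server": "http://proxy-server:8000",
                "username": "user",
                "password": "pass",
            },
        },
    },
    callback=self.parse,
)
```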

This configuration ensures that the entire browser session uses the proxy, including scripts, assets, and background network calls.

Proxy problems rarely fail loudly. Instead, they usually appear as subtle data issues:

- Pages render, but key elements are missing.

- AJAX responses fail silently.

- CAPTCHAs appear intermittently despite low request volume.

These symptoms typically indicate unstable proxies or inconsistent IP usage during rendering.

To reduce blocking risk and improve stability:

- Rotate proxies per browser context rather than per request.

- Avoid highly shared or low-quality proxies for JavaScript-heavy sites.

- Validate proxy behavior under real browser conditions before scaling.
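Rotating per context rather than per request can be done by binding each proxy to a named context, so every page, script, and AJAX call in a session shares one IP. A sketch; the PROXIES entries are hypothetical placeholders and proxied_session_meta is a hypothetical helper:

```python
# Hypothetical proxy pool; replace with real endpoints and credentials
PROXIES = [
    {"server": "http://proxy-a:8000", "username": "user", "password": "pass"},
    {"server": "http://proxy-b:8000", "username": "user", "password": "pass"},
]


def proxied_session_meta(session_id, proxies=PROXIES):
    """Bind one proxy to one named browser context for the whole session."""
    proxy = proxies[session_id % len(proxies)]
    return {
        "playwright": True,
        # Same session_id -> same context -> same proxy, cookies, and storage
        "playwright_context": f"proxy_session_{session_id}",
        # Only used when this context is created for the first time
        "playwright_context_kwargs": {"proxy": proxy},
    }
```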

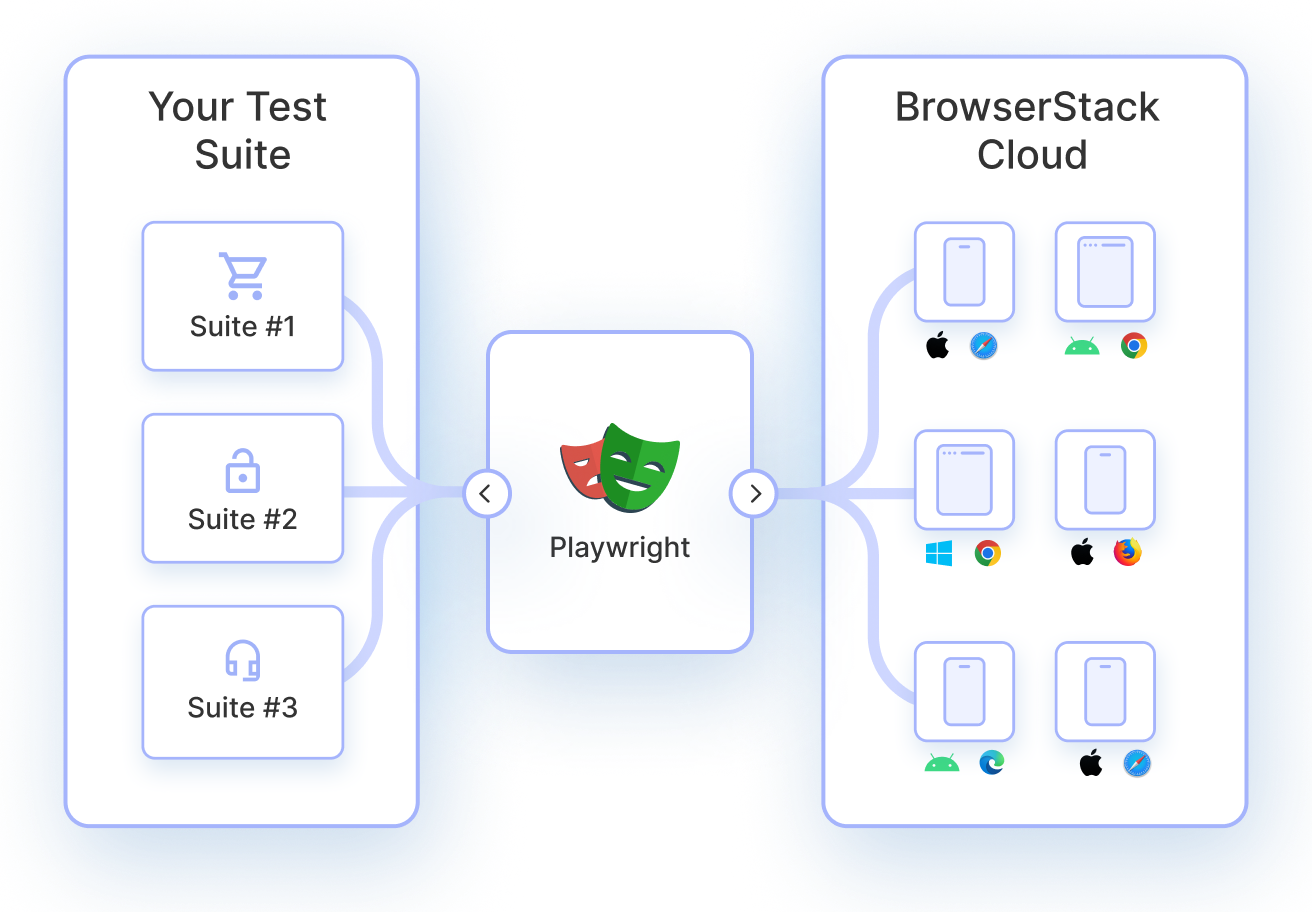

How Can BrowserStack Help Validate Scrapy Playwright on Real Devices?

Scrapy Playwright scripts are usually developed and tested in controlled local environments. While this works for initial development, it often hides issues that appear only when scraping runs at scale or in different environments.

Common limitations when validating Scrapy Playwright locally include:

- Headless browsers can behave differently from headed (full) browsers.

- Dynamic content loads inconsistently across browser engines.

- Layout or interaction differences on mobile viewports.

- Limited visibility into failures that occur intermittently.

- Resource constraints when running multiple browser sessions in parallel.

These limitations make it difficult to know whether failures are caused by scraping logic, browser behavior, or the execution environment itself.

BrowserStack addresses this by providing access to real browsers and real devices in cloud-hosted environments. Instead of relying on local machines or simulated setups, Scrapy Playwright workflows can be validated against actual browser engines, operating systems, and device configurations.

Key ways BrowserStack fits into a Scrapy Playwright workflow include:

- Cross-browser validation: Confirm that Playwright-driven interactions behave consistently across Chromium, Firefox, and WebKit.

- Real Device Cloud: Validate scraping behavior on actual mobile and desktop browsers where layouts and JavaScript execution differ.

- Test Analytics: Review past test sessions to track coverage, failures, and execution patterns to identify flaky behavior and improve scraping reliability.

- Multi-Device Testing: Run multiple Playwright sessions concurrently without managing local browser infrastructure.

Conclusion

Scrapy Playwright makes it possible to scrape modern websites that rely on dynamic rendering, client-side JavaScript, and asynchronous data loading. By combining Scrapy’s efficient crawling and request handling with Playwright’s browser automation, it enables reliable interaction with pages that would otherwise return incomplete or inconsistent data.

As scraping workflows scale, validating behavior across different browsers and environments becomes critical. BrowserStack complements Scrapy Playwright by allowing scripts to be tested on real browsers and devices, helping uncover environment-specific issues early and improving the reliability of large-scale scraping runs.